Font Clustering and Classification in Document Images *

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Supreme Court of the State of New York Appellate Division: Second Judicial Department

A GLOSSARY OF TERMS FOR FORMATTING COMPUTER-GENERATED BRIEFS, WITH EXAMPLES

The rules concerning the formatting of briefs are contained in CPLR 5529 and in § 1250.8 of the Practice Rules of the Appellate Division. Those rules cover technical matters and therefore use certain technical terms which may be unfamiliar to attorneys and litigants. The following glossary is offered as an aid to the understanding of the rules.

Typeface: A typeface is a complete set of characters of a particular and consistent design for the composition of text, and is also called a font. Typefaces often come in sets which usually include a bold and an italic version in addition to the basic design.

Proportionally Spaced Typeface: Proportionally spaced type is designed so that the amount of horizontal space each letter occupies on a line of text is proportional to the design of each letter, the letter i, for example, being narrower than the letter w. More text of the same type size fits on a horizontal line of proportionally spaced type than a horizontal line of the same length of monospaced type. This sentence is set in Times New Roman, which is a proportionally spaced typeface.

Monospaced Typeface: In a monospaced typeface, each letter occupies the same amount of space on a horizontal line of text. This sentence is set in Courier, which is a monospaced typeface.

Point Size: A point is a unit of measurement used by printers equal to approximately 1/72 of an inch.
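The point-size definition in the glossary can be illustrated with a small conversion sketch. It assumes the desktop-publishing convention of exactly 72 points per inch (the glossary itself says only "approximately 1/72 of an inch", and traditional printer's points differ slightly). The function and constant names are hypothetical and chosen only for illustration.

```python
# Assumption: the desktop-publishing point, defined as exactly 1/72 inch.
POINTS_PER_INCH = 72
MM_PER_INCH = 25.4


def points_to_inches(points: float) -> float:
    """Convert a size in points to inches under the 72-points-per-inch assumption."""
    return points / POINTS_PER_INCH


def points_to_mm(points: float) -> float:
    """Convert a size in points to millimetres via inches."""
    return points_to_inches(points) * MM_PER_INCH


if __name__ == "__main__":
    # Print a few common type sizes in both units.
    for size in (10, 12, 14, 72):
        print(f"{size} pt = {points_to_inches(size):.4f} in = {points_to_mm(size):.2f} mm")
```

Under this assumption a 72-point size corresponds to one inch, and 12-point type to one sixth of an inch.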

Typography One Typeface Classification Why Classify?

Why classify? Classification helps us describe and navigate type choices.

Typeface classification helps to:
1. sort type (scholars, historians, type manufacturers),
2. reference type (educators, students, designers, scholars)

Approximately 250,000 digital typefaces are available today. Even with excellent search engines, a common system of description is a big help!

Classification systems. Many systems have been proposed:
Francis Thibaudeau, 1921
Maximilien Vox, 1952
Vox-ATypI, 1962
Aldo Novarese, 1964
Alexander Lawson, 1966 (Blackletter, Venetian, French, Dutch-English, Transitional, Modern, Sans Serif, Square Serif, Script-Cursive, Decorative)
J. Ben Lieberman, 1967
Marcel Janco, 1978
Ellen Lupton, 2004

The classification system you will learn is a combination of Lawson’s and Lupton’s systems: Black Letter, Old Style serif, Transitional serif, Modern Style serif, Script Cursive, Slab Serif, Geometric Sans, Grotesque Sans, Humanist Sans, Display & Decorative.

Basic characteristics: stress, serifs (or lack thereof), shape.

Stress (where the thinnest parts of a letter fall):
diagonal stress: Old Style serif (Centaur)
vertical stress: Transitional serif or Modern Style serif (Baskerville)
no stress: Slab Serif or Sans Serif (Helvetica)
horizontal stress or reverse stress: Display & Decorative (Edmunds)

Serif types:
bracketed serifs: Old Style Serif and Transitional Serif (Baskerville)
unbracketed serifs: Modern Style Serif (Bodoni)
slab serifs: Slab Serif or Square Serif or Egyptian (Rockwell/Clarendon)
no serif: Sans Serif (Helvetica)

Shape:
Geometric Sans Serif (Futura): Geometric sans are based on basic shapes like circles, triangles, and ...
Grotesk Sans Serif (Helvetica): Grotesk sans look precisely drawn. They have uniform, ...
Humanist Sans Serif (Gill Sans): Humanist sans are based on human writing.

Basic Styles of Lettering for Monuments and Markers

BASIC STYLES OF LETTERING FOR MONUMENTS AND MARKERS
Monument Builders of North America, Inc.

A Guide To The Selection of LETTERING

From primitive times, man has sought to communicate with his fellow men through symbols and graphics which conveyed meaning. Slowly he evolved signs and hieroglyphics which became the visual expression of his language.

Ultimately, this process evolved into the writing and the alphabets of the various tongues and civilizations. The early scribes and artists refined these alphabets, and the development of printing led to the design of alphabets of related character and ready readability.

Memorial art, one of the oldest of the arts, was among the first to use symbols and “letters” to inscribe lasting records and history into stone. The sculptors and carvers of each generation influenced the form of letters and numerals and used them to add both meaning ...

... crude or garish or awkward letters, but in letters of harmonized alphabets which have dignity, balance and legibility. At the same time, they are letters which are designed to engrave or incise cleanly and clearly into monumental stone, and to resist change or obliteration through year after year of exposure.

The purpose of this book is to illustrate the basic styles or types of alphabets which have been proved in memorial art, and which are both appropriate and practical in the lettering of monuments and markers.

Lettering or engraving of family memorials or individual markers is done today with superb fidelity through the use of lasers or the sandblast process, which employs a powerful stream or jet of abrasive “sand” to cut into the granite or marble.

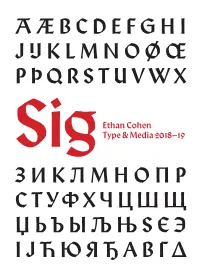

Sig Process Book

SIG: A Revival of Rudolf Koch’s Wallau
Ethan Cohen, Type & Media 2018–19

Submitted as part of Paul van der Laan’s Revival class for the Master of Arts in Type & Media course at Koninklijke Academie van Beeldende Kunsten (Royal Academy of Art, The Hague)

INTRODUCTION

“I feel such a closeness to William Morris that I always have the feeling that he cannot be an Englishman, he must be a German.” RUDOLF KOCH

Project Overview

Sig is a revival of Rudolf Koch’s Wallau Halbfette. My primary source material was the Klingspor Kalender für das Jahr 1933 (Klingspor Calendar for the Year 1933), a 17.5 × 9.6 cm book set in various cuts of Wallau. The Klingspor Kalender was an annual promotional keepsake printed by the Klingspor Type Foundry in Offenbach am Main that featured different Klingspor typefaces every year. This edition has a daily calendar set in Magere Wallau (Wallau Light) and an 18-page collection of fables set in 9 pt Wallau Halbfette (Wallau Semibold) with woodcut illustrations by Willi Harwerth, who worked as a draftsman at the Klingspor Type Foundry.

Choosing Fonts – Quick Tips

1. Choose complementary fonts: choose a font that matches the mood of your design. For business cards, it is probably best to choose a classic font.
*Note: These fonts are not available in Canva, but are in the Microsoft Office Suite. For some good Canva options, go to this link: https://www.canva.com/learn/canva-for-work-brand-fonts/
Examples:
Serif Fonts: Times New Roman, Cambria, Georgia, Courier New, Century Schoolbook
Sans Serif Fonts: Helvetica, Arial, Verdana, Calibri

2. Establish a visual hierarchy: use fonts to separate different types of information and guide the reader.
- Use different fonts, sizes, weights (boldness), and even color
- Example:
Heading (Helvetica, SZ 22, Bold)
Sub-heading (Helvetica, SZ 16, Italics)
Body Text (Garamond, SZ 12, Regular)
Captions (Garamond, SZ 10, Regular)

3. Mix Serifs and Sans Serifs: This is one of the best ways to add visual interest to type. See in the above example how I combined Helvetica, a sans serif font, with Garamond, a serif font.

4. Create Contrast, Not Conflict: Fonts that are too dissimilar may not pair well together. Contrast is good, but fonts need a connecting element.

5. Use Fonts from the Same Family: These fonts were created to work together. For example, the fonts in the Arial or Courier families.

6. Limit Your Number of Fonts: No more than 2 or 3 is a good rule. For business cards, choose 2.

7. Trust Your Eye: These are not concrete rules. You will know if a design element works or not!

Background a Short Introduction to Font Characteristics

fonts: background
A short introduction to font characteristics
Maarten Gelderman

abstract
Almost anyone who develops an interest in fonts is bound to be overwhelmed by the bewildering variety of letterforms available. The number of fonts available from commercial suppliers like Adobe, URW, LinoType and others runs into the thousands. A recent catalog issued by FontShop [Truong et al., 1998] alone lists over 25,000 different varieties. And somehow, although the differences of the individual letters are hardly noticeable, each font has its own character, its own personality. Even the atmosphere elicited by a text set from Adobe Garamond is noticeably different from the atmosphere of the same text set from Stempel Garamond. Although decisions about the usage of fonts will always remain in the realm of esthetics, some knowledge about font characteristics may nevertheless help to create some order and to find out why certain design decisions just do not work.

Hardly anyone will dispute the statement that proportionally spaced fonts are more beautiful and legible than monospaced designs. In a monospaced design the letter i takes as much space as a letter m or W. Consequently, some characters look simply too compressed, whereas around others too much white space is found. Monospaced fonts are simply not suited for body text. Only in situations where it is important that all characters are of equal width, e.g., in listings of computer programs, where it may be important that each individual character can be discerned and where the layout of the program may depend on using monospaced fonts, can the usage of a monospaced font be defended. In most other situations, they should simply be avoided.

Romans, italics and slant
A second typeface character- ...

Vision Performance Institute

Vision Performance Institute Technical Report
Individual character legibility
James E. Sheedy, OD, PhD; Yu-Chi Tai, PhD; John Hayes, PhD

The purpose of this study was to investigate the factors that influence the legibility of individual characters. Previous work in our lab [2], including the first study in this sequence, has studied the relative legibility of fonts with different anti-aliasing techniques or other presentation media, such as paper. These studies have tested the relative legibility of a set of characters configured with the tested conditions. However, the relative legibility of individual characters within the character set has not been studied. While many factors seem to affect the legibility of a character (e.g., character typeface, character size, image contrast, character rendering, the type of presentation media, the amount of text presented, viewing distance, etc.), it is not clear what makes a character more legible when presented in one way than in another. In addition, the importance of those factors to the legibility of one character may not hold when the same set of factors is applied to another character. Some characters may be more legible in one typeface and others more legible in another typeface. What are the character features that affect legibility? For example, some characters have wider openings (e.g., the opening of “c” in Calibri is wider than that of “c” in Helvetica); some letter g’s have double bowls while some have single (e.g., “g” in Batang vs. “g” in Verdana); some have longer ascenders or descenders (e.g., “b” in Constantia vs. ...

A Collection of Mildly Interesting Facts About the Little Symbols We Communicate With

Typographic Factettes
A collection of mildly interesting facts about the little symbols we communicate with.

The horizontal bars of a letter are almost always thinner than the vertical bars.

The font size is approximately the measurement from the lowest appearance of any letter to the highest. Most of the time. Seventy-two points equals one inch.

Letters with rounded bottoms don’t sit on the baseline, but slightly below it. Visually, they would appear too high if they rested on the same base as the squared letters.

In Ancient Rome, scribes would abbreviate et (the Latin word for and) into one letter. We still use that abbreviation, called the ampersand. The et is still very visible in some italic ampersands. The word ampersand comes from and-per-se-and. Strange.

[Type specimens, labeled in the original: Helvetica; Minion; Cochin, 256 point; Zapfino, 50 point; Adobe Caslon Bold; Adobe Garamond Regular; Adobe Garamond Italic; Trump Mediaeval Italic; Helvetica Light. A final passage set on a spiral is not recoverable from the extracted text.]

The Selnolig Package: Selective Suppression of Typographic Ligatures*

The selnolig package: Selective suppression of typographic ligatures
Mico Loretan
2015/10/26

Abstract
The selnolig package suppresses typographic ligatures selectively, i.e., based on predefined search patterns. The search patterns focus on ligatures deemed inappropriate because they span morpheme boundaries. For example, the word shelfful, which is mentioned in the TeXbook as a word for which the ff ligature might be inappropriate, is automatically typeset without the ff ligature rather than with it. For English and German language documents, the selnolig package provides extensive rules for the selective suppression of so-called “common” ligatures. These comprise the ff, fi, fl, ffi, and ffl ligatures as well as the ft and fft ligatures. Other f-ligatures, such as fb, fh, fj and fk, are suppressed globally, while making exceptions for names and words of non-English/German origin, such as Kafka and fjord. For English language documents, the package further provides ligature suppression rules for a number of so-called “discretionary” or “rare” ligatures, such as ct, st, and sp. The selnolig package requires use of the LuaLaTeX format provided by a recent TeX distribution, e.g., TeX Live 2013 and MiKTeX 2.9.

Contents
1 Introduction
2 I’m in a hurry! How do I start using this package?
2.1 How do I load the selnolig package?
2.2 Any hints on how to get started with LuaLaTeX?
2.3 Anything else I need to do or know?
3 The selnolig package’s approach to breaking up ligatures
3.1 Free, derivational, and inflectional morphemes

Brand Manual the NEW BRAND DESIGN GUIDELINES for “LIPSUS”, a PRODUCT by SHAHAK-TEC LTD

AppleBay Media
Uziya Street 15/10, Jerusalem 9314315
+972 50 990 2285
[email protected]
www.applebaymedia.com
Company Representative: David Metzler

Brand Manual
THE NEW BRAND DESIGN GUIDELINES FOR “LIPSUS”, A PRODUCT BY SHAHAK-TEC LTD.
March 2019
BRAND DESIGN MANUAL V1, PREPARED FOR LIPSUS

Address: Shahak-Tec Ltd., 1 Avshalom Road, P.O. Box 166, Zikhron Yaaqov 3095101, Israel
Phone & Fax: Phone: +972 4 624 4444; Fax: +972 4 635 0999
Online: Email 1: [email protected]; Email 2: [email protected]; Website: www.shahaktec.com

Brand Identity? Have a Look when Image meets Design.
Lipsus Corporate Brand Guidelines, Version: v1 // 2019

Table of contents
SECTION 1 | INTRODUCTION
SECTION 2 | BRAND LOGO
SECTION 3 | BRAND TYPOGRAPHY
SECTION 4 | BRAND COLOR SYSTEM

These guidelines describe the visual and verbal elements that represent Lipsus’ brand identity.

1. INTRODUCTION
THE DESIGN GUIDELINES
These guidelines describe the visual and verbal elements that represent the corporate identity of Lipsus. This includes our name, logo and other elements such as color, type and graphics. Sending a consistent and controlled message of who you are is essential to presenting a strong, unified image of your company. These guidelines should reflect Lipsus’ commitment to quality, consistency and style. The Lipsus brand, including the logo, name, colors and identifying elements, is a valuable company asset. Each of you is responsible for protecting the company’s interests by preventing unauthorized or incorrect use of the Lipsus name and marks.
DAVID METZLER, AppleBay Media

2. The Logo
• Introduction
• Elements
• Versions
• Dimensions
• Applications

Zapfcoll Minikatalog.Indd

Largest compilation of typefaces from the designers Gudrun and Hermann Zapf. Most of the fonts include the Euro symbol. Licensed for 5 CPUs. 143 high quality typefaces in PS and/or TT format for Mac and PC.

Typefaces named on the cover: Alcuin™, Aldus™, AMS Euler™, Aurelia™, Carmina™, Colombine™, Edison™, Kompakt™, Marconi™, Medici Script™, Melior™, Noris™, Optima™, Palatino™, Shakespeare™, Sistina™, Vario™, Zapf Book®, Zapf Chancery®, Zapf International®, Zapf Renaissance Antiqua®.

Introduction
Gudrun & Hermann Zapf Collection
The Gudrun and Hermann Zapf Collection is a special edition for Macintosh and PC and the largest compilation of typefaces from the designers Gudrun and Hermann Zapf.

Vademecum Logo+Font

Guide to the use of the logotype and fonts

Logotype
The Mosaico+ logo is available in two versions: the full logotype, and the pictogram with or without the payoff.

1. Full logotype (MOSAICO+), to be used in every institutional context, such as:
· letters
· legal documents
· email signatures
· presentations
· catalogs
· projects
· price lists
· offers
· ...

2. Pictogram (M+), to be used:
· on the Mosaico+ website and social channels where the profile itself is named Mosaico+
· in presentations or tools that already carry the full logo
· on photographic images and installations to be attributed to the brand
· wherever the space is so small that the full logo would be illegible
· ...

3. The pictogram may be accompanied by the payoff (Shaping mosaics) only if it is legible.

The logotype and pictogram are available exclusively in:
· black for all institutional communications
· white for covers or for branding images

The color, proportions and orientation of the logo must not be changed. In images, the logo is placed at the bottom right. Collection images are an integral part of the brand's communication, so logos other than the official one may not be applied to them.

Official fonts
4. For all institutional or commercial communications:
· Helvetica light 45, or Helvetica heavy 85 for bold (alternatively Helvetica regular, or Helvetica bold for bold)
For technical texts or long contractual or legal texts:
· Trade gothic condensed 18, or Trade gothic condensed 20 for bold.

For any clarification or specific requirement, please contact the marketing office in advance: [email protected]