Multimedia Computing: Applications, Designs, and Human Factors

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


NOVA College-Wide Course Content Summary ITE 170 - Multimedia Software (3 Cr.)

Revised 8/2012 NOVA COLLEGE-WIDE COURSE CONTENT SUMMARY ITE 170 - MULTIMEDIA SOFTWARE (3 CR.) Course Description Explores technical fundamentals of creating multimedia projects with related hardware and software. Students will learn to manage resources required for multimedia production and evaluation and techniques for selection of graphics and multimedia software. Lecture 3 hours per week. General Purpose In this course students will learn the concepts, design and implementation of multimedia for the web. Students will create professional websites which incorporate images, sound, video and animation. The materials created will correspond to standards for Web accessibility and copyright issues. Course Prerequisites/Corequisites Prerequisite: ITE 115 Course Objectives Upon completion of this course, the student will be able to: a) Plan and organize a multimedia web site b) Understand the design concepts for creating a multimedia website c) Use a web authoring tool to create a multimedia web site d) Understand the design concepts related to creating and using graphics for the web e) Use graphics software to create and edit images for the web f) Understand the design concepts related to creating and using animation, audio and video for the web g) Use animation software to create and edit animations for the web h) Use software tools to publish and maintain a multimedia web site Major Topics to be Included Plan and organize a multimedia web site User characteristics and their effect upon design considerations Principles of good design -

Image Compression, Comparison Between Discrete Cosine Transform and Fast Fourier Transform and the Problems Associated with DCT

Image Compression, Comparison between Discrete Cosine Transform and Fast Fourier Transform and the problems associated with DCT Imdad Ali Ismaili 1, Sander Ali Khowaja 2, Waseem Javed Soomro 3 1Institute of Information and Communication Technology, University of Sindh, Jamshoro, Sindh, Pakistan 2Institute of Information and Communication Technology, University of Sindh, Jamshoro, Sindh, Pakistan Abstract - The research article focuses on image compression techniques such as the Discrete Cosine Transform (DCT) and the Fast Fourier Transform (FFT). These techniques are chosen because of their vast use in the image processing field; JPEG (Joint Photographic Experts Group) is one example of a compression technique which uses DCT. The research compares the two compression techniques based on DCT and FFT and compares their results using a MATLAB Graphical User Interface (GUI). These results are based on the two compression techniques with different rates of compression, i.e. compression rates of 90%, 60%, 30% and 5%. The technique allows compressing any picture format to JPG format. [...] much higher than the calculations mentioned above; this increases the bandwidth requirement of the channel, which is very costly. This is the challenging part for researchers: to transmit these digital signals through a limited-bandwidth communication channel. Most of the time a way is found to overcome this obstacle, but sometimes it is impossible to send these digital signals in their raw form. Though there has been a revolution in the increased capacity and decreased cost of storage over the past years, the requirement of data storage and data processing applications is growing explosively to outpace this achievement.[8] -

Network Requirements for High-Speed Real-Time Multimedia

NETWORK REQUIREMENTS FOR HIGH-SPEED REAL-TIME MULTIMEDIA DATA STREAMS Andrei Sukhov1), Prasad Calyam2), Warren Daly3), Alexander Iliin4) 1) Laboratory of Network Technologies, Samara Academy of Transport Engineering, Samara, Russia 2) Department of Electrical and Computer Engineering, Ohio State University, Ohio USA 3) HEANet Ltd, Dublin, Ireland 4) Russian Institute for Public Network, Moscow, Russia Abstract High-speed real-time multimedia applications such as Videoconferencing and HDTV have already become popular in many end-user communities. Developing better network protocols and systems that support such applications requires a sound understanding of the voice and video traffic characteristics and factors that ultimately affect end-user perception of audiovisual quality. In this paper we attempt to propose an analytical model for the various network factors whose interdependencies need to be modeled for characterizing end-user perception of audiovisual quality for high-speed real-time multimedia data streams. Towards validating our theory presented in this paper, we describe our initial results and future plans that consist of conducting a series of experiments and potential modifications to our proposed model. As the portion of middle and high-speed RTP (Real-Time Protocols) applications in the total communication over the Internet grows, it is important to understand the behavior of such traffic over the TCP/IP networks. The impetuous growth of traffic volumes and the rapid advances in the technologies of new-generation networks like IPv6 have made it possible for use of such high-speed applications, but with new demands on the network to deliver superior Quality of Service (QoS) to these applications. 
Our research focuses on the area of quality criteria for middle and high-speed network applications like • Video Conferencing / Streaming • Grid applications • P2P applications Audio- and Videoconferencing are two major multimedia communications that are defined by various international standards such as H.320, H.323 and SIP. -

Multimedia in Teacher Education: Perceptions & Uses

Journal of Education and Practice www.iiste.org ISSN 2222-1735 (Paper) ISSN 2222-288X (Online) Vol 3, No 1, 2012 Multimedia in Teacher Education: Perceptions & Uses Gourav Mahajan* Sri Sai College of Education, Badhani, Pathankot, Punjab, India * E-mail of the corresponding author: [email protected] Abstract Educational systems around the world are under increasing pressure to use the new technologies to teach students the knowledge and skills they need in the 21st century. Education is at the confluence of powerful and rapidly shifting educational, technological and political forces that will shape the structure of educational systems across the globe for the remainder of this century. Many countries are engaged in a number of efforts to effect changes in the teaching/learning process to prepare students for an information and technology based society. Multimedia provide an array of powerful tools that may help in transforming the present isolated, teacher-centred and text-bound classrooms into rich, student-focused, interactive knowledge environments. The schools must embrace the new technologies and appropriate multimedia approach for learning. They must also move toward the goal of transforming the traditional paradigm of learning. Teacher education institutions may either assume a leadership role in the transformation of education or be left behind in the swirl of rapid technological change. For education to reap the full benefits of multimedia in learning, it is essential that pre-service and in-service teachers have basic skills and competencies required for using multimedia. -

History of Computer Game Design Our Collaboration Will Build on Projects That We Two Have Been Engaged in for Some Time

How They Got Game: The History and Culture of Interactive Simulations and Video Games http://www.stanford.edu/dept/HPS/VideoGameProposal/ Principal Investigators Tim Lenoir, History of Science, email: [email protected] Henry Lowood, Stanford University Libraries, email: [email protected] Research and Development Team Carlos Seligo, ATS Freshman Sophomore Programs, email: [email protected] Carlos co-designed the Word and the World sites. His Blade Runner site is one of the most innovative interactive educational sites on the web Rosemary Rogers, Program Administrator, HPS, email: [email protected] Rosemary has designed the Mousesite, the HPS sites, and the Sloan Science and Technology in the Making sites. She has won numerous awards for her website designs. Undergraduate Interns Zachary Pogue, sophomore, email: [email protected] Zach is part of the DMZ design team that won the VPUE website design contract. He has extensive experience in interactive website design John Eric Fu, senior, email: [email protected] As a Presidential Scholar John has completed an outstanding research project comparing British and American video game products and culture. Since his early teens he has been a video game reviewer for Macworld. Grad Students Casey Alt, History of Science and Film Studies, email: [email protected] Casey has worked with Scott Bukatman, Pamela Lee, and Lenoir. He is working on Japanese Manga film and has extensive media experience. Yorgos Panzaris, History of Science, email: [email protected] Yorgos has a master's degree in educational technologies from the MIT MediaLab and has extensive experience with databases and online design. He has worked with Lenoir on several recent projects. -

Lecture 11 : Discrete Cosine Transform Moving Into the Frequency Domain

Lecture 11 : Discrete Cosine Transform Moving into the Frequency Domain Frequency domains can be obtained through the transformation from one (time or spatial) domain to the other (frequency) via Fourier Transform (FT) (see Lecture 3): MPEG Audio. Discrete Cosine Transform (DCT) (new): heart of JPEG and MPEG Video, MPEG Audio. Note: We mention some image (and video) examples in this section with DCT (in particular), but the FT is also commonly applied to filter multimedia data. External Link: MIT OCW 8.03 Lecture 11 Fourier Analysis Video Recap: Fourier Transform The tool which converts a spatial (real space) description of audio/image data into one in terms of its frequency components is called the Fourier transform. The new version is usually referred to as the Fourier space description of the data. We then essentially process the data: e.g. for filtering, this basically means attenuating certain frequencies or setting them to zero. We then need to convert the data back to real audio/imagery to use in our applications. The corresponding inverse transformation, which turns a Fourier space description back into a real space one, is called the inverse Fourier transform. What do Frequencies Mean in an Image? Large values at high frequency components mean the data is changing rapidly on a short distance scale, e.g. a page of small font text, a brick wall, vegetation. Large low frequency components mean the large scale features of the picture are more important, e.g. a single fairly simple object which occupies most of the image. The Road to Compression How do we achieve compression? Low pass filter: ignore high frequency noise components. Only store lower frequency components. High pass filter: spot gradual changes. If changes are too low/slow, the eye does not respond, so ignore? 
Low Pass Image Compression Example MATLAB demo, dctdemo.m (uses DCT), to: load an image; low pass filter in frequency (DCT) space; tune compression via a single slider value n to select coefficients; inverse DCT, then subtract input and filtered image to see compression artefacts. -
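The low pass steps listed in this excerpt can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the lecture's dctdemo.m: the function names (`dct_matrix`, `low_pass_compress`), the square grayscale input, and the rule "keep the top-left n x n block of coefficients" are all choices made here for the sketch.

```python
import math

def dct_matrix(n_points):
    """Orthonormal DCT-II matrix C (C times its transpose is the identity)."""
    rows = []
    for k in range(n_points):
        scale = math.sqrt((1.0 if k == 0 else 2.0) / n_points)
        rows.append([scale * math.cos(math.pi * (2 * i + 1) * k / (2 * n_points))
                     for i in range(n_points)])
    return rows

def matmul(a, b):
    """Plain list-of-lists matrix product."""
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]

def transpose(a):
    return [list(col) for col in zip(*a)]

def low_pass_compress(image, n):
    """Keep only the n x n lowest-frequency DCT coefficients of a square image.

    Returns (reconstruction, error), where error = input - reconstruction.
    The n x n selection rule is an assumption of this sketch.
    """
    size = len(image)
    c = dct_matrix(size)
    ct = transpose(c)
    coeffs = matmul(matmul(c, image), ct)           # forward 2-D DCT
    kept = [[coeffs[u][v] if (u < n and v < n) else 0.0
             for v in range(size)] for u in range(size)]
    recon = matmul(matmul(ct, kept), c)             # inverse 2-D DCT
    error = [[image[r][k] - recon[r][k] for k in range(size)]
             for r in range(size)]
    return recon, error
```

Calling `low_pass_compress(image, n)` with a small n discards most high-frequency detail; the returned error image plays the role of the "subtract input and filtered image" step, and with n equal to the image size the reconstruction matches the input up to rounding.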

Image Compression Using Discrete Cosine Transform Method

Qusay Kanaan Kadhim, International Journal of Computer Science and Mobile Computing, Vol.5 Issue.9, September- 2016, pg. 186-192 Available Online at www.ijcsmc.com A Monthly Journal of Computer Science and Information Technology ISSN 2320–088X IMPACT FACTOR: 5.258 Image Compression Using Discrete Cosine Transform Method Qusay Kanaan Kadhim Al-Yarmook University College / Computer Science Department, Iraq [email protected] ABSTRACT: The processing of digital images has gained wide importance in recent decades due to the rapid development of communication techniques and the need to find and develop methods that assist in enhancing and exploiting image information. Digital image compression has become an important field of digital image processing due to the need to exploit the available storage space as much as possible and to reduce the time required to transmit an image. The Baseline JPEG Standard technique is used to compress images with 8-bit color depth. Basically, this scheme consists of seven operations, including sampling, partitioning, the transform, quantization, entropy coding and Huffman coding. First, the sampling process is used to reduce the size of the image and the number of bits required to represent it. Next, the partitioning process is applied to the image to get (8×8) image blocks. Then, the discrete cosine transform is used to transform the image block data from the spatial domain to the frequency domain to make the data easy to process. -
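The partitioning, transform and quantization steps named in this abstract can be illustrated with a short Python sketch. It is a simplified assumption-laden example rather than the paper's implementation: the single uniform step size `q`, the function names, and the requirement that the image dimensions be multiples of 8 are choices made here, and a real baseline JPEG encoder uses per-frequency quantization tables followed by entropy coding.

```python
import math

BLOCK = 8  # block size taken from the (8x8) partitioning in the abstract

def dct_1d(v):
    """Orthonormal 1-D DCT-II of a sequence."""
    n = len(v)
    out = []
    for k in range(n):
        scale = math.sqrt((1.0 if k == 0 else 2.0) / n)
        out.append(scale * sum(v[i] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                               for i in range(n)))
    return out

def dct_2d(block):
    """Separable 2-D DCT: transform the rows, then the columns."""
    rows = [dct_1d(r) for r in block]
    cols = [dct_1d(list(c)) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]

def partition(image):
    """Split an image (height and width multiples of 8) into 8x8 blocks, row-major."""
    blocks = []
    for top in range(0, len(image), BLOCK):
        for left in range(0, len(image[0]), BLOCK):
            blocks.append([row[left:left + BLOCK] for row in image[top:top + BLOCK]])
    return blocks

def encode_blocks(image, q=16):
    """Level-shift 8-bit samples by 128, apply the DCT, quantize with one step size q.

    The uniform step q is a hypothetical stand-in for a quantization table.
    """
    encoded = []
    for block in partition(image):
        shifted = [[px - 128 for px in row] for row in block]
        coeffs = dct_2d(shifted)
        encoded.append([[round(c / q) for c in row] for row in coeffs])
    return encoded
```

For a flat region every quantized coefficient except the first (DC) one is zero, which is what makes the later entropy coding stage effective.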

Hybrid Multimedia Room — System User Instructions

Office of Information Technology and Resources Customer Services 516.877.3340 [email protected] Hybrid Multimedia Room — System User Instructions POWER ON/OFF To begin using the system, select the PWR button. Note: PWR button will blink to confirm selection while powering up and powering down. The button will stop blinking when system is ready for use. (Source buttons will light to confirm selections.) When the power light remains solid, the system is ready for use. To turn system off later, simply select the PWR button again. CONNECTING TO THE DESKTOP COMPUTER To use the computer, push the power button and select the COMP button on the control panel. Once this is done the computer power button should light up green and you should see the computer boot up screen displayed on the projector. After the computer finishes booting up, the desktop will be displayed on the screen and you will have full use of the computer. CONNECTING TO A LAPTOP Using the VGA cable provided, connect your laptop to the system. Make sure the COMP button on the control panel is selected and power on your laptop. Once the laptop is on, its signal will automatically override the built-in computer signal and you will be able to see the laptop screen through the projector. If the projector is not showing the laptop screen, confirm that the laptop is set to display external video (depress key combination Fn + F8 on most Adelphi laptops; Fn + F5 on HPs). Note: If you need to play audio from your laptop, plug the provided audio cable in the headphone jack on your laptop. -

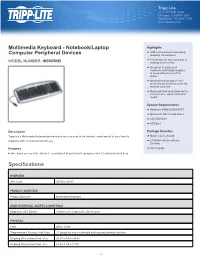

Specifications Multimedia Keyboard

Multimedia Keyboard - Notebook/Laptop Computer Peripheral Devices MODEL NUMBER: IN3005KB Highlights ● USB connection provides plug-and-play convenience ● Perfect size for any notebook or desktop environment ● Sleep hot key puts your notebook or desktop computer to sleep with a touch of the button ● Internet hot keys deliver one-touch instant access to a variety of Internet functions ● Multimedia hot keys allow you to control music, videos and other media System Requirements ● Windows 98/ME/2000/XP/NT ● Macintosh OS 8.5 and above ● CD-ROM drive ● USB port Description Tripp Lite's Multimedia Keyboard provides one-touch access to the Internet, email and all of your favorite programs with 12 customized hot keys. Package Includes ● Multimedia keyboard ● CD-ROM with iKeyWorks Software ● User's guide Features ● One-touch access to the Internet, email and all of your favorite programs with 12 customized hot keys Specifications OVERVIEW UPC Code 037332126917 PRODUCT OVERVIEW Product Overview Multimedia Keyboard USER INTERFACE, ALERTS & CONTROLS Diagnostic LED Details 3 (Num Lock, Caps Lock, Scroll Lock) PHYSICAL Color Black; Silver Programmable Buttons / Hot Keys 12 (sleep hot key, multimedia hot keys and Internet hot keys) Shipping Dimensions (hwd / cm) 20.83 x 4.44 x 45.47 Shipping Dimensions (hwd / in.) 8.20 x 1.75 x 17.90 Shipping Weight (kg) 1.00 Shipping Weight (lbs.) 2.20 Unit Dimensions (hwd / cm) 42.55 x 20.32 x 1.91 Unit Dimensions (hwd / in.) 16.75 x 8 x 0.75 Unit Weight (kg) 0.71 Unit Weight (lbs.) 1.57 FEATURES & SPECIFICATIONS USB 1.1 Compatible Yes USB 2.0 Compatible Yes USB Powered Yes STANDARDS & COMPLIANCE Certifications FCC and CE WARRANTY Product Warranty Period (Worldwide) 1-year limited warranty © 2021 Tripp Lite. -

An Overview of Multimedia Content Protection in Consumer Electronics Devices

An overview of multimedia content protection in consumer electronics devices Ahmet M. Eskicioglu* and Edward J. Delp‡ *Thomson Consumer Electronics Corporate Research 101 W. 103rd Street Indianapolis, Indiana 46290-1102 USA ‡ Video and Image Processing Laboratory (VIPER) School of Electrical and Computer Engineering Purdue University West Lafayette, Indiana 47907-1285 USA ABSTRACT A digital home network is a cluster of digital audio/visual (A/V) devices including set-top boxes, TVs, VCRs, DVD players, and general-purpose computing devices such as personal computers. The network may receive copyrighted digital multimedia content from a number of sources. This content may be broadcast via satellite or terrestrial systems, transmitted by cable operators, or made available as prepackaged media (e.g., a digital tape or a digital video disc). Before releasing their content for distribution, the content owners may require protection by specifying access conditions. Once the content is delivered to the consumer, it moves across the home network until it reaches its destination where it is stored or displayed. A copy protection system is needed to prevent unauthorized access to bit streams in transmission from one A/V device to another or while it is in storage on magnetic or optical media. Recently, two fundamental groups of technologies, encryption and watermarking, have been identified for protecting copyrighted digital multimedia content. This paper is an overview of the work done for protecting content owners’ investment in intellectual property. Keywords: multimedia, copy protection, cryptography, watermarking, consumer electronics, digital television, digital video disc, digital video cassette, home networks. 1. INTRODUCTION In the entertainment world, original multimedia content (e.g., text, audio, video and still images) is made available for consumers through a variety of channels. -

Steerable Discrete Cosine Transform

Politecnico di Torino Porto Institutional Repository [Proceeding] Steerable Discrete Cosine Transform Original Citation: Fracastoro, G.; Magli, E. (2015). Steerable Discrete Cosine Transform. In: 2015 IEEE International Workshop on Multimedia Signal Processing, 2015. pp. 1-6 Availability: This version is available at: http://porto.polito.it/2638898/ since: April 2016 Publisher: IEEE Published version: DOI:10.1109/MMSP.2015.7340827 Terms of use: This article is made available under terms and conditions applicable to Open Access Policy Article ("Public - All rights reserved"), as described at http://porto.polito.it/terms_and_conditions.html Steerable Discrete Cosine Transform Giulia Fracastoro #1, Enrico Magli #2 # Department of Electronics and Telecommunications, Politecnico di Torino, Italy 1 [email protected] 2 [email protected] Abstract: Block-based separable transforms tend to be inefficient when blocks contain arbitrarily shaped discontinuities. For this reason, transforms incorporating directional information are an appealing alternative. In this paper, we propose a new approach to this problem, designing a new transform that can be steered in any chosen direction and that is defined in a rigorous mathematical way. [...] the use of symmetry to reduce the number of transform matrices needed [7]–[9]. However, the main problem of these methods is that training sets must be processed to obtain transforms that are optimal for a given mode. Recently, a new approach in image and video coding is -

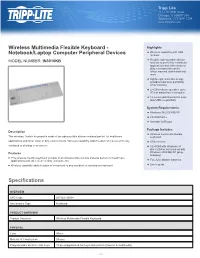

Specifications Wireless Multimedia Flexible Keyboard

Wireless Multimedia Flexible Keyboard - Notebook/Laptop Computer Peripheral Devices MODEL NUMBER: IN3010KB Highlights ● Wireless capability with USB receiver ● Flexible, indestructible silicone material is perfect for healthcare applications and other clean or dirty environments-can be rolled, washed, disinfected and more ● Lightweight, ultra-slim design provides maximum portability while traveling ● 2.4 GHz allows operation up to 30 feet away from a computer ● 18 multimedia/Internet hot keys plus USB compatibility System Requirements ● Windows 98/2000/ME/XP ● CD-ROM drive ● Available USB port Description This wireless, flexible keyboard is made of an indestructible silicone material perfect for healthcare applications and other clean or dirty environments. Wireless capability adds freedom of movement to any notebook or desktop environment. Package Includes ● Wireless multimedia flexible keyboard ● USB receiver ● CD-ROM with Windows 98 driver (Driver not required with Windows 2000/ME/XP (plug-and-play)) ● Two AAA alkaline batteries ● User's guide Features ● This wireless, flexible keyboard is made of an indestructible silicone material perfect for healthcare applications and other clean or dirty environments ● Wireless capability adds freedom of movement to any notebook or desktop environment Specifications OVERVIEW UPC Code 037332135834 Accessories Type Keyboard PRODUCT OVERVIEW Product Overview Wireless Multimedia Flexible Keyboard PHYSICAL Color White Material of Construction Silicone Programmable Buttons / Hot Keys 18 pre-programmed