Oculus out to Let People Touch Virtual Worlds
18 June 2015, by Glenn Chapman

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Oculus Rift CV1 (Model HM-A) Virtual Reality Headset System Report by Wilfried THERON March 2017

Oculus Rift CV1 (Model HM-A) Virtual Reality Headset System report by Wilfried THERON, March 2017
21 rue la Noue Bras de Fer, 44200 NANTES - FRANCE, +33 2 40 18 09 16, [email protected], www.systemplus.fr
©2017 by System Plus Consulting | Oculus Rift CV1 Head-Mounted Display (SAMPLE)

Table of Contents

Overview / Introduction
o Executive Summary
o Main Chipset
o Block Diagram
o Reverse Costing Methodology

Company Profile
o Oculus VR, LLC

Physical Analysis
o Views and Dimensions of the Headset
o Headset Opening
o Fresnel Lens Details
o NIR LED Details
o Microphone Details
o Display Details
o Main Electronic Board
  Top Side – Global view
  Top Side – High definition photo
  Top Side – PCB markings
  Top Side – Main components markings
  Top Side – Main components identification
  Top Side – Other components markings
  Top Side – Other components identification
  Bottom Side – High definition photo
o LED Driver Board
o NIR LED Flex Boards
o Proximity Sensor Flex

Cost Analysis
o Accessing the BOM
o PCB Cost
o Display Cost
o BOM Cost – Main Electronic Board
o BOM Cost – NIR LED Flex Boards
o BOM Cost – Proximity Sensor Flex
o Housing Parts – Estimation
o BOM Cost - Housing
o Material Cost Breakdown by Sub-Assembly
o Material Cost Breakdown by Component Category
o Accessing the Added Value (AV) cost
o Main Electronic Board Manufacturing Flow
o Details of the Main Electronic Board AV Cost
o Details of the System Assembly AV Cost
o Added-Value Cost Breakdown
o Manufacturing Cost Breakdown

Estimated Price Analysis
o Estimation of the Manufacturing Price

Company services

OVERVIEW METHODOLOGY

Executive Summary
This full reverse costing study has been conducted to provide insight on technology data, manufacturing cost and selling price of the Oculus Rift Headset* supplied by Oculus VR, LLC (website).

Phobulus: Treatment of Phobias with Virtual Reality

Universidad ORT Uruguay, Facultad de Ingeniería. Phobulus: Treatment of phobias with virtual reality. Submitted as a requirement for obtaining the degree of Systems Engineer. Juan Martín Corallo – 172169, Christian Eichin – 173551, Santiago Pérez – 170441, Horacio Torrendell – 172844. Advisor: Nicolás Fornaro. 2016.

Declaration of authorship. We, Juan Martín Corallo, Christian Eichin, Santiago Pérez and Horacio Torrendell, declare that the work presented here is our own. We can affirm that: the work was produced in its entirety while we were carrying out the final degree project of the Systems Engineering program; when we consulted the published work of others, we attributed it clearly; when we quoted the works of others, we indicated the sources, and with the exception of these quotations the work is entirely our own; in the work, we have acknowledged the help received; when the work is based on work done jointly with others, we have clearly explained what was contributed by others and what was contributed by us; no part of this work has been published prior to its submission, except where the corresponding clarifications have been made. Date: August 23, 2016. Juan Martín Corallo, Christian Eichin, Santiago Pérez, Horacio Torrendell.

Acknowledgements. First, we want to thank the project advisor, MSc. Nicolás Fornaro, for accompanying and helping us with enthusiasm from the very beginning. He was always available, with the best disposition, to resolve questions in meetings or by electronic means, and to guide us in everything related to the project. We also want to thank the members of the ORT Software Factory laboratory, Dr.

Virtual Anatomy: Expanding Veterinary Student Learning

VIRTUAL PROJECTS DOI: dx.doi.org/10.5195/jmla.2020.1057 Virtual Anatomy: expanding veterinary student learning. Kyrille DeBose. See end of article for author’s affiliation.

Traditionally, there are three primary ways to learn anatomy outside the classroom. Books provide foundational knowledge but are limited in terms of object manipulation for deeper exploration. Three-dimensional (3D) software programs produced by companies including Biosphera, Sciencein3D, and Anatomage allow deeper exploration but are often costly, offered through restrictive licenses, or require expensive hardware. A new approach to teaching anatomy is to utilize virtual reality (VR) environments. The Virginia–Maryland College of Veterinary Medicine and University Libraries have partnered to create open education–licensed VR anatomical programs for students to freely download, access, and use. The first and most developed program is the canine model. After beta testing, this program was integrated into the first-year students’ physical examination labs in fall 2019. The VR program enabled students to walk through the VR dog model to build their conceptual knowledge of the location of certain anatomical features and then apply that knowledge to live animals. This article briefly discusses the history, pedagogical goals, system requirements, and future plans of the VR program to further enrich student learning experiences.

Virtual Projects are published on an annual basis in the Journal of the Medical Library Association (JMLA) following an annual call for virtual projects in MLAConnect and announcements to encourage submissions from all types of libraries. An advisory committee of recognized technology experts selects project entries based on their currency, innovation, and contribution to health sciences librarianship.

Novel Approach to Measure Motion-To-Photon and Mouth-To-Ear Latency in Distributed Virtual Reality Systems

Novel Approach to Measure Motion-To-Photon and Mouth-To-Ear Latency in Distributed Virtual Reality Systems
Armin Becher∗, Jens Angerer†, Thomas Grauschopf‡
∗ Technische Hochschule Ingolstadt, Esplanade 10, 85049 Ingolstadt, [email protected]
† AUDI AG, Auto-Union-Straße 1, 85045 Ingolstadt, [email protected]
‡ Technische Hochschule Ingolstadt, Esplanade 10, 85049 Ingolstadt, [email protected]

Abstract: Distributed Virtual Reality systems enable globally dispersed users to interact with each other in a shared virtual environment. In such systems, different types of latencies occur. For a good VR experience, they need to be controlled. The time delay between the user’s head motion and the corresponding display output of the VR system might lead to adverse effects such as a reduced sense of presence or motion sickness. Additionally, high network latency among worldwide locations makes collaboration between users more difficult and leads to misunderstandings. To evaluate the performance and optimize dispersed VR solutions it is therefore important to measure those delays. In this work, a novel, easy to set up, and inexpensive method to measure local and remote system latency will be described. The measuring setup consists of a microcontroller, a microphone, a piezo buzzer, a photosensor, and a potentiometer. With these components, it is possible to measure motion-to-photon and mouth-to-ear latency of various VR systems. By using GPS-receivers for timecode-synchronization it is also possible to obtain the end-to-end delays between different worldwide locations. The described system was used to measure local and remote latencies of two HMD based distributed VR systems.

Q4-15 Earnings

January 27th, 2016 Facebook Q4-15 and Full Year 2015 Earnings Conference Call

Operator: Good afternoon. My name is Chris and I will be your conference operator today. At this time I would like to welcome everyone to the Facebook Fourth Quarter and Full Year 2015 Earnings Call. All lines have been placed on mute to prevent any background noise. After the speakers' remarks, there will be a question and answer session. If you would like to ask a question during that time, please press star then the number 1 on your telephone keypad. This call will be recorded. Thank you very much.

Deborah Crawford, VP Investor Relations: Thank you. Good afternoon and welcome to Facebook’s fourth quarter and full year 2015 earnings conference call. Joining me today to discuss our results are Mark Zuckerberg, CEO; Sheryl Sandberg, COO; and Dave Wehner, CFO. Before we get started, I would like to take this opportunity to remind you that our remarks today will include forward-looking statements. Actual results may differ materially from those contemplated by these forward-looking statements. Factors that could cause these results to differ materially are set forth in today’s press release, our annual report on form 10-K and our most recent quarterly report on form 10-Q filed with the SEC. Any forward-looking statements that we make on this call are based on assumptions as of today and we undertake no obligation to update these statements as a result of new information or future events. During this call we may present both GAAP and non-GAAP financial measures.

Virtual Reality Tremor Reduction in Parkinson's Disease

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 29 February 2020 doi:10.20944/preprints202002.0452.v1
Virtual Reality tremor reduction in Parkinson's disease
John Cornacchioli, Alec Galambos, Stamatina Rentouli, Robert Canciello, Roberta Marongiu, Daniel Cabrera, eMalick G. Njie*
NeuroStorm, Inc, New York City, New York, United States of America
stoPD, Long Island City, New York, United States of America
*Corresponding author at: Dr. eMalick G. Njie, NeuroStorm, Inc, 1412 Broadway, Fl21, New York, NY 10018. Tel.: +1 347 651 1267; [email protected], [email protected]

Abstract: Multidisciplinary neurotechnology holds the promise of understanding and non-invasively treating neurodegenerative diseases. In this preclinical trial on Parkinson's disease (PD), we combined neuroscience with the nascent field of medical virtual reality and generated several important observations. First, we established the Oculus Rift virtual reality system as a potent measurement device for parkinsonian involuntary hand tremors (IHT). Interestingly, we determined that changes in rotation were the most sensitive marker of PD IHT. Secondly, we determined that parkinsonian tremors can be abolished in VR with algorithms that remove tremors from patients' digital hands. We also found that PD patients were interested in and were readily able to use VR hardware and software. Together these data suggest PD patients can enter VR and be asymptomatic of PD IHT. Importantly, VR is an open medium where patients can perform actions, activities, and functions that positively impact their real lives. For instance, one can sign tax return documents in VR and have them printed on real paper or directly e-sign via the internet to government tax agencies.

Virtual Reality Sickness During Immersion: an Investigation of Potential Obstacles Towards General Accessibility of VR Technology

Degree project, 30 credits, August 2016
Virtual Reality sickness during immersion: An investigation of potential obstacles towards general accessibility of VR technology. A controlled study for investigating the accessibility of modern VR hardware and the usability of HTC Vive’s motion controllers. Dongsheng Lu

Abstract: People call 2016 the year of virtual reality. As the world's leading tech giants release their own Virtual Reality (VR) products, VR technology is more available to the mass market than ever. However, the fact that the technology has become cheaper and thereby reaches a mass market does not in itself imply that long-standing usability issues with VR have been addressed. Problems regarding motion sickness (MS) and motion control (MC) have been two of the most important obstacles for VR technology in the past. The main research question of this study is: “Are there persistent universal access issues with VR related to motion control and motion sickness?” In this study a mixed-method approach was used to find more answers related to these two important aspects. A literature review in the area of VR, MS and MC was followed by a quantitative controlled study and a qualitative evaluation. 32 participants were carefully selected for this study. They were divided into different groups, and the quantitative data collected from them were processed and analyzed using statistical tests. An interview was also carried out with all of the participants in order to gather more details about the usability of the motion controllers used in this study.

Off-The-Shelf Stylus: Using XR Devices for Handwriting and Sketching on Physically Aligned Virtual Surfaces

TECHNOLOGY AND CODE published: 04 June 2021 doi: 10.3389/frvir.2021.684498
Off-The-Shelf Stylus: Using XR Devices for Handwriting and Sketching on Physically Aligned Virtual Surfaces
Florian Kern*, Peter Kullmann, Elisabeth Ganal, Kristof Korwisi, René Stingl, Florian Niebling and Marc Erich Latoschik
Human-Computer Interaction (HCI) Group, Informatik, University of Würzburg, Würzburg, Germany
Edited by: Daniel Zielasko, University of Trier, Germany
Reviewed by: Wolfgang Stuerzlinger, Simon Fraser University, Canada; Thammathip Piumsomboon, University of Canterbury, New Zealand
*Correspondence: Florian Kern fl[email protected]

This article introduces the Off-The-Shelf Stylus (OTSS), a framework for 2D interaction (in 3D) as well as for handwriting and sketching with digital pen, ink, and paper on physically aligned virtual surfaces in Virtual, Augmented, and Mixed Reality (VR, AR, MR: XR for short). OTSS supports self-made XR styluses based on consumer-grade six-degrees-of-freedom XR controllers and commercially available styluses. The framework provides separate modules for three basic but vital features: 1) The stylus module provides stylus construction and calibration features. 2) The surface module provides surface calibration and visual feedback features for virtual-physical 2D surface alignment using our so-called 3ViSuAl procedure, and surface interaction features. 3) The evaluation suite provides a comprehensive test bed combining technical measurements for precision, accuracy, and latency with extensive usability evaluations including handwriting and sketching tasks based on established visuomotor, graphomotor, and handwriting research. The framework’s development is accompanied by an extensive open source reference implementation targeting the Unity game engine using an Oculus Rift S headset and Oculus Touch controllers. The development compares three low-cost and low-tech options to equip controllers with a tip and includes a web browser-based surface providing support for interacting, handwriting, and sketching.

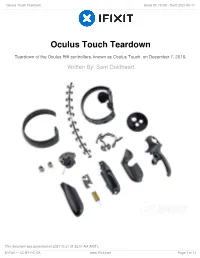

Oculus Touch Teardown Guide ID: 75109 - Draft: 2021-05-17

Oculus Touch Teardown Guide ID: 75109 - Draft: 2021-05-17
Oculus Touch Teardown
Teardown of the Oculus Rift controllers, known as Oculus Touch, on December 7, 2016.
Written By: Sam Goldheart
This document was generated on 2021-05-21 01:32:07 AM (MST). © iFixit - CC BY-NC-SA www.iFixit.com

INTRODUCTION
Oculus finally catches up with the big boys with the release of their ultra-responsive Oculus Touch controllers. Requiring a second IR camera and featuring a whole mess of tactile and capacitive input options, these controllers are bound to be chock full of IR LEDs and tons of exciting tech, but we'll only know for sure if we tear them down! Want to keep in touch? Follow us on Instagram, Twitter, and Facebook for all the latest gadget news.

TOOLS: T5 Torx Screwdriver (1), T6 Torx Screwdriver (1), iOpener (1), Soldering Iron (1), iFixit Opening Tools (1), Spudger (1), Tweezers (1)

Step 1: Oculus Touch Teardown
Before we tear down, we take the opportunity to ogle the Oculus Touch system, which includes: an additional Oculus Sensor; a Rockband adapter; two IR-emitting Touch controllers with finger tracking and a mess of buttons.

Step 2
Using our spectrespecs fancy IR-viewing technology, we get an Oculus Sensor eye's view of the Touch controllers.

Oculus Rift CV1 Teardown Guide ID: 60612 - Draft: 2018-07-21

Oculus Rift CV1 Teardown Guide ID: 60612 - Draft: 2018-07-21
Oculus Rift CV1 Teardown
Teardown of the Oculus Rift CV1 (Consumer Version 1), performed on March 29, 2016.
Written by: Evan Noronha
This document was generated on 2020-11-17 03:55:00 PM (MST). © iFixit - CC BY-NC-SA de.iFixit.com

INTRODUCTION
Since Oculus announced a VR headset four years ago, iFixit has successfully taken apart and reassembled both developer versions. Now we have finally gotten the consumer version for a teardown and can tell you what stayed the same and what changed. Grab your tools and join us at the workbench: we are taking apart the Oculus Rift. If you like it, give us a like on Facebook, Instagram, or Twitter. [video: https://youtu.be/zfZx_jthHM4]

TOOLS: Phillips #1 Screwdriver (1), T3 Torx Screwdriver (1), iFixit Opening Tools (1), Spudger (1)

Step 1: Oculus Rift CV1 Teardown
We have already torn down the DK1 and the DK2 and are now eager to see what the CV1 can do. The specifications so far: two OLED displays with a combined resolution of 2160 x 1200; a refresh rate of 90 Hz; accelerometer, gyroscope, and magnetometer; 360° headset tracking with the Constellation infrared camera; a horizontal field of view of more than 100º. The motion controllers, called Oculus Touch, will be released later in 2016.

Examining Motion Sickness in Virtual Reality

Masaryk University, Faculty of Informatics. Examining Motion Sickness in Virtual Reality. Master’s Thesis. Roman Lukš. Brno, Fall 2017.

This is where a copy of the official signed thesis assignment and a copy of the Statement of an Author is located in the printed version of the document.

Declaration
Hereby I declare that this paper is my original authorial work, which I have worked out on my own. All sources, references, and literature used or excerpted during elaboration of this work are properly cited and listed in complete reference to the due source. Roman Lukš
Advisor: doc. Fotis Liarokapis, PhD.

Acknowledgement
I want to thank the following people who helped me:
∙ Fotis Liarokapis - for guidance
∙ Milan Doležal - for providing discount vouchers
∙ Roman Gluszny & Michal Sedlák - for advice
∙ Adam Qureshi - for advice
∙ Jakub Stejskal - for sharing
∙ people from Škool - for sharing
∙ various VR developers - for sharing their insights with me
∙ all participants - for participation in the experiment
∙ my parents - for supporting me during my studies

Abstract
This thesis evaluates two visual methods and whether they help to alleviate motion sickness. The first method is the presence of a frame of reference (in the form of a cockpit and a radial) and the second method is a visible path (in the form of waypoints in the virtual environment). Four testing groups were formed: one for each individual method, one combining both methods, and one control group. Each group consisted of 15 subjects. It was a passive seated experience and the Oculus Rift CV1 was used.

Hand Interface Using Deep Learning in Immersive Virtual Reality

electronics, Article
DeepHandsVR: Hand Interface Using Deep Learning in Immersive Virtual Reality
Taeseok Kang 1, Minsu Chae 1, Eunbin Seo 1, Mingyu Kim 2 and Jinmo Kim 1,*
1 Division of Computer Engineering, Hansung University, Seoul 02876, Korea; [email protected] (T.K.); [email protected] (M.C.); [email protected] (E.S.)
2 Program in Visual Information Processing, Korea University, Seoul 02841, Korea; [email protected]
* Correspondence: [email protected]; Tel.: +82-2-760-4046
Received: 28 September 2020; Accepted: 4 November 2020; Published: 6 November 2020

Abstract: This paper proposes a novel deep-learning-based hand interface that provides easy and realistic interactions with hands in immersive virtual reality. The proposed interface is designed to provide a real-to-virtual direct hand interface that uses a controller to map a real hand gesture to a virtual hand in an easy and simple structure. In addition, a gesture-to-action interface is proposed that expresses the process from gesture to action in real time without the graphical user interface (GUI) used in existing interactive applications. This interface captures a 3D virtual hand gesture model as a 2D image and applies an image classification training process using a deep learning model, a convolutional neural network (CNN). The key objective of this process is to provide users with intuitive and realistic interactions that feature convenient operation in immersive virtual reality. To achieve this, an application that can compare and analyze the proposed interface and the existing GUI was developed. Next, a survey experiment was conducted to statistically analyze and evaluate the positive effects on the sense of presence through user satisfaction with the interface experience.