Controlling VR Games: Control Schemes and the Player Experience



Recommended publications


Download the User Manual and Keymander PC Software from Package Contents 1

Quick Start Guide KeyMander - Keyboard & Mouse Adapter for Game Consoles GE1337P PART NO. Q1257-d This Quick Start Guide is intended to cover basic setup and key functions to get you up and running quickly. For a complete explanation of features and full access to all KeyMander functions, please download the User Manual and KeyMander PC Software from www.IOGEAR.com/product/GE1337P Package Contents: 1 x GE1337P KeyMander Controller Emulator; 2 x USB A to USB Mini B Cables; 1 x 3.5mm Data Cable (reserved for future use); 1 x Quick Start Guide; 1 x Warranty Card. System Requirements Hardware Game Consoles: • PlayStation® 4 • PlayStation® 3 • Xbox® One S • Xbox® One • Xbox® 360 Console Controller: • PlayStation® 4 Controller • PlayStation® 3 Dual Shock 3 Controller (REQUIRED)* • Xbox® 360 Wired Controller (REQUIRED)* • Xbox® One Controller with Micro USB cable (REQUIRED)* • USB keyboard & USB mouse** Computer with available USB 2.0 port OS • Windows Vista®, Windows® 7, Windows® 8 and Windows® 8.1 *Some aftermarket wired controllers do not function correctly with KeyMander. It is strongly recommended to use official PlayStation/Xbox wired controllers. **Compatible with select wireless keyboard/mouse devices. Overview 1. Gamepad port 2. Keyboard port 3. Mouse port 4. Turbo/Keyboard Mode LED Indicator: a. Lights solid ORANGE when Turbo Mode is ON b. Flashes ORANGE when Keyboard Mode is ON 5. Setting LED indicator: a. Lights solid BLUE when PC port is connected to a computer. b. Flashes (Fast) BLUE when uploading a profile from a computer to the KeyMander. -

Video Games: 3DUIs for the Masses

Video Games: 3DUIs for the Masses Joseph J. LaViola Jr. Ivan Poupyrev Welcome, Introduction, & Roadmap; 3DUIs 101; 3DUIs 201; User Studies and 3DUIs; Guidelines for Developing 3DUIs; Video Games: 3DUIs for the Masses; The Wii Remote and You; 3DUI and the Physical Environment; Beyond Visual: Shape, Haptics and Actuation in 3DUI; Conclusion CHI 2009 Course Notes - LaViola | Kruijff | Bowman | Poupyrev | Stuerzlinger 3DUI and Video Games: Why? • Video games • multi-billion dollar industry: $18.8 billion in 2007 • major driving force in home entertainment: average gamer today is 33 years old • advanced 3D graphics in the HOME rather than universities or movie studios • Driving force in technological innovation • graphics algorithms and hardware, sound, AI, etc. • technological transfer to healthcare, biomedical research, defence, education (example: Folding@Home) • Recent innovations in 3D user interfaces • graphics is not enough anymore • complex spatial, 3D user interfaces are coming to the home (example: Nintendo Wii) • Why 3D user interfaces for games? • natural motion and gestures • reduce complexity • more immersive and engaging • Research in 3D UI for games is exciting • will transfer 3DUI to other practical applications, e.g. education and medicine - Video game industry: $10.5 billion in the US in 2005, $25.4 billion worldwide; - Not for kids anymore: average player is 33 years old, the most frequent game buyer is 40 years old; - Technological transfer and strong impact on other areas of technology: The poster on this slide (www.allposters.com) demonstrates a very common misconception. In fact it's completely the opposite: the rapid innovation in games software and hardware allows for economical and practical applications of 3D computer graphics in healthcare, biomedical research, education and other critical areas. -

English Sharkps3 Manual.Pdf

FRAGFX SHARK CLASSIC - WIRELESS CONTROLLER ENGLISH HAPPY FRAGGING! Welcome and thank you for purchasing your new FragFX SHARK Classic for the Sony Playstation 3, PC and MAC. The FragFX SHARK classic is specifically designed for the Sony PlayStation 3, PC / MAC and compatible with most games, however you may find it's best suited to shooting, action and sport games. To use your FragFX SHARK classic, you are expected to have a working Sony PlayStation 3 console system. For more information visit our web site at www.splitfish.com. Please read the entire instruction - you get most out of your FragFX SHARK classic. Happy Fragging! GET YOUR DONGLE READY Select Platform Switch Position the Dongle PS3 - 'Gamepad mode' for function as a game controller - 'Keyboard Mode' for chat and browser only
REPAIR CONNECTION BETWEEN DONGLE AND MOUSE/CHUCK Should your dongle light up, but not connect to either mouse or chuck or both, the unit needs unpairing and pairing. This process should only have to be done once. 1) unpair the mouse (switch OFF the Chuck): - Switch on the mouse - Press R1, R2, mousewheel, start, G, A at the same time - Switch the mouse off, and on again. The blue LED should now be blinking - The mouse is now unpaired, and ready to be paired again 2) pair the mouse - (Switch on the mouse) - Insert the dongle into the PC or PS3 - Hold the mouse close (~10cm/~4inch) to the dongle, and press either F, R, A or G button - The LED on the mouse should dim out and the green LED on the dongle should light - If not, repeat the procedure 3) unpair the chuck (switch OFF the mouse): - Switch on the chuck - Press F, L1, L2, select, FX, L3(press stick) at the same time - Switch the chuck off, and on again. -

Q4-15 Earnings

January 27th, 2016 Facebook Q4-15 and Full Year 2015 Earnings Conference Call Operator: Good afternoon. My name is Chris and I will be your conference operator today. At this time I would like to welcome everyone to the Facebook Fourth Quarter and Full Year 2015 Earnings Call. All lines have been placed on mute to prevent any background noise. After the speakers' remarks, there will be a question and answer session. If you would like to ask a question during that time, please press star then the number 1 on your telephone keypad. This call will be recorded. Thank you very much. Deborah Crawford, VP Investor Relations: Thank you. Good afternoon and welcome to Facebook’s fourth quarter and full year 2015 earnings conference call. Joining me today to discuss our results are Mark Zuckerberg, CEO; Sheryl Sandberg, COO; and Dave Wehner, CFO. Before we get started, I would like to take this opportunity to remind you that our remarks today will include forward-looking statements. Actual results may differ materially from those contemplated by these forward-looking statements. Factors that could cause these results to differ materially are set forth in today’s press release, our annual report on form 10-K and our most recent quarterly report on form 10-Q filed with the SEC. Any forward-looking statements that we make on this call are based on assumptions as of today and we undertake no obligation to update these statements as a result of new information or future events. During this call we may present both GAAP and non-GAAP financial measures. -

Virtual Reality Tremor Reduction in Parkinson's Disease

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 29 February 2020 doi:10.20944/preprints202002.0452.v1 Virtual Reality tremor reduction in Parkinson's disease John Cornacchioli, Alec Galambos, Stamatina Rentouli, Robert Canciello, Roberta Marongiu, Daniel Cabrera, eMalick G. Njie* NeuroStorm, Inc, New York City, New York, United States of America stoPD, Long Island City, New York, United States of America *Corresponding author at: Dr. eMalick G. Njie, NeuroStorm, Inc, 1412 Broadway, Fl21, New York, NY 10018. Tel.: +1 347 651 1267; [email protected], [email protected] Abstract: Multidisciplinary neurotechnology holds the promise of understanding and non-invasively treating neurodegenerative diseases. In this preclinical trial on Parkinson's disease (PD), we combined neuroscience together with the nascent field of medical virtual reality and generated several important observations. First, we established the Oculus Rift virtual reality system as a potent measurement device for parkinsonian involuntary hand tremors (IHT). Interestingly, we determined changes in rotation were the most sensitive marker of PD IHT. Secondly, we determined parkinsonian tremors can be abolished in VR with algorithms that remove tremors from patients' digital hands. We also found that PD patients were interested in and were readily able to use VR hardware and software. Together these data suggest PD patients can enter VR and be asymptotic of PD IHT. Importantly, VR is an open-medium where patients can perform actions, activities, and functions that positively impact their real lives - for instance, one can sign tax return documents in VR and have them printed on real paper or directly e-sign via internet to government tax agencies. -

Arcade Guns User Manual

User Manual (v2.5) Table of Contents: Recommended Links; Supported Operating Systems; Quick Start Guide; Default Light Gun Settings; Positioning the IR Sensor Bar; Light Gun Calibration; MAME (Multiple Arcade Machine Emulator) Setup; PlayStation 2 Console Games Setup. Congratulations on your new Arcade Guns™ light guns purchase! We know you will enjoy them as much as we do! Recommended Links Arcade Guns™ User Manual © Copyright 2019. Harbo Entertainment LLC. All rights reserved. Arcade Guns Home Page http://www.arcadeguns.com Arcade Guns Pro Utility Software (Windows XP, Vista, -

Video Game Control Dimensionality Analysis

http://www.diva-portal.org Postprint This is the accepted version of a paper presented at IE2014, 2-3 December 2014, Newcastle, Australia. Citation for the original published paper: Mustaquim, M., Nyström, T. (2014) Video Game Control Dimensionality Analysis. In: Blackmore, K., Nesbitt, K., and Smith, S.P. (ed.), Proceedings of the 2014 Conference on Interactive Entertainment (IE2014) New York: Association for Computing Machinery (ACM) http://dx.doi.org/10.1145/2677758.2677784 N.B. When citing this work, cite the original published paper. Permanent link to this version: http://urn.kb.se/resolve?urn=urn:nbn:se:uu:diva-234183 Video Game Control Dimensionality Analysis Moyen M. Mustaquim, Uppsala University, Uppsala, Sweden, +46 (0) 70 333 51 46, [email protected]; Tobias Nyström, Uppsala University, Uppsala, Sweden, +46 18 471 51 49, [email protected] ABSTRACT In this paper we have studied the video games control dimensionality and its effects on the traditional way of interpreting difficulty and familiarity in games. This paper presents the findings in which we have studied the Xbox 360 console’s games control dimensionality. Multivariate statistical operations were performed on the collected data from 83 different games of Xbox 360.
notice that very few studies have concretely examined the effect of game controllers on game enjoyment [25]. A successfully designed controller can contribute in identifying different player experiences by defining various types of games that have been effortlessly played with a controller because of the controller’s design [18]. One example is the Microsoft Xbox controller that became a favorite among players when playing “first person- -

Off-The-Shelf Stylus: Using XR Devices for Handwriting and Sketching on Physically Aligned Virtual Surfaces

TECHNOLOGY AND CODE published: 04 June 2021 doi: 10.3389/frvir.2021.684498 Off-The-Shelf Stylus: Using XR Devices for Handwriting and Sketching on Physically Aligned Virtual Surfaces Florian Kern*, Peter Kullmann, Elisabeth Ganal, Kristof Korwisi, René Stingl, Florian Niebling and Marc Erich Latoschik Human-Computer Interaction (HCI) Group, Informatik, University of Würzburg, Würzburg, Germany This article introduces the Off-The-Shelf Stylus (OTSS), a framework for 2D interaction (in 3D) as well as for handwriting and sketching with digital pen, ink, and paper on physically aligned virtual surfaces in Virtual, Augmented, and Mixed Reality (VR, AR, MR: XR for short). OTSS supports self-made XR styluses based on consumer-grade six-degrees-of-freedom XR controllers and commercially available styluses. The framework provides separate modules for three basic but vital features: 1) The stylus module provides stylus construction and calibration features. 2) The surface module provides surface calibration and visual feedback features for virtual-physical 2D surface alignment using our so-called 3ViSuAl procedure, and surface interaction features. 3) The evaluation suite provides a comprehensive test bed combining technical measurements for precision, accuracy, and latency with extensive usability evaluations including handwriting and sketching tasks based on established visuomotor, graphomotor, and handwriting research. The framework’s development is accompanied by an extensive open source reference implementation targeting the Unity game engine using an Oculus Rift S headset and Oculus Touch controllers. The development compares three low-cost and low-tech options to equip controllers with a tip and includes a web browser-based surface providing support for interacting, handwriting, and sketching.
Edited by: Daniel Zielasko, University of Trier, Germany. Reviewed by: Wolfgang Stuerzlinger, Simon Fraser University, Canada; Thammathip Piumsomboon, University of Canterbury, New Zealand. *Correspondence: Florian Kern fl[email protected] -

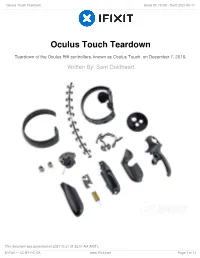

Oculus Touch Teardown Guide ID: 75109 - Draft: 2021-05-17

Oculus Touch Teardown Guide ID: 75109 - Draft: 2021-05-17 Oculus Touch Teardown Teardown of the Oculus Rift controllers, known as Oculus Touch, on December 7, 2016. Written By: Sam Goldheart This document was generated on 2021-05-21 01:32:07 AM (MST). © iFixit - CC BY-NC-SA www.iFixit.com INTRODUCTION Oculus finally catches up with the big boys with the release of their ultra-responsive Oculus Touch controllers. Requiring a second IR camera and featuring a whole mess of tactile and capacitive input options, these controllers are bound to be chock full of IR LEDs and tons of exciting tech, but we'll only know for sure if we tear them down! Want to keep in touch? Follow us on Instagram, Twitter, and Facebook for all the latest gadget news. TOOLS: T5 Torx Screwdriver (1), T6 Torx Screwdriver (1), iOpener (1), Soldering Iron (1), iFixit Opening Tools (1), Spudger (1), Tweezers (1) Step 1: Oculus Touch Teardown Before we tear down, we take the opportunity to ogle the Oculus Touch system, which includes: an additional Oculus Sensor; a Rockband adapter; two IR-emitting Touch controllers with finger tracking and a mess of buttons. Step 2 Using our spectrespecs fancy IR-viewing technology, we get an Oculus Sensor eye's view of the Touch controllers. -

Manual English.Pdf

Chapter 1 GETTING STARTED TABLE OF CONTENTS Chapter 1: Getting Started; Chapter 2: Interactions & Classes; Chapter 3: School Supplies; Chapter 4: Bullworth Society; Chapter 5: Credits. Operating Systems: Windows XP, Windows Vista. Minimum System Requirements Memory: 1 GB RAM; 4.7 GB of hard drive disc space. Processor: Intel Pentium 4 (3+ GHZ), AMD Athlon 3000+. Video card: DirectX 9.0c Shader 3.0 supported, Nvidia 6800 or 7300 or better, ATI Radeon X1300 or better. Sound card: DX9-compatible. Installation You must have Administrator privileges to install and play Bully: Scholarship Edition. If you are unsure about how to achieve this, please consult your Windows system manual. Insert the Bully: Scholarship Edition DVD into your DVD-ROM Drive. If AutoPlay is enabled, the Launch Menu will appear; otherwise use Explorer to browse the disc, and launch. Select the INSTALL option to run the installer. Agree to the license Agreement. Choose the install location: by default we use “C:\Program Files\Rockstar Games\Bully Scholarship Edition”. BULLY: SCHOLARSHIP EDITION CHAPTER 1: GETTING STARTED Installation continued Once Installation has finished, you will be returned to the Launch Menu. If you do not have DirectX 9.0c installed on your PC, then we suggest launching DIRECTX INSTALL from the Launch Menu. CONTROLS Controls: Standard Show Secondary Tasks: Arrow Left
ABOUT THIS BOOK Since publishing the first edition there have been some exciting new developments. This second edition has been updated to reflect those changes. You will also find some additional material has been added. Entire chapters have been rewritten and some have simply been deleted to streamline your learning experience. Enjoy! -

The Trackball Controller: Improving the Analog Stick

The Trackball Controller: Improving the Analog Stick Daniel Natapov, I. Scott MacKenzie Department of Computer Science and Engineering York University, Toronto, Canada {dnatapov, mack}@cse.yorku.ca ABSTRACT Two groups of participants (novice and advanced) completed a study comparing a prototype game controller to a standard game controller for point-select tasks. The prototype game controller replaces the right analog stick of a standard game controller (used for pointing and camera control) with a trackball. We used Fitts’ law as per ISO 9241-9 to evaluate the pointing performance of both controllers. In the novice group, the trackball controller’s throughput was 2.69 bps, 60.1% higher than the 1.68 bps observed for the standard controller. In the advanced group the trackball controller’s throughput was 3.19 bps, 58.7% higher than the 2.01 bps observed for the standard controller. Although the trackball controller performed better in terms of throughput, pointer path was more direct with the standard controller.
number of inputs was sufficient. Despite many future additions and improvements, the D-Pad persists on all standard controllers for all consoles introduced after the NES. Shortcomings of the D-Pad became apparent with the introduction of 3D games. The Sony PlayStation and the Sega Saturn, introduced in 1995, supported 3D environments and third-person perspectives. The controllers for those consoles, which used D-Pads, were not well suited for 3D, since navigation was difficult. The main issue was that game characters could only move in eight directions using the D-Pad. To overcome this, some games, such as Resident Evil, used the forward and back directions of the D-Pad to move the character, and the left and right directions for -

Product Guide Version 1.1

Product Guide Version 1.1 Contents: 1. Introduction; 2. Controls and Indicators; 3. Setup and Operation; 4. Mad Catz L.Y.N.X.3 App for Windows and Android; 5. Product Questions. 1. Introduction The L.Y.N.X.3 takes the award-winning design and functionality of the L.Y.N.X.9 and delivers a product for the mobile masses. This is a controller, designed to live with you, and work on as many devices as possible, whether at home, or on-the-go. The product contains all the controls needed for a core gaming experience on mobile phones and tablets running Android. For mobile gaming, the removable phone clip is ideal for grabbing some game time wherever you are. Whether you are on Android, or PC, gamers also have the freedom to tweak the response profiles of the sticks and triggers to suit their game. This is achieved via our free apps for both Android and Windows. L.Y.N.X.3 is not just about gaming. We understand that a customer’s smart device has become the centre of their world in terms of accessing social media and music, films and TV. The controller has two modes of operation. One for Gaming, the other for Media. When in Media mode the user can access full mouse cursor control with the left stick, and a host of media and mouse buttons for quick, intuitive control of your Android set-top-box or Windows Media PC. 
As well as Android-based phones and tablets, this controller can also function as an additional controller on the M.O.J.O.