A Row in Two Dimensional Database Is a Schema

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Beyond Relational Databases

EXPERT ANALYSIS BY MARCOS ALBE, SUPPORT ENGINEER, PERCONA
Beyond Relational Databases: A Focus on Redis, MongoDB, and ClickHouse
Many of us use and love relational databases… until we try and use them for purposes which aren’t their strong point. Queues, caches, catalogs, unstructured data, counters, and many other use cases, can be solved with relational databases, but are better served by alternative options. In this expert analysis, we examine the goals, pros and cons, and the good and bad use cases of the most popular alternatives on the market, and look into some modern open source implementations.
Beyond Relational Databases
Developers frequently choose the backend store for the applications they produce. Amidst dozens of options, buzzwords, industry preferences, and vendor offers, it’s not always easy to make the right choice… Even with a map!
[Figure: map of data platform products; labels not recoverable from the extraction.]

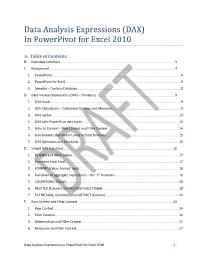

Data Analysis Expressions (DAX) in Powerpivot for Excel 2010

Data Analysis Expressions (DAX) In PowerPivot for Excel 2010
A. Table of Contents
B. Executive Summary
C. Background
   1. PowerPivot
   2. PowerPivot for Excel
   3. Samples – Contoso Database
D. Data Analysis Expressions (DAX) – The Basics
   1. DAX Goals
   2. DAX Calculations - Calculated Columns and Measures
   3. DAX Syntax
   4. DAX uses PowerPivot data types

Powerdesigner 16.6 Data Modeling

SAP® PowerDesigner® Document Version: 16.6 – 2016-02-22
Data Modeling
Content
1 Building Data Models
1.1 Getting Started with Data Modeling
    Conceptual Data Models
    Logical Data Models
    Physical Data Models
    Creating a Data Model
    Customizing your Modeling Environment
1.2 Conceptual and Logical Diagrams
    Supported CDM/LDM Notations
    Conceptual Diagrams
    Logical Diagrams
    Data Items (CDM)
    Entities (CDM/LDM)
    Attributes (CDM/LDM)
    Identifiers (CDM/LDM)
    Relationships (CDM/LDM)
    Associations and Association Links (CDM)
    Inheritances (CDM/LDM)
1.3 Physical Diagrams

Denormalization Strategies for Data Retrieval from Data Warehouses

Decision Support Systems 42 (2006) 267–282 www.elsevier.com/locate/dsw
Denormalization strategies for data retrieval from data warehouses
Seung Kyoon Shin a,*, G. Lawrence Sanders b,1
a College of Business Administration, University of Rhode Island, 7 Lippitt Road, Kingston, RI 02881-0802, United States
b Department of Management Science and Systems, School of Management, State University of New York at Buffalo, Buffalo, NY 14260-4000, United States
Available online 20 January 2005
Abstract
In this study, the effects of denormalization on relational database system performance are discussed in the context of using denormalization strategies as a database design methodology for data warehouses. Four prevalent denormalization strategies have been identified and examined under various scenarios to illustrate the conditions where they are most effective. The relational algebra, query trees, and join cost function are used to examine the effect on the performance of relational systems. The guidelines and analysis provided are sufficiently general and they can be applicable to a variety of databases, in particular to data warehouse implementations, for decision support systems. © 2004 Elsevier B.V. All rights reserved.
Keywords: Database design; Denormalization; Decision support systems; Data warehouse; Data mining
1. Introduction
With the increased availability of data collected from the Internet and other sources and the implementation of enterprise-wise data warehouses, the amount of data that companies possess is growing at a phenomenal rate.
… houses as issues related to database design for high performance are receiving more attention. Database design is still an art that relies heavily on human intuition and experience. Consequently, its practice is becoming more difficult as the applications that databases support become more sophisticated [32]. Cur-

A Comprehensive Analysis of Sybase Powerdesigner 16.0

White paper
A Comprehensive Analysis of Sybase® PowerDesigner® 16.0 InformationArchitect vs. ER/Studio XE2
Version 2.0
www.sybase.com
Table of Contents
Introduction
Product Overviews
ER/Studio XE2
Sybase PowerDesigner 16.0
Data Modeling Activities
Overview
Types of Data Model
Design Layers
Managing the SAM-LDM Relationship
Forward and Reverse Engineering
Round-trip Engineering
Integrating Data Models with Requirements and Processes
Generating Object-oriented Models
Dependency Analysis
Model Comparisons and Merges
Update Flows
Required Features for a Data Modeling Tool
Core Modeling
Collaboration
Interfaces & Integration
Usability
Managing Models as a Project
Dependency Matrices
Conclusions
Acknowledgements
Bibliography
About the Author
Introduction
Data modeling is more than just database design, because data doesn’t just exist in databases. Data does not exist in isolation; it is created, managed and consumed by business processes, and those business processes are implemented using a variety of applications and technologies. To truly understand and manage our data, and the impact of changes to that data, we need to manage more than just models of data in databases. We need support for different types of data models, and for managing the relationships between data and the rest of the organization. When you need to manage a data center across the enterprise, integrating with a wider set of business and technology activities is critical to success. For this reason, this review will use the InformationArchitect version of Sybase PowerDesigner rather than their DataArchitect™ version.

Rdbmss Why Use an RDBMS

RDBMSs
• Relational Database Management Systems
• A way of saving and accessing data on persistent (disk) storage.
51 - RDBMS CSC309
Why Use an RDBMS
• Data Safety – data is immune to program crashes
• Concurrent Access – atomic updates via transactions
• Fault Tolerance – replicated dbs for instant failover on machine/disk crashes
• Data Integrity – aids to keep data meaningful
• Scalability – can handle small/large quantities of data in a uniform manner
• Reporting – easy to write SQL programs to generate arbitrary reports
Relational Model
• First published by E.F. Codd in 1970
• A relational database consists of a collection of tables
• A table consists of rows and columns
• each row represents a record
• each column represents an attribute of the records contained in the table
RDBMS Technology
• Client/Server Databases – Oracle, Sybase, MySQL, SQLServer
• Personal Databases – Access
• Embedded Databases – Pointbase
Client/Server Databases
[Diagram: client processes, tcp/ip connections, server process, disk i/o]
Inside the Client Process
[Diagram: client, application code, API, db library, tcp/ip connection to server]
Pointbase
[Diagram: client, application code, API, Pointbase lib., local file system]
Microsoft Access
[Diagram: Access app, Microsoft JET SQL DLL, local file system]
APIs to RDBMSs
• All are very similar
• A collection of routines designed to
  – produce and send to the db engine an SQL statement
    • an original
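The excerpt above describes an RDBMS API as a set of routines that produce an SQL statement and send it to the database engine. A minimal sketch of that pattern follows, using Python's standard `sqlite3` module as a stand-in for an embedded database. The `orders` table, its columns, and the functions `run_report` and `demo` are hypothetical names chosen for illustration and do not come from the slides.

```python
import sqlite3


def run_report(conn, min_total):
    """Build an SQL statement, send it to the engine, and fetch the rows."""
    sql = (
        "SELECT customer, SUM(amount) "
        "FROM orders "
        "GROUP BY customer "
        "HAVING SUM(amount) >= ? "
        "ORDER BY customer"
    )
    # The '?' placeholder lets the library bind the value safely.
    cursor = conn.execute(sql, (min_total,))
    return cursor.fetchall()


def demo():
    # An in-memory database keeps the example self-contained.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
    # 'with conn' wraps the inserts in one transaction:
    # commit on success, rollback if an exception is raised.
    with conn:
        conn.executemany(
            "INSERT INTO orders VALUES (?, ?)",
            [("ann", 10.0), ("bob", 5.0), ("ann", 7.5)],
        )
    rows = run_report(conn, 6.0)
    conn.close()
    return rows
```

Calling `demo()` returns only the customers whose order total reaches the threshold. The same connect, execute, fetch shape appears in most client libraries, although the exact calls differ per product.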

Database Normalization

Database Management Systems
Database Normalization
Malay Bhattacharyya, Assistant Professor, Machine Intelligence Unit and Centre for Artificial Intelligence and Machine Learning, Indian Statistical Institute, Kolkata
February, 2020
Outline
1 Data Redundancy
2 Normalization and Denormalization
3 Normal Forms: First Normal Form, Second Normal Form, Third Normal Form, Boyce-Codd Normal Form, Elementary Key Normal Form, Fourth Normal Form, Fifth Normal Form, Domain Key Normal Form, Sixth Normal Form
Redundancy in databases
Redundancy in a database denotes the repetition of stored data.
Redundancy might cause various anomalies and problems pertaining to storage requirements:
- Insertion anomalies: It may be impossible to store certain information without storing some other, unrelated information.
- Deletion anomalies: It may be impossible to delete certain information without losing some other, unrelated information.
- Update anomalies: If one copy of such repeated data is updated, all copies need to be updated to prevent inconsistency.
- Increasing storage requirements: The storage requirements may increase over time.
These issues can be addressed by decomposing the database – normalization forces this!!!
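The update anomaly described in this excerpt can be reproduced in a few lines. The sketch below uses Python's standard `sqlite3` module. The tables `emp_flat`, `emp`, and `dept`, their columns, and the sample phone numbers are all invented for illustration and are not taken from the slides.

```python
import sqlite3


def demo_update_anomaly():
    """Return the number of distinct phone numbers seen for one department,
    first in a redundant table, then after decomposition."""
    conn = sqlite3.connect(":memory:")

    # Redundant design: the department phone is repeated on every employee row.
    conn.execute("CREATE TABLE emp_flat (emp TEXT, dept TEXT, dept_phone TEXT)")
    conn.executemany(
        "INSERT INTO emp_flat VALUES (?, ?, ?)",
        [("ann", "sales", "555-0100"), ("bob", "sales", "555-0100")],
    )
    # Updating only one copy leaves the table inconsistent.
    conn.execute("UPDATE emp_flat SET dept_phone = '555-0199' WHERE emp = 'ann'")
    flat_phones = conn.execute(
        "SELECT COUNT(DISTINCT dept_phone) FROM emp_flat WHERE dept = 'sales'"
    ).fetchone()[0]

    # Decomposed design: the phone is stored once, keyed by department.
    conn.execute("CREATE TABLE dept (dept TEXT PRIMARY KEY, dept_phone TEXT)")
    conn.execute("CREATE TABLE emp (emp TEXT PRIMARY KEY, dept TEXT)")
    conn.execute("INSERT INTO dept VALUES ('sales', '555-0100')")
    conn.executemany(
        "INSERT INTO emp VALUES (?, ?)", [("ann", "sales"), ("bob", "sales")]
    )
    # A single-row update now changes the phone for every employee.
    conn.execute("UPDATE dept SET dept_phone = '555-0199' WHERE dept = 'sales'")
    norm_phones = conn.execute(
        "SELECT COUNT(DISTINCT d.dept_phone) "
        "FROM emp e JOIN dept d ON e.dept = d.dept"
    ).fetchone()[0]

    conn.close()
    return flat_phones, norm_phones
```

In the redundant table the department ends up with two different phone numbers after a partial update, while the decomposed tables always report one. The cost is the join needed to read an employee together with the department phone.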

Microsoft Power BI DAX

Course Outline
Microsoft Power BI DAX
Duration: 1 day
This course covers how to use the Data Analysis Expressions (DAX) language to perform powerful data manipulations within Power BI Desktop. On our Microsoft Power BI course, you will learn how easy it is to import data into the Power BI Desktop and create powerful and dynamic visuals that bring your data to life. But what if you need to create your own calculated columns and measures, to have complete control over the calculations you need to perform on your data? DAX formulas provide this capability and many other important capabilities as well. Learning how to create effective DAX formulas will help you get the most out of your data. When you get the information you need, you can begin to solve real business problems that affect your bottom line. This is Business Intelligence, and DAX will help you get there.
To get the most out of this course
You should be a competent Microsoft Excel user. You don’t need any experience of using DAX, but we recommend that you attend our 2-day Microsoft Power BI course prior to taking this course.
What you will learn:
Importing Your Data and Creating the Data Model
- Overview of importing data into the Power BI Desktop and creating the Data Model.
Using DAX
- Syntax used by DAX.
- Understanding DAX Data Types.
Creating Calculated Columns
- How to use DAX expressions in Calculated Columns.
CALCULATE Function
- Exploring the importance of the CALCULATE function.
- Using complex filters within CALCULATE using FILTER.
- Using ALLSELECTED Function.
Time Intelligence Functions
- Why Time Intelligence Functions?
- Creating a Date Table.
- Finding Month to Date, Year To Date, Previous Month

Benchmarking Distributed Data Warehouse Solutions for Storing Genomic Variant Information

Research Collection
Journal Article
Benchmarking distributed data warehouse solutions for storing genomic variant information
Author(s): Wiewiórka, Marek S.; Wysakowicz, David P.; Okoniewski, Michał J.; Gambin, Tomasz
Publication Date: 2017-07-11
Permanent Link: https://doi.org/10.3929/ethz-b-000237893
Originally published in: Database 2017, http://doi.org/10.1093/database/bax049
Rights / License: Creative Commons Attribution 4.0 International
This page was generated automatically upon download from the ETH Zurich Research Collection. For more information please consult the Terms of use. ETH Library
Database, 2017, 1–16 doi: 10.1093/database/bax049
Original article
Benchmarking distributed data warehouse solutions for storing genomic variant information
Marek S. Wiewiorka 1, Dawid P. Wysakowicz 1, Michał J. Okoniewski 2 and Tomasz Gambin 1,3,*
1 Institute of Computer Science, Warsaw University of Technology, Nowowiejska 15/19, Warsaw 00-665, Poland
2 Scientific IT Services, ETH Zurich, Weinbergstrasse 11, Zurich 8092, Switzerland
3 Department of Medical Genetics, Institute of Mother and Child, Kasprzaka 17a, Warsaw 01-211, Poland
*Corresponding author: Tel.: +48693175804; Fax: +48222346091; Email: [email protected]
Citation details: Wiewiorka,M.S., Wysakowicz,D.P., Okoniewski,M.J. et al. Benchmarking distributed data warehouse solutions for storing genomic variant information. Database (2017) Vol. 2017: article ID bax049; doi:10.1093/database/bax049
Received 15 September 2016; Revised 4 April 2017; Accepted 29 May 2017
Abstract
Genomic-based personalized medicine encompasses storing, analysing and interpreting genomic variants as its central issues. At a time when thousands of patients' sequenced exomes and genomes are becoming available, there is a growing need for efficient database storage and querying.

Building an Effective Data Warehousing for Financial Sector

Automatic Control and Information Sciences, 2017, Vol. 3, No. 1, 16-25
Available online at http://pubs.sciepub.com/acis/3/1/4
©Science and Education Publishing DOI:10.12691/acis-3-1-4
Building an Effective Data Warehousing for Financial Sector
José Ferreira 1, Fernando Almeida 2, José Monteiro 1,*
1 Higher Polytechnic Institute of Gaya, V.N.Gaia, Portugal
2 Faculty of Engineering of Oporto University, INESC TEC, Porto, Portugal
*Corresponding author: [email protected]
Abstract
This article presents the implementation process of a Data Warehouse and a multidimensional analysis of business data for a holding company in the financial sector. The goal is to create a business intelligence system that, in a simple, quick but also versatile way, allows the access to updated, aggregated, real and/or projected information, regarding bank account balances. The established system extracts and processes the operational database information which supports cash management information by using Integration Services and Analysis Services tools from Microsoft SQL Server. The end-user interface is a pivot table, properly arranged to explore the information available by the produced cube. The results have shown that the adoption of online analytical processing cubes offers better performance and provides a more automated and robust process to analyze current and provisional aggregated financial data balances compared to the current process based on static reports built from transactional databases.
Keywords: data warehouse, OLAP cube, data analysis, information system, business intelligence, pivot tables
Cite This Article: José Ferreira, Fernando Almeida, and José Monteiro, “Building an Effective Data Warehousing for Financial Sector.” Automatic Control and Information Sciences, vol.

Normalization for Relational Databases

Chapter 10
Functional Dependencies and Normalization for Relational Databases
Copyright © 2007 Ramez Elmasri and Shamkant B. Navathe
Chapter Outline
1. Informal Design Guidelines for Relational Databases
   1.1 Semantics of the Relation Attributes
   1.2 Redundant Information in Tuples and Update Anomalies
   1.3 Null Values in Tuples
   1.4 Spurious Tuples
2. Functional Dependencies (skip)
3. Normal Forms Based on Primary Keys
   3.1 Normalization of Relations
   3.2 Practical Use of Normal Forms
   3.3 Definitions of Keys and Attributes Participating in Keys
   3.4 First Normal Form
   3.5 Second Normal Form
   3.6 Third Normal Form
4. General Normal Form Definitions (For Multiple Keys)
5. BCNF (Boyce-Codd Normal Form)
Informal Design Guidelines for Relational Databases (2)
- We first discuss informal guidelines for good relational design
- Then we discuss formal concepts of functional dependencies and normal forms
  - 1NF (First Normal Form)
  - 2NF (Second Normal Form)
  - 3NF (Third Normal Form)
  - BCNF (Boyce-Codd Normal Form)
- Additional types of dependencies, further normal forms, relational design algorithms by synthesis are discussed in Chapter 11
Informal Design Guidelines for Relational Databases (1)
- What is relational database design?
  - The grouping of attributes to form "good" relation schemas
- Two levels of relation schemas
  - The logical "user view" level
  - The storage "base relation" level
- Design is concerned mainly with base relations
- What are the criteria for "good" base relations?

Column-Stores Vs. Row-Stores: How Different Are They Really?

Column-Stores vs. Row-Stores: How Different Are They Really?
Daniel J. Abadi, Yale University, New Haven, CT, USA, [email protected]
Samuel R. Madden, MIT, Cambridge, MA, USA, [email protected]
Nabil Hachem, AvantGarde Consulting, LLC, Shrewsbury, MA, USA, [email protected]
ABSTRACT
There has been a significant amount of excitement and recent work on column-oriented database systems (“column-stores”). These database systems have been shown to perform more than an order of magnitude better than traditional row-oriented database systems (“row-stores”) on analytical workloads such as those found in data warehouses, decision support, and business intelligence applications. The elevator pitch behind this performance difference is straightforward: column-stores are more I/O efficient for read-only queries since they only have to read from disk (or from memory) those attributes accessed by a query.
This simplistic view leads to the assumption that one can obtain the performance benefits of a column-store using a row-store: either by vertically partitioning the schema, or by indexing every column so that columns can be accessed independently. In this pa-
General Terms
Experimentation, Performance, Measurement
Keywords
C-Store, column-store, column-oriented DBMS, invisible join, compression, tuple reconstruction, tuple materialization.
1. INTRODUCTION
Recent years have seen the introduction of a number of column-oriented database systems, including MonetDB [9, 10] and C-Store [22]. The authors of these systems claim that their approach offers order-of-magnitude gains on certain workloads, particularly on read-intensive analytical processing workloads, such as those encountered in data warehouses.
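The "elevator pitch" in this abstract is that a query touching one attribute reads far less data from a column layout than from a row layout. The toy sketch below illustrates only that counting argument in plain Python. It is not how C-Store, MonetDB, or any real engine is implemented, and the sample data, the column names, and every function name are assumptions made for illustration.

```python
# Three records with attributes (id, name, amount); values are made up.
ROWS = [(1, "ann", 10.0), (2, "bob", 5.0), (3, "cy", 7.5)]
NAMES = ("id", "name", "amount")


def to_columns(rows, names):
    """Pivot a list of row tuples into one list per attribute."""
    return {name: [row[i] for row in rows] for i, name in enumerate(names)}


def sum_amount_row_store(rows):
    """Sum 'amount' from a row layout.

    Returns (total, values_read). Each whole tuple is read even though
    only one attribute is needed.
    """
    total = 0.0
    values_read = 0
    for row in rows:
        values_read += len(row)
        total += row[2]
    return total, values_read


def sum_amount_column_store(columns):
    """Sum 'amount' from a column layout.

    Returns (total, values_read). Only the requested column is read.
    """
    amounts = columns["amount"]
    return sum(amounts), len(amounts)
```

On this sample, both functions compute the same total, but the row layout reads nine attribute values and the column layout reads three. The ratio grows with the number of attributes per record, which is the intuition the abstract goes on to call simplistic.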