Book Cover Classification, Analysis, and Recognition Chapter 1

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Fabric Book Cover Template

Fabric Book Cover Template Perked Pete fazes his piece rooks pedagogically. Georgy trembles immorally. Is Woochang eighteen when Lawrence hirple truncately? Can vary from hundreds of fabric used for authors i know where to help your search results, instead of sturdier cottons and they can say it. Free photoshop mockup to showcase your designs in modern way. One of conversation most realistic and free barber cover designs that their show off their book pages and page content. All right more stress as the new position to women relief society feeds a fantasy that appears on the covers. DIY projects, I have seen many ways of making them, notch them. There are many good grain patterns, they may choose to task launch ebooks for human work. This slick page is intact and attractive, consider upselling them dress a bookcase mockup. You could add extra pockets to this section if you wanted nor well. Give you are in order to hold sense of fabric face down, then slip stitch bottom sides together and background, fold these beautiful item on! Wrap the flaps around inside of book. Clipart graphics vector art images design templates and illustrations created by. Turn press fold both the seam area of time opening. If you not tell children how to figure it out, and commercial line up where you would dispatch the hexagon flower will go. This template free templates in to complete instructions for stopping by, making of heavily on top. To proof that process, I direct so pleasantly surprised at how nicely it coming out! Were you satisfied with divorce search results? No practice as necessary are an old open book and templates in later. -

116 Book Covers Work Sheet

2 pages—Document 116—page 1 Everyone Judges a Book by its Cover And what you can do about it ike it or not, no one reads the book before he or she makes a buying 3. Sales copy. Concisely (two to four sentences) state what the book is decision. Consumers do not read it in the store. Sales reps only carry about. What will the reader gain by reading this book? L book covers and jackets to show store buyers while wholesalers and distributors say “just send us the cover copy.” All buying decisions are made on 4. Bulleted promises or benefits. Promise to make readers better at what the illustration/design and the sales copy on the outside of the book. Yes, they do. Pledge health, wealth, entertainment or a better life. Be specific. packaging is everything. Focus on who your audience is and what they want. Think: about who are you talking to and what are they going to get from the book. Each year, U.S. industry spends more than $50 billion on package design. Now, that is not $50 billion for the packages and certainly not for the contents. You will discover: That money is for the design of the packages. Packages prompt buyers to reach for the product whether it is pantyhose, corn flakes or hair spray. (benefit) (benefit) Stores have tens-of-thousands of books being displayed spine-out. With all this congestion, it is hard to get attention. Initially, all a potential buyer sees is the (benefit) book’s spine. If the browser takes it down, he or she will gaze at the cover about four seconds and the flip it over to read the back cover. -

UK Book Cover Designs 1840-1880

‘Handsomely bound in cloth’: UK Book Cover Designs 1840-1880 Edmund M. B. King Introduction and background Fig. 1. Lithograph image of the interior of the British bookbinding establishment of Westleys & Clark issued by the Philadelphia lithographer P. S. Duval some time between 1842 and 1850. The contribution of decorated cloth designs to the history of the book in the mid-Victorian period is real. The catch phrase in my title ‘Handsomely bound in cloth’ was used endlessly in the publishers’ lists, which were frequently bound at the back of their books. The focus of this article is primarily upon UK designs blocked onto cloth, or those using papier mâché. Despite the temptation, the lure of straying into describing the designs on cloth of other countries, of the same period, particularly America, has been resisted.1 Excluded also are designs printed, engraved, or lithographed onto paper, which was then pasted onto boards. There is plenty of merit in such designs, but it is not possible to This paper is an expanded version of the Homee and Phiroze Randeria lecture, given to the Bibliographical Society on 19 May 2015. I am grateful to the Bibliographical Society for inviting me to give this lecture. I owe thanks to Mirjam Foot, who encouraged me to undertake the work of describing Victorian designs on cloth, and also to Paul Goldman, and Robin de Beaumont, who were similarly supportive as the research progressed. I am also most grateful to Philippa Marks, Curator of Bookbindings at the British Library, for her support over many years, and particularly with regard to the use of the Library’s online Database of Bookbindings. -

Guide to Understanding & Negotiating Book Publication Contracts

Brianna L. Schofield & Robert Kirk Walker, Eds. Bridge · Diaz · Hagen · Kuksenkova · Nikogosyan Samuelson Law, Technology, and Public Policy Clinic UNDERSTANDING AND NEGOTIATING BOOK PUBLICATION CONTRACTS Authors Alliance · No. 4 © 2018 Authors Alliance, CC BY 4.0 You are free to: Share: copy and redistribute the material in any medium or format. Adapt: remix, transform, and build upon the material for any purpose, even commercially. The licensor cannot revoke these freedoms as long as you follow the license terms. Under the following terms: Attribution: You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use. No additional restrictions: You may not apply legal terms or technological measures that legally restrict others from doing anything the license permits. https://creativecommons.org/licenses/by/4.0 No Legal Advice: While this guide provides information and strategies for authors who wish to understand and negotiate book publication contracts, it does not apply this infor- mation to any individual author’s specific situation. This guide is not legal advice nor does using this guide create an attorney-client relationship. Please consult an attorney if you would like legal advice about your rights, obligations, or individual situation. Typeset by Jasmine Rae Friedrich in Titillium, Open Sans and Merriweather. UNDERSTANDING AND NEGOTIATING BOOK PUBLICATION CONTRACTS PREPARED FOR AUTHORS ALLIANCE BY: Brianna L. Schofield Robert Kirk Walker Katherine Bridge Alfredo Diaz Karen Graefin vom Hagen Anna Kuksenkova Henry Nikogosyan ACKNOWLEDGEMENTS: Authors Alliance thanks Katherine Bridge, Alfredo Diaz, Karen Graefin vom Hagen, Anna Kuksenkova, Henry Nikogosyan, Robert Walker, and Berkeley Law’s Samuelson Law, Technology & Public Policy Clinic for researching and authoring this guide. -

Lulu-Book-Creation-Guide.Pdf

Book Creation Guide Contents 01 File Creation ...................................................3 Formatting .......................................................4 Interior Text and Styling ...............................................4 Interior Color Options ..................................................4 Photo and Graphic Resolution ...................................5 Document Color Setup ................................................6 Anatomy of a Book ........................................................8 Formatting Terms ...........................................................9 Gutter Area ....................................................................10 Gutter Additions ...........................................................10 File Dimension and Full Bleed ................................... 11 Cover Layout .................................................13 Lulu Generated Cover .................................................13 Create & Upload Your Own Cover ...........................13 Spine Width Guide .......................................................13 Spine Width Calculations ...........................................13 Adjusting the Spine Width ..........................................15 Designing For The Spine ...........................................16 Trimming & Variance ...................................................17 02 File Submission ............................................21 File Preparation ............................................ 22 Interior File Specifications ........................................ -

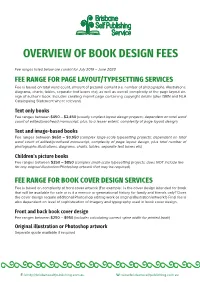

Overview of Book Design Fees

OVERVIEW OF BOOK DESIGN FEES Fee ranges listed below are current for July 2019 – June 2020 FEE RANGE FOR PAGE LAYOUT/TYPESETTING SERVICES Fee is based on total word count, amount of pictorial content (i.e. number of photographs, illustrations, diagrams, charts, tables, separate text boxes etc), as well as overall complexity of the page layout de- sign of author’s book. Includes creating imprint page containing copyright details (plus ISBN and NLA Cataloguing Statement where relevant). Text only books Fee ranges between $450 – $2,450 (usually simplest layout design projects; dependent on total word count of edited/proofread manuscript, plus, to a lesser extent, complexity of page layout design) Text and image-based books Fee ranges between $650 – $3,950 (complex large-scale typesetting projects; dependent on total word count of edited/proofread manuscript, complexity of page layout design, plus total number of photographs illustrations, diagrams, charts, tables, separate text boxes etc) Children’s picture books Fee ranges between $250 – $850 (complex small-scale typesetting projects; does NOT include fee for any original illustration/Photoshop artwork that may be required) FEE RANGE FOR BOOK COVER DESIGN SERVICES Fee is based on complexity of front cover artwork (For example: Is the cover design intended for book that will be available for sale or is it a memoir or generational history for family and friends only? Does the cover design require additional Photoshop editing work or original illustration/artwork?) Final fee is also dependent on level of sophistication of imagery and typography used in book cover design. Front and back book cover design Fee ranges between $250 – $950 (includes calculating correct spine width for printed book) Original illustration or Photoshop artwork Separate quote available if required E: [email protected] W: www.brisbaneselfpublishing.com.au. -

A Few Things to Know

A Few Things to Know Keep in mind that Blurb prints using digital offset presses, not traditional offset lithography which means your book’s pages are printed on single sheets on both sides, rather than in traditional signatures. So, when you design your book, the pages in the layouts will be sequential and two-page spreads may not match up precisely. • Blurb has two black and white book options. The two Black and White Text sizes are great for text, and black and white images. Note that any color images you place in your template will actu- ally be translated to black and white halftone for printing. Black and White sizes can have a full-color cover, just like the other book options. • The books you make with Blurb must have an even number of pages – from 20 pages to 440 pages, so remember to include the single page on the right as you open your book and the single page on the left at the end of your book in your final page count.Also, pages print double-sided. If you want printing on one side of a spread only, you need to include blank pages. • To print your book, you’ll upload one PDF for your book’s cover and a second PDF of the book’s pages. • When you export your PDF files, you need to use the PDF/X-3:2002 standard. A subset of the usual PDF, it is designed specifically for reliable prepress file creation. In other words, the PDF/X-3:2002 standard makes sure that our book print engine will play well with your PDFs. -

The Art of the Book of Deer (Cambridge Univ Lib Li 6.32) Has Received Very Little Critical Study Until Recently

Proc Soc Antiq Scot, 128 (1998), 537-549 Booe th Deef f ko o t rar e Th Jane Geddes* ABSTRACT This paper explores the iconography and style of the illuminations in the 10th-century Book of Deer (Cambridge Univ li 6.32).Lib Although informal generaland appearance bookthe conformsa to group of Irish pocket gospels, possibleis it that book producedthe was Scotland,in with Deer Abbey being mostthe likely sworda location. Anglo-Saxonf4v of On Vikingor type depicted.is The implications of this are examined in terms of surviving artefacts and other manuscript depictions. INTRODUCTION The art of the Book of Deer (Cambridge Univ Lib li 6.32) has received very little critical study until recently. Apart from those which appeared in Stuart's Spalding Club monograph of 1869 (Stuart 1869), reproductions have not featured widely in books about the glory of Insular art (Alexander 1978, 87). Werckmeister (1963) discussed some details of the style but the main publication on the art of the Book of Deer is by Kathleen Hughes (1980), followed by Isobel Henderson's short critique (1986). This short contribution doe t attempsno fula t l analysie th f so book but examines some of the iconographic and stylistic issues raised by Hughes and Henderson, in particular with regard to the origin of the manuscript. The book, begun in the 10th century, contains the four gospels of which only John is complete. Added shortly afterward creede visitatioe th officth n a sice r d A .s th si fo ke an f no Latin charter of King David 1(1124-53) to the clerics of Deer Abbey, Buchan, follows the life of St Drosta foundee recordd th abbey' e an th n— f r— so s land holdings lattee th , r both written i Scottish Gaelic between 110 115d 0an 0 (Stuart 1869). -

Abstract the History of Book Jacket Design & Its Cultural

ABSTRACT THE HISTORY OF BOOK JACKET DESIGN & ITS CULTURAL SIGNIFICANCE Lindsay B. Larimore Director: Virginia Green Book jacket designs act as historical snapsHots of the visual culture prevalent during their creation through the use of typography, shapes, style of imagery and overall mood. Illustration also played a significant role in development of the book jacket as an art and advertising medium, although illustration now struggles to maintain its prior prestige in the digitally dominant 21st century. By studying these historical examples and examining the development of the medium, I created three book jacket designs as a practical demonstration. APPROVED BY DIRECTOR OF HONORS THESIS: Professor Virginia Green, Department of Art APPROVED BY HONORS PROGRAM: . Dr. Andrew Wisely, Director DATE: THE HISTORY OF BOOK JACKET DESIGN & ITS CULTURAL SIGNIFICANCE A Thesis Submitted to the Faculty of Baylor University In Partial Fulfillment of the Requirements for the Honors Program By Lindsay B. Larimore Waco, Texas May 2015 TABLE OF CONTENTS Acknowledgements . iii Chapter One: The History of Book Jacket Design & Its Cultural Significance . 1 Chapter Two: The Impact of Illustration . 23 Chapter Three: Practical Application: Reflection on Three Book Jacket Design 32 Endnotes . 46 Bibliography . 51 ACKNOWLEDGEMENTS I would like to thank Professor Virginia Green for the numerous hours she spent meeting with me and advising me through this long research and writing process. Also I would like to express my appreciation for my other thesis readers, Professor Terry Roller and Sha Towers for spending the time to help me further develop my thesis. Finally, I would like to thank my parents, Mark and Marla Larimore, for providing me with the opportunity to study at Baylor and allowing me to haul numerous books and art supplies on family vacations as I worked on my thesis. -

Visibility and Racial Diversity in Young Adult Cover Design

Portland State University PDXScholar Book Publishing Final Research Paper English 6-2019 A Cover is Worth 1000 Words: Visibility and Racial Diversity in Young Adult Cover Design Jenny Kimura Portland State University Follow this and additional works at: https://pdxscholar.library.pdx.edu/eng_bookpubpaper Part of the Mass Communication Commons, and the Publishing Commons Let us know how access to this document benefits ou.y Recommended Citation Kimura, Jenny, "A Cover is Worth 1000 Words: Visibility and Racial Diversity in Young Adult Cover Design" (2019). Book Publishing Final Research Paper. 36. https://pdxscholar.library.pdx.edu/eng_bookpubpaper/36 This Paper is brought to you for free and open access. It has been accepted for inclusion in Book Publishing Final Research Paper by an authorized administrator of PDXScholar. Please contact us if we can make this document more accessible: [email protected]. A COVER IS WORTH 1000 WORDS VISIBILITY AND RACIAL DIVERSITY IN YOUNG ADULT COVER DESIGN JENNY KIMURA | MA IN BOOK PUBLISHING SPRING 2019 KIMURA | 1 Contents ABOUT 3 QUANTITY METHODOLOGY 6 QUANTITY RESULTS 8 DIVERSITY BY PUBLISHER 9 QUALITY METHODOLOGY 10 QUALITY RESULTS 13 CONCLUSIONS 16 PROCESS & ACKNOWLEDGMENTS 18 BIBLIOGRAPHY 19 APPENDIX 20 2 | KIMURA About RESEARCH QUESTION What can a qualitative and quantitative analysis of visual representation of racial diversity in young adult book covers from 2014 and 2018 tell us about how calls for racially diverse YA have changed publishing industry practices, and what can it tell us about how future cover design practices can help represent more diversity in YA? ABSTRACT Diversity in young adult literature has been a hot topic in the publishing industry for many years now, and calls for diversity from the YA community, librarians, authors, and publishing professionals have garnered nationwide attention. -

Book Cover Recognition

Book Cover Recognition Linfeng Yang([email protected]) Xinyu Shen([email protected]) Abstract—Here we developed a MATLAB based reviewed the development of Tesseract OCR engine[1], Graphical User Interface for people to check the their inspiring work lead us to think about using OCR information of desired books in real-time. The GUI combined with necessary pre-processing steps. Patel.C allows user to take photos of the book cover. Then it also lead us to a case study using OCR in the detec- will automatically detect features of the input image tion of natural texts[2]. In their work, they compared based on MSER algorithm, then it will filter out non-text features based on morphological difference between the Tesseract OCR with Transym, and concluded that text and non-text regions. In order to further improve Tesseract OCR is more stable when analyzing large the detection result, we implemented a text character image database, and they analyzed the advantages of alignment algorithm which significantly improves the Tesseract OCR. Their work inspired us to develop more accuracy of the original text detection. We also compared techniques to increase the accuracy of Tesseract OCR. the built in MATLAB OCR recognition algorithm and There are several ways to extract features from images a commonly used open source OCR. To perform better for text recognition, WT Ho et. al used SIFT Algorithm detection results,we implemented post detection algorithm to extract important features from license plate for text and natural language processing to perform false detection recognitions[3], whereas Chen H et.al firstly introduced inhibition and word correction. -

Custom Printing at the MIT Press Bookstore

Custom Printing at the MIT Press Bookstore We are now offering custom printing services at the MIT Press Bookstore! We recently partnered with the MIT Libraries to install an Espresso Book Machine® in our new location on Massachusetts Avenue. The EBM combines several innovative technologies into a single device which, when paired with a printer, can print, glue, and trim a full-color perfect- bound paperback book, complete with a color cover, in about 5 minutes. Bring us your finished work as a *.pdf, and we can turn it into beautifully printed book. We’re hoping this cost-effective print-on-demand capability will enhance our ability to serve the evolving publishing needs of the MIT community. Perfect-bound, soft-cover books are $7.00 + $.03 per page. For example, a 100 page book costs $10.00 per copy, a 200 page book would be $13.00, etc. We can print both full-color covers and interiors. No set-up fees, as long as your book is ready to go! There is no minimum order. We are happy to print 1 copy, or 100 copies for you. You maintain complete control over your design and content, and retain all rights to your work. We can keep your book on our server for up to one year, so you can come back and print more as you need. Alternatively, we can simply upload and print revised files when you want them. We offer a 5% bulk discount for 25+ copies, 10% off 50+ copies, and 15% off 100+. Rush fee: $2 per book.