Guide to the 2016 Cooperative Congressional Election Survey

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


USSYP 2013 Yearbook

UNITED STATES SENATE YOUTH PROGRAM, FIFTY-FIRST ANNUAL WASHINGTON WEEK, MARCH 9–16, 2013. SPONSORED BY THE UNITED STATES SENATE. FUNDED AND ADMINISTERED BY THE HEARST FOUNDATIONS, 90 NEW MONTGOMERY STREET, SUITE 1212, SAN FRANCISCO, CA 94105-4504. WWW.USSENATEYOUTH.ORG

THE HEARST FOUNDATIONS DIRECTORS: William Randolph Hearst III, President; James M. Asher; Anissa B. Balson; David J. Barrett; Frank A. Bennack, Jr.; John G. Conomikes; Ronald J. Doerfler; Lisa H. Hagerman; George R. Hearst III; Gilbert C. Maurer; Mark F. Miller; Virginia H. Randt; Steven R. Swartz. Paul “Dino” Dinovitz, Executive Director; George B. Irish, Eastern Director; Rayne B. Guilford, Program Director; Lynn De Smet, Deputy Director; Catherine Mahoney, Program Manager; Hayes Reisenfeld, Program Liaison.

Photography by Jakub Mosur. Secondary Photography by Erin Lubin. Design by Catalone Design Co.

Forget conventionalisms; forget what the world thinks of you stepping out of your place; think your best thoughts, speak your best words, work your best works, looking to your own conscience for approval. SUSAN B. ANTHONY

2013 UNITED STATES SENATE YOUTH PROGRAM SENATE ADVISORY COMMITTEE. HONORARY CO-CHAIRS: Vice President Joseph R. Biden, President of the Senate; Senator Harry Reid, Majority Leader; Senator Mitch McConnell, Republican Leader. CO-CHAIRS: Senator Jeanne Shaheen of New Hampshire; Senator Richard Burr of North Carolina -

Office of General Counsel

Kevin P. LeMaire, Prairieville, LA 70769. December 7, 2011. Office of General Counsel, Federal Election Commission, 999 E Street, N.W., Washington, DC 20463. Re: Enclosed Sworn Complaint, MUR. Calvin C. Fayard, Jr.; Cynthia Fayard; Chalyn Fayard; Carolyn Mistoler. Dear Ma'am/Sir: Enclosed please find my Sworn Complaint regarding the individuals referenced above. Should you find this matter warrants investigation, please take into consideration that Calvin Fayard, Jr., his law firm of Fayard & Honeycutt, and his law partner, D. Blayne Honeycutt, made campaign contributions to the US Attorney for the Middle District of Louisiana, Donald Cazayoux, in 2008 when Mr. Cazayoux was seeking a congressional seat. In order to avoid even the appearance of impropriety, it may be prudent to have a different office conduct the investigation. Thank you for your attention to this matter. Respectfully, Kevin LeMaire. Enclosures.

FEDERAL ELECTION COMMISSION SWORN COMPLAINT. STATE OF LOUISIANA, PARISH OF EAST BATON. BEFORE ME, the undersigned authority, personally came and appeared, KEVIN P. LeMAIRE (“Complainant”) herein, who, after being duly sworn, did depose and state: Complainant resides at , Prairieville, Louisiana. Complainant's telephone number is . Complainant submits this Sworn Complaint based upon his personal knowledge, his oral and written communications with others, documents attached as exhibits, and the existence of documentary evidence in the possession of others. For the reasons that follow, Complainant reasonably believes violations of the Federal Election Campaign Laws or Commission Regulations have been committed by: 1. Calvin C. Fayard, Jr. who presently resides at. -

Parting the Dark Money Sea: Exposing Politically Active Tax-Exempt Groups Through FEC-IRS Hybrid Enforcement

William & Mary Law Review, Volume 57 (2015-2016), Issue 1, Article 7, 10-2015. Parting the Dark Money Sea: Exposing Politically Active Tax-Exempt Groups Through FEC-IRS Hybrid Enforcement. Carrie E. Miller. Follow this and additional works at: https://scholarship.law.wm.edu/wmlr Part of the Election Law Commons, and the Tax Law Commons.

Repository Citation: Carrie E. Miller, Parting the Dark Money Sea: Exposing Politically Active Tax-Exempt Groups Through FEC-IRS Hybrid Enforcement, 57 Wm. & Mary L. Rev. 341 (2015), https://scholarship.law.wm.edu/wmlr/vol57/iss1/7 Copyright © 2015 by the authors. This article is brought to you by the William & Mary Law School Scholarship Repository. https://scholarship.law.wm.edu/wmlr

PARTING THE DARK MONEY SEA: EXPOSING POLITICALLY ACTIVE TAX-EXEMPT GROUPS THROUGH FEC-IRS HYBRID ENFORCEMENT

TABLE OF CONTENTS

INTRODUCTION 343
I. TAX LAW AND ELECTION LAW FOUNDATIONS 346
  A. Internal Revenue Code Provisions 347
    1. Section 501(c)(4) Social Welfare Organizations 347
    2. Section 527 Political Organizations 349
  B. Campaign Finance Doctrinal Framework 350
    1. Statutory Basis and Underlying Values 350
    2. Evolving Case Law 351
II. ESCALATING POLITICAL ACTIVITY OF TAX-EXEMPT SOCIAL WELFARE ORGANIZATIONS: WHERE TAX LAW AND ELECTION LAW INTERSECT 354
  A. IRS Treatment of Political Activity 355
    1. Conflicting Threshold Standards 355
    2. Vague Definitional Problem 357
  B. Section 501(c)(4) Organizations Have Emerged as the Preferred Campaign Finance Vehicle 359
    1. Increased Independent Expenditures 360
    2. Relaxed Reporting Provisions 363
    3. The Coordination Problem 367
  C. Deregulation’s Impact on Values that Support Campaign Finance Restrictions -

Presidential Results on November 7, 2020, Several Media Organizations

Presidential Results

On November 7, 2020, several media organizations declared that Joseph Biden and Kamala Harris won the election for the President and Vice President of the United States. Biden and Harris will take office on January 20, 2021. Currently, President-elect Biden is leading in the electoral college and popular vote. Votes are still being counted so final electoral college and popular vote counts are not available. NASTAD will provide transition documents to the incoming Administration, highlighting agency-specific recommendations that pertain to health department HIV and hepatitis programs. Additionally, the Federal AIDS Policy Partnership (FAPP) and the Hepatitis Appropriations Partnership (HAP), two coalitions that NASTAD leads, will also submit transition documents stressing actions the next Administration can take relating to the HIV and hepatitis epidemics, respectively.

House and Senate Results

Several House races are still undecided, but Democrats have kept control of the chamber. Republicans picked up several House districts but did not net the 17 seats they needed to gain the majority. Control of the Senate is still unknown with two uncalled seats (Alaska and North Carolina) and two runoffs in Georgia. The runoff races in Georgia will take place on January 5, 2021, so the Senate makeup will not be final until then. While it remains likely that Republicans will remain in control of the Senate, if Democrats win both runoff races, they will gain control of the Senate with Vice President-elect Harris serving as tiebreaker.

| Chamber | Party | Pre-election | Post-election |
| Senate*** | Democrats | 45 | 46 |
| Senate*** | Republicans | 53 | 50 |
| Senate*** | Independent | 2* | 2** |
| House**** | Democrats | 232 | 219 |
| House**** | Republicans | 197 | 203 |
| House**** | Independent | 0 | 0 |

* Angus King (ME) and Bernie Sanders (VT) caucused with the Democrats. -

September 25, 2017 Dear Senators Mike Enzi and John Barrasso and Congressman Cheney: on Behalf of the Eight Institutions of High

September 25, 2017 Dear Senators Mike Enzi and John Barrasso and Congressman Cheney: On behalf of the eight institutions of higher education in Wyoming, we are pleased to submit this joint letter sharing our perspective on the recent actions of President Trump to remove protections from the children of undocumented immigrants. The number of undocumented immigrant children brought to the United States by their parents is relatively small in Wyoming—at least in comparison to states like California and Texas. But the value these young people bring to our state today, and into the future, is large. Immigration is a complex topic, and while Congress should address that issue with measure, we urge you to act with urgency on a single, focused issue to ensure youth currently protected from deportation under the Deferred Action for Childhood Arrivals (DACA) program can continue their studies or employment in the United States. Wyoming’s rapidly aging population, the urgent need to diversify the economy, our reliance on a skilled workforce, and the growing minority population are all facets of the same complex discussion about Wyoming’s future. Wyoming’s population is aging quickly. In June 2017, Dr. Wenlin Lieu, Chief Economist, Wyoming’s Economic Analysis Division, reported “The aging of Wyoming’s population has picked up speed, and the pace was one of the fastest in the country.” Wyoming’s unemployment rate decreased from 5.0 to 3.8 percent from July 2016 to July 2017, largely because the state lost workers who tended to be the younger workforce. The growing minority population helped offset the labor force who moved out of the state. -

Inside the Cover

Libertarian Party Files Friend-of-the-Court Brief in D.C. Gun Case - Page 8. Members Sound-Off in “The Mailbox” - page 3. Committee Updates - page 13. February 2008. The Official Monthly Newspaper of the Libertarian Party. Volume 38 / Issue 2.

Torch Club Breakfast at Convention to Honor Patrick Henry. By Andrew Davis. This year’s Torch Club Breakfast at the National Convention is set to honor Patrick Henry, an instrumental figure of the American Revolution. The “Patrick Henry Breakfast” for Torch Club members will feature Gary Aldrich, president and founder of the Patrick Henry Center for Individual Liberty.

Ballot Access Status Increasing for Libertarian Party. By Sean Haugh. On Jan. 30, Wake County Superior Judge Leon Stanback shocked both sides of the lawsuit filed by the Libertarian Party of North Carolina regarding ballot access law in the state. The ruling denied mutual motions for summary judgment and scheduling the case for trial. Although both the LPNC and the state’s attorneys agreed the facts of the case are not in dispute, the judge said he wants [...] cycle. The LPNC is joined in this lawsuit by the NC Green Party, as well as several Libertarian candidates who were denied a spot on the ballot in the 2005 elections. The trial will probably take place in March, after which, either side may appeal to the NC Court of Appeals and eventually the NC Supreme Court. Richard Winger of Ballot Access News commented, “It is encouraging that the judge wants a trial, because it indi- -

Implementation and Its Consequences Hearing

IRAN NUCLEAR DEAL OVERSIGHT: IMPLEMENTATION AND ITS CONSEQUENCES. HEARING BEFORE THE COMMITTEE ON FOREIGN AFFAIRS, HOUSE OF REPRESENTATIVES, ONE HUNDRED FOURTEENTH CONGRESS, SECOND SESSION. FEBRUARY 11, 2016. Serial No. 114–143. Printed for the use of the Committee on Foreign Affairs. Available via the World Wide Web: http://www.foreignaffairs.house.gov/ or http://www.gpo.gov/fdsys/ U.S. GOVERNMENT PUBLISHING OFFICE, 98–602PDF, WASHINGTON : 2016. For sale by the Superintendent of Documents, U.S. Government Publishing Office. Internet: bookstore.gpo.gov Phone: toll free (866) 512–1800; DC area (202) 512–1800. Fax: (202) 512–2104. Mail: Stop IDCC, Washington, DC 20402–0001.

COMMITTEE ON FOREIGN AFFAIRS: EDWARD R. ROYCE, California, Chairman; CHRISTOPHER H. SMITH, New Jersey; ELIOT L. ENGEL, New York; ILEANA ROS-LEHTINEN, Florida; BRAD SHERMAN, California; DANA ROHRABACHER, California; GREGORY W. MEEKS, New York; STEVE CHABOT, Ohio; ALBIO SIRES, New Jersey; JOE WILSON, South Carolina; GERALD E. CONNOLLY, Virginia; MICHAEL T. MCCAUL, Texas; THEODORE E. DEUTCH, Florida; TED POE, Texas; BRIAN HIGGINS, New York; MATT SALMON, Arizona; KAREN BASS, California; DARRELL E. ISSA, California; WILLIAM KEATING, Massachusetts; TOM MARINO, Pennsylvania; DAVID CICILLINE, Rhode Island; JEFF DUNCAN, South Carolina; ALAN GRAYSON, Florida; MO BROOKS, Alabama; AMI BERA, California; PAUL COOK, California; ALAN S. LOWENTHAL, California; RANDY K. WEBER SR., Texas; GRACE MENG, New York; SCOTT PERRY, Pennsylvania; LOIS FRANKEL, Florida; RON DESANTIS, Florida; TULSI GABBARD, Hawaii; MARK MEADOWS, North Carolina; JOAQUIN CASTRO, Texas; TED S. -

Tulane University Releases Poll on Louisiana U.S. Senate Race

Tulane University releases poll on Louisiana U.S. Senate race. December 05, 2016 10:15 AM. Keith Brannon [email protected] 504-862-8789

Louisiana Public Service Commissioner Foster Campbell (left) faces state Treasurer John Kennedy (right) in the runoff for Louisiana's remaining U.S. Senate seat on Dec. 10.

Tulane University’s Department of Political Science released new survey results indicating a comfortable lead for state Treasurer John Kennedy going into Saturday’s runoff election for U.S. Senator from Louisiana. Brian Brox, associate professor of Political Science, conducted the online survey of Louisiana residents from Nov. 8-18. Among the findings:

- John Kennedy leads the race with 59 percent of respondents saying they would vote for him while 40 percent of respondents said they would vote for Louisiana Public Service Commissioner Foster Campbell.
- When the presidential vote of the respondent is considered, nearly 83 percent of Trump voters plan to vote for Kennedy while nearly 69 percent of Clinton voters plan to vote for Campbell.
- When the respondent’s vote in the Senate primary is considered, both Kennedy and Campbell retain the support of well over 90 percent of their primary voters. Among those who voted for other major candidates, only Caroline Fayard voters indicate a preference for Campbell; previous supporters of U.S. Rep. Charles Boustany, U.S. Rep. John Fleming, and Rob Maness now indicate a preference for Kennedy.
- When the racial background of the respondent is considered, 71 percent of African-American respondents chose Campbell while nearly 29 percent will vote for Kennedy. -

Michael Miller Partner

Michael Miller, Partner

Michael W. Miller joined Kyle House Group in 2014 with more than two decades of senior-level experience in government, private sector consulting, international organizations, non-profits, and academia. He brings a deep, first-hand understanding of how government policy is made and how it affects clients’ missions, markets, and outcomes domestically and internationally.

Prior to joining KHG, Michael served as Republican Policy Director for the U.S. Senate Committee on Foreign Relations for Senator Bob Corker. This was his second time serving on Capitol Hill: from 1995 to 2001, Michael was Senator Bill Frist’s senior legislative aide for foreign affairs, where he managed the Senator's work on the Foreign Relations Committee and directed the Subcommittee on African Affairs.

Before returning to Capitol Hill, Michael founded and led a Washington, DC- and Research Triangle, NC-based consulting firm that served a range of U.S. and international clients on government policy, global health and development, international regulation, and international organizations. Since 2010, Michael has served as an Adjunct Associate Professor of Global Health at Duke University.

Areas of Expertise
• Government
• Foreign Affairs
• Global Health and Development
• International Organizations
• Non-profits
• African Affairs

Experience
• Senate Foreign Relations Committee
• Department of Health and Human Services
• Duke University

Education
• B.A. with honors in Geography, University of Tennessee
• M.A. in Political Geography, University of South Carolina

Contact
• (e) [email protected]
• (t) 202-785-5270

From 2001 to 2009, Michael served in several key policy positions in the Executive Branch and the White House. As a Senior Advisor in the office of the U.S. -

U.S.–India Relations Under the Modi Government Hearing

U.S.–INDIA RELATIONS UNDER THE MODI GOVERNMENT. HEARING BEFORE THE SUBCOMMITTEE ON ASIA AND THE PACIFIC OF THE COMMITTEE ON FOREIGN AFFAIRS, HOUSE OF REPRESENTATIVES, ONE HUNDRED THIRTEENTH CONGRESS, SECOND SESSION. JULY 24, 2014. Serial No. 113–203. Printed for the use of the Committee on Foreign Affairs. Available via the World Wide Web: http://www.foreignaffairs.house.gov/ or http://www.gpo.gov/fdsys/ U.S. GOVERNMENT PRINTING OFFICE, 88–834PDF, WASHINGTON : 2014. For sale by the Superintendent of Documents, U.S. Government Printing Office. Internet: bookstore.gpo.gov Phone: toll free (866) 512–1800; DC area (202) 512–1800. Fax: (202) 512–2104. Mail: Stop IDCC, Washington, DC 20402–0001.

COMMITTEE ON FOREIGN AFFAIRS: EDWARD R. ROYCE, California, Chairman; CHRISTOPHER H. SMITH, New Jersey; ELIOT L. ENGEL, New York; ILEANA ROS-LEHTINEN, Florida; ENI F.H. FALEOMAVAEGA, American Samoa; DANA ROHRABACHER, California; STEVE CHABOT, Ohio; BRAD SHERMAN, California; JOE WILSON, South Carolina; GREGORY W. MEEKS, New York; MICHAEL T. MCCAUL, Texas; ALBIO SIRES, New Jersey; TED POE, Texas; GERALD E. CONNOLLY, Virginia; MATT SALMON, Arizona; THEODORE E. DEUTCH, Florida; TOM MARINO, Pennsylvania; BRIAN HIGGINS, New York; JEFF DUNCAN, South Carolina; KAREN BASS, California; ADAM KINZINGER, Illinois; WILLIAM KEATING, Massachusetts; MO BROOKS, Alabama; DAVID CICILLINE, Rhode Island; TOM COTTON, Arkansas; ALAN GRAYSON, Florida; PAUL COOK, California; JUAN VARGAS, California; GEORGE HOLDING, North Carolina; BRADLEY S. SCHNEIDER, Illinois; RANDY K. WEBER SR., Texas; JOSEPH P. -

Roster of State Officials

State of Iowa Roster of State Officials 2007 PUBLISHED BY THE STATE OF IOWA UNDER AUTHORITY OF IOWA CODE SECTION 2B.5 Twenty-Fourth Edition Preface Pursuant to Iowa Code section 2B.5, the State Roster is published as a correct list of state officers and deputies, members of boards and commissions, justices of the Supreme Court, judges of the court of appeals, judges of the district courts, including district associate judges and judicial magistrates, and members of the General Assembly. More specifically, the State Roster lists the membership of active boards and commissions established by state law, executive order of the Governor, or Iowa Court Rule. The State Roster also lists advisory councils whose members are appointed by the Governor or General Assembly, and may include other boards and commissions of interest to the public. The information included herein is furnished in part by state agencies, the Office of the Governor, the General Assembly, and the Supreme Court and reflects appointments reported prior to November 20, 2007. Roster listings include citation of the relevant statute and the name, home city, and term ending date or affiliation for each current appointee, as appropriate. The designation “statutory” indicates that the Code of Iowa requires that a representative of a specific office or organization serve. No attempt is made to arrange information on the basis of legal importance. The editors of the State Roster appreciate the cooperation of everyone who contributed to this publication and welcome comments and suggestions -

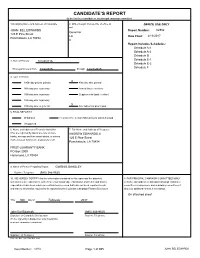

Candidate's Report

CANDIDATE’S REPORT (to be filed by a candidate or his principal campaign committee) 1.Qualifying Name and Address of Candidate 2. Office Sought (Include title of office as OFFICE USE ONLY well JOHN BEL EDWARDS Report Number: 62862 Governor 125 E Pine Street LA Date Filed: 2/13/2017 Ponchatoula, LA 70454 0 Report Includes Schedules: Schedule A-1 Schedule A-2 Schedule B Schedule E-1 3. Date of Primary 10/24/2015 Schedule E-2 Schedule F This report covers from 11/2/2015 through 12/21/2015 4. Type of Report: X 180th day prior to primary 40th day after general 90th day prior to primary Annual (future election) 30th day prior to primary Supplemental (past election) 10th day prior to primary X 10th day prior to general Amendment to prior report 5. FINAL REPORT if: Withdrawn Filed after the election AND all loans and debts paid Unopposed 6. Name and Address of Financial Institution 7. Full Name and Address of Treasurer (You are required by law to use one or more ANDREW EDWARDS, II banks, savings and loan associations, or money 125 E Pine Street market mutual fund as the depository of all Ponchatoula, LA 70454 FIRST GUARANTY BANK PO Box 2009 Hammond, LA 70404 9. Name of Person Preparing Report GWEN B BARSLEY Daytime Telephone (985) 386-9525 10. WE HEREBY CERTIFY that the information contained in this report and the attached 8. FOR PRINCIPAL CAMPAIGN COMMITTEES ONLY schedules is true and correct to the best of our knowledge, information and belief, and that no a.