Written Contributions

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Appendix 8: Damages Caused by Natural Disasters

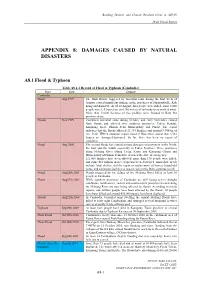

Building Disaster and Climate Resilient Cities in ASEAN, Draft Final Report

APPENDIX 8: DAMAGES CAUSED BY NATURAL DISASTERS

A8.1 Flood & Typhoon

Table A8.1.1 Record of Flood & Typhoon (Cambodia)

| Place | Date | Damage |
| --- | --- | --- |
| Cambodia | Flood, Aug 1999 | The flash floods, triggered by torrential rains during the first week of August, caused significant damage in the provinces of Sihanoukville, Koh Kong and Kam Pot. As of 10 August, four people were killed, some 8,000 people were left homeless, and 200 meters of railroads were washed away. More than 12,000 hectares of rice paddies were flooded in Kam Pot province alone. |
| | Floods, Nov 1999 | Continued torrential rains during October and early November caused flash floods and affected five southern provinces: Takeo, Kandal, Kampong Speu, Phnom Penh Municipality and Pursat. The report indicates that the floods affected 21,334 families and around 9,900 ha of rice fields. IFRC's situation report dated 9 November stated that 3,561 houses were damaged or destroyed. So far, there has been no report of casualties. |
| | Flood, Aug 2000 | The second flood has caused serious damage to provinces in the North, the East and the South, especially in Takeo Province. Three provinces along the Mekong River (Stung Treng, Kratie and Kompong Cham) and the Municipality of Phnom Penh have declared a state of emergency. 121,000 families have been affected, more than 170 people were killed, and some $10 million in rice crops has been destroyed. Immediate needs include food, shelter, and the repair or replacement of homes, household items, and sanitation facilities as water levels in the Delta continue to fall. |

Typhoon Initialization in a Mesoscale Model — Combination of the Bogused Vortex and the Dropwindsonde Data in DOTSTAR

Kun-Hsuan Chou and Chun-Chieh Wu, Department of Atmospheric Sciences, National Taiwan University, Taipei, Taiwan. Submitted to Monthly Weather Review (revised on April 23, 2007). Corresponding author address: Dr. Chun-Chieh Wu, Department of Atmospheric Sciences, National Taiwan University, No. 1, Sec. 4, Roosevelt Rd., Taipei 106, Taiwan.

Abstract

Issues on the initialization and simulation of tropical cyclones by integrating both the dropwindsonde data and the bogused vortex into a mesoscale model have been studied. A method is proposed to combine the dropwindsonde data with the bogused vortex for the tropical cyclone initialization and to improve the track and intensity prediction. A clear positive impact of this proposed method on both the tropical cyclone track and intensity forecasts in a mesoscale model is demonstrated in three cases of typhoons, Meari (2004), Conson (2004) and Megi (2004). The effectiveness of the proposed method in improving the track and intensity forecasts is also demonstrated in the evaluation of all 10 cases of DOTSTAR (Dropwindsonde Observations for Typhoon Surveillance near the Taiwan Region) missions in 2004. This method provides a useful and practical means to improve operational tropical cyclone prediction with the dropwindsonde observations.

1 Introduction

Over the past 30 years, persistent and steady progress on the track forecasts of tropical cyclones (TCs) has been well demonstrated through the improvement of the numerical models, the data assimilation and bogusing systems, the targeted observations, and the satellite and dropwindsonde data available to the forecast systems (Wu et al.

Typhoon Initialization in a Mesoscale Model—Combination of the Bogused Vortex and the Dropwindsonde Data in DOTSTAR

MARCH 2008 CHOU AND WU 865

KUN-HSUAN CHOU AND CHUN-CHIEH WU, Department of Atmospheric Sciences, National Taiwan University, Taipei, Taiwan (Manuscript received 12 January 2007, in final form 30 May 2007)

ABSTRACT

Issues concerning the initialization and simulation of tropical cyclones by integrating both dropwindsonde data and a bogused vortex into a mesoscale model have been studied. A method is proposed to combine dropwindsonde data with a bogused vortex for tropical cyclone initialization and to improve track and intensity prediction. A clear positive impact of this proposed method on both the tropical cyclone track and intensity forecasts in a mesoscale model is demonstrated in three cases of typhoons, including Meari (2004), Conson (2004), and Megi (2004). The effectiveness of the proposed method in improving the track and intensity forecasts is also demonstrated in the evaluation of all 10 cases of Dropwindsonde Observations for Typhoon Surveillance near the Taiwan Region (DOTSTAR) missions in 2004. This method provides a useful and practical means to improve operational tropical cyclone prediction with dropwindsonde observations.

1. Introduction

Over the past 30 yr, persistent and steady progress on the track forecasts of tropical cyclones (TCs) has been well demonstrated through the improvement of numerical models, data assimilation and bogusing systems, targeted observations, and satellite and dropwindsonde data available to the forecast systems (Wu ...

... been sparse, the uncertainty and poor quality in initial conditions can lead to monumental errors in the numerical simulation and prediction of TCs. For example, Kurihara et al. (1995) have shown that a better prediction could be achieved by the use of improved initialization procedures that better represent the initial environment, as well as the vortex-scale, flow, and mass fields.

Annual Weather Review 2010

Review of Weather Conditions in 2010

Northeast Monsoon Season (Jan – March)

2010 started off with a mild Northeast Monsoon, with rainfall well below average for the first two months of the year. Not only was the 2009/2010 Northeast Monsoon unusual in not bringing the monsoon surges typical of the rainy season, the period also saw the occurrence of Sumatra squalls on three almost consecutive days, on 4, 6 and 7 January 2010. Sumatra squalls are associated with deep southwesterly winds and are uncommon during the Northeast Monsoon, during which the prevailing winds are predominantly from the opposite direction.

The dry phase of the Northeast Monsoon set in during late January 2010 and extended to March 2010. The dry conditions usually experienced during this period are due to the strong cross-equatorial flow induced by the migration of the monsoon rain belt far south of the equator, away from Singapore. The absence of sufficient moisture, coupled with the presence of strong divergent wind flow at the 700-850 hPa levels, impeded convective activity and suppressed rainfall during this period. In February 2010, the coincident passage of a dry phase of the Madden Julian Oscillation (MJO) and a moderate El-Niño over the maritime continent further exacerbated the dry conditions over Singapore and the surrounding region. As a result, February 2010 was the driest February for Singapore since rainfall records began in 1869, with only 6.3 mm of rainfall recorded at the climate station. Moreover, the dry phase of the Northeast Monsoon in 2010 had only 5 rain days and registered a total rainfall of merely 9.8 mm.

Typhoon Conson

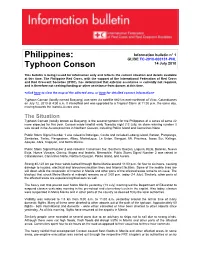

Philippines: Typhoon Conson

Information bulletin n° 1, GLIDE TC-2010-000131-PHL, 14 July 2010

This bulletin is being issued for information only and reflects the current situation and details available at this time. The Philippine Red Cross, with the support of the International Federation of Red Cross and Red Crescent Societies (IFRC), has determined that external assistance is currently not required, and is therefore not seeking funding or other assistance from donors at this time.

Typhoon Conson (locally named Basyang) was seen via satellite 660 km east-northeast of Virac, Catanduanes on July 12, 2010 at 4:00 a.m. It intensified and was upgraded to a Tropical Storm at 11:00 p.m. the same day, moving towards the Isabela-Aurora area.

The Situation

Typhoon Conson (locally known as Basyang) is the second typhoon for the Philippines of a series of some 22 more expected for this year. Conson made landfall early Tuesday night (13 July) as storm warning number 3 was raised in the Aurora province in Northern Quezon, including Polilio Island and Camarines Norte. Public Storm Signal Number 1 was raised in Batangas, Cavite and included Lubang Island, Bataan, Pampanga, Zambales, Tarlac, Pangasinan, Albay, Marinduque, La Union, Benguet, Mt. Province, Ilocos Sur, Kalinga, Apayao, Abra, Cagayan, and Metro Manila. Public Storm Signal Number 2 was raised in Camarines Sur, Southern Quezon, Laguna, Rizal, Bulacan, Nueva Ecija, Nueva Vizcaya, Quirino, Ifugao and Isabela. Meanwhile, Public Storm Signal Number 3 was raised in Catanduanes, Camarines Norte, Northern Quezon, Polilio Island, and Aurora.

Appendix 3 Selection of Candidate Cities for Demonstration Project

Building Disaster and Climate Resilient Cities in ASEAN Final Report

APPENDIX 3 SELECTION OF CANDIDATE CITIES FOR DEMONSTRATION PROJECT

Source: JICA Project Team. NIPPON KOEI CO., LTD. PACET CORP. EIGHT-JAPAN ENGINEERING CONSULTANTS INC.

Table A3-1 Long List Cities (No.1-No.62: “abc” city name order)

Table A3-2 Long List Cities (No.63-No.124: “abc” city name order)

Table A3-3 Long List Cities (No.125-No.186: “abc” city name order)

Table A3-4 Long List Cities (No.187-No.248: “abc” city name order)

Table A3-5 Long List Cities (No.249-No.310: “abc” city name order)

Table A3-6 Long List Cities (No.311-No.372: “abc” city name order)

WWRP/THORPEX, 15. Targeted Observations For

WORLD METEOROLOGICAL ORGANIZATION, WORLD WEATHER RESEARCH PROGRAMME, COMMISSION FOR ATMOSPHERIC SCIENCES

TARGETED OBSERVATIONS FOR IMPROVING NUMERICAL WEATHER PREDICTION: AN OVERVIEW

Prepared by Sharanya J. Majumdar and Co-authors

WWRP/THORPEX No. 15

THORPEX International Programme Office, Atmospheric Research and Environment Branch, World Meteorological Organization Secretariat, 7 bis, avenue de la Paix, Case postale 2300, CH-1211 Geneva 2, Switzerland. www.wmo.int/thorpex

Table of Contents

Abstract

1. INTRODUCTION

2. REVIEW OF FIELD CAMPAIGNS AND OBSERVATIONS

Pre-THORPEX era

The THORPEX era

3. THE TARGETED OBSERVING PROCEDURE

Review of Natural Disasters in 2010

A YEAR OF LIVING DANGEROUSLY: A REVIEW OF NATURAL DISASTERS IN 2010

By Elizabeth Ferris and Daniel Petz. April 2011. Published by: The Brookings Institution – London School of Economics Project on Internal Displacement.

Cover photos: Front: The Haitian national palace, © United Nations Development Programme. Back: Right: Eruption of Eyjafjallajökull volcano in Iceland, © iStockphoto; Left: Young Haitian injured by the earthquake, © United Nations Development Programme. Photo: NASA, Hurricane Paula, 2010.

TABLE OF CONTENTS

FOREWORD

INTRODUCTION

CHAPTER 1: 2010: NATURAL DISASTERS IN NUMBERS

Section 1: Disaster Statistics and Trends in 2010

Section 2: Humanitarian Funding in 2010

CHAPTER 2: EARTHQUAKES AND FLOODS

Section 1: Comparing the Earthquake in Haiti and the Floods in Pakistan – Basic Indicators

Operations Update 17

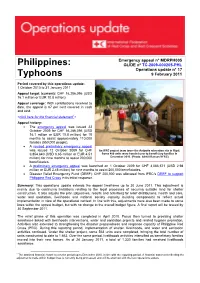

Philippines: Typhoons

Emergency appeal n° MDRPH005, GLIDE n° TC-2009-000205-PHL, Operations update n° 17, 9 February 2011

Period covered by this operations update: 1 October 2010 to 31 January 2011

Appeal target (current): CHF 16,286,096 (USD 16.1 million or EUR 10.8 million).

Appeal coverage: With contributions received to date, the appeal is 67 per cent covered in cash and kind.

Appeal history:

• The emergency appeal was issued 23 October 2009 for CHF 16,286,096 (USD 16.1 million or EUR 10.8 million) for 18 months to assist approximately 110,000 families (550,000 people).

• A revised preliminary emergency appeal was issued 12 October 2009 for CHF 6,854,640 (USD 6.65 million or EUR 4.51 million) for nine months to assist 200,000 beneficiaries.

• A preliminary emergency appeal was launched on 1 October 2009 for CHF 3,086,571 (USD 2.98 million or EUR 2.45 million) for nine months to assist 200,000 beneficiaries.

• Disaster Relief Emergency Fund (DREF): CHF 200,000 was allocated from IFRC’s DREF to support Philippine Red Cross in its initial response.

Photo caption: An IFRC project team tours the Antipolo relocation site in Rizal. Some 400 units were handed over to beneficiary families in December 2010. (Photo: Afrhill Rances/ IFRC)

Summary: This operations update extends the appeal timeframe up to 30 June 2011. This adjustment is mainly due to continuing limitations relating to the legal processes of securing suitable land for shelter construction. It also adjusts the plan [objectives, results and activities] for relief distributions, health and care, water and sanitation, livelihoods and national society capacity building components to reflect actual implementation in view of the operational context.

Reprint 1073 Application of Dvorak Technique During the Weakening

Reprint 1073

Application of Dvorak Technique during the Weakening Stage of Tropical Cyclones

C.T. Shum and S.T. Chan, Hong Kong Observatory, Hong Kong, China

The 6th China-Korea Joint Workshop on Tropical Cyclones, Shanghai, China, 26-28 May 2013

Abstract

The Dvorak technique has been widely used by operational warning centres in different parts of the world as a major analysis tool to determine the intensity of tropical cyclones (TCs). However, there exist noticeable differences in the application of the technique among different warning centres. In particular, the weakening rules in the technique that govern the determination of TC intensity during the TC weakening stage constitute one such difference and are the subject of review in this paper. Three options to modify the weakening rules are introduced and evaluated based on verification against the best-track datasets from various centres.

Keywords: Dvorak technique, tropical cyclones, weakening rules.

1. Introduction

Based on infrared imagery from geostationary satellites, the Enhanced Infrared (EIR) Dvorak technique (Dvorak, 1984) has long been used in the Hong Kong Observatory (HKO) as one of the references for estimating the intensity of tropical cyclones (TCs). Once a potential TC is suspected to be forming within the area 0°-36°N, 100°-140°E, Dvorak analysis will be performed at 6-hourly intervals. For TCs within the HKO area of responsibility (i.e. 10°-30°N, 105°-125°E), additional analysis will be performed at 3-hourly intervals. While Dvorak analysis serves as one of the important references in determining the maximum sustained surface wind speed (MSW) of the TC, surface wind reports, Doppler wind observations from weather radars, reconnaissance aircraft reports when available, wind scatterometer data and other objective analysis data, e.g.
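The analysis schedule quoted in this excerpt can be illustrated with a minimal Python sketch. It encodes only the two latitude/longitude boxes and the 6-hourly and 3-hourly intervals given in the text. The names `Box` and `dvorak_interval_hours` are hypothetical, and treating the box edges as inclusive is an assumption; none of this is taken from HKO's operational software.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Box:
    """A latitude/longitude box in degrees North and degrees East."""
    lat_min: float
    lat_max: float
    lon_min: float
    lon_max: float

    def contains(self, lat_n: float, lon_e: float) -> bool:
        # Assumption: box edges are inclusive; the excerpt does not say.
        return (self.lat_min <= lat_n <= self.lat_max
                and self.lon_min <= lon_e <= self.lon_max)


# Boxes as quoted in the excerpt.
MONITORING_AREA = Box(0.0, 36.0, 100.0, 140.0)         # 0-36N, 100-140E
AREA_OF_RESPONSIBILITY = Box(10.0, 30.0, 105.0, 125.0)  # 10-30N, 105-125E


def dvorak_interval_hours(lat_n: float, lon_e: float) -> Optional[int]:
    """Return the analysis interval in hours, or None outside both boxes."""
    if AREA_OF_RESPONSIBILITY.contains(lat_n, lon_e):
        return 3  # additional analyses make the effective interval 3-hourly
    if MONITORING_AREA.contains(lat_n, lon_e):
        return 6
    return None


if __name__ == "__main__":
    for position in [(20.0, 115.0), (5.0, 130.0), (40.0, 130.0)]:
        print(position, dvorak_interval_hours(*position))
```

The inner box is checked first because it lies entirely within the outer one, so a position inside the area of responsibility would otherwise match the 6-hourly rule.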

ASIA and the PACIFIC Weekly Regional Humanitarian Snapshot 31 August - 6 September 2021

ASIA AND THE PACIFIC Weekly Regional Humanitarian Snapshot, 31 August - 6 September 2021

NEPAL

Unusually heavy rainfall in a short period of time on September 6 has led to flooding in the city of Kathmandu, inundating more than 382 houses and several roads, local marketplaces, and parks. About 105 mm of rainfall was recorded in just three hours in Kathmandu. At least 138 people have been rescued by security forces. The most affected households are those living in informal settlements in low-lying areas of the city. The Bagmati River that flows through the city was at danger level, but the water level has receded. More information from damage assessments is yet to be available.

BANGLADESH

Heavy rainfall has caused flooding in northern Bangladesh since the last week of August. According to the National Disaster Response Coordination Centre, the most affected districts include Kurigram, Lalmonirhat, Tangail, Rajbari, Sartiatpur and Bogura. More than 40,000 households were affected, 7759 hectares of agricultural land have been damaged, and more than 200 houses have been destroyed, according to Government reports. The Ministry of Disaster Management and Relief has allocated rice and cash support to affected districts, while local and national organizations have provided food relief items

[Map of Asia and the Pacific with a La Niña/El Niño level legend (Inactive, Watch, Alert). Source: Commonwealth of Australia Bureau of Meteorology]

From the Red River to the Gulf of Tonkin : Dynamics and Sediment Transport Along the Estuary-Coastal Area Continuum (Violaine Piton)

From the Red River to the Gulf of Tonkin : dynamics and sediment transport along the estuary-coastal area continuum

Violaine Piton

To cite this version: Violaine Piton. From the Red River to the Gulf of Tonkin : dynamics and sediment transport along the estuary-coastal area continuum. Oceanography. Université Paul Sabatier - Toulouse III, 2019. English. NNT : 2019TOU30235. tel-02957680

HAL Id: tel-02957680, https://tel.archives-ouvertes.fr/tel-02957680. Submitted on 5 Oct 2020.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

THESIS submitted for the degree of DOCTORATE OF THE UNIVERSITÉ DE TOULOUSE

Awarded by: Université Toulouse 3 Paul Sabatier (UT3 Paul Sabatier)

Presented and defended on 16/12/2019 by: Violaine Piton

Du Fleuve Rouge au Golfe du Tonkin: dynamique et transport sédimentaire le long du continuum estuaire-zone côtière

JURY

| Name | Affiliation | Role |
| --- | --- | --- |
| ISABELLE BRENON | LIENSs | Reviewer |
| SABINE CHARMASSON | IRSN | Reviewer |
| ALDO SOTTOLICHIO | EPOC | Reviewer |
| ROBERT LAFITE | M2C | Examiner |
| ROMARIC VERNEY | IFREMER | Examiner |
| JEAN-MICHEL MARTINEZ | GET | Examiner |

CATHERINE