Brno University of Technology Evaluation of Target

Recommended publications

Spring 2018 Section 004 Syllabus

George Mason University College of Education and Human Development School of Recreation, Health, and Tourism Physical Activity for Lifetime Wellness RECR 136-005 Pistol Marksmanship (1) 1 credit Spring 2018 Wednesdays 3- 5:00 PM Location: NRA Shooting Range (NRA HQ) Faculty Name: Matthew Sharpe -NRA Instructor # 15184715 -DCJS ID # 99-427481 Office Hours: By Appointment Office Location: NRA Range, Fairfax, VA Email: [email protected] Phone: 703-907-9852 Prerequisites None Participants must be 18 years of age before the class begins. Fees This course requires a (lab/course) fee of ($180.00 payable at registration and $10 for range fees payable to the NRA range). Course Description Introduces students to marksmanship skills in target shooting. Increases students' knowledge of firearm safety, international target shooting, equipment care and maintenance, and shooting sports competition methods and techniques. Course Overview Students will be exposed to all aspects of precision Handgun shooting. Each course participant will, upon successful completion of the course, demonstrate knowledge and marksmanship competencies in: 1. Pistol identification, firearm safety, fundamental care of a handgun; 2. Precision handgun shooting fundamental techniques and competitive techniques; 3. Physical, mental and environmental factors in the competitive pistol shooting sports. During the first 2 weeks of instruction, the instructor will conduct an individualized diagnostic session for each student to determine their level of comfort, knowledge and ability with a pistol. Utilizing the written material and instructor demonstrations as a base of knowledge, the student will learn the discipline required to experience true competitive target pistol shooting. The instructor will coach the student through presentations, demonstrations and “live” fire exercises (on an approved pistol range) that will teach the student the appropriate techniques involved in competitive Bullseye shooting. -

The Winchester/NRA Marksmanship Qualification Programs Has Something Fun for the Whole Family & Kids of All Ages!

The Winchester/NRA Marksmanship Qualification Programs Has Something Fun for the Whole Family & Kids of All Ages! Shooting Sports — A Sport For Life!

Eighth Edition–February 2010. Copyright 2004, National Rifle Association of America. All rights reserved. Printed in the United States of America. This book may not be reprinted or reproduced in whole or in part by mechanical means, photocopying, electronic reproduction, scanning, or any other means without prior written permission. For more information, write to: Education & Training Division, National Rifle Association of America, 11250 Waples Mill Road, Fairfax, VA 22030. To join the NRA today, or for additional information regarding NRA Membership, call (800) NRA-3888 or visit online at www.nrahq.org. Your membership dues may be charged to American Express, Discover, Mastercard, or Visa. NR 40810 EQ 09525

Winchester/NRA Marksmanship Qualification Program: Table of Contents
Marksmanship Qualification Program, page 2
Just for Women—Women on Target®, page 4
Pistol Qualification, page 6
Conventional “Bullseye” Pistol Qualification ...

Texas 4-H Shooting Sports Project Pistol Rules (2014)

TEXAS 4-H NATURAL RESOURCES PROGRAM Texas 4-H Shooting Sports Project Pistol Rules (2014)

The national governing body for the Pistol events rules is the NRA (http://compete.nra.org/official-nra-rule-books.aspx); International Pistol Rules - air pistol events, Conventional Pistol Rules – smallbore pistol bullseye events, and Silhouette Pistol Rules – smallbore pistol silhouette events. These rules are used with modifications in 4-H activities and events as stated below. The following rule set supersedes all other rules where differences exist. General 4-H Shooting Sports Project Rules cover all shooting disciplines. In 4-H events, event specific rules and regulations supersede other governing body rules where differences exist. Coaches, parents, and 4-H shooters are encouraged to be familiar with each of these rule sets.

3. EQUIPMENT AND AMMUNITION

3.2 Smallbore hunter's pistol - A factory-available .22 caliber rimfire pistol chambered for .22 Short, .22 Long or .22 Long Rifle cartridges, weighing no more than 5 pounds and having a safe trigger and a barrel no longer than 12 inches measured from breech face to muzzle may be used in smallbore hunter's pistol competitions. The pistol may be of any action type except bolt action pistols which are restricted to an unlimited class. They must be in original factory condition without modification in all respects except:

a. External finish - External finish and embellishments including engraving, inlays and decorative or protective finishes may be added.

b. Sights - Any sights may be used, including telescopic, metallic or other optical sights. The sight radius on metallic sights may not exceed 15 inches, and the center of the sighting plane may not be more than 2 inches above the barrel or receiver.

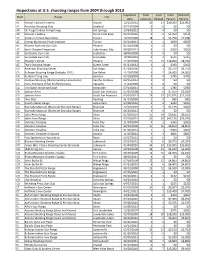

Inspections at U.S. Shooting Ranges from 2004 Through 2013

Inspections at U.S. shooting ranges from 2004 through 2013

| State | Range | City | Inspection Date | Total violations | Lead Related | Initial Penalty | Reduced Penalty |
|---|---|---|---|---|---|---|---|
| AL | Hoover Tactical Firearms | Hoover | 12/12/2012 | 20 | 17 | $39,200 | $22,000 |
| AL | Precision Shooting Club | Saraland | 07/23/2004 | 0 | 0 | $0 | $0 |
| AR | On Target Indoor Firing Range | Hot Springs | 10/24/2013 | 5 | 4 | $0 | $0 |
| AR | Shooter's Gallery | North Little Rock | 03/14/2005 | 9 | 6 | $4,050 | $450 |
| AZ | Jensen's Arizona Sportsman | Tucson | 10/26/2005 | 18 | 3 | $3,750 | $2,438 |
| AZ | Ocongi Marksman Pistol Institute | Tucson | 02/12/2013 | 1 | 1 | $600 | $600 |
| AZ | Phoenix Rod and Gun Club | Phoenix | 01/29/2008 | 0 | 0 | $0 | $0 |
| AZ | Sam's Shooter Emporium | Lake Havasu City | 09/06/2012 | 6 | 5 | $300 | $300 |
| AZ | Scottsdale Gun Club | Scottsdale | 08/06/2009 | 0 | 0 | $0 | $0 |
| AZ | Scottsdale Gun Club | Scottsdale | 10/18/2010 | 2 | 2 | $525 | $525 |
| AZ | Shooter's World | Phoenix | 11/03/2004 | 21 | 15 | $18,000 | $8,250 |
| AZ | Ted's Shooting Range | Queen Creek | 01/23/2012 | 3 | 2 | $300 | $300 |
| CA | American Shooting Center | San Diego | 07/19/2006 | 5 | 1 | $3,750 | $3,750 |
| CA | Bullseye Shooting Range (Savbyko 1911) | San Rafael | 11/18/2008 | 6 | 2 | $4,000 | $4,000 |
| CA | Burbank Firing Line | Burbank | 02/19/2004 | 5 | 4 | $740 | $740 |
| CA | Cordova Shooting (Marksmanship Consultants) | Rancho Cordova | 03/15/2012 | 0 | 0 | $0 | $0 |
| CA | Guns, Fishing & Other Stuff (Morcorp) | Vacaville | 11/04/2009 | 0 | 0 | $0 | $0 |
| CA | Iron Sights Shooting Range | Oceanside | 07/14/2011 | 3 | 0 | $780 | $780 |
| CA | Jackson Arms | South San Francisco | 05/21/2008 | 6 | 6 | $7,350 | $2,100 |
| CA | Jackson Arms | South San Francisco | 05/01/2013 | 15 | 15 | $22,675 | $11,010 |
| CA | Nice Shot | Redding | 01/11/2005 | 1 | 0 | $85 | $85 |
| CA | Reed's Indoor Range | Santa Clara | 04/28/2010 | 2 | 2 | $330 | ... |

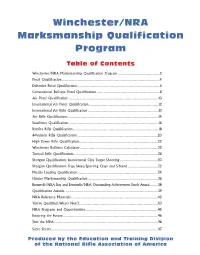

Winchester/NRA Marksmanship Qualification Program

Winchester/NRA Marksmanship Qualification Program: Table of Contents
Winchester/NRA Marksmanship Qualification Program, page 2
Pistol Qualification, page 4
Defensive Pistol Qualification, page 6
Conventional Bullseye Pistol Qualification, page 8
Air Pistol Qualification, page 10
International Air Pistol Qualification, page 12
International Air Rifle Qualification, page 13
Air Rifle Qualification, page 14
Smallbore Qualification, page 16
Rimfire Rifle Qualification, page 18
4-Position Rifle Qualification, page 20
High Power Rifle Qualification, page 22

Art of Shooting Art of Shooting an Introduction to Target Shooting with Rifle, Pistol, Shotgun and Airgun

Art of Shooting Art of Shooting An introduction to target shooting with rifle, pistol, shotgun and airgun Prof. Philip Treleaven Preface This handbook is a ‘primer’ for the new target shooter: introducing the firearms, shooting disciplines and firearm technology, and drawing on the expertise of Bisley, the home of British and Commonwealth target shooting. For someone interested in taking up target shooting, it is surprisingly difficult to find out what are the different shooting disciplines (or to give them their ISSF name Events), and perhaps more importantly what’s available in their area. Naturally you won’t find Shooting for Dummies in the local bookshop, but there are some excellent books and web sites, especially in the United States. Most cater for the experienced competitor in a specific discipline, like Smallbore or Benchrest, rather than the new shooter. I am fortunate in that I live 40 minutes drive from the world famous Bisley Camp, the home of British and Commonwealth shooting (cf. Camp Perry in America). The great thing about shooting at Bisley is the wealth of knowledge and experience available covering all aspects of the sport. People who have shot in the Olympics and Commonwealth Games, national champions for every shooting discipline, experts in ballistics and hand loading, gunsmiths and armourers … and national coaches. Truly a university of shooting – akin to Cambridge or Harvard! However, even at Bisley it is a daunting challenge to find out what shooting disciplines are available, and who to ask for advice. It’s like everyone else in the shooting world knows everything about shooting, marksmanship, ballistics and hand loading, and you the novice know nothing. -

Long Range Planning Survey of BRC Members.Pdf

Long Range Planning Committee (SurveyMonkey)

Q1. What age group do you fit in? (Answered: 647, Skipped: 1)

| Answer choices | Responses (%) | Responses (count) |
|---|---|---|
| 18-24 years | 0.15% | 1 |
| 25-39 years | 9.74% | 63 |
| 40-55 years | 34.31% | 222 |
| Over 55 yrs | 55.80% | 361 |
| Total | | 647 |

Q2. How long have you been a member of the Beloit Rifle Club? (Answered: 647, Skipped: 1)

| Answer choices | Responses (%) | Responses (count) |
|---|---|---|
| Less than 5 years | 49.00% | 317 |
| 5-10 years | 22.26% | 144 |
| More than 10 years | 28.75% | 186 |
| Total | | 647 |

Q3. How often do you use our Ranges? (Answered: 647, Skipped: 1)

| Answer choices | Responses (%) | Responses (count) |
|---|---|---|
| At least 1 time per week | 14.68% | 95 |
| 2-3 times per month | 30.76% | 199 |
| 1 time per month | 29.52% | 191 |
| not often | 25.04% | 162 |
| Total | | 647 |

Q4. Which ranges do you usually use? Mark all that apply. (Answered: 647, Skipped: 1)

| Answer choices | Responses (%) | Responses (count) |
|---|---|---|
| Archery | 17.16% | 111 |
| General / Main Range | 73.88% | 478 |
| Trap | 25.50% | 165 |
| Silhouette | 17.47% | 113 |
| Outdoor Pistol | 31.53% | 204 |
| Black Powder | 6.80% | 44 |
| Indoor Range | 66.62% | 431 |
| 100-500 Meter | 40.34% | 261 |
| Cowboy | 15.92% | 103 |
| Defense Range | 43.74% | 283 |
| Total Respondents | | 647 |

Q5. Would you be interested in training seminars? Mark those you would be interested in.

Cowboy Heaven

See our EXCITING Mercantile section (starting on page 91). November 2001, Cowboy Chronicle, Page 1.

The Cowboy Chronicle: The Monthly Journal of the Single Action Shooting Society®. Vol. 22 No. 7, July 2009. © Single Action Shooting Society, Inc.

COWBOY HEAVEN, The Last Stand At Chimney Rock • SASS 2008 Western Regional! October 9-12, 2008. By Frederick Jackson Turner, SASS #28271. Photos by Hoss Hall, SASS #15689. See HIGHLIGHTS on page 73.

Lucerne Valley, CA – I’m sitting in a scoring shack that would double as a pretty decent hotel lounge in some parts of the country. The 2008 SASS Western Regional is over, folks are pulling up stakes, and headed back home after days of revelry. Everyone is headed for home, ready to take a shower, maybe pour out a good libation of some sort, and mull over the events of a long, satisfying weekend. Except no one is in a hurry to leave!

I’m basking in the warm afterglow of a great match with Ella Watson and Kentucky Gal, letting the last of the day slide by, when ...

... an 1880’s southwestern cowtown! Cowboys, bankers, train conductors, gentlemen, scoundrels, and their ladies, amble down a long boardwalk, where real buildings line both sides of the street. Down the boardwalk, you’ll run into the appropriately titled “Twitchy Finger’s Saloon.” It’s a fully functional old time saloon—complete with working piano—that also happens to be Stage Three of the match! It would also become the site of regular late night gatherings as the weekend went on.

Cowboys started pulling into town by the middle of the week.

July 2021 13-14 NEWS Vol

THE COWBOY CHRONICLE, JULY 2021, VOL. 2 #23. Published by The Wild Bunch. Single Action Shooting Society®, PO Box 2340, Moriarty, NM 87035. 505-843-1320 • Fax 877-770-8687. www.sassnet.com. © 2021 All rights reserved.

CONTENTS
3-11 COVER FEATURE: END of TRAIL — A Look Back and a Peek at the Future
12 EDITORIALS: Skinny’s Soapbox
13-14 NEWS: SASS Western Regional Update
16-19 COSTUMING CORNER: Memories of Past END of TRAIL Costumes
20-25 ANNUAL MATCHES: Legends 2021 — Four Corners Regional and Wild Bunch Territorial Championships; Range War 2020 — Michigan State Championship
26-31 GUNS & GEAR: Dispatches From Camp Baylor — Everything About Black Powder; Dillon 650 Primer Punch Tool
32-36 HISTORY: Dirty Deals — The Compromise of 1877; Little Known Famous People Way Out West — Porter Rockwell
37-42 PRODUCT REVIEW: Cimarron Firearms/Uberti 1866 .44-40 Carbine
44 PROFILES: Essay by 2020 SASS Scholarship Recipient Smoke N’ Ash
45-47 TRAILMARKERS: Hoss Aimright, Icelady, Iron Horse Jim, Lobo Joe

EDITORIAL STAFF
EDITOR-IN-CHIEF: Skinny
MANAGING EDITOR: Misty Moonshine
EDITORS EMERITUS: Tex & Cat Ballou
ADVERTISING MANAGER: Square Deal Jim, 410-531-5456 | [email protected]
GRAPHIC DESIGN: Mac Daddy

SASS® Trademarks: SASS®, Single Action Shooting Society®, The Cowboy Chronicle™, Cowboy Action Shooting™, END of TRAIL™, The World Championship of Cowboy Action Shooting™, Founders Ranch™, SASS Western Heritage Museum & Cowboy Action Shooting Hall Of Fame™, SASS Scholarship Foundation™, Wild Bunch™, Wild Bunch Action Shooting™ are all trademarks of The Single Action Shooting Society®. Any use or reproduction of these marks without the express written permission of SASS® is strictly prohibited.

Innovating Sports Shooting with Computer Vision

http://excel.fit.vutbr.cz Innovating Sports Shooting With Computer Vision. Jana Gregorová*

Abstract: Bullseye shooting is a shooting sports discipline in which the shooter stands in one place and shoots at a static paper target. The shooter’s goal is to land as many hits in the centre of the target as possible. The distance between the shooter and the target usually ranges from 10 m to 300 m. At these distances, the shooter cannot see exactly where their hits land unless some kind of auxiliary equipment is used. To calculate the score, all shooting must stop while the shooter walks out to the target to inspect it up close. For a new round, the shooter either has to replace the target with a new one or cover the existing hits with patches. The goal of this work is to find a way to calculate the shooter’s score automatically. One possible approach is to take a video of the target and process it so that new hits can be detected and their score calculated. The resulting image is then shown to the shooter on a nearby screen. The result of this work is a solution ready for commercial distribution, aimed at both individual shooters and commercial shooting ranges. It consists of a camera setup and an application that displays the video from the camera and evaluates the shooter’s score using computer vision. The solution significantly improves the shooter’s comfort and the effectiveness of bullseye shooting training.
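The abstract's idea of detecting new hits in video frames and scoring them can be illustrated with a minimal Python sketch. It is not the author's implementation. It assumes grayscale frames given as nested lists of 0-255 intensities, a hit that shows up as a pixel becoming darker than in the previous frame, and a target with concentric rings of equal width in pixels. The names `detect_new_hits`, `score_hit`, `threshold` and `ring_width_px` are hypothetical, and real scoring rules (for example, a hole that touches a ring line) are ignored.

```python
import math


def detect_new_hits(prev_frame, curr_frame, threshold=60):
    """Return (row, col) of pixels that got darker by more than `threshold`.

    Assumption: a new bullet hole appears as a dark spot that was not
    present in the previous frame (simple frame differencing).
    """
    hits = []
    for r, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for c, (before, after) in enumerate(zip(prev_row, curr_row)):
            if before - after > threshold:
                hits.append((r, c))
    return hits


def score_hit(hit, center, ring_width_px, max_score=10):
    """Score a hit by its distance from the target centre.

    Assumption: rings are concentric and equally wide; each full ring
    width away from the centre costs one point, down to zero.
    """
    distance = math.dist(hit, center)
    rings_out = int(distance // ring_width_px)
    return max(max_score - rings_out, 0)


# Tiny 5x5 example: a bright target and one new dark pixel at (2, 3).
previous = [[200] * 5 for _ in range(5)]
current = [row[:] for row in previous]
current[2][3] = 20

new_hits = detect_new_hits(previous, current)
scores = [score_hit(h, center=(2, 2), ring_width_px=1) for h in new_hits]
```

In practice the frames would come from the camera, and the target centre and ring width would have to be calibrated from the image, so the constants above are placeholders only.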