Diagonalization


Recommended publications

MATH 2370, Practice Problems

MATH 2370, Practice Problems. Kiumars Kaveh.

Problem: Prove that an $n \times n$ complex matrix $A$ is diagonalizable if and only if there is a basis consisting of eigenvectors of $A$.

Problem: Let $A : V \to W$ be a one-to-one linear map between two finite-dimensional vector spaces $V$ and $W$. Show that the dual map $A' : W' \to V'$ is surjective.

Problem: Determine whether the curve $\{(x, y) \in \mathbb{R}^2 \mid x^2 + y^2 + xy = 10\}$ is an ellipse, a hyperbola, or a union of two lines.

Problem: Show that if a nilpotent matrix is diagonalizable then it is the zero matrix.

Problem: Let $P$ be a permutation matrix. Show that $P$ is diagonalizable. Show that if $\lambda$ is an eigenvalue of $P$ then for some integer $m > 0$ we have $\lambda^m = 1$ (i.e. $\lambda$ is an $m$-th root of unity). Hint: Note that $P^m = I$ for some integer $m > 0$.

Problem: Show that if $\lambda$ is an eigenvalue of an orthogonal matrix $A$ then $|\lambda| = 1$.

Problem: Take a vector $v \in \mathbb{R}^n$ and let $H$ be the hyperplane orthogonal to $v$. Let $R : \mathbb{R}^n \to \mathbb{R}^n$ be the reflection with respect to the hyperplane $H$. Prove that $R$ is a diagonalizable linear map.

Problem: Prove that if $\lambda_1, \lambda_2$ are distinct eigenvalues of a complex matrix $A$ then the intersection of the generalized eigenspaces $E_{\lambda_1}$ and $E_{\lambda_2}$ is zero (this is part of the Spectral Theorem).

Problem: Let $H = (h_{ij})$ be a $2 \times 2$ Hermitian matrix. Use the Minimax Principle to show that if $\lambda_1 \le \lambda_2$ are the eigenvalues of $H$ then $\lambda_1 \le h_{11} \le \lambda_2$.
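The hint in the permutation-matrix problem ($P^m = I$ for some $m > 0$) can be checked numerically on a small case. The following is only an illustrative sketch, not part of the problem set: the helper names `permutation_matrix`, `matmul`, and `order_of` are made up for this example, and the permutation used is an arbitrary choice.

```python
def permutation_matrix(perm):
    """Matrix P with P e_j = e_{perm[j]} (column j has its 1 in row perm[j])."""
    n = len(perm)
    return [[1 if perm[j] == i else 0 for j in range(n)] for i in range(n)]


def matmul(X, Y):
    """Plain product of two square integer matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def order_of(P):
    """Smallest m > 0 with P^m = I; it exists because the permutation group is finite."""
    n = len(P)
    identity = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    power, m = P, 1
    while power != identity:
        power = matmul(power, P)
        m += 1
    return m


# A 3-cycle together with a 2-cycle on five points (arbitrary example).
P = permutation_matrix([1, 2, 0, 4, 3])
m = order_of(P)
```

Since $P^m = I$, any eigenvalue $\lambda$ with eigenvector $x$ satisfies $\lambda^m x = P^m x = x$, which is the step the hint points to.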

Section 5 Summary

Section 5 summary. Brian Krummel, November 4, 2019.

1 Terminology

Eigenvalue; Eigenvector; Eigenspace; Characteristic polynomial; Characteristic equation; Similar matrices; Similarity transformation; Diagonalizable; Matrix of a linear transformation relative to a basis; System of differential equations; Solution to a system of differential equations; Solution set of a system of differential equations; Fundamental solutions; Initial value problem; Trajectory; Decoupled system of differential equations; Repeller / source; Attractor / sink; Saddle point; Spiral point; Ellipse trajectory.

2 How to find eigenvalues, eigenvectors, and a similar matrix

1. First we find the eigenvalues of $A$ by finding the roots of the characteristic equation $\det(A - \lambda I) = 0$. Note that if $A$ is upper triangular (or lower triangular), then the eigenvalues $\lambda$ of $A$ are just the diagonal entries of $A$, and the multiplicity of each eigenvalue $\lambda$ is the number of times $\lambda$ appears as a diagonal entry of $A$.
2. For each eigenvalue $\lambda$, find a basis of eigenvectors corresponding to $\lambda$ by row reducing $A - \lambda I$ and writing the solution of $(A - \lambda I)x = 0$ in vector parametric form. Note that for a general $n \times n$ matrix $A$ and any eigenvalue $\lambda$ of $A$, $\dim(\text{eigenspace corresponding to } \lambda) = \#\,\text{free variables of } (A - \lambda I) \le \text{algebraic multiplicity of } \lambda$.
3. Assuming $A$ has only real eigenvalues, check whether $A$ is diagonalizable. If $A$ has $n$ distinct eigenvalues, then $A$ is definitely diagonalizable. More generally, if $\dim(\text{eigenspace corresponding to } \lambda) = \text{algebraic multiplicity of } \lambda$ for each eigenvalue $\lambda$ of $A$, then $A$ is diagonalizable and $\mathbb{R}^n$ has a basis consisting of $n$ eigenvectors of $A$.
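For a $2 \times 2$ real matrix the three steps above collapse to a few lines of arithmetic, which the following sketch illustrates. It is a minimal example under stated assumptions, not the summary's own code: the function name `classify_2x2` and its return strings are invented here, and the eigenspace test relies on the fact that for a $2 \times 2$ matrix the null space of $A - \lambda I$ is two-dimensional exactly when $A - \lambda I$ is the zero matrix.

```python
from fractions import Fraction


def classify_2x2(a, b, c, d):
    """Classify the real 2x2 matrix [[a, b], [c, d]] using the three steps."""
    a, b, c, d = (Fraction(x) for x in (a, b, c, d))
    trace, det = a + d, a * d - b * c
    # Step 1: det(A - lambda*I) = lambda^2 - trace*lambda + det,
    # so the discriminant decides what the roots look like.
    disc = trace * trace - 4 * det
    if disc < 0:
        return "no real eigenvalues"
    if disc > 0:
        # Step 3, easy case: two distinct real eigenvalues.
        return "diagonalizable (distinct eigenvalues)"
    # Repeated eigenvalue lam with algebraic multiplicity 2.
    lam = trace / 2
    # Step 2: the eigenspace has dimension 2 only if A - lam*I is zero.
    if (a - lam, b, c, d - lam) == (0, 0, 0, 0):
        return "diagonalizable (scalar matrix)"
    return "not diagonalizable (eigenspace too small)"
```

For instance, `classify_2x2(1, 1, 0, 1)` reports a non-diagonalizable matrix: the upper triangular matrix has the eigenvalue 1 twice on its diagonal, but $A - I$ has only one free variable.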

Diagonalizable Matrix - Wikipedia, the Free Encyclopedia

Diagonalizable matrix - Wikipedia, the free encyclopedia. http://en.wikipedia.org/wiki/Matrix_diagonalization

In linear algebra, a square matrix $A$ is called diagonalizable if it is similar to a diagonal matrix, i.e., if there exists an invertible matrix $P$ such that $P^{-1}AP$ is a diagonal matrix. If $V$ is a finite-dimensional vector space, then a linear map $T : V \to V$ is called diagonalizable if there exists a basis of $V$ with respect to which $T$ is represented by a diagonal matrix. Diagonalization is the process of finding a corresponding diagonal matrix for a diagonalizable matrix or linear map.[1] A square matrix which is not diagonalizable is called defective.

Diagonalizable matrices and maps are of interest because diagonal matrices are especially easy to handle: their eigenvalues and eigenvectors are known, and one can raise a diagonal matrix to a power by simply raising the diagonal entries to that same power. Geometrically, a diagonalizable matrix is an inhomogeneous dilation (or anisotropic scaling): it scales the space, as does a homogeneous dilation, but by a different factor in each direction, determined by the scale factors on each axis (diagonal entries).

Contents: 1 Characterisation; 2 Diagonalization; 3 Simultaneous diagonalization; 4 Examples; 4.1 Diagonalizable matrices; 4.2 Matrices that are not diagonalizable; 4.3 How to diagonalize a matrix; 4.3.1 Alternative Method; 5 An application; 5.1 Particular application; 6 Quantum mechanical application; 7 See also; 8 Notes; 9 References; 10 External links.

Characterisation. The fundamental fact about diagonalizable maps and matrices is expressed by the following: an $n$-by-$n$ matrix $A$ over the field $F$ is diagonalizable if and only if the sum of the dimensions of its eigenspaces is equal to $n$, which is the case if and only if there exists a basis of $F^n$ consisting of eigenvectors of $A$.
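As a small worked illustration of the characterisation (an example chosen here, not quoted from the article), consider a standard defective matrix. Its only eigenvalue is $0$ with algebraic multiplicity 2, but the eigenspace is one-dimensional, so the eigenspace dimensions sum to $1 < 2$ and no basis of eigenvectors exists:

```latex
A = \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}, \qquad
\det(A - \lambda I) = \lambda^2, \qquad
\ker(A - 0 \cdot I) = \operatorname{span}\left\{ \begin{pmatrix} 1 \\ 0 \end{pmatrix} \right\}, \qquad
\dim \ker(A) = 1 < 2 .
```

By contrast, any diagonal matrix has the standard basis vectors as eigenvectors, so its eigenspace dimensions always sum to $n$.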

Math 1553 Introduction to Linear Algebra

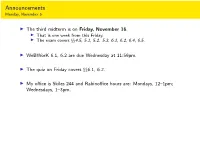

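Announcements, Monday, November 5

- The third midterm is on Friday, November 16.
- That is one week from this Friday.
- The exam covers §§4.5, 5.1, 5.2, 5.3, 6.1, 6.2, 6.4, 6.5.
- WeBWorK 6.1, 6.2 are due Wednesday at 11:59pm.
- The quiz on Friday covers §§6.1, 6.2.
- My office is Skiles 244 and Rabinoffice hours are: Mondays, 12-1pm; Wednesdays, 1-3pm.

Section 6.4: Diagonalization

Motivation: difference equations. Many real-world linear algebra problems have the form

$$v_1 = Av_0, \quad v_2 = Av_1 = A^2 v_0, \quad v_3 = Av_2 = A^3 v_0, \quad \ldots, \quad v_n = Av_{n-1} = A^n v_0.$$

This is called a difference equation. Our toy example about rabbit populations had this form. The question is, what happens to $v_n$ as $n \to \infty$?

- Taking powers of diagonal matrices is easy!
- Taking powers of diagonalizable matrices is still easy!
- Diagonalizing a matrix is an eigenvalue problem.

$$D = \begin{pmatrix} 2 & 0 \\ 0 & -1 \end{pmatrix}, \quad D^2 = \begin{pmatrix} 4 & 0 \\ 0 & 1 \end{pmatrix}, \quad D^3 = \begin{pmatrix} 8 & 0 \\ 0 & -1 \end{pmatrix}, \quad \ldots, \quad D^n = \begin{pmatrix} 2^n & 0 \\ 0 & (-1)^n \end{pmatrix}.$$

$$D = \begin{pmatrix} -1 & 0 & 0 \\ 0 & \frac{1}{2} & 0 \\ 0 & 0 & \frac{1}{3} \end{pmatrix}, \quad D^2 = \begin{pmatrix} 1 & 0 & 0 \\ 0 & \frac{1}{4} & 0 \\ 0 & 0 & \frac{1}{9} \end{pmatrix}, \quad D^3 = \begin{pmatrix} -1 & 0 & 0 \\ 0 & \frac{1}{8} & 0 \\ 0 & 0 & \frac{1}{27} \end{pmatrix}, \quad \ldots, \quad D^n = \begin{pmatrix} (-1)^n & 0 & 0 \\ 0 & \frac{1}{2^n} & 0 \\ 0 & 0 & \frac{1}{3^n} \end{pmatrix}.$$

Powers of Diagonal Matrices. If $D$ is diagonal, then $D^n$ is also diagonal; its diagonal entries are the $n$th powers of the diagonal entries of $D$.

$$(CDC^{-1})(CDC^{-1}) = CD(C^{-1}C)DC^{-1} = CDIDC^{-1} = CD^2C^{-1}$$

$$(CDC^{-1})(CD^2C^{-1}) = CD(C^{-1}C)D^2C^{-1} = CDID^2C^{-1} = CD^3C^{-1}$$

and in general $(CDC^{-1})^n = CD^nC^{-1}$. This gives a closed formula in terms of $n$ that is easy to compute:

$$\begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix} \begin{pmatrix} 2^n & 0 \\ 0 & (-1)^n \end{pmatrix} \frac{1}{-2} \begin{pmatrix} -1 & -1 \\ -1 & 1 \end{pmatrix} = \frac{1}{2} \begin{pmatrix} 2^n + (-1)^n & 2^n + (-1)^{n+1} \\ 2^n + (-1)^{n+1} & 2^n + (-1)^n \end{pmatrix}.$$

Powers of Matrices that are Similar to Diagonal Ones. What if $A$ is not diagonal? Example: let $A$ be the matrix with first row $(1/2,\ 3/2)$ ...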
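The identity $(CDC^{-1})^n = CD^nC^{-1}$ used in these slides can be sanity-checked with exact rational arithmetic. The sketch below uses the $C$ and $D$ that appear in the closed formula; the function names and the use of Python's `fractions` module are choices made for this illustration only.

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of two 2x2 matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


# C and C^{-1} as they appear in the closed formula: C^{-1} = (1/-2) [[-1, -1], [-1, 1]].
C = [[Fraction(1), Fraction(1)], [Fraction(1), Fraction(-1)]]
C_inv = [[Fraction(1, 2), Fraction(1, 2)], [Fraction(1, 2), Fraction(-1, 2)]]


def power_via_diagonalization(n):
    """Compute C D^n C^{-1}, where D = diag(2, -1), by powering only the diagonal."""
    D_n = [[Fraction(2) ** n, Fraction(0)], [Fraction(0), Fraction(-1) ** n]]
    return matmul(matmul(C, D_n), C_inv)


def power_by_repeated_multiplication(n):
    """Compute A^n the slow way, with A = C D C^{-1}, for comparison."""
    A = power_via_diagonalization(1)
    result = [[Fraction(1), Fraction(0)], [Fraction(0), Fraction(1)]]
    for _ in range(n):
        result = matmul(result, A)
    return result
```

The diagonalized version needs two matrix products regardless of $n$, whereas repeated multiplication needs $n$ of them; both agree entry by entry with the closed formula $\frac{1}{2}\left(2^n + (-1)^n\right)$ on the diagonal.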

Contents 5 Eigenvalues and Diagonalization

Linear Algebra (part 5): Eigenvalues and Diagonalization (by Evan Dummit, 2017, v. 1.50)

Contents: 5 Eigenvalues and Diagonalization; 5.1 Eigenvalues, Eigenvectors, and The Characteristic Polynomial; 5.1.1 Eigenvalues and Eigenvectors; 5.1.2 Eigenvalues and Eigenvectors of Matrices; 5.1.3 Eigenspaces; 5.2 Diagonalization; 5.3 Applications of Diagonalization; 5.3.1 Transition Matrices and Incidence Matrices; 5.3.2 Systems of Linear Differential Equations; 5.3.3 Non-Diagonalizable Matrices and the Jordan Canonical Form.

5 Eigenvalues and Diagonalization. In this chapter, we will discuss eigenvalues and eigenvectors: these are characteristic values (and characteristic vectors) associated to a linear operator $T : V \to V$ that will allow us to study $T$ in a particularly convenient way. Our ultimate goal is to describe methods for finding a basis for $V$ such that the associated matrix for $T$ has an especially simple form. We will first describe diagonalization, the procedure for (trying to) find a basis such that the associated matrix for $T$ is a diagonal matrix, and characterize the linear operators that are diagonalizable. Then we will discuss a few applications of diagonalization, including the Cayley-Hamilton theorem that any matrix satisfies its characteristic polynomial, and close with a brief discussion of non-diagonalizable matrices.

5.1 Eigenvalues, Eigenvectors, and The Characteristic Polynomial. Suppose that we have a linear transformation $T : V \to V$ from a (finite-dimensional) vector space $V$ to itself. We would like to determine whether there exists a basis $\beta$ of $V$ such that the associated matrix $[T]_\beta^\beta$ is a diagonal matrix.

Diagonalization of Matrices

DIAGONALIZATION OF MATRICES. A MAJOR QUALIFYING PROJECT REPORT SUBMITTED TO THE FACULTY OF WORCESTER POLYTECHNIC INSTITUTE IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF BACHELOR OF SCIENCE. WRITTEN BY THEOPHILUS MARKS. APPROVED BY: PADRAIG Ó CATHÁIN. MAY 5, 2021. THIS REPORT REPRESENTS THE WORK OF WPI UNDERGRADUATE STUDENTS SUBMITTED TO THE FACULTY AS EVIDENCE OF COMPLETION OF A DEGREE REQUIREMENT.

Abstract. This thesis aims to study criteria for diagonalizability over finite fields. First we review basic and major results in linear algebra, including the Jordan Canonical Form, the Cayley-Hamilton theorem, and the spectral theorem; the last of which is an important criterion for matrix diagonalizability over fields of characteristic 0, but fails in finite fields. We then calculate the number of diagonalizable and non-diagonalizable $2 \times 2$ matrices and $2 \times 2$ symmetric matrices with repeated and distinct eigenvalues over finite fields. Finally, we look at results by Brouwer, Gow, and Sheekey for enumerating symmetric nilpotent matrices over finite fields.

Summary. (1) In Chapter 1 we review basic linear algebra including eigenvectors and eigenvalues, diagonalizability, generalized eigenvectors and eigenspaces, and the Cayley-Hamilton theorem (we provide an alternative proof in the appendix). We explore the Jordan canonical form and the rational canonical form, and prove some necessary and sufficient conditions for diagonalizability related to the minimal polynomial and the JCF. (2) In Chapter 2 we review inner products and inner product spaces, adjoints, and isometries. We prove the spectral theorem, which tells us that symmetric, orthogonal, and self-adjoint matrices are all diagonalizable over fields of characteristic zero.

Rigidity and Shrinkability of Diagonalizable Matrices

British Journal of Mathematics & Computer Science 21(6): 1-15, 2017; Article no. BJMCS.32839; ISSN: 2231-0851. SCIENCEDOMAIN international, www.sciencedomain.org

Rigidity and Shrinkability of Diagonalizable Matrices. Dimitris Karayannakis (1*) and Maria-Evgenia Xezonaki (2). (1) Department of Informatics Engineering, TEI of Crete, Heraklion 71004, Greece. (2) Department of Informatics and Telecommunications, University of Athens, Athens 157 84, Greece.

Authors' contributions: This work was carried out in collaboration between the two authors. Author DK designed the study, wrote the theoretical part, wrote the initial draft of the manuscript and managed literature searches. Author MEX managed the MATLAB calculations whenever necessary for some examples, double-checked the validity of the theoretical predictions and added some new examples of her own. Both authors read and approved the final draft of the manuscript.

Article Information. DOI: 10.9734/BJMCS/2017/32839. Editor(s): (1) Feyzi Basar, Department of Mathematics, Fatih University, Turkey. Reviewers: (1) Jia Liu, University of West Florida, Pensacola, USA. (2) G. Y. Sheu, Chang-Jung Christian University, Tainan, Taiwan. (3) Marija Milojevic Jevric, Mathematical Institute of the Serbian Academy of Sciences and Arts, Serbia. Complete Peer review History: http://www.sciencedomain.org/review-history/18748. Received: 18th March 2017. Accepted: 6th April 2017. Published: 22nd April 2017. Original Research Article.

Abstract. We introduce the seemingly new concept of a rigid matrix based on the comparison of its sparsity to the sparsity of the natural powers of the matrix. Our results could be useful as a usage guide in the scheduling of various iterative algorithms that appear in numerical linear algebra.

5312 – Lecture for April 6, 2020

MAS 5312 - Lecture for April 6, 2020. Richard Crew, Department of Mathematics, University of Florida.

The determination of the rational canonical form of a matrix $A$ used the invariant factors of the $K[X]$-module $V_A$. A number of questions arise here. First, is there an irrational canonical form? Furthermore the elementary divisors of $A$ are all, like "so what are we? chopped liver"? To these, uh, questions we now turn.

But first let's review some basic facts about eigenvectors. Recall that a nonzero $v \in K^n$ is an eigenvector of $A$ if $Av = \lambda v$ for some $\lambda \in K$. If this is so, $v$ is annihilated by $A - \lambda I_n$, which must then be a singular matrix. Conversely if $A - \lambda I_n$ is singular it annihilates a nonzero vector $v$, which is then an eigenvector of $A$ with eigenvalue $\lambda$. On the other hand $A - \lambda I_n$ is singular if and only if $\det(A - \lambda I_n) = \Phi_A(\lambda) = 0$, so the eigenvalues of $A$ are precisely the roots of the characteristic polynomial $\Phi_A(X)$. Of course this polynomial need not have any roots in $K$, but there is always an extension of $K$, that is, a field $L$ containing $K$, such that $\Phi_A(X)$ factors into linear factors. We will see later how to construct such fields.

While we're at it let's recall another useful fact about eigenvectors: if $v_1, \ldots, v_r$ are eigenvectors of $A$ belonging to distinct eigenvalues $\lambda_1, \ldots, \lambda_r$ then $v_1, \ldots, v_r$ are independent. Recall the proof: suppose there is a nontrivial dependence relation $a_1 v_1 + a_2 v_2 + \cdots + a_r v_r = 0$. We can assume that this relation is the shortest possible, and in particular all the $a_i$ are nonzero.

Numerical Linear Algebra

University of Alabama at Birmingham, Department of Mathematics. Numerical Linear Algebra: Lecture Notes for MA 660 (1997-2014). Dr Nikolai Chernov. Summer 2014.

Contents

0. Review of Linear Algebra: 0.1 Matrices and vectors; 0.2 Product of a matrix and a vector; 0.3 Matrix as a linear transformation; 0.4 Range and rank of a matrix; 0.5 Kernel (nullspace) of a matrix; 0.6 Surjective/injective/bijective transformations; 0.7 Square matrices and inverse of a matrix; 0.8 Upper and lower triangular matrices; 0.9 Eigenvalues and eigenvectors; 0.10 Eigenvalues of real matrices; 0.11 Diagonalizable matrices; 0.12 Trace; 0.13 Transpose of a matrix; 0.14 Conjugate transpose of a matrix; 0.15 Convention on the use of conjugate transpose.

1. Norms and Inner Products: 1.1 Norms; 1.2 1-norm, 2-norm, and $\infty$-norm; 1.3 Unit vectors, normalization; 1.4 Unit sphere, unit ball; 1.5 Images of unit spheres; 1.6 Frobenius norm; 1.7 Induced matrix norms; 1.8 Computation of $\|A\|_1$, $\|A\|_2$, and $\|A\|_\infty$; 1.9 Inequalities for induced matrix norms; 1.10 Inner products; 1.11 Standard inner product; 1.12 Cauchy-Schwarz inequality; 1.13 Induced norms; 1.14 Polarization identity; 1.15 Orthogonal vectors; 1.16 Pythagorean theorem; 1.17 Orthogonality and linear independence; 1.18 Orthonormal sets of vectors

Effective Algorithms with Circulant-Block Matrices

Effective Algorithms With Circulant-Block Matrices. Sergej Rjasanow, Fachbereich Mathematik, Universität Kaiserslautern, 6750 Kaiserslautern, Germany. Submitted by Richard A. Brualdi. LINEAR ALGEBRA AND ITS APPLICATIONS 202:55-69 (1994). © Elsevier Science Inc., 1994, 655 Avenue of the Americas, New York, NY 10010. 0024-3795/94/$7.00. Provided by Elsevier - Publisher Connector via core.ac.uk.

ABSTRACT. Effective numerical algorithms for circulant-block matrices A whose blocks are circulant are obtained. The eigenvalues of such matrices are determined in terms of the eigenvalues of matrices of reduced dimension, and systems of linear equations involving these matrices are solved efficiently using fast Fourier transforms.

1. INTRODUCTION. Circulant matrices arise in many applications in mathematics, physics, and other applied sciences in problems possessing a periodicity property [3, 10, 12-15, 19]. In this section we give the definition and the basic properties of circulant matrices [8] and define the special class of circulant-block matrices having circulant blocks with any arbitrary block structure. The circulant-block matrices with the circulant or factor circulant structure were considered in [1, 4-6, 17, 18]. In Section 2, we discuss the spectral properties of circulant-block matrices and obtain the result that any eigenvalue problem for a circulant-block matrix can be reduced to a number of eigenvalue problems for matrices of reduced dimension. In Section 3, we give the algorithm for solving systems of linear equations with diagonalizable circulant-block matrices. In Section 4, we consider some special classes of nondiagonalizable circulant-block matrices. Finally we present the results from numerical tests on some realistic data.

DEFINITION 1.

A Polynomial in a of the Diagonalizable and Nilpotent Parts of A

Western Oregon University, Digital Commons@WOU. Honors Senior Theses/Projects, Student Scholarship, 6-1-2017. A Polynomial in A of the Diagonalizable and Nilpotent Parts of A. Kathryn Wilson, Western Oregon University. Follow this and additional works at: https://digitalcommons.wou.edu/honors_theses

Recommended Citation: Wilson, Kathryn, "A Polynomial in A of the Diagonalizable and Nilpotent Parts of A" (2017). Honors Senior Theses/Projects. 145. https://digitalcommons.wou.edu/honors_theses/145

This Undergraduate Honors Thesis/Project is brought to you for free and open access by the Student Scholarship at Digital Commons@WOU. It has been accepted for inclusion in Honors Senior Theses/Projects by an authorized administrator of Digital Commons@WOU. For more information, please contact [email protected], [email protected], [email protected].

A Polynomial in A of the Diagonalizable and Nilpotent Parts of A. By Kathryn Wilson. An Honors Thesis Submitted in Partial Fulfillment of the Requirements for Graduation from the Western Oregon University Honors Program. Dr. Scott Beaver, Thesis Advisor. Dr. Gavin Keulks, Honors Program Director. June 2017.

Acknowledgements. I would first like to thank my advisor, Dr. Scott Beaver. He has been an amazing resource throughout this entire process. Your support was what made this project possible. I would also like to thank Dr. Gavin Keulks for his part in running the Honors Program and allowing me the opportunity to be part of it. Further I would like to thank the WOU Mathematics department for making my education here at Western immensely fulfilling, and instilling in me the quality of thinking that will create my success outside of school.

Linear Algebra 2 Tutorial 5 Diagonalization and Jordan Normal

NMAI058 – Linear algebra 2, Tutorial 5: Diagonalization and Jordan normal form. Date: March 29, 2021. TA: Pavel Hubáček.

Problem 1. Decide and justify whether the following matrices are diagonalizable:

(a) $A_1 = \begin{pmatrix} 4 & -2 & 0 \\ 0 & 2 & 0 \\ 6 & -5 & 1 \end{pmatrix}$,

(b) $A_2 = \begin{pmatrix} 0 & 1 \\ -2 & 2 \end{pmatrix}$.

Solution: (a) A matrix is diagonalizable if it can be expressed as $A = SDS^{-1}$ for some regular (invertible) matrix $S$ and a diagonal matrix $D$. Equivalently, $A$ must have $n$ linearly independent eigenvectors, and we test this equivalent characterization. We compute the eigenvalues of $A_1$. The characteristic polynomial of $A_1$ is $p_{A_1}(\lambda) = (4 - \lambda)(2 - \lambda)(1 - \lambda)$. Thus, the eigenvalues are $\lambda_1 = 4$, $\lambda_2 = 2$, and $\lambda_3 = 1$. The corresponding eigenvectors are found by solving the system $(A_1 - \lambda I)x = 0$, where we substitute the above eigenvalues for $\lambda$. We get eigenvectors $x_1 = c \cdot (1, 0, 2)^T$, $x_2 = c \cdot (1, 1, 1)^T$, and $x_3 = c \cdot (0, 0, 1)^T$. The eigenvectors are linearly independent (they have to be, since each corresponds to a distinct eigenvalue). Thus, the matrix $A_1$ is diagonalizable and we can express it as $A_1 = SDS^{-1}$, where

$$S = \begin{pmatrix} 1 & 1 & 0 \\ 0 & 1 & 0 \\ 2 & 1 & 1 \end{pmatrix} \quad \text{and} \quad D = \begin{pmatrix} 4 & 0 & 0 \\ 0 & 2 & 0 \\ 0 & 0 & 1 \end{pmatrix}.$$

(b) We proceed analogously to matrix $A_1$. The only (insignificant) difference is that we get complex eigenvalues. The characteristic polynomial of $A_2$ is $p_{A_2}(\lambda) = \lambda^2 - 2\lambda + 2$. The roots of $p_{A_2}$ are $1 + i$ and $1 - i$, and the corresponding eigenvectors are $(1, 1 + i)^T$ and $(1, 1 - i)^T$.
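The solution to Problem 1 can be double-checked without inverting anything: $A_1 = SDS^{-1}$ is equivalent to $A_1 S = SD$ together with $\det S \neq 0$, and the eigenpairs of $A_2$ can be verified by direct multiplication. The sketch below is only a verification aid written for this purpose; the helper names `matmul` and `det3` are not from the tutorial.

```python
def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def det3(M):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))


# Part (a): the columns of S are the eigenvectors for eigenvalues 4, 2, 1.
A1 = [[4, -2, 0], [0, 2, 0], [6, -5, 1]]
S = [[1, 1, 0], [0, 1, 0], [2, 1, 1]]
D = [[4, 0, 0], [0, 2, 0], [0, 0, 1]]
part_a_ok = matmul(A1, S) == matmul(S, D) and det3(S) != 0

# Part (b): check A2 v = lam v for both complex eigenpairs.
A2 = [[0, 1], [-2, 2]]
eigenpairs = [(1 + 1j, (1, 1 + 1j)), (1 - 1j, (1, 1 - 1j))]
part_b_ok = all(
    (A2[0][0] * v[0] + A2[0][1] * v[1], A2[1][0] * v[0] + A2[1][1] * v[1])
    == (lam * v[0], lam * v[1])
    for lam, v in eigenpairs
)
```

Checking $A_1 S = SD$ column by column is the same as checking $A_1 x_i = \lambda_i x_i$ for each eigenvector, which is why no matrix inverse is needed.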