Linear Algebra for Math 542

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


PCMI Summer 2015 Undergraduate Lectures on Flag Varieties 0. What

PCMI Summer 2015 Undergraduate Lectures on Flag Varieties. 0. What are Flag Varieties and Why should we study them? Let's start by over-analyzing the binomial theorem: $(x + y)^n = \sum_{m=0}^{n} \binom{n}{m} x^m y^{n-m}$ has binomial coefficients $\binom{n}{m} = \frac{n!}{m!\,(n-m)!}$ that count the number of $m$-element subsets $T$ of an $n$-element set $S$. Let's count these subsets in two different ways:

(a) The number of ways of choosing $m$ different elements from $S$ is $n(n-1)\cdots(n-m+1) = n!/(n-m)!$, and each such choice produces a subset $T \subset S$ with an ordering $T = \{t_1, \ldots, t_m\}$ of the elements of $T$. This realizes the desired set (of subsets) as the image of a "forgetful" map $f : \{T \subset S,\ T = \{t_1, \ldots, t_m\}\} \to \{T \subset S\}$. Since each preimage $f^{-1}(T)$ of a subset has $m!$ elements (the orderings of $T$), we get the binomial count. Bonus. If $S = [n] = \{1, \ldots, n\}$ is ordered, then each subset $T \subset [n]$ has a distinguished ordering $t_1 < t_2 < \cdots < t_m$ that produces a "section" of the forgetful map. For example, in the case $n = 4$ and $m = 2$, we get $\{T \subset [4]\} = \{\{1,2\}, \{1,3\}, \{1,4\}, \{2,3\}, \{2,4\}, \{3,4\}\}$ realized as a subset of the set $\{T \subset [4],\ \{t_1, t_2\}\}$.

(b) The group $\mathrm{Perm}(S)$ of permutations of $S$ "acts" on $\{T \subset S\}$ via $f(T) = \{f(t) \mid t \in T\}$ for each permutation $f : S \to S$. This is a transitive action (every $m$-element subset maps to every other $m$-element subset), and the "stabilizer" of a particular subset $T \subset S$ is the subgroup $\mathrm{Perm}(T) \times \mathrm{Perm}(S - T) \subset \mathrm{Perm}(S)$ of permutations of $T$ and $S - T$ separately.
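The counting argument in (a) can be checked by brute force. The following is a minimal sketch, not part of the lectures: the function name `count_via_forgetful_map` is hypothetical, and it simply enumerates ordered selections, forgets the order, and confirms that every fiber of the forgetful map has $m!$ elements.

```python
from itertools import combinations, permutations
from math import comb, factorial


def count_via_forgetful_map(n, m):
    """Count m-element subsets of {1, ..., n} by forgetting the order
    of ordered selections (illustrative helper, not from the source)."""
    S = range(1, n + 1)
    # Ordered selections of m distinct elements: n!/(n-m)! of them.
    ordered = list(permutations(S, m))
    # The forgetful map sends an ordered selection to its underlying set.
    fibers = {}
    for t in ordered:
        fibers.setdefault(frozenset(t), []).append(t)
    # Each fiber consists of the m! orderings of one subset.
    assert all(len(f) == factorial(m) for f in fibers.values())
    return len(ordered), len(fibers)


ordered_count, subset_count = count_via_forgetful_map(4, 2)

# The "section": each subset of [4] listed in its increasing ordering.
section = [tuple(T) for T in combinations(range(1, 5), 2)]
```

For $n = 4$, $m = 2$ there are 12 ordered selections and 6 subsets, matching $12 / 2! = \binom{4}{2}$.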

The Geometry of Syzygies

The Geometry of Syzygies: A second course in Commutative Algebra and Algebraic Geometry. David Eisenbud, University of California, Berkeley, with the collaboration of Freddy Bonnin, Clément Caubel and Hélène Maugendre. For a current version of this manuscript-in-progress, see www.msri.org/people/staff/de/ready.pdf. Copyright David Eisenbud, 2002.

Contents
0 Preface: Algebra and Geometry
  0A What are syzygies?
  0B The Geometric Content of Syzygies
  0C What does it mean to solve linear equations?
  0D Experiment and Computation
  0E What's In This Book?
  0F Prerequisites
  0G How did this book come about?
  0H Other Books
  0I Thanks
  0J Notation
1 Free resolutions and Hilbert functions
  1A Hilbert's contributions
    1A.1 The generation of invariants
    1A.2 The study of syzygies
    1A.3 The Hilbert function becomes polynomial
  1B Minimal free resolutions
    1B.1 Describing resolutions: Betti diagrams
    1B.2 Properties of the graded Betti numbers
    1B.3 The information in the Hilbert function
  1C Exercises
2 First Examples of Free Resolutions
  2A Monomial ideals and simplicial complexes
    2A.1 Syzygies of monomial ideals
    2A.2 Examples
    2A.3 Bounds on Betti numbers and proof of Hilbert's Syzygy Theorem
  2B Geometry from syzygies: seven points in $\mathbb{P}^3$
    2B.1 The Hilbert polynomial and function...
    2B.2 ...and other information in the resolution
  2C Exercises
3 Points in $\mathbb{P}^2$
  3A The ideal of a finite set of points

LINEAR ALGEBRA METHODS in COMBINATORICS László Babai

LINEAR ALGEBRA METHODS IN COMBINATORICS. László Babai and Péter Frankl. Version 2.1*, March 2020. (* Slight update of Version 2, 1992.) © László Babai and Péter Frankl, 1988, 1992, 2020.

Preface. Due perhaps to a recognition of the wide applicability of their elementary concepts and techniques, both combinatorics and linear algebra have gained increased representation in college mathematics curricula in recent decades. The combinatorial nature of the determinant expansion (and the related difficulty in teaching it) may hint at the plausibility of some link between the two areas. A more profound connection, the use of determinants in combinatorial enumeration, goes back at least to the work of Kirchhoff in the middle of the 19th century on counting spanning trees in an electrical network. It is much less known, however, that quite apart from the theory of determinants, the elements of the theory of linear spaces have found striking applications to the theory of families of finite sets. With a mere knowledge of the concept of linear independence, unexpected connections can be made between algebra and combinatorics, thus greatly enhancing the impact of each subject on the student's perception of beauty and sense of coherence in mathematics. If these adjectives seem inflated, the reader is kindly invited to open the first chapter of the book, read the first page to the point where the first result is stated ("No more than 32 clubs can be formed in Oddtown"), and try to prove it before reading on. (The effect would, of course, be magnified if the title of this volume did not give away where to look for clues.) What we have said so far may suggest that the best place to present this material is a mathematics enhancement program for motivated high school students.

MATH 2370, Practice Problems

MATH 2370, Practice Problems. Kiumars Kaveh.

Problem: Prove that an $n \times n$ complex matrix $A$ is diagonalizable if and only if there is a basis consisting of eigenvectors of $A$.

Problem: Let $A : V \to W$ be a one-to-one linear map between two finite dimensional vector spaces $V$ and $W$. Show that the dual map $A' : W' \to V'$ is surjective.

Problem: Determine if the curve $\{(x, y) \in \mathbb{R}^2 \mid x^2 + y^2 + xy = 10\}$ is an ellipse or hyperbola or union of two lines.

Problem: Show that if a nilpotent matrix is diagonalizable then it is the zero matrix.

Problem: Let $P$ be a permutation matrix. Show that $P$ is diagonalizable. Show that if $\lambda$ is an eigenvalue of $P$ then for some integer $m > 0$ we have $\lambda^m = 1$ (i.e. $\lambda$ is an $m$-th root of unity). Hint: Note that $P^m = I$ for some integer $m > 0$.

Problem: Show that if $\lambda$ is an eigenvalue of an orthogonal matrix $A$ then $|\lambda| = 1$.

Problem: Take a vector $v \in \mathbb{R}^n$ and let $H$ be the hyperplane orthogonal to $v$. Let $R : \mathbb{R}^n \to \mathbb{R}^n$ be the reflection with respect to the hyperplane $H$. Prove that $R$ is a diagonalizable linear map.

Problem: Prove that if $\lambda_1, \lambda_2$ are distinct eigenvalues of a complex matrix $A$ then the intersection of the generalized eigenspaces $E_{\lambda_1}$ and $E_{\lambda_2}$ is zero (this is part of the Spectral Theorem).

Problem: Let $H = (h_{ij})$ be a $2 \times 2$ Hermitian matrix. Use the Minimax Principle to show that if $\lambda_1 \le \lambda_2$ are the eigenvalues of $H$ then $\lambda_1 \le h_{11} \le \lambda_2$.

A Quasideterminantal Approach to Quantized Flag Varieties

A QUASIDETERMINANTAL APPROACH TO QUANTIZED FLAG VARIETIES, by Aaron Lauve. A dissertation submitted to the Graduate School–New Brunswick, Rutgers, The State University of New Jersey, in partial fulfillment of the requirements for the degree of Doctor of Philosophy, Graduate Program in Mathematics. Written under the direction of Vladimir Retakh & Robert L. Wilson. New Brunswick, New Jersey, May, 2005.

ABSTRACT OF THE DISSERTATION. A Quasideterminantal Approach to Quantized Flag Varieties, by Aaron Lauve. Dissertation Director: Vladimir Retakh & Robert L. Wilson. We provide an efficient, uniform means to attach flag varieties, and coordinate rings of flag varieties, to numerous noncommutative settings. Our approach is to use the quasideterminant to define a generic noncommutative flag, then specialize this flag to any specific noncommutative setting wherein an amenable determinant exists.

Acknowledgements. For finding interesting problems and worrying about my future, I extend a warm thank you to my advisor, Vladimir Retakh. For a willingness to work through even the most boring of details if it would make me feel better, I extend a warm thank you to my advisor, Robert L. Wilson. For helpful mathematical discussions during my time at Rutgers, I would like to acknowledge Earl Taft, Jacob Towber, Kia Dalili, Sasa Radomirovic, Michael Richter, and the David Nacin Memorial Lecture Series: Nacin, Weingart, Schutzer. A most heartfelt thank you is extended to 326 Wayne ST, Maria, Kia, Saša, Laura, and Ray. Without your steadying influence and constant camaraderie, my time at Rutgers may have been shorter, but certainly would have been darker. Thank you. Before there was Maria and 326 Wayne ST, there were others who supported me.

GROMOV-WITTEN INVARIANTS on GRASSMANNIANS 1. Introduction

JOURNAL OF THE AMERICAN MATHEMATICAL SOCIETY, Volume 16, Number 4, Pages 901–915, S 0894-0347(03)00429-6. Article electronically published on May 1, 2003. GROMOV-WITTEN INVARIANTS ON GRASSMANNIANS. ANDERS SKOVSTED BUCH, ANDREW KRESCH, AND HARRY TAMVAKIS.

1. Introduction. The central theme of this paper is the following result: any three-point genus zero Gromov-Witten invariant on a Grassmannian $X$ is equal to a classical intersection number on a homogeneous space $Y$ of the same Lie type. We prove this when $X$ is a type $A$ Grassmannian, and, in types $B$, $C$, and $D$, when $X$ is the Lagrangian or orthogonal Grassmannian parametrizing maximal isotropic subspaces in a complex vector space equipped with a non-degenerate skew-symmetric or symmetric form. The space $Y$ depends on $X$ and the degree of the Gromov-Witten invariant considered. For a type $A$ Grassmannian, $Y$ is a two-step flag variety, and in the other cases, $Y$ is a sub-maximal isotropic Grassmannian. Our key identity for Gromov-Witten invariants is based on an explicit bijection between the set of rational maps counted by a Gromov-Witten invariant and the set of points in the intersection of three Schubert varieties in the homogeneous space $Y$. The proof of this result uses no moduli spaces of maps and requires only basic algebraic geometry. It was observed in [Bu1] that the intersection and linear span of the subspaces corresponding to points on a curve in a Grassmannian, called the kernel and span of the curve, have dimensions which are bounded below and above, respectively.

Section 5 Summary

Section 5 summary. Brian Krummel, November 4, 2019.

1 Terminology

Eigenvalue; Eigenvector; Eigenspace; Characteristic polynomial; Characteristic equation; Similar matrices; Similarity transformation; Diagonalizable; Matrix of a linear transformation relative to a basis; System of differential equations; Solution to a system of differential equations; Solution set of a system of differential equations; Fundamental solutions; Initial value problem; Trajectory; Decoupled system of differential equations; Repeller / source; Attractor / sink; Saddle point; Spiral point; Ellipse trajectory.

2 How to find eigenvalues, eigenvectors, and a similar matrix

1. First we find eigenvalues of $A$ by finding the roots of the characteristic equation $\det(A - \lambda I) = 0$. Note that if $A$ is upper triangular (or lower triangular), then the eigenvalues $\lambda$ of $A$ are just the diagonal entries of $A$ and the multiplicity of each eigenvalue $\lambda$ is the number of times $\lambda$ appears as a diagonal entry of $A$.
2. For each eigenvalue $\lambda$, find a basis of eigenvectors corresponding to the eigenvalue $\lambda$ by row reducing $A - \lambda I$ and finding the solution to $(A - \lambda I)x = 0$ in vector parametric form. Note that for a general $n \times n$ matrix $A$ and any eigenvalue $\lambda$ of $A$, dim(Eigenspace corresp. to $\lambda$) = # free variables of $(A - \lambda I)$ ≤ algebraic multiplicity of $\lambda$.
3. Assuming $A$ has only real eigenvalues, check if $A$ is diagonalizable. If $A$ has $n$ distinct eigenvalues, then $A$ is definitely diagonalizable. More generally, if dim(Eigenspace corresp. to $\lambda$) = algebraic multiplicity of $\lambda$ for each eigenvalue $\lambda$ of $A$, then $A$ is diagonalizable and $\mathbb{R}^n$ has a basis consisting of $n$ eigenvectors of $A$.
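The three steps above can be sketched for the $2 \times 2$ case in plain Python. This is an illustrative sketch under stated assumptions, not the summary's own method: the names `eigen_2x2` and `is_diagonalizable_2x2` are hypothetical, real eigenvalues are assumed as in step 3, and the characteristic equation is solved with the quadratic formula rather than by row reduction.

```python
import math


def eigen_2x2(a, b, c, d):
    """Return [(eigenvalue, eigenspace basis)] for A = [[a, b], [c, d]].

    Hypothetical helper; assumes the eigenvalues are real.
    """
    # Step 1: roots of det(A - lambda*I) = lambda^2 - trace*lambda + det = 0.
    trace, det = a + d, a * d - b * c
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues are outside this sketch")
    root = math.sqrt(disc)
    eigenvalues = sorted({(trace - root) / 2, (trace + root) / 2})

    pairs = []
    for lam in eigenvalues:
        # Step 2: solve (A - lambda*I) x = 0.
        p, q, r, s = a - lam, b, c, d - lam
        if abs(p) > 1e-12 or abs(q) > 1e-12:
            basis = [(-q, p)]          # first row gives p*x + q*y = 0
        elif abs(r) > 1e-12 or abs(s) > 1e-12:
            basis = [(-s, r)]          # second row gives r*x + s*y = 0
        else:
            basis = [(1.0, 0.0), (0.0, 1.0)]  # A - lambda*I = 0: two free variables
        pairs.append((lam, basis))
    return pairs


def is_diagonalizable_2x2(a, b, c, d):
    # Step 3: diagonalizable iff the eigenspace dimensions add up to n = 2.
    return sum(len(basis) for _, basis in eigen_2x2(a, b, c, d)) == 2


example = eigen_2x2(4, 1, 2, 3)
```

For the sample matrix with rows $(4, 1)$ and $(2, 3)$ the eigenvalues are 2 and 5, so it is diagonalizable. The upper triangular matrix with rows $(2, 1)$ and $(0, 2)$ has the single eigenvalue 2 with a one-dimensional eigenspace, so it is not.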

Diagonalizable Matrix - Wikipedia, the Free Encyclopedia

Diagonalizable matrix - Wikipedia, the free encyclopedia. http://en.wikipedia.org/wiki/Matrix_diagonalization

In linear algebra, a square matrix $A$ is called diagonalizable if it is similar to a diagonal matrix, i.e., if there exists an invertible matrix $P$ such that $P^{-1}AP$ is a diagonal matrix. If $V$ is a finite-dimensional vector space, then a linear map $T : V \to V$ is called diagonalizable if there exists a basis of $V$ with respect to which $T$ is represented by a diagonal matrix. Diagonalization is the process of finding a corresponding diagonal matrix for a diagonalizable matrix or linear map.[1] A square matrix which is not diagonalizable is called defective. Diagonalizable matrices and maps are of interest because diagonal matrices are especially easy to handle: their eigenvalues and eigenvectors are known and one can raise a diagonal matrix to a power by simply raising the diagonal entries to that same power. Geometrically, a diagonalizable matrix is an inhomogeneous dilation (or anisotropic scaling): it scales the space, as does a homogeneous dilation, but by a different factor in each direction, determined by the scale factors on each axis (diagonal entries).

Contents: 1 Characterisation; 2 Diagonalization; 3 Simultaneous diagonalization; 4 Examples (4.1 Diagonalizable matrices; 4.2 Matrices that are not diagonalizable; 4.3 How to diagonalize a matrix; 4.3.1 Alternative Method); 5 An application (5.1 Particular application); 6 Quantum mechanical application; 7 See also; 8 Notes; 9 References; 10 External links.

Characterisation. The fundamental fact about diagonalizable maps and matrices is expressed by the following: An $n$-by-$n$ matrix $A$ over the field $F$ is diagonalizable if and only if the sum of the dimensions of its eigenspaces is equal to $n$, which is the case if and only if there exists a basis of $F^n$ consisting of eigenvectors of $A$.
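A small worked example may make the characterisation concrete. The two matrices below are chosen here for illustration and do not come from the excerpt: $B$ has eigenspaces whose dimensions sum to $2$, while $A$ is defective.

```latex
% Diagonalizable: eigenvalues 1 and 2, eigenvectors (1,0)^T and (1,1)^T.
B = \begin{pmatrix} 1 & 1 \\ 0 & 2 \end{pmatrix}, \qquad
P = \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}, \qquad
P^{-1} = \begin{pmatrix} 1 & -1 \\ 0 & 1 \end{pmatrix}, \qquad
P^{-1} B P = \begin{pmatrix} 1 & 0 \\ 0 & 2 \end{pmatrix}.

% Defective: the only eigenvalue is 1 (algebraic multiplicity 2), but
A = \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}, \qquad
A - I = \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}, \qquad
\ker(A - I) = \operatorname{span}\left\{ \begin{pmatrix} 1 \\ 0 \end{pmatrix} \right\},
% so the eigenspace dimensions sum to 1 < 2 and no eigenvector basis exists.
```

In the first case the columns of $P$ form a basis of eigenvectors; in the second the single eigenspace is one-dimensional, so the sum of eigenspace dimensions falls short of $n = 2$.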

Geometric Engineering of (Framed) BPS States

GEOMETRIC ENGINEERING OF (FRAMED) BPS STATES. WU-YEN CHUANG, DUILIU-EMANUEL DIACONESCU, JAN MANSCHOT, GREGORY W. MOORE, YAN SOIBELMAN.

Abstract. BPS quivers for $\mathcal{N} = 2$ $SU(N)$ gauge theories are derived via geometric engineering from derived categories of toric Calabi-Yau threefolds. While the outcome is in agreement with previous low energy constructions, the geometric approach leads to several new results. An absence of walls conjecture is formulated for all values of $N$, relating the field theory BPS spectrum to large radius D-brane bound states. Supporting evidence is presented as explicit computations of BPS degeneracies in some examples. These computations also prove the existence of BPS states of arbitrarily high spin and infinitely many marginal stability walls at weak coupling. Moreover, framed quiver models for framed BPS states are naturally derived from this formalism, as well as a mathematical formulation of framed and unframed BPS degeneracies in terms of motivic and cohomological Donaldson-Thomas invariants. We verify the conjectured absence of BPS states with "exotic" $SU(2)_R$ quantum numbers using motivic DT invariants. This application is based in particular on a complete recursive algorithm which determines the unframed BPS spectrum at any point on the Coulomb branch in terms of noncommutative Donaldson-Thomas invariants for framed quiver representations.

Contents
1. Introduction
  1.1. A (short) summary for mathematicians
  1.2. BPS categories and mirror symmetry
2. Geometric engineering, exceptional collections, and quivers
  2.1. Exceptional collections and fractional branes
  2.2. Orbifold quivers
  2.3. Field theory limit A
  2.4.

Math 1553 Introduction to Linear Algebra

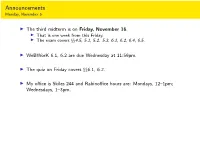

Announcements, Monday, November 5

- The third midterm is on Friday, November 16.
- That is one week from this Friday.
- The exam covers §§4.5, 5.1, 5.2, 5.3, 6.1, 6.2, 6.4, 6.5.
- WeBWorK 6.1, 6.2 are due Wednesday at 11:59pm.
- The quiz on Friday covers §§6.1, 6.2.
- My office is Skiles 244 and Rabinoffice hours are: Mondays, 12–1pm; Wednesdays, 1–3pm.

Section 6.4: Diagonalization

Motivation: Difference equations. Many real-world linear algebra problems have the form $v_1 = Av_0$, $v_2 = Av_1 = A^2 v_0$, $v_3 = Av_2 = A^3 v_0$, $\ldots$, $v_n = Av_{n-1} = A^n v_0$. This is called a difference equation. Our toy example about rabbit populations had this form. The question is, what happens to $v_n$ as $n \to \infty$?

- Taking powers of diagonal matrices is easy!
- Taking powers of diagonalizable matrices is still easy!
- Diagonalizing a matrix is an eigenvalue problem.

Powers of Diagonal Matrices. If $D$ is diagonal, then $D^n$ is also diagonal; its diagonal entries are the $n$th powers of the diagonal entries of $D$:

$D = \begin{pmatrix} 2 & 0 \\ 0 & -1 \end{pmatrix},\quad D^2 = \begin{pmatrix} 4 & 0 \\ 0 & 1 \end{pmatrix},\quad D^3 = \begin{pmatrix} 8 & 0 \\ 0 & -1 \end{pmatrix},\quad \ldots,\quad D^n = \begin{pmatrix} 2^n & 0 \\ 0 & (-1)^n \end{pmatrix}.$

$D = \begin{pmatrix} -1 & 0 & 0 \\ 0 & \frac12 & 0 \\ 0 & 0 & \frac13 \end{pmatrix},\quad D^2 = \begin{pmatrix} 1 & 0 & 0 \\ 0 & \frac14 & 0 \\ 0 & 0 & \frac19 \end{pmatrix},\quad D^3 = \begin{pmatrix} -1 & 0 & 0 \\ 0 & \frac18 & 0 \\ 0 & 0 & \frac1{27} \end{pmatrix},\quad \ldots,\quad D^n = \begin{pmatrix} (-1)^n & 0 & 0 \\ 0 & \frac1{2^n} & 0 \\ 0 & 0 & \frac1{3^n} \end{pmatrix}.$

Powers of Matrices that are Similar to Diagonal Ones. If $A = CDC^{-1}$, then

$A^2 = (CDC^{-1})(CDC^{-1}) = CD(C^{-1}C)DC^{-1} = CDIDC^{-1} = CD^2C^{-1},$
$A^3 = (CDC^{-1})(CD^2C^{-1}) = CD(C^{-1}C)D^2C^{-1} = CDID^2C^{-1} = CD^3C^{-1},$
$A^n = CD^nC^{-1}.$

Closed formula in terms of $n$: easy to compute.

$A^n = \begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix} \begin{pmatrix} 2^n & 0 \\ 0 & (-1)^n \end{pmatrix} \cdot \frac{1}{-2} \begin{pmatrix} -1 & -1 \\ -1 & 1 \end{pmatrix} = \frac12 \begin{pmatrix} 2^n + (-1)^n & 2^n + (-1)^{n+1} \\ 2^n + (-1)^{n+1} & 2^n + (-1)^n \end{pmatrix}.$

What if $A$ is not diagonal? Example: Let $A = \begin{pmatrix} 1/2 & 3/2 \\ \cdots \end{pmatrix}$.
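The identity $A^n = CD^nC^{-1}$ and the closed formula can be checked with exact rational arithmetic. The sketch below is an illustration rather than part of the slides: the helper names are hypothetical, and the matrix $A$ is taken to be the product $CDC^{-1}$ built from the $C$ and $D$ displayed in the closed formula, which is an assumption about the truncated example (its first row does come out as $(1/2,\ 3/2)$).

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


# C and its inverse, as read off from the closed formula.
C = [[1, 1], [1, -1]]
C_inv = [[Fraction(1, 2), Fraction(1, 2)], [Fraction(1, 2), Fraction(-1, 2)]]


def power_by_diagonalization(n):
    # D^n is diagonal with entries 2^n and (-1)^n.
    D_n = [[Fraction(2) ** n, 0], [0, Fraction(-1) ** n]]
    return matmul(matmul(C, D_n), C_inv)


def closed_formula(n):
    p = Fraction(2 ** n + (-1) ** n, 2)
    q = Fraction(2 ** n + (-1) ** (n + 1), 2)
    return [[p, q], [q, p]]


def power_by_repeated_multiplication(M, n):
    result = [[1, 0], [0, 1]]
    for _ in range(n):
        result = matmul(result, M)
    return result


# Assumed example matrix: A = C D C^{-1}.
A = power_by_diagonalization(1)
```

Computing $A^n$ by repeated multiplication takes $n$ matrix products, while the diagonalized form needs only two products plus scalar powers, which is the point of the "still easy" remark.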

Totally Positive Toeplitz Matrices and Quantum Cohomology of Partial Flag Varieties

JOURNAL OF THE AMERICAN MATHEMATICAL SOCIETY, Volume 16, Number 2, Pages 363–392, S 0894-0347(02)00412-5. Article electronically published on November 29, 2002. TOTALLY POSITIVE TOEPLITZ MATRICES AND QUANTUM COHOMOLOGY OF PARTIAL FLAG VARIETIES. KONSTANZE RIETSCH.

1. Introduction. A matrix is called totally nonnegative if all of its minors are nonnegative. Totally nonnegative infinite Toeplitz matrices were studied first in the 1950's. They are characterized in the following theorem conjectured by Schoenberg and proved by Edrei.

Theorem 1.1 ([10]). The $\infty \times \infty$ Toeplitz matrix

$A = \begin{pmatrix} 1 & & & \\ a_1 & 1 & & \\ a_2 & a_1 & 1 & \\ \vdots & \ddots & \ddots & \ddots \end{pmatrix}$

is totally nonnegative precisely if its generating function is of the form

$1 + a_1 t + a_2 t^2 + \cdots = \exp(t\alpha) \prod_{i \in \mathbb{N}} \frac{(1 + \beta_i t)}{(1 - \gamma_i t)},$

where $\alpha \in \mathbb{R}_{\ge 0}$ and $\beta_1 \ge \beta_2 \ge \cdots \ge 0$, $\gamma_1 \ge \gamma_2 \ge \cdots \ge 0$ with $\sum \beta_i + \sum \gamma_i < \infty$.

This beautiful result has been reproved many times; see [32] for an overview. It may be thought of as giving a parameterization of the totally nonnegative Toeplitz matrices by

$\{(\alpha, (\tilde\beta_i)_i, (\tilde\gamma_i)_i) \in \mathbb{R}_{\ge 0} \times \mathbb{R}_{\ge 0}^{\mathbb{N}} \times \mathbb{R}_{\ge 0}^{\mathbb{N}} \mid \sum_{i \in \mathbb{N}} i(\tilde\beta_i + \tilde\gamma_i) < \infty\},$

where $\tilde\beta_i = \beta_i - \beta_{i+1}$ and $\tilde\gamma_i = \gamma_i - \gamma_{i+1}$.

Received by the editors December 10, 2001 and, in revised form, September 14, 2002. 2000 Mathematics Subject Classification. Primary 20G20, 15A48, 14N35, 14N15.

Borel Subgroups and Flag Manifolds

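Topics in Representation Theory: Borel Subgroups and Flag Manifolds

1 Borel and parabolic subalgebras

We have seen that to pick a single irreducible out of the space of sections $\Gamma(L_\lambda)$ we need to somehow impose the condition that elements of negative root spaces, acting from the right, give zero. To get a geometric interpretation of what this condition means, we need to invoke complex geometry. To even define the negative root space, we need to begin by complexifying the Lie algebra

$\mathfrak{g}_{\mathbb{C}} = \mathfrak{t}_{\mathbb{C}} \oplus \sum_{\alpha \in R} \mathfrak{g}_\alpha$

and then making a choice of positive roots $R^+ \subset R$:

$\mathfrak{g}_{\mathbb{C}} = \mathfrak{t}_{\mathbb{C}} \oplus \sum_{\alpha \in R^+} (\mathfrak{g}_\alpha + \mathfrak{g}_{-\alpha}).$

Note that the complexified tangent space to $G/T$ at the identity coset is

$T_{eT}(G/T) \otimes \mathbb{C} = \sum_{\alpha \in R} \mathfrak{g}_\alpha$

and a choice of positive roots gives a choice of complex structure on $T_{eT}(G/T)$, with the holomorphic, anti-holomorphic decomposition

$T_{eT}(G/T) \otimes \mathbb{C} = T_{eT}(G/T) \oplus \overline{T_{eT}(G/T)} = \sum_{\alpha \in R^+} \mathfrak{g}_\alpha \oplus \sum_{\alpha \in R^+} \mathfrak{g}_{-\alpha}.$

While $\mathfrak{g}/\mathfrak{t}$ is not a Lie algebra,

$\mathfrak{n}^+ = \sum_{\alpha \in R^+} \mathfrak{g}_\alpha \quad\text{and}\quad \mathfrak{n}^- = \sum_{\alpha \in R^+} \mathfrak{g}_{-\alpha}$

are each Lie algebras, subalgebras of $\mathfrak{g}_{\mathbb{C}}$ since $[\mathfrak{n}^+, \mathfrak{n}^+] \subset \mathfrak{n}^+$ (and similarly for $\mathfrak{n}^-$). This follows from $[\mathfrak{g}_\alpha, \mathfrak{g}_\beta] \subset \mathfrak{g}_{\alpha+\beta}$. The Lie algebras $\mathfrak{n}^+$ and $\mathfrak{n}^-$ are nilpotent Lie algebras, meaning:

Definition 1 (Nilpotent Lie Algebra). A Lie algebra $\mathfrak{g}$ is called nilpotent if, for some finite integer $k$, elements of $\mathfrak{g}$ constructed by taking $k$ commutators are zero. In other words, $[\mathfrak{g}, [\mathfrak{g}, [\mathfrak{g}, [\mathfrak{g}, \cdots]]]] = 0$, where one is taking $k$ commutators.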