Naver Labs Europe's Systems for the Document-Level Generation And

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

-

Bogut Vs Freeland

Bogut vs freeland Please LIKE & SUBSCRIBE for more NBA videos & mixes! Have a question? Ask here: Andrew Bogut scuffle with Mo Williams, Freeland and Aldridge (3 players ejected) For GS, Draymond Green was ejected and Bogut was given a technical? I'm not sure why Monty Williams vs Kobe Bryant Fight. The dust-up began when Bogut took exception to the way Blazers center Joel Freeland had pushed his elbow in his back while going for a. Feud: Joel Freeland and Andrew Bogut. It's actually surprising that Bogut doesn't get into more fights. You'd think half the league would be. Golden State Warriors center Andrew Bogut and Portland Trail Blazers guard Mo Bogut initiated an incident by elbowing Trail Blazers center Joel Freeland in the jaw. Two Suspended, Three Fined for incident in GSW vs. Things began when Andrew Bogut and Joel Freeland became entangled in the paint during a Portland offensive possession. Bogut eventually. FIGHT - Andre Bogut vs Joel Freeland (Aldridge, Matthews vs. O'Neal)!!! Please LIKE & SUBSCRIBE for more NBA videos & mixes! Have a question? Ask here. During the third quarter of Saturday's game between the Portland Trail Blazers and the Golden State Warriors, Andrew Bogut and Joel Freeland. Australian centre Andrew Bogut has been suspended for one game elbow that connected with Portland center Joel Freeland's jaw after a. Andrew Bogot and Joel Freeland got tangled up under the basket, then in his effort to get away Bogut New York, Bogut is out Tuesday vs. Bogut and Portland big man Joel Freeland got tangled up under the basket after the Blazers reserve had elbowed Bogut in the back under the. -

Westbrook's Triple-Double Helps Thunder Beat Philly

Sports46 FRIDAY, MARCH 24, 2017 DENVER: Cleveland Cavaliers forward Channing Frye (right) fouls Denver Nuggets guard Jamal Murray as he drives to the rim in the second half of an NBA basketball game Wednesday, March 22, 2017, in Denver. The Nuggets won 126-113.— AP Westbrook’s triple-double helps Thunder beat Philly OKLAHOMA CITY: Russell Westbrook Denver, which also got 21 points from Marco Belinelli came off the bench to add for Milwaukee, which cruised to a victory recorded his 35th triple-double of the sea- Gary Harris and 16 from Nikola Jokic. 20 as Charlotte pulled off a fourth-quarter over Sacramento. The win moved the son with 18 points, 11 rebounds and 14 Kyrie Irving lead the Cavs with 33 points comeback to defeat Orlando. Orlando Bucks into a tie with Indiana for the sixth assists as the Oklahoma City Thunder but James had just 18 and the two stars entered the fourth quarter with a five- spot, one game behind fifth-place Atlanta cruised to a 122-97 win over the sat out much of the fourth quarter with point lead but Frank Kaminsky came off in the tight Eastern Conference playoff Philadelphia 76ers on Wednesday night. Cleveland trailing by double digits. the bench to score 13 of his 18 points and race. The Bucks have been the NBA’s The Thunder have won 16 straight games Walker added eight points to help the hottest team the past three weeks, win- against Philadelphia, a run that stretches CELTICS 109, PACERS 100 Hornets outscore the Magic 32-20 in the ning 10 of 12 games. -

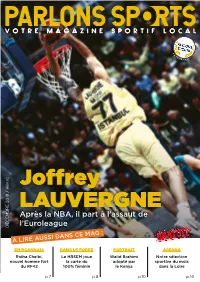

Joffrey LAUVERGNE Après La NBA, Il Part À L’Assaut De

NUM 02 Joffrey LAUVERGNE Après la NBA, il part à l’assaut de DÉCEMBRE 2018 / DÉCEMBRE 2018 l’Euroleague À LIRE AUSSI DANS CE MAG : EN ROANNAIS DANS LE FOREZ PORTRAIT AGENDA Ridha Chelbi, Le HBSEM joue Walid Brahimi Notre sélection nouvel homme fort la carte du adopté par sportive du mois du RF42 100% féminin le Kenya dans la Loire p.7 p.8 p.10 p.14 SOMMAIRE par la rédac s mmaire LE DOSSIER 4/5 - JOFFREY LAUVERGNE, LA FORCE TRANQUILLE L’ACTU DE LA LOIRE 7/8 - REPORTAGES DANS LE 8 ROANNAIS ET LE FOREZ LE PORTRAIT 10 - RENCONTRE AVEC 4 WAHID BRAHIMI RÉTRO 2018 12 RéTROSPECTIVE - 3 MOMENTS FORTS DE 2018 L’ANNÉE SPORTIVE 2018 LA TOUCHE PS 13 3 mOments fOrts - DES NEWS FRAÎCHES ET LE SPORTIF DU MOIS de l’année L’AGENDA 14 - SELECTION DES MATCHS 10 12 A NE PAS LOUPER PARLONS SPORTS MAGAZINE Directeurs de la publication : Amaury MAISONHAUTE Maxime VALADE Clap de fin… d’année Responsable commercial : A. MAISONHAUTE : 06 46 31 49 70 2018 rend son dernier souffle. Des public sportif de France. De son Responsable rédaction : joies, des peines, des doutes, des côté, la Chorale de Roanne est en M. VALADE : 06 44 91 12 02 confirmations… l’heure du bilan passe de redevenir ce qu’elle a Conception graphique : a sonné. Pour le sport français, été autrefois. La course à la Jeep Maryne REOLID cette année restera un bon cru. Elite est plus que jamais d’actua- Mise en page publicitaire : En point d’orgue, la victoire des lité pour les Roannais. -

Isw Bullstrade-1.Pdf

3/28/2019 Analyzing the Chicago Bulls' trade | isportsweb NFL MLB NCAAF NCAAB NBA NHL MORE NBA | Chicago Bulls | Analyzing the Chicago Bulls’ trade Analyzing the Chicago Bulls’ trade February 27, 2017 by Anthony Chatman Twitter Facebook Reddit Email Print One of the most exciting dates during the NBA season just passed: The trade deadline. The trade deadline was February 23rd and many people were looking to see if the Chicago Bulls would be moving Jimmy Butler to Boston for a couple of the Celtics’ pieces. But what they ended up seeing was a trade that few, if anyone at all, saw coming. The Chicago Bulls traded away their forwards Taj Gibson and Doug McDermott, along with their 2018 second round draft pick. In exchange, they received the Oklahoma City Thunder’s HEADLINES guards, Cameron Payne and Anthony Morrow, and center Joffrey Lauvergne. Clearly, the Thunder were the winners of this trade as their new pieces will work to help the team progress. But what does this mean for Indiana Baseball Falls to Kent State in Thriller the Bulls’ progress (or lack thereof)? First I’ll look at what they lost. PLAYERS: TAJ GIBSON AND DOUG MCDERMOTT Predicting the Final Eastern Conference Standings Boston Red Sox Season Preview and Prediction The Time is Now: Keys to the 2019 New York Yankees Season Oklahoma City Thunder take care of the visiting Indiana Pacers COMING UP Football Freestyler Kicks Soccer Chicago Bulls forward Taj ➢ Ball Across the Sahara Gibson (22). Photo Credit: Jeff Hanisch-USA TODAY Sports Cheese, GOT, and Other Super ➢ Bizarre Easter Eggs Simple DIY Easter Basket Ideas ➢ Worth Putting All Your Eggs Into "Jet Lag Gurus", Yep That's a NEXT Football Freestyler Kicks Soccer Ball Across th… ➢ Thing Now Cincinnati Reds: prelude to Opening Day 2019 Contextualizing the Portland Timbers New York Giants: Who will be the last remaining Our partners will collect data and use cookies for ad personalization and measurement. -

Past Match-Ups & Game Notes

Turkish Airlines EuroLeague PAST MATCH-UPS & GAME NOTES REGULAR SEASON - ROUND 2 EUROLEAGUE 2019-20 | REGULAR SEASON | ROUND 2 1 Oct 10, 2019 CET: 19:00 LOCAL TIME: 19:00 | STARK ARENA CRVENA ZVEZDA MTS BELGRADE FENERBAHCE BEKO ISTANBUL 50 OJO, MICHAEL 12 KALINIC, NIKOLA Center | 2.16 | Born: 1993 Forward | 2.02 | Born: 1991 @nikola_kalinic 32 JOVANOVIC, NIKOLA 35 MUHAMMED, ALI Center | 2.11 | Born: 1994 Guard | 1.78 | Born: 1983 @BobbyDixon20 28 SIMANIC, BORISA 24 VESELY, JAN Forward | 2.09 | Born: 1998 Center | 2.13 | Born: 1990 @JanVesely24 13 DOBRIC, OGNJEN 21 WILLIAMS, DERRICK Guard | 2.00 | Born: 1994 Forward | 2.03 | Born: 1991 12 BARON, BILLY 19 DE COLO, NANDO Guard | 1.88 | Born: 1990 Guard | 1.96 | Born: 1987 @billy_baron @nandodecolo 10 LAZIC, BRANKO 77 LAUVERGNE, JOFFREY Guard | 1.95 | Born: 1989 Center | 2.11 | Born: 1991 9 NENADIC, NEMANJA 70 DATOME, LUIGI Forward | 1.97 | Born: 1994 Forward | 2.03 | Born: 1987 @GigiDatome 7 DAVIDOVAC, DEJAN 44 DUVERIOGLU, AHMET Forward | 2.02 | Born: 1995 Center | 2.09 | Born: 1993 5 PERPEROGLOU, STRATOS 16 SLOUKAS, KOSTAS Forward | 2.03 | Born: 1984 Guard | 1.90 | Born: 1990 4 BROWN, LORENZO 10 MAHMUTOGLU, MELIH Guard | 1.96 | Born: 1990 Guard | 1.91 | Born: 1990 22 JENKINS, CHARLES 9 WESTERMANN, LEO Guard | 1.91 | Born: 1989 Guard | 1.98 | Born: 1992 @thejenkinsguy22 @lwestermann 15 GIST, JAMES 3 TIRPANCI, ERGI Forward | 2.04 | Born: 1986 Guard | 2.00 | Born: 2000 3 COVIC, FILIP 13 BIBEROVIC, TARIK Guard | 1.80 | Born: 1989 Forward | 2.01 | Born: 2001 1 BROWN, DERRICK 91 STIMAC, VLADIMIR -

2013 NBA Draft

Round 1 Draft Picks 1. Cleveland Cavaliers – Anthony Bennett (PF), UNLV 2. Orlando Magic – Victor Oladipo (SG), Indiana 3. Washington Wizards – Otto Porter Jr. (SF), Georgetown 4. Charlotte Bobcats – Cody Zeller (PF), Indiana University 5. Phoenix Suns – Alex Len (C), Maryland 6. New Orleans Pelicans – Nerlens Noel (C), Kentucky 7. Sacramento KinGs – Ben McLemore (SG), Kansas 8. Detroit Pistons – Kentavious Caldwell-Pope (SG), Georgia 9. Minnesota Timberwolves – Trey Burke (PG), Michigan 10. Portland Trail Blazers – C.J. McCollum (PG), Lehigh 11. Philadelphia 76ers – Michael Carter-Williams (PG), Syracuse 12. Oklahoma City Thunder – Steven Adams (C), PittsburGh 13. Dallas Mavericks – Kelly Olynyk (PF), Gonzaga 14. Utah Jazz – Shabazz Muhammad (SF), UCLA 15. Milwaukee Bucks – Giannis Antetokounmpo (SF), Greece 16. Boston Celtics – Lucas Nogueira (C), Brazil 17. Atlanta Hawks – Dennis Schroeder (PG), Germany 18. Atlanta Hawks – Shane Larkin (PG), Miami 19. Cleveland Cavaliers – Sergey Karasev (SG), Russia 20. ChicaGo Bulls – Tony Snell (SF), New Mexico 21. Utah Jazz – Gorgui Dieng (C), Louisville 22. Brooklyn – Mason Plumlee, (C), Duke 23. Indiana Pacers – Solomon Hill (SF), Arizona 24. New York Knicks – Tim Hardaway Jr. (SG), MichiGan 25. Los Angeles Clippers – Reggie Bullock (SG), UNC 26. Minnesota Timberwolves – Andre Roberson (SF), Colorado 27. Denver Nuggets – Rudy Gobert (SF), France 28. San Antonio Spurs – Livio Jean-Charles (PF), French Guiana 29. Oklahoma City Thunder – Archie Goodwin (SG), Kentucky 30. Phoenix Suns – Nemanja Nedovic (SG), Serbia Round 2 Draft Picks 31. Cleveland Cavaliers – Allen Crabbe (SG), California 32. Oklahoma City Thunder – Alex Abrines (SG), Spain 33. Cleveland Cavaliers – Carrick Felix (SG), Arizona State 34. Houston Rockets – Isaiah Canaan (PG), Murray State 35. -

Thomas, Celtics Beat Kings in Mexico City

SPORTS SATURDAY, DECEMBER 5, 2015 Thomas, Celtics beat Kings in Mexico City MEXICO CITY: Prior to the game, Isaiah Toronto Raptors 106-105 and end an Thomas said he was disappointed when eight-game losing streak. Forward Darrell he saw Boston’s schedule this season Arthur added 19 points for the Nuggets, showed no visits to Sacramento. while center Joffrey Lauvergne scored 14 He still got to show off for his former points and grabbed 10 rebounds. team, though, scoring 21 points as the Celtics defeated the Kings 114-97 HEAT 97, THUNDER 95 Thursday night in the NBA*s third regular- Guard Dwyane Wade scored 28 points, season game played in Mexico. Thomas, including two game-winning free throws who played in Sacramento from 2011-14, with 1.5 seconds left, to lead the Miami finished with 21 points and nine assists Heat to a 97-95 victory over the Oklahoma helping the Celtics win for the fourth time City Thunder. Wade scored Miami’s final in their last five games. eight points to overshadow Oklahoma “I think I*m over it,” said Thomas, who duo Russell Westbrook and Kevin Durant, was traded to Phoenix last year and then both of whom had 25 points for the dealt to Boston late in the season. “But Thunder. obviously you want to play good against your former team. But you also want to MAGIC 103, JAZZ 94 get the win, because winning is the most Forward Tobias Harris led six players in important thing and today we did it.” double figures and the Orlando Magic Kelly Olynyk added 21 points for the took advantage of center Rudy Gobert’s Celtics while Avery Bradley and Jae absence to beat the Utah Jazz. -

… … … OKLAHOMA CITY THUNDER Vs

OKLAHOMA CITY THUNDER 2016-17 GAME NOTES GAME #47 HOME GAME #22 OKLAHOMA CITY THUNDER vs. DALLAS MAVERICKS (27-19) (16-29) THURSDAY, JANUARY 26, 2017 7:00 PM (CST) CHESAPEAKE ENERGY ARENA 2016-17 SCHEDULE/RESULTS .. … OKLAHOMA CITY THUNDER PROBABLE STARTERS … NO DATE OPP W/L **TV/RECORD No. Player Pos. Ht. Wt. Birthdate Prior to NBA/Home Country Yrs. Pro 1 10/26 @ PHI W, 103-97 1-0 2 10/28 vs. PHX W, 113-110 (OT) 2-0 21 Andre Roberson F 6-7 210 12/04/91 Colorado/USA 4 3 10/30 vs. LAL W, 113-96 3-0 4 11/2 @ LAC W, 85-83 4-0 3 Domantas Sabonis F 6-11 240 05/03/96 Gonzaga/Lithuania R 5 11/3 @ GSW L, 96-122 4-1 12 Steven Adams C 7-0 255 07/20/93 Pittsburgh/New Zealand 4 6 11/5 vs. MIN W, 112-92 5-1 5 Victor Oladipo G 6-4 210 05/04/92 Indiana/USA 4 7 11/7 vs. MIA W, 97-85 6-1 8 11/9 vs. TOR L, 102-112 6-2 0 Russell Westbrook G 6-3 200 11/12/88 UCLA/USA 9 9 11/11 vs. LAC L, 108-110 6-3 10 11/13 vs. ORL L, 119-117 6-4 …… … 11 11/14 @ DET L, 88-104 6-5 OKLAHOMA CITY THUNDER RESERVES 12 11/16 vs. HOU W, 105-103 7-5 13 11/18 vs. BKN W, 124-105 8-5 8 Alex Abrines G/F 6-6 190 08/01/93 FC Barcelona/Spain R 14 11/20 vs. -

Past Match-Ups & Game Notes

Turkish Airlines EuroLeague PAST MATCH-UPS & GAME NOTES REGULAR SEASON - ROUND 2 Euroleague Basketball Whatsapp Service Do you want to receive this document and other relevant information about Euroleague Basketball directly on your mobile phone via Whatsapp? Click here and follow the instructions. EUROLEAGUE 2020-21 | REGULAR SEASON | ROUND 2 1 Oct 08, 2020 CET: 18:00 LOCAL TIME: 20:00 | MEGASPORT ARENA CSKA MOSCOW MACCABI PLAYTIKA TEL AVIV 41 KURBANOV, NIKITA 50 ZOOSMAN, YOVEL Forward | 2.02 | Born: 1986 Guard | 2.00 | Born: 1998 33 MILUTINOV, NIKOLA 24 ALBER, EIDAN Center | 2.13 | Born: 1994 Guard | 1.94 | Born: 2000 28 LOPATIN, ANDREI 23 ZIZIC, ANTE Forward | 2.08 | Born: 1998 Center | 2.10 | Born: 1997 23 SHENGELIA, TORNIKE 18 SAHAR, DORI Forward | 2.06 | Born: 1991 Forward | 1.95 | Born: 2001 @TokoShengelia23 21 CLYBURN, WILL 11 DORSEY, TYLER Forward | 2.01 | Born: 1990 Guard | 1.96 | Born: 1996 17 VOIGTMANN, JOHANNES 6 COHEN, SANDY Forward | 2.11 | Born: 1992 Guard | 1.98 | Born: 1995 13 STRELNIEKS, JANIS 5 HUNTER, OTHELLO Guard | 1.91 | Born: 1989 Center | 2.03 | Born: 1986 11 ANTONOV, SEMEN 4 CALOIARO, ANGELO Forward | 2.02 | Born: 1989 Forward | 2.03 | Born: 1989 10 HACKETT, DANIEL 3 JONES, CHRIS Guard | 1.93 | Born: 1987 Guard | 1.88 | Born: 1993 7 UKHOV, IVAN 1 WILBEKIN, SCOTTIE Guard | 1.93 | Born: 1995 Guard | 1.88 | Born: 1993 6 HILLIARD, DARRUN 0 BRYANT, ELIJAH Guard | 1.98 | Born: 1993 Guard | 1.96 | Born: 1995 5 JAMES, MIKE 14 BLAYZER, OZ Guard | 1.85 | Born: 1990 Forward | 2.00 | Born: 1992 @TheNatural_05 4 KHOMENKO, -

Past Match-Ups & Game Notes

Turkish Airlines EuroLeague PAST MATCH-UPS & GAME NOTES REGULAR SEASON - ROUND 25 EUROLEAGUE 2018-19 | REGULAR SEASON | ROUND 25 1 Mar 07, 2019 CET: 21:00 LOCAL TIME: 21:00 | WIZINK CENTER REAL MADRID FENERBAHCE BEKO ISTANBUL 7 CAMPAZZO, FACUNDO 12 KALINIC, NIKOLA Guard | 1.80 | Born: 1991 Forward | 2.02 | Born: 1991 @facundocampazzo @nikola_kalinic 24 DECK, GABRIEL 10 MAHMUTOGLU, MELIH Forward | 1.99 | Born: 1995 Guard | 1.91 | Born: 1990 3 RANDOLPH, ANTHONY 16 SLOUKAS, KOSTAS Forward | 2.11 | Born: 1989 Guard | 1.90 | Born: 1990 14 AYON, GUSTAVO 24 VESELY, JAN Center | 2.08 | Born: 1985 Center | 2.13 | Born: 1990 @JanVesely24 20 CARROLL, JAYCEE 11 ENNIS, TYLER Guard | 1.88 | Born: 1983 Guard | 1.88 | Born: 1994 @JayceeCarroll 1 CAUSEUR, FABIEN 77 LAUVERGNE, JOFFREY Forward | 1.95 | Born: 1987 Center | 2.11 | Born: 1991 @therealFC55 5 FERNANDEZ, RUDY 18 ARNA, EGEHAN Forward | 1.96 | Born: 1985 Forward | 2.03 | Born: 1997 @rudy5fernandez @egehanarna 32 KUZMIC, OGNJEN 70 DATOME, LUIGI Center | 2.13 | Born: 1990 Forward | 2.03 | Born: 1987 @GigiDatome 23 LLULL, SERGIO 44 DUVERIOGLU, AHMET Guard | 1.92 | Born: 1987 Center | 2.09 | Born: 1993 @23Llull 19 PANTZAR, MELWIN 23 GUDURIC, MARKO Guard | 1.90 | Born: 2000 Guard | 1.96 | Born: 1995 25 PREPELIC, KLEMEN 32 GULER, SINAN Guard | 1.91 | Born: 1992 Guard | 1.92 | Born: 1983 9 REYES, FELIPE 5 HERSEK, BARIS Forward | 2.03 | Born: 1980 Forward | 2.08 | Born: 1988 @9FelipeReyes 22 TAVARES, WALTER 4 MELLI, NICOLO Center | 2.21 | Born: 1992 Forward | 2.05 | Born: 1991 @NikMelli 44 TAYLOR, JEFFERY -

Fenerbahce-Milano Round 21

24 gennaio, EUROLEAGUE, Round 21 Fenerbahce Istanbul (8-12) Vs A|X ARMANI EXCHANGE MILANO (10-10) OLIMPIA MILANOVs Anadolu ( Efes10 -Istanbul10) (7-2) N. Giocatore Ruolo Età Statura 00 Amedeo Della Valle Guardia 27 1.94 5 Vladimir Micov Ala 34 2.03 6 Paul Biligha Centro 29 2.00 7 Arturas Gudaitis Centro 26 2.11 9 Riccardo Moraschini (infortunato) Guardia-ala 28 1.94 10 Michael Roll Guardia-ala 32 1.96 13 Sergio Rodriguez Playmaker 33 1.90 15 Kaleb Tarczewski Centro 26 2.13 16 Nemanja Nedovic Guardia 28 1.91 20 Andrea Cinciarini (capitano) Playmaker 33 1.93 23 Christian Burns Ala-centro 34 2.03 28 Keifer Sykes Playmaker 26 1.80 32 Jeff Brooks (infortunato) Ala forte 30 2.03 40 Luis Scola Ala forte 39 2.07 OLIMPIA MILANO: STAFF 2019/20 Ruolo Head Coach Ettore Messina Assistant Coach Tom Bialaszewski Mario Fioretti Marco Esposito Stefano Bizzozero Dir. Performance Team Roberto Oggioni Preparatore Atletico Giustino Danesi Ass. Preparatore Atletico Luca Agnello Ass. Preparatore Atletico Federico Conti Fisioterapisti Alessandro Colombo Claudio Lomma Marco Monzoni Dottori Matteo Acquati Daniele Casalini Ezio Giani Michele Ronchi OLIMPIA: LE CIFRE TOTALI OLIMPIA: LE MEDIE STAGIONALI OLIMPIA MILANO Istanbul battendo in semifinale proprio l’Olimpia e poi vincendo la finale con uno storico canestro NOTE E CURIOSITA’ sulla sirena di Sasha Djordjevic che a fine stagione venne a giocare a Milano. Era il suo primo anno da I PRECEDENTI VS. FENERBAHCE– Nei 17 capoallenatore. L’Olimpia era allenata da Mike precedenti, il Fenerbahce guida 11-6. Le due D’Antoni, per il secondo anno, e la star era Darryl squadre si sono affrontate nel 1996/97, primo Dawkins. -

Past Match-Ups & Game Notes

Turkish Airlines EuroLeague PAST MATCH-UPS & GAME NOTES REGULAR SEASON - ROUND 6 EUROLEAGUE 2019-20 | REGULAR SEASON | ROUND 6 1 Oct 31, 2019 CET: 20:00 LOCAL TIME: 19:00 | ALEKSANDAR NIKOLIC HALL CRVENA ZVEZDA MTS BELGRADE KHIMKI MOSCOW REGION 50 OJO, MICHAEL 11 JEREBKO, JONAS Center | 2.16 | Born: 1993 Center | 2.08 | Born: 1987 32 JOVANOVIC, NIKOLA 10 DESIATNIKOV, ANDREI Center | 2.11 | Born: 1994 Center | 2.20 | Born: 1994 28 SIMANIC, BORISA 45 BERTANS, DAIRIS Forward | 2.09 | Born: 1998 Guard | 1.92 | Born: 1989 13 DOBRIC, OGNJEN 31 VALIEV, EVGENY Guard | 2.00 | Born: 1994 Forward | 2.05 | Born: 1990 12 BARON, BILLY 24 JOVIC, STEFAN Guard | 1.88 | Born: 1990 Guard | 1.98 | Born: 1990 @billy_baron 10 LAZIC, BRANKO 13 GILL, ANTHONY Guard | 1.95 | Born: 1989 Forward | 2.04 | Born: 1992 9 NENADIC, NEMANJA 9 VIALTSEV, EGOR Forward | 1.97 | Born: 1994 Forward | 1.93 | Born: 1985 7 DAVIDOVAC, DEJAN 8 ZAYTSEV, VYACHESLAV Forward | 2.02 | Born: 1995 Guard | 1.90 | Born: 1989 5 PERPEROGLOU, STRATOS 7 KARASEV, SERGEY Forward | 2.03 | Born: 1984 Forward | 2.01 | Born: 1993 4 BROWN, LORENZO 5 BOOKER, DEVIN Guard | 1.96 | Born: 1990 Center | 2.05 | Born: 1991 22 JENKINS, CHARLES 12 MONIA, SERGEY Guard | 1.91 | Born: 1989 Forward | 2.02 | Born: 1983 @thejenkinsguy22 15 GIST, JAMES 6 TIMMA, JANIS Forward | 2.04 | Born: 1986 Forward | 2.01 | Born: 1992 3 COVIC, FILIP 3 KRAMER, CHRIS Guard | 1.80 | Born: 1989 Guard | 1.91 | Born: 1988 1 BROWN, DERRICK 1 SHVED, ALEXEY Forward | 2.03 | Born: 1987 Guard | 1.98 | Born: 1988 PAST MATCH-UPS & GAME NOTES