Overview of Supercomputer Systems

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Xinya (Leah) Zhao Abdulahi Abu Outline

K-Computer Xinya (Leah) Zhao Abdulahi Abu Outline • History of Supercomputing • K-Computer Architecture • Programming Environment • Performance Evaluation • What's next? Timeline of Supercomputing Control Data The Cray era Massive Processing Petaflop Computing Corporation (1960s) (mid-1970s - 1980s) (1990s) (21st century) • CDC 1604 (1960): First • 80 MHz Cray-1 (1976): • Hitachi SR2201 (1996): • Realization: Power of solid state The most successful used 2048 processors large number of small • CDC 6600 (1964): 100 supercomputers in history connected via a fast three processors can be computers were sold at $8 • Vector processor dimensioanl corssbar harnessed to achieve high million each • Introduced chaining in network performance • Gained speed by "farming which scalar and vector • ASCI Red: mesh-based • IBM Blue Gene out" work to peripheral registers generate interim MIMD massively parallel architecture: trades computing elements, results system with over 9,000 processor speed for low freeing the CPU to • The Cray-2 (1985): No compute nodes and well power consumption, so a process actual data chaning and high memory over 12 terabytes of disk large number of • STAR-100: First to use latency with deep storage processors can be used at vector processing pipelinging • ASCI Red was the first air cooled temperature ever to break through the • K computer (2011) : 1 teraflop barrier fastest in the world K Computer is #1 !!! Why K Computer? Purpose: • Perform extremely complex mathematical or scientific calculations (ex: modelling the changes -

Fujitsu PRIMEHPC FX10 Supercomputer

Brochure Fujitsu PRIMEHPC FX10 Supercomputer Fujitsu PRIMEHPC FX10 Supercomputer Fujitsu's PRIMEHPC FX10 supercomputer provides the ability to address these high magnitude problems by delivering over 23 Petaflops, a quantum leap in processing performance. Combining high performance, scalability, and Green Credentials as well as High Performance High Reliability and Operability in Large reliability with superior energy efficiency, Mean Power Savings Systems PRIMEHPC FX10 further improves on Fujitsu's In today's quest for a greener world the Incorporating RAS functions, proven on supercomputer technology employed in the "K compromise between high performance and mainframe and high-end SPARC64 servers, computer," which achieved the world's environmental footprint is a major issue. At the SPARC64 IXfx processor delivers higher top -ranked performance*¹ in November 2011. heart of PRIMEHPC FX10 are SPARC64 IXfx reliability and operability. The flexible 6D All of the supercomputer's components—from processors that deliver ultra high performance of Mesh/Torus architecture of the Tofu processors to middleware—have been 236.5 Gigaflops and superb power efficiency of interconnect also contributes to overall developed by Fujitsu, thereby delivering high over 2 Gigaflops per watt. reliability. The result is outstanding levels of reliability and operability. The system operation: enhanced by the advanced set of can be scaled to meet customer needs, up to a Application Performance and Simple system management, monitoring, and job 1,024 rack configuration achieving a Development management software, and the highly super -high speed of 23.2 PFLOPS. SPARC64 IXfx processor includes extensions for scalable distributed file system. HPC applications known as HPC-ACE. This plus Fujitsu has developed PRIMEHPC FX10, a wide memory bandwidth, high performance Tofu supercomputer capable of world-class interconnect, Technical Computing Suite, HPC performance of up to 23.2 PFLOPS. -

Masahori Nunami (NIFS)

US-Japan Joint Institute for Fusion Theory National Institute for Fusion Science Workshop on Innovations and co-designs of fusion simulations towards extreme scale computing August 20-21, 2015 Nagoya University, Nagoya, Japan Numerical Simulation Research in NIFS and Fujitsu’s New Supercomputer PRIMEHPC FX100 Masanori Nunamia, Toshiyuki Shimizub aNational Institute for Fusion Science, Japan bFujitsu, Japan Outline M. Nunami (NIFS) l Overview of Numerical Simulation Reactor Research Project in NIFS l Supercomputers in NIFS and Japan T. Shimizu (Fujitsu) l Fujitsu’s new supercomputer (FX100) l Features and evaluations l Application evaluation l The next step in exascale capability Page § 2 Research activities in NIFS In NIFS, there exists 7 divisions and 3 projects for fusion research activities. LHD project NSR project FER project Page § 3 Numerical Simulation Reactor Research Project ( NSRP ) NSRP has been launched to continue the tasks in simulation group in NIFS, and evolve them on re-organization of NIFS in 2010. Final Goal Based on large-scale numerical simulation researches by using a super-computer, - To understand and systemize physical mechanisms in fusion plasmas - To realize ultimately the “Numerical Simulation Reactor” which will be an integrated predictive modeling for plasma behaviors in the reactor. Realization of numerical test reactor requires - understanding all element physics in each hierarchy, - inclusion of all elemental physical processes into our numerical models, - development of innovative simulation technology -

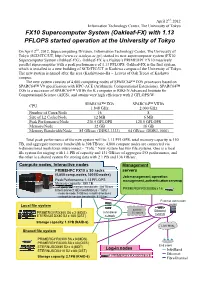

FX10 Supercomputer System (Oakleaf-FX) with 1.13 PFLOPS Started Operation at the University of Tokyo

April 2nd, 2012 Information Technology Center, The University of Tokyo FX10 Supercomputer System (Oakleaf-FX) with 1.13 PFLOPS started operation at the University of Tokyo On April 2nd, 2012, Supercomputing Division, Information Technology Center, The University of Tokyo (SCD/ITC/UT, http://www.cc.u-tokyo.ac.jp/) started its new supercomputer system (FX10 Supercomputer System (Oakleaf-FX)). Oakleaf-FX is a Fujitsu’s PRIMEHPC FX10 massively parallel supercomputer with a peak performance of 1.13 PFLOPS. Oakleaf-FX is the first system, which is installed to a new building of SCD/ITC/UT in Kashiwa campus of the University of Tokyo. The new system is named after the area (Kashiwa-no-Ha = Leaves of Oak Trees) of Kashiwa campus. The new system consists of 4,800 computing nodes of SPARC64™ IXfx processors based on SPARC64™ V9 specification with HPC-ACE (Arithmetic Computational Extensions). SPARC64™ IXfx is a successor of SPARC64™ VIIIfx for K computer in RIKEN Advanced Institute for Computational Science (AICS), and attains very high efficiency with 2 GFLOPS/W. SPARC64™ IXfx SPARC64™ VIIIfx CPU 1.848 GHz 2.000 GHz Number of Cores/Node 16 8 Size of L2 Cache/Node 12 MB 6 MB Peak Performance/Node 236.5 GFLOPS 128.0 GFLOPS Memory/Node 32 GB 16 GB Memory Bandwidth/Node 85 GB/sec (DDR3-1333) 64 GB/sec (DDR3-1000) Total peak performance of the new system will be 1.13 PFLOPS, total memory capacity is 150 TB, and aggregate memory bandwidth is 398 TB/sec. 4,800 compute nodes are connected via 6-dimensional mesh/torus interconnect - “Tofu.” New system has two file systems. -

Fujitsu Standard Tool

Toward Building up ARM HPC Ecosystem Shinji Sumimoto, Ph.D. Next Generation Technical Computing Unit FUJITSU LIMITED Sept. 12th, 2017 0 Copyright 2017 FUJITSU LIMITED Outline Fujitsu’s Super computer development history and Post-K Processor Overview Compiler Development for ARMv8 with SVE Towards building up ARM HPC Ecosystem 1 Copyright 2017 FUJITSU LIMITED Fujitsu’s Super computer development history and Post-K Processor Overview 2 Copyright 2017 FUJITSU LIMITED Fujitsu Supercomputers Fujitsu has been providing high performance FX100 supercomputers for 40 years, increasing application performance while maintaining FX10 Post-K Under Development application compatibility w/ RIKEN No.1 in Top500 (June and Nov., 2011) K computer World’s Fastest FX1 Vector Processor (1999) Most Efficient Performance VPP5000 SPARC in Top500 (Nov. 2008) NWT* Enterprise Developed with NAL No.1 in Top500 PRIMEQUEST (Nov. 1993) VPP300/700 PRIMEPOWER PRIMERGY CX400 Gordon Bell Prize Skinless server (1994, 95, 96) ⒸJAXA HPC2500 VPP500 World’s Most Scalable PRIMERGY VP Series Supercomputer BX900 Cluster node AP3000 (2003) HX600 F230-75APU Cluster node AP1000 PRIMERGY RX200 Cluster node Japan’s Largest Japan’s First Cluster in Top500 Vector (Array) (July 2004) Supercomputer (1977) *NWT: Numerical Wind Tunnel 3 Copyright 2017 FUJITSU LIMITED FUJITSU high-end supercomputers development 2011 2012 2013 2014 2015 2016 2017 2018 2019 2020 K computer and PRIMEHPC FX10/FX100 in operation FUJITSU The CPU and interconnect of FX10/FX100 inherit the K PRIMEHPC FX10 PRIMEHPC FX100 -

Primehpc Fx10

Fujitsu Petascale Supercomputer PRIMEHPC FX10 Toshiyuki Shimizu Director of System Development Div., Next Generation Technical Computing Unit Fujitsu Limited 4x2 racks (768 compute nodes) configuration Copyright 2011 FUJITSU LIMITED Outline Design target Technologies for highly scalable supercomputers PRIMEHPC FX10 CPU Interconnect Summary 1 Copyright 2011 FUJITSU LIMITED Targets for supercomputer development High effective High performance performance and and low power productivity of highly consumption parallel applications High operability and availability for large- scale systems 2 Copyright 2011 FUJITSU LIMITED Technologies for highly scalable supercomputers Developed key technologies & implemented in the series of systems PRIMEHPC FX10 will be available from Jan., 2012 Hybrid parallel Tofu interconnect (VISIMPACT) ISA extension Collective SW (HPC-ACE) CY2008~ CY2011. June~ 40GF, 4-core CPU 128GF, 8-core CPU CY2012~ Linpack 111TF Linpack 10.51 PF 236.5GF, 16-core CPU 3,008 nodes 88,128 nodes ~23.2 PF, 98,304 nodes *The K computer, which is being jointly developed by RIKEN and Fujitsu, is part of the High-Performance Computing Infrastructure (HPCI) initiative led by Japan's Ministry of Education, Culture, Sports, Science and Technology (MEXT). 3 Copyright 2011 FUJITSU LIMITED PRIMEHPC FX10 High-speed and ultra-large-scale computing environment Up to 23.2 PFLOPS (98,304 nodes, 1,024 racks, 6 petabytes of memory) Node New processor SPARC64™ IXfx Uncompromised memory system Tofu interconnect Interconnect controller ICC -

Overview of Supercomputer Systems

Overview of Supercomputer Systems Supercomputing Division Information Technology Center The University of Tokyo Supercomputers at ITC, U. of Tokyo (retired, March 2014) Oakleaf-fx T2K-Todai Yayoi (Fujitsu PRIMEHPC FX10) (Hitachi HA8000-tc/RS425 ) (Hitachi SR16000/M1) Total Peak performance : 1.13 PFLOPS Total Peak performance : 140 TFLOPS Total Peak performance : 54.9 TFLOPS Total number of nodes : 4800 Total number of nodes : 952 Total number of nodes : 56 Total memory : 150 TB Total memory : 32000 GB Total memory : 11200 GB Peak performance / node : 236.5 GFLOPS Peak performance / node : 147.2 GFLOPS Peak performance / node : 980.48 GFLOPS Main memory per node : 32 GB Main memory per node : 32 GB, 128 GB Main memory per node : 200 GB Disk capacity : 1.1 PB + 2.1 PB Disk capacity : 1 PB Disk capacity : 556 TB SPARC64 Ixfx 1.84GHz AMD Quad Core Opteron 2.3GHz IBM POWER 7 3.83GHz “Oakbridge-fx” with 576 nodes installed in April 2014 (separated) (136TF) Total Users > 2,000 2 3 •HPCI • Supercomputer Systems in SCD/ITC/UT • Overview of Fujitsu FX10 (Oakleaf-FX) • Post T2K System 4 Innovative High Performance Computing Infrastructure (HPCI) •HPCI – Seamless access to K computer, supercomputers, and user's machines – Distributed shared storage system • HPCI Consortium – Providing proposals/suggestions to the government and related organizations • Plan and operation of HPCI system • Promotion of computational sciences • Future supercomputing – 38 organizations – Operations started in Fall 2012 • https://www.hpci-office.jp/ 5 SPIRE/HPCI Strategic Programs for Innovative Research • Objectives – Scientific results as soon as K computer starts its operation – Establishment of several core institutes for comp. -

Introduction of Fujitsu's Next-Generation Supercomputer

Introduction of Fujitsu’s next-generation supercomputer MATSUMOTO Takayuki July 16, 2014 HPC Platform Solutions Fujitsu has a long history of supercomputing over 30 years Technologies and experience of providing Post-FX10 several types of supercomputers such as vector, MPP and cluster, are inherited PRIMEHPC FX10 and implemented in latest supercomputer product line No.1 in Top500 K computer (June and Nov., 2011) FX1 World’s Fastest Vector Processor (1999) Highest Performance VPP5000 SPARC efficiency in Top500 NWT* Enterprise (Nov. 2008) Developed with NAL No.1 in Top500 PRIMEQUEST VPP300/700 (Nov. 1993) PRIMEPOWER PRIMERGY CX400 Gordon Bell Prize HPC2500 Skinless server (1994, 95, 96) ⒸJAXA VPP500 World’s Most Scalable PRIMERGY VP Series Supercomputer BX900 Cluster node AP3000 (2003) HX600 Cluster node F230-75APU AP1000 PRIMERGY RX200 Cluster node Japan’s Largest Cluster in Top500 Japan’s First (July 2004) Vector (Array) *NWT: Supercomputer Numerical Wind Tunnel (1977) 1 Copyright 2014 FUJITSU LIMITED K computer and Fujitsu PRIMEHPC series Single CPU/node architecture for multicore Good Bytes/flop and scalability Key technologies for massively parallel supercomputers Original CPU and interconnect Support for tens of millions of cores (VISIMPACT*, Collective comm. HW) VISIMPACT SIMD extension HPC-ACE HPC-ACE HPC-ACE2 Collective comm. HW Direct network Tofu Direct network Tofu Tofu interconnect 2 HMC & Optical connections CY2008~ CY2010~ CY2012~ Coming soon 40GF, 4-core/CPU 128GF, 8-core/CPU 236.5GF, 16-core/CPU 1TF~, 32-core/CPU -

Supercomputers in Nagoya University

2015/3/16 Supercomputers in Nagoya University KATSUYA ISHII The Information Technology Center (ITC) of Nagoya University was originally established as the Computing Center of Nagoya University, which all researchers of academic organization in Japan can used. The first supercomputer of Nagoya University was Fujitsu supercomputer VP100(250Mflops) installed in the Institute of Plasma Physics in January 1984, which was the first operated supercomputer in Japan. 1 2015/3/16 High Performance Computing Infrasturacture of Japan http://www.hpci‐office.jp/folders/english 3 Example 1.The K computer ( named for the Japanese word “kei” (京), meaning 1016) Fujitsu 88,128 SPARC64VIIIfx’s at the RIKEN Advanced Institute for Computational Science ,Kobe. 11.28Petaflops (4th of Top500) *TIT : Tsubame 2.5: Xeon+GPU 5.375Petaflops *U. Tokyo : Fujitsu FX10 SPARC64IXfx’s 4,800nodes 1.135Petaflops *Tohoku Univ. :NEC SX‐ACE 2,560nodes etc 0.707Petaflops 2 2015/3/16 2015/4to9- PhaseⅡ 3.9 PetaFlops 2014/3-2015/3 Strage 11.28PB + High Definition Visualizaion System 2013/10-2014/3 0.59 PetaFlops phaseⅠ Strage 7.32PB Fujitsu PRIMEHPC FX100 change 0.56 PetaFlops Strage 3.96PB Fujitsu PRIMERGY CX400 gradeup SGI UV2000 add strage visualization system Fujitsu PRIMEHPC Fujitsu PRIMERGY FX10 CX400 8K High-definition add new external strorage Display Dome monitor HMD(Mix Reality) Storage system polarized 3D viewer SINET4 Network configuration NICE4 Log in nods 8K display (185inch) Disk 3.36PB UV2000 Private LAN Control AN NetWork for MDS OSS memory MDT OST NFS_SV MetaData PRIMERGY CX400/250 PRIMERGY CX400/270 PRIMEHPC FX10 Intel Intel IvyBridge(2.7GHz)12 コア Nodes:384 IvyBridge(2.7GHz)12cores +Xeon Phi3100 Storage system Rpeak:90.8 TF Nodes:368 Nodes:184 SFA12K‐40+SS8460 Memory:32 GiB Rpeak:190.7 TF Rpeak:279.9 TF [DDN] 4 OS:LinuxOS(TXCOS) Memory:64 GiB Memory:128 GiB OS:RedhatEnterpriseLinux6.4 OS:RedhatEnterpriseLinux6.4 Physical Capacity: 3.96 PB IBnetwork(InfiniBand‐FDR): 56GB/s Contral Manege. -

FUJITSU Supercomputer PRIMEHPC FX10 Datasheet

Brochure FUJITSU Supercomputer PRIMEHPC FX10 FUJITSU Supercomputer PRIMEHPC FX10 PRIMEHPC FX10 provides the ability to address these high magnitude problems by delivering over 23 Petaflops, a quantum leap in processing performance. Combining high performance, scalability, and Green Credentials as well as High Performance High Reliability and Operability in Large reliability with superior energy efficiency, Mean Power Savings Systems PRIMEHPC FX10 further improves on Fujitsu's In today's quest for a greener world the Incorporating RAS functions, proven on supercomputer technology employed in the "K compromise between high performance and mainframe and high-end SPARC64 servers, computer," which achieved the world's environmental footprint is a major issue. At the SPARC64 IXfx processor delivers higher top-ranked performance*¹ in November 2011. heart of PRIMEHPC FX10 are SPARC64 IXfx reliability and operability. The flexible 6D All of the supercomputer's components—from processors that deliver ultra high performance of Mesh/Torus architecture of the Tofu processors to middleware—have been 236.5 Gigaflops and superb power efficiency of interconnect also contributes to overall developed by Fujitsu, thereby delivering high over 2 Gigaflops per watt. reliability. The result is outstanding levels of reliability and operability. The system operation: enhanced by the advanced set of can be scaled to meet customer needs, up to a Application Performance and Simple system management, monitoring, and job 1,024 rack configuration achieving a Development management software, and the highly super-high speed of 23.2 PFLOPS. SPARC64 IXfx processor includes extensions for scalable distributed file system. HPC applications known as HPC-ACE. This plus Fujitsu has developed PRIMEHPC FX10, a wide memory bandwidth, high performance Tofu supercomputer capable of world-class interconnect, Technical Computing Suite, HPC performance of up to 23.2 PFLOPS. -

Peta/Exascale Computing Information Technology Center the University of Tokyo

Challenges towards Post- Peta/Exascale Computing Information Technology Center The University of Tokyo Kengo Nakajima Information Technology Center The University of Tokyo 53rd HPC User Forum, RIKEN AICS, Kobe, Japan July 16, 2014 2 Information Technology Center The University of Tokyo (ITC/U.Tokyo) • Campus/Nation-wide Services on Infrastructure for Information, related Research & Education • Established in 1999 – Campus-wide Communication & Computation Division – Digital Library/Academic Information Science Division – Network Division – Supercomputing Division • Core Institute of Nation-wide Infrastructure Services/Collaborative Research Projects – Joint Usage/Research Center for Interdisciplinary Large- scale Information Infrastructures (JHPCN) (2010-) – HPCI (HPC Infrastructure) 3 Innovative High Performance Computing Infrastructure (HPCI) • HPCI Consortium – Providing proposals/suggestions to the government and related organizations, operations of infrastructure – 38 organizations (Computer Centers, Users) – Operations started in Fall 2012 • https://www.hpci-office.jp/ • Missions – Infrastructure (Supercomputers & Distributed Shared Storage System) • Seamless access to K, SC’s (9 Univ’s), & user's machines – Promotion of Computational Science • Strategic Programs for Innovative Research (SPIRE) – R&D for Future Systems (Post-peta/Exascale) >22 PFLOPS November 2013 Hokkaido Univ.: SR16000/M1(172TF, 22TB) AICS, RIKEN: K computer (11.28 PF, 1.27PiB) BS2000 (44TF, 14TB) Tohoku Univ.: Kyoto Univ. SX-9(29.4TF, 18TB) XE6 (300.8 TF, 59 TB) -

PRIMEHPC FX10 - Performance Evaluations and Approach Towards the Next

SC12 Booth presentation PRIMEHPC FX10 - Performance evaluations and approach towards the next - Toshiyuki Shimizu Next Generation Technical Computing Unit FUJITSU LIMITED November, 2012 Outline Introduction of Fujitsu petascale supercomputers and key technologies of massively parallel computers K computer and FX10 performance evaluations HPC-ACE: CPU architecture extension VISIMPACT: Hybrid parallel execution support Challenges towards Exascale computing Summary SC12 Booth presentation for FX10 1 Copyright 2012 FUJITSU LIMITED Fujitsu HPC from workplace to top-end PRIMERGY x86 Clusters Celsius PRIMEHPC FX10 workstations Supercomputers SC12 Booth presentation for FX10 2 Copyright 2012 FUJITSU LIMITED Customers of large-scale HPC systems Customer Type Peak RIKEN (Kobe AICS) The K computer 11.28 PF National Computational Infrastructure, Australia (*) x86 Cluster (CX400), FX10 1.2 PF The University of Tokyo FX10 1.13 PF Central Weather Bureau of Taiwan (*) FX10 > 1 PF Kyushu University x86 Cluster (CX400), FX10 691 TF HPC Wales, UK x86 Cluster >300 TF Japan Atomic Energy Agency x86 Cluster, FX1, SMP 214 TF Institute for Molecular Science x86 Cluster (RX300), FX10 >168 TF Institute for Molecular Science (*) x86 Cluster (CX400) >136 TF Japan Aerospace Exploration Agency FX1, SMP >135 TF RIKEN (Wako Lab. RICC) x86 Cluster (RX200) 108 TF Institute for Solid State Physics of the Univ. of Tokyo (*) FX10 90 TF NAGOYA University x86 Cluster (HX600), FX1, SMP 60 TF A*STAR, Singapore x86 Cluster (BX900) > 45 TF A Manufacturer x86 Cluster >250 TF (*)