Improvements for the XL Algorithm with Applications to Algebraic Cryptanalysis

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Wearable-Based Pedestrian Inertial Navigation with Constraints Based on Biomechanical Models

Wearable-based pedestrian inertial navigation with constraints based on biomechanical models. Dina Bousdar Ahmed∗ and Kai Metzger†. ∗ Institute of Communications and Navigation, German Aerospace Center (DLR), Munich, Germany, Email: [email protected]. † Technical University of Munich, Germany, Email: kai [email protected]. Abstract: Our aim in this paper is to analyze inertial navigation systems (INSs) from the biomechanical point of view. We wanted to improve the performance of a thigh INS by applying biomechanical constraints. To that end, we propose a biomechanical model of the leg. The latter establishes a relationship between the orientation of the thigh INS and the kinematic motion of the leg. This relationship allows to observe the effect that the orientation errors have in the expected motion of the leg. We observe that the errors in the orientation estimation of an INS translate into incoherent human motion. Based on this analysis, we proposed a modified thigh INS to integrate biomechanical constraints. The results show that the proposed system outperforms the thigh INS in 50% regarding distance error and 32% regarding orientation error. [...] sensor to correct the heading estimation [4]. The combination of inertial sensors and WiFi measurements is useful in indoor environments. Chen et al. use the position estimated through WiFi measurements to correct the position estimates of an inertial navigation system [5]. The latter is based on a smartphone. The detection of known landmarks, e.g. turns, elevators, etc, can improve the position estimation. Chen et al. [5] incorporate landmarks to improve the performance of their navigation system. Munoz et al. [6] use also landmarks to correct directly the heading estimation of a thigh-mounted inertial navigation

The Data Encryption Standard (DES) – History

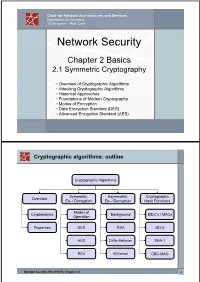

Chair for Network Architectures and Services, Department of Informatics, TU München – Prof. Carle. Network Security, WS 2010/11, Chapter 2.1. Chapter 2 Basics, 2.1 Symmetric Cryptography • Overview of Cryptographic Algorithms • Attacking Cryptographic Algorithms • Historical Approaches • Foundations of Modern Cryptography • Modes of Encryption • Data Encryption Standard (DES) • Advanced Encryption Standard (AES). Cryptographic algorithms: outline [diagram: Cryptographic Algorithms, divided into Overview (Properties, Cryptanalysis); Symmetric En- / Decryption (Modes of Operation, DES, AES, RC4); Asymmetric En- / Decryption (Background, RSA, Diffie-Hellman, ElGamal); Cryptographic Hash Functions (MDC’s / MACs, MD-5, SHA-1, CBC-MAC)]. Basic Terms: Plaintext and Ciphertext. Plaintext P: The original readable content of a message (or data). P_netsec = „This is network security“. Ciphertext C: The encrypted version of the plaintext. C_netsec = „Ff iThtIiDjlyHLPRFxvowf“. [diagram: P is encrypted with key k1 to give C; C is decrypted with key k2 to give P]. In case of symmetric cryptography, k1 = k2. Basic Terms: Block cipher and Stream cipher. Block cipher: A cipher that encrypts / decrypts inputs of length n to outputs of length n given the corresponding key k. • n is block length. Most modern symmetric ciphers are block ciphers, e.g. AES, DES, Twofish, … Stream cipher: A symmetric cipher that generates a random bitstream, called key stream, from the symmetric key k. Ciphertext = key stream XOR plaintext. Cryptographic algorithms: overview

Preparation and Investigation of Highly Charged Ions in a Penning Trap for the Determination of Atomic Magnetic Moments

Preparation and Investigation of Highly Charged Ions in a Penning Trap for the Determination of Atomic Magnetic Moments (German title: Präparation und Untersuchung von hochgeladenen Ionen in einer Penning-Falle zur Bestimmung atomarer magnetischer Momente). Dissertation approved by the Fachbereich Physik of the Technische Universität Darmstadt in fulfillment of the requirements for the degree of Doctor of Natural Sciences (Dr. rer. nat.) by Dipl.-Phys. Marco Wiesel from Neustadt an der Weinstraße. June 2017, Darmstadt, D 17. 1. Referee: Prof. Dr. rer. nat. Gerhard Birkl. 2. Referee: Privatdozent Dr. rer. nat. Wolfgang Quint. Submission date: 18.04.2017. Examination date: 24.05.2017. Title: The logo of ARTEMIS – AsymmetRic Trap for the measurement of Electron Magnetic moments in IonS. Please cite this document as: URN: urn:nbn:de:tuda-tuprints-62803 URL: http://tuprints.ulb.tu-darmstadt.de/id/eprint/6280 This document is provided by tuprints, the e-publishing service of TU Darmstadt http://tuprints.ulb.tu-darmstadt.de [email protected] The publication is licensed under the following Creative Commons license: Attribution-NonCommercial-NoDerivatives 4.0 International https://creativecommons.org/licenses/by-nc-nd/4.0/ Abstract: The ARTEMIS experiment aims at measuring magnetic moments of electrons bound in highly charged ions that are stored in a Penning trap.

Konrad Zuse Und Die Schweiz

Research Collection. Report. Konrad Zuse und die Schweiz (Konrad Zuse and Switzerland): the Z4 relay computer at ETH Zürich; the M9 calculating punch for the Swiss Remington Rand; in-house construction of the ERMETH vacuum-tube computer; eyewitness report on the Z4; unknown documents on the M9; a contribution to the beginnings of the history of computing in Switzerland. Author(s): Bruderer, Herbert. Publication Date: 2011. Permanent Link: https://doi.org/10.3929/ethz-a-006517565 Rights / License: In Copyright - Non-Commercial Use Permitted. This page was generated automatically upon download from the ETH Zurich Research Collection. For more information please consult the Terms of use. ETH Library. Herbert Bruderer, ETH Zürich, Departement Informatik, Professur für Informationstechnologie und Ausbildung, Zürich, July 2011. Author's address: Herbert Bruderer, ETH Zürich, Informationstechnologie und Ausbildung, CAB F 14, Universitätsstrasse 6, CH-8092 Zürich, phone: +41 44 632 73 83, fax: +41 44 632 13 90, [email protected], www.ite.ethz.ch. Private: Herbert Bruderer, Bruderer Informatik, Seehaldenstrasse 26, Postfach 47, CH-9401 Rorschach, phone: +41 71 855 77 11, fax: +41 71 855 72 11, [email protected]. Cover picture: relay cabinets of the Z4 (left: Heinz Rutishauser, right: Ambros Speiser), ETH Zürich 1950, © ETH-Bibliothek Zürich, Bildarchiv. Picture on the back cover: farewell speech by Konrad Zuse at the Z4 on 6 July 1950 at Zuse KG in Neukirchen (Kreis Hünfeld), © Privatarchiv Horst Zuse, Berlin. Eidgenössische Technische Hochschule Zürich, Departement Informatik, Professur für Informationstechnologie und Ausbildung, CH-8092 Zürich, www.abz.inf.ethz.ch 1.

Symmetric Encryption: AES

Symmetric Encryption: AES. Yan Huang. Credits: David Evans (UVA). Advanced Encryption Standard ▪ 1997: NIST initiates program to choose Advanced Encryption Standard to replace DES ▪ Why not just use 3DES? AES Process ▪ Open Design • DES: design criteria for S-boxes kept secret ▪ Many good choices • DES: only one acceptable algorithm ▪ Public cryptanalysis efforts before choice • Heavy involvement of academic community, leading public cryptographers ▪ Conservative (but “quick”): 4 year process. AES Requirements ▪ Secure for next 50-100 years ▪ Royalty free ▪ Performance: faster than 3DES ▪ Support 128, 192 and 256 bit keys • Brute force search of 2^128 keys at 1 trillion keys/second would take 10^19 years (10^9 × age of universe). AES Round 1 ▪ 15 submissions accepted ▪ Weak ciphers quickly eliminated • Magenta broken at conference! ▪ 5 finalists selected: • MARS (IBM) • RC6 (Rivest et al.) • Rijndael (Belgian cryptographers) • Serpent (Anderson, Biham, Knudsen) • Twofish (Schneier et al.). AES Evaluation Criteria 1. Security: Most important, but hardest to measure. Resistance to cryptanalysis, randomness of output. 2. Cost and Implementation Characteristics: Licensing, Computational, Memory. Flexibility (different key/block sizes), hardware implementation. AES Criteria Tradeoffs ▪ Security v. Performance • How do you measure security? ▪ Simplicity v. Complexity • Need complexity for confusion • Need simplicity to be able to analyze and implement efficiently. Breaking a Cipher ▪ Intuitive Impression • Attacker can decrypt secret messages • Reasonable amount of work, actual amount of ciphertext ▪ “Academic” Ideology • Attacker can determine something about the message • Given unlimited number of chosen plaintext-ciphertext pairs • Can perform a very large number of computations, up to, but not including, 2^n, where n is the key size in bits (i.e.

P the Pioneers and Their Computers

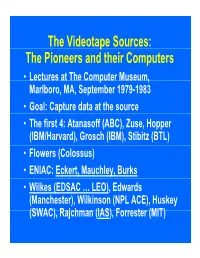

The Videotape Sources: The Pioneers and their Computers • Lectures at The Computer Museum, Marlboro, MA, September 1979-1983 • Goal: Capture data at the source • The first 4: Atanasoff (ABC), Zuse, Hopper (IBM/Harvard), Grosch (IBM), Stibitz (BTL) • Flowers (Colossus) • ENIAC: Eckert, Mauchly, Burks • Wilkes (EDSAC … LEO), Edwards (Manchester), Wilkinson (NPL ACE), Huskey (SWAC), Rajchman (IAS), Forrester (MIT). What did it feel like then? • What were the computers? • Why did their inventors build them? • What materials (technology) did they build from? • What were their speed and memory size specs? • How did they work? • How were they used or programmed? • What were they used for? • What did each contribute to future computing? • What were the by-products? and alumni/ae? The “classic” five boxes of a stored program digital computer: Memory (M), Input (I), Output (O), Central Control (CC), Central Arithmetic (CA). How was programming done before programming languages and O/Ss? • ENIAC was programmed by routing control pulse cables forming the “program counter” • Clippinger and von Neumann made “function codes” for the tables of ENIAC • Kilburn at Manchester ran the first 17 word program • Wilkes, Wheeler, and Gill wrote the first book on programming, reprinted by Babbage Institute Series • Parallel versus Serial • Pre-programming languages and operating systems • Big idea: compatibility for program investment – EDSAC was transferred to Leo – The IAS Computers built at Universities. [Figure: Time Line of First Computers, 1935 to 1955, with rows for BTL, Zuse, Atanasoff, IBM ASCC and SSEC, CPC, ENIAC, EDVAC, UNIVAC I, IAS, Colossus, Manchester, Ferranti, EDSAC, Leo, ACE, DEUCE, Whirlwind, SEAC & SWAC] ENIAC Project Time Line & Descendants: IBM 701, Philco S2000, ERA..

Kapitel 6 Der Advanced Encryption Standard Rijndael

Chapter 6: The Advanced Encryption Standard Rijndael. §1: History and selection criteria. DES was developed by IBM in cooperation with the National Security Agency of the United States and then promulgated as an American standard. From the beginning, this procedure aroused the suspicion that a "trapdoor" might have been built in, especially since, at least initially, not all design criteria were published. [...] It assumed from the outset that the algorithm to be chosen had to be stronger than Triple DES; it was to remain usable for twenty to thirty years and offer corresponding security. After an international conference on the selection criteria on 15 April 1997, it published the final call for submissions on 12 September 1997. According to that call, the minimum requirement for submitted algorithms was that they be symmetric block ciphers providing at least a block length of 128 bits with key lengths of 128 bits, 192 bits and 256 bits. The following aspects were named as criteria for choosing among the individual algorithms: 1. Security: How secure is the algorithm compared with the other candidates? To what extent is its output indistinguishable from that of a random permutation? How well founded is the mathematical basis for the security of the algorithm? (In contrast to DES, all criteria were to be published this time.) 2. Cost: What license fees become due?

Basic Cryptanalysis Methods on Block Ciphers

BASIC CRYPTANALYSIS METHODS ON BLOCK CIPHERS. A THESIS SUBMITTED TO THE GRADUATE SCHOOL OF APPLIED MATHEMATICS OF MIDDLE EAST TECHNICAL UNIVERSITY BY DİLEK ÇELİK IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF SCIENCE IN CRYPTOGRAPHY, MAY 2010. Approval of the thesis: BASIC CRYPTANALYSIS METHODS ON BLOCK CIPHERS, submitted by DİLEK ÇELİK in partial fulfillment of the requirements for the degree of Master of Science in Department of Cryptography, Middle East Technical University by, Prof. Dr. Ersan AKYILDIZ, Director, Graduate School of Applied Mathematics; Prof. Dr. Ferruh ÖZBUDAK, Head of Department, Cryptography; Assoc. Prof. Dr. Ali DOĞANAKSOY, Supervisor, Department of Mathematics, METU. Examining Committee Members: Prof. Dr. Ferruh ÖZBUDAK, Department of Mathematics, METU; Assoc. Prof. Dr. Ali DOĞANAKSOY, Department of Mathematics, METU; Assist. Prof. Dr. Zülfükar SAYGI, Department of Mathematics, TOBB ETU; Dr. Muhiddin UĞUZ, Department of Mathematics, METU; Dr. Murat CENK, Department of Cryptography, METU. Date: I hereby declare that all information in this document has been obtained and presented in accordance with academic rules and ethical conduct. I also declare that, as required by these rules and conduct, I have fully cited and referenced all material and results that are not original to this work. Name, Last Name: DİLEK ÇELİK. Signature: ABSTRACT. BASIC CRYPTANALYSIS METHODS ON BLOCK CIPHERS. Çelik, Dilek, M.S., Department of Cryptography. Supervisor: Assoc. Prof. Dr. Ali DOĞANAKSOY. May 2010, 119 pages. Differential cryptanalysis and linear cryptanalysis are the first significant methods used to attack on block ciphers. These concepts compose the keystones for most of the attacks in recent years.

Statistical Cryptanalysis of Block Ciphers

STATISTICAL CRYPTANALYSIS OF BLOCK CIPHERS. Thesis No. 3179 (2005), presented to the Faculty of Computer and Communication Sciences, Institute of Communication Systems, Communication Systems Section, ÉCOLE POLYTECHNIQUE FÉDÉRALE DE LAUSANNE, for the degree of Docteur ès Sciences, by Pascal JUNOD, computer engineer with an EPF diploma, of Swiss nationality and originally from Sainte-Croix (VD). Accepted on the proposal of the jury: Prof. S. Vaudenay, thesis director; Prof. J. Massey, examiner; Prof. W. Meier, examiner; Prof. S. Morgenthaler, examiner; Prof. J. Stern, examiner. Lausanne, EPFL, 2005. To Mimi and Chloé. Acknowledgments: First of all, I would like to warmly thank my supervisor, Prof. Serge Vaudenay, for having given to me such a wonderful opportunity to perform research in a friendly environment, and for having been the perfect supervisor that every PhD would dream of. I am also very grateful to the president of the jury, Prof. Emre Telatar, and to the reviewers Prof. em. James L. Massey, Prof. Jacques Stern, Prof. Willi Meier, and Prof. Stephan Morgenthaler for having accepted to be part of the jury and for having invested such a lot of time for reviewing this thesis. I would like to express my gratitude to all my (former and current) colleagues at LASEC for their support and for their friendship: Gildas Avoine, Thomas Baignères, Nenad Buncic, Brice Canvel, Martine Corval, Matthieu Finiasz, Yi Lu, Jean Monnerat, Philippe Oechslin, and John Pliam. Without them, the EPFL (and the crypto) would not be so fun! Without their support, trust and encouragement, the last part of this thesis, FOX, would certainly not be born: I owe to MediaCrypt AG, especially to Ralf Kastmann and Richard Straub many, many, many hours of interesting work.

Evaluating Algebraic Attacks on the AES

Diploma thesis: Evaluating Algebraic Attacks on the AES. Ralf-Philipp Weinmann <[email protected]>. Supervisor: Prof. Dr. J. Buchmann, Fachbereich Informatik, Fachgebiet Kryptographie und Computeralgebra, Technische Universität Darmstadt. Contents: 1 Introduction: 1.1 Algebraic descriptions of AES; 1.2 Block ciphers (1.2.1 Iterated Block Ciphers; 1.2.2 Key-Iterated Block Ciphers); 1.3 Classification of attacks on block ciphers; 1.4 Scope of this thesis. 2 The family of Mini-Rijndaels: 2.1 Parameters; 2.2 An algorithmic cipher description (2.2.1 AddRoundKey; 2.2.2 SubElement; 2.2.3 ShiftRows; 2.2.4 MixColumns; 2.2.5 Key scheduling). 3 Systems of polynomial equations: 3.1 Terminology; 3.2 Constructing the equations; 3.3 Constructing a system over F2 (3.3.1 The S-Boxes; 3.3.2 The linear layer; 3.3.3 The key schedule); 3.4 Embedding the cipher (3.4.1 The S-Boxes; 3.4.2 The linear layer; 3.4.3 The key schedule). 4 Linearization attacks: 4.1 Linearization; 4.2 The XL Algorithm; 4.3 Relinearization; 4.4 Extended Sparse Linearization (4.4.1 The final step; 4.4.2 An example for XSL). 5 Observations and experimental results: 5.1 Implementation; 5.2 The original examples; 5.3 Applying XSL to a Mini-Rijndael

Computational and Algebraic Aspects of the Advanced Encryption Standard

Computational and Algebraic Aspects of the Advanced Encryption Standard. Carlos Cid, Sean Murphy and Matthew Robshaw, Information Security Group, Royal Holloway, University of London, Egham, Surrey, TW20 0EX, U.K. [email protected] [email protected] [email protected] Abstract. The new Advanced Encryption Standard (AES) has been recently selected by the US government to replace the old Data Encryption Standard (DES) for protecting sensitive official information. Due to its simplicity and elegant algebraic structure, the choice of the AES algorithm has motivated the study of a new approach to the analysis of block ciphers. While conventional methods of cryptanalysis (e.g. differential and linear cryptanalysis) are usually based on a “statistical” approach, where an attacker attempts to construct statistical patterns through many interactions of the cipher, the so-called algebraic attacks exploit the intrinsic algebraic structure of a cipher. More specifically, the attacker expresses the encryption transformation as a set of multivariate polynomial equations and attempts to recover the encryption key by solving the system. In this paper we consider a number of algebraic aspects of the AES, and examine a few computational and algebraic techniques that could be used in the cryptanalysis of cipher. We show how one can express the cipher as a very large, though surprisingly simple, system of multivariate quadratic equations over the finite field F_{2^8}, and consider some approaches that can be used to solve this system. 1 Introduction. In 1997 the US National Institute of Standards and Technology (NIST) announced an open competition to select a substitute for the old 64-bit block, 56-bit key Data Encryption Standard (DES).

Bridge Measurement Analysis

Bridge Measurement Analysis. Svetlana Avramov-Zamurovic (1), Bryan Waltrip (2) and Andrew Koffman (2). (1) United States Naval Academy, Weapons and Systems Engineering Department, Annapolis, MD 21402, Telephone: 410 293 6124, Email: [email protected]. (2) National Institute of Standards and Technology†, Electricity Division, Gaithersburg, MD 21899, Telephone: 301 975 2438, Email: [email protected]. Introduction. At the United States Academy there are several engineering majors, including Systems Engineering. This program offers excellent systems integration education. In particular the major concentrates on control of electrical, computer and mechanical systems. In addition to several tracks, students have the opportunity to independently research a field of interest. This is a great opportunity for teachers and students to pursue more in-depth analyses. This paper will describe one such experiment in the field of metrology. Very often engineering laboratories at undergraduate schools are well equipped with power supplies, signal generators, oscilloscopes and general-purpose multimeters. This set allows teachers and students to set up test-beds for most of the basic electronics circuits studied in different engineering tracks. Modern instrumentation is in general user-friendly and students like using the equipment. However, students are often not aware that there are two pieces of information necessary to establish a measurement result: the numerical value of the measured quantity and the uncertainty with which that measurement was performed. In order to achieve high measurement accuracy, more complex measurement systems must be developed. This paper will describe the process of analyzing a bridge measurement using MATLAB‡. Measurement Bridge. One of the basic circuits that demonstrate the concept of a current/voltage divider is a Wheatstone bridge (given in Figure 1). A source voltage is applied to a parallel connection of impedances.