Games History.Key

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

University of Alberta Library Release

University of Alberta Library Release Form Name of Author: Morgan Hugh Kan Title of Thesis: Postgame Analysis of Poker Decisions Degree: Master of Science Year this Degree Granted: 2007 Permission is hereby granted to the University of Alberta Library to reproduce single copies of this thesis and to lend or sell such copies for private, scholarly or scientific research purposes only. The author reserves all other publication and other rights in association with the copyright in the thesis, and except as herein before provided, neither the thesis nor any substantial portion thereof may be printed or otherwise reproduced in any material form whatever without the author's prior written permission. Morgan Hugh Kan Date: University of Alberta POSTGAME ANALYSIS OF POKER DECISIONS by Morgan Hugh Kan A thesis submitted to the Faculty of Graduate Studies and Research in partial fulfillment of the requirements for the degree of Master of Science. Department of Computing Science Edmonton, Alberta Spring 2007 University of Alberta Faculty of Graduate Studies and Research The undersigned certify that they have read, and recommend to the Faculty of Graduate Studies and Research for acceptance, a thesis entitled Postgame Analysis of Poker Decisions submitted by Morgan Hugh Kan in partial fulfillment of the requirements for the degree of Master of Science. Jonathan Schaeffer Supervisor Michael Bowling Michael Carbonaro External Examiner Date: To my parents, Janet and Chay Kan, and my sister, Megan Kan, I would never have made it this far without them. Abstract In Artificial Intelligence research, evaluation is a recurring theme. A newly crafted game-playing program is interesting if it can be shown to be better by some measure.

SCIENCE VOLUME 18, No 2, FALL 2007

Science Contours, Volume 18, No 2, Fall 2007. Faculty of Science Alumni Magazine. www.ualberta.ca/science. Cover headlines: University creates space institute; Reading our body's chemicals for better diagnosis; Alumnus turns grief into hope; Honouring Mama Lu's spirit of giving; Arctic ponds drying up; Fine arts students liven up lab space through games.

Science Contours is published twice a year by the Faculty of Science to provide current information on its many activities. The magazine is distributed to alumni and friends of the Faculty of Science. Dean of Science: Gregory Taylor. Assistant Dean, External Relations: Claudia Wood. External Relations Team: Katherine Captain, Emily Lennstrom, Michel Proulx, Traci Toshack, Kevin Websdale. Editor: Michel Proulx. Graphic Design: Studio X Design. Contributing writers: Bev Betkowski, Kris Connor, Michel Proulx, Isabela Varela.

Message from the Dean: An Intersection Between Arts and Science. Phase 1 of our new Centennial Centre for Interdisciplinary Science (CCIS) was nearing completion when I happened upon a student art competition on campus. It occurred to me then that it would be wonderful to have students help us put some finishing touches on our new space. (Photo caption: Fine arts students Wendy Lung and Ansun Yan enjoy their work.) After a few conversations, two different classes - one in Integrative/Exhibit Design and the other in Printmaking - were tasked with coming up with ideas. The results are astounding. To begin with, the students had to wrap their heads around the purpose and uniqueness of the building. CCIS is one of the few facilities in the world to house interdisciplinary teams under one roof and Phase 1 is no different.

AI from a Game Player Perspective Eleonora Giunchiglia Why?

AI from a game player perspective. Eleonora Giunchiglia. Why? David Churchill, professor at Memorial University of Newfoundland: “From a scientific point of view, the properties of StarCraft are very much like the properties of real life. [...] We’re making a test bed for technologies we can use in the real world.” This concept can be extended to every game and justifies research on AI in games. Connections with MAS: A multiagent system is one composed of multiple interacting software components known as agents, which are typically capable of cooperating to solve problems that are beyond the abilities of any individual member. This is by far a more complex setting than traditional 1 vs 1 games; only in recent years have researchers been able to study games with multiple agents. In this project we traced the path that led from AI applied to 1 vs 1 games to many vs. many games. The very first attempts: The very first attempts were made even before the concept of artificial intelligence was born. 1912: Leonardo Torres y Quevedo developed an electro-mechanical device, El Ajedrecista, to checkmate a human opponent’s king using only its own king and rook. 1948: Alan Turing wrote the algorithm Turochamp. He never managed to run it on a real computer. 1950: Claude Shannon proposes the Minimax algorithm. Shannon proposed two different ways of deciding the next move: 1. doing brute-force tree search on the complete tree and taking the optimal move, or 2. looking at a small subset of next moves at each layer during tree search and taking the “likely optimal” move.
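The two ways of deciding the next move described in this excerpt can be illustrated with a minimal sketch. It is not taken from the slides: the nested-list game tree, the `width` parameter and the `first_leaf` move-ordering heuristic are all assumptions made for illustration.

```python
def first_leaf(node):
    """Hypothetical heuristic: guess a subtree's value from its first leaf."""
    while not isinstance(node, int):
        node = node[0]
    return node


def minimax(node, maximizing=True, width=None, estimate=None):
    """Minimax over a toy tree: an int is a leaf score, a list is a position.

    width=None searches every move (the brute-force option).
    width=k keeps only the k moves that `estimate` ranks best for the
    side to move (the selective, "likely optimal" option).
    """
    if isinstance(node, int):
        return node
    children = list(node)
    if width is not None:
        # Best-looking moves first: high estimates for max, low for min.
        children.sort(key=estimate, reverse=maximizing)
        children = children[:width]
    values = [minimax(c, not maximizing, width, estimate) for c in children]
    return max(values) if maximizing else min(values)


tree = [[5, 1], [3, 4]]
full = minimax(tree)                                    # searches all moves
selective = minimax(tree, width=1, estimate=first_leaf)  # one move per layer
print(full, selective)
```

On this toy tree the full search returns 3, while the selective search with `width=1` returns 1 because the heuristic discards the better move. This is the trade-off behind the "likely optimal" wording: less work, but no guarantee of the true minimax value.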

Building a Champion Level Computer Poker Player

University of Alberta Library Release Form Name of Author: Michael Bradley Johanson Title of Thesis: Robust Strategies and Counter-Strategies: Building a Champion Level Computer Poker Player Degree: Master of Science Year this Degree Granted: 2007 Permission is hereby granted to the University of Alberta Library to reproduce single copies of this thesis and to lend or sell such copies for private, scholarly or scientific research purposes only. The author reserves all other publication and other rights in association with the copyright in the thesis, and except as herein before provided, neither the thesis nor any substantial portion thereof may be printed or otherwise reproduced in any material form whatever without the author’s prior written permission. Michael Bradley Johanson Date: Too much chaos, nothing gets finished. Too much order, nothing gets started. — Hexar’s Corollary University of Alberta ROBUST STRATEGIES AND COUNTER-STRATEGIES: BUILDING A CHAMPION LEVEL COMPUTER POKER PLAYER by Michael Bradley Johanson A thesis submitted to the Faculty of Graduate Studies and Research in partial fulfillment of the requirements for the degree of Master of Science. Department of Computing Science Edmonton, Alberta Fall 2007 University of Alberta Faculty of Graduate Studies and Research The undersigned certify that they have read, and recommend to the Faculty of Graduate Studies and Research for acceptance, a thesis entitled Robust Strategies and Counter-Strategies: Building a Champion Level Computer Poker Player submitted by Michael Bradley Johanson in partial fulfillment of the requirements for the degree of Master of Science. Michael Bowling Supervisor Duane Szafron Michael Carbonaro External Examiner Date: To my family: my parents Brad and Sue Johanson, and my brother, Jeff Johanson.

A Chess Engine

A Chess Engine Paul Dailly Dominik Gotojuch Neil Henning Keir Lawson Alec Macdonald Tamerlan Tajaddinov University of Glasgow Department of Computing Science Sir Alwyn Williams Building Lilybank Gardens Glasgow G12 8QQ March 18, 2008 Abstract Though many computer chess engines are available, the number of engines using object-oriented approaches to the problem is minimal. This report documents an implementation of an object-oriented chess engine. Traditionally, in order to gain the advantage of speed, the C language is used for implementation; however, being an older language, it lacks many modern language features. The chess engine documented within this report uses the modern Java language, providing features such as reflection and generics that are used extensively, allowing for complex but understandable code. Also of interest are the various depth-first search algorithms used to produce a fast game, and the numerous functions for evaluating different characteristics of the board. These two fundamental components, the evaluator and the analyser, combine to produce a fast and relatively skillful chess engine. We discuss both the design and implementation of the engine, along with details of other approaches that could be taken, and in what manner the engine could be expanded. We conclude by examining the engine empirically, and from this evaluation, reflecting on the advantages and disadvantages of our chosen approach. Education Use Consent We hereby give our permission for this project to be shown to other University of Glasgow students and to be distributed in an electronic format. Please note that you are under no obligation to sign this declaration, but doing so would help future students.

Chess by Vincent Diepeveen

Chess by Vincent Diepeveen. Chess History: Chess found its origin in India well over 1500 years ago. Most sources quote the 6th century A.D. and even before that. From India it reaches Persia (Iran). The rules gradually change; the queen and bishop become more powerful and the pawn can move two squares. The name Chess comes from the Persian word for king: Shah. Some centuries later, Muslim rulers who conquer Persia spread the game of chess to Europe. Around the 15th century the rules start to be similar to today's chess rules. While the rules have been pretty much the same since then, the way the pieces look definitely has not, not even today! In most western tournaments the standard is Staunton, from 19th century UK. Original Staunton 1849: replicas are already around $2000 a set. Russian chess pieces (modern): similar (cheaper) sets can actually be encountered in tournaments in the east; the set shown already costs a couple of hundred dollars in the stores. Note the shape, similar to Staunton. Renaissance style chess pieces: please note that there is no cross on the king. It's possible Staunton gets that credit... Most chess players find this easier. Blindfolded Chess: Chess players actually don't need to see the chess pieces at all. Nearly all titled chess players can play blindfolded. Question for the audience: How strongly do titled chess players play blindfolded? Blindfolded Strength: Playing blindfolded regularly hardly loses strength compared to OTB (over the board). Own experience: The first few moves after the opening are actually the hardest moves

Designing a Pervasive Chess Game

Designing a pervasive chess game André Pereira, Carlos Martinho, Iolanda Leite, Rui Prada, Ana Paiva Instituto Superior Técnico – Technical University of Lisbon and INESC-ID, AV. Professor Cavaco Silva – Taguspark 2780-990 Porto Salvo, Portugal {Andre.Pereira, Carlos.Martinho, Iolanda.Leite, Rui Prada}@tagus.ist.utl.pt, [email protected] Abstract. Chess is an ancient and widely popular tabletop game. For more than 1500 years of history chess has survived every civilization it has touched. One of its heirs, computerized chess, was one of the first artificial intelligence research areas and was highly important for the development of A.I. throughout the twentieth-century. This change of paradigm between a tabletop and a computerized game has its ups and downs. Computation can be used, for example, to simulate opponents in a virtual scenario, but the real essence of chess in which two human opponents battle face-to-face in that familiar black and white chessboard is lost. Usually, computer chess research focuses on improving the effectiveness of chess algorithms. This research, on the other hand, focuses on trying to bring back the essence of real world chess to a computerized scenario. To achieve this goal, in this paper we describe a model inspired by the field of Pervasive Gaming, a field that profits by the mix of real, virtual and social game elements. Keywords: Chess, Pervasive Gaming, Tangible User Interfaces, Graphical User Interfaces, Design Model, Agents. 1 Introduction “Pervasive gaming brings computer entertainment back to the real world” [14] and by doing so overcomes some restrictions of conventional computer games.

The Game Stats Appendix A: Further Reading

The Game Stats Appendix A: Further Reading Checkers Organizations American Checker Federation (ACF). http://usacheckers.com. Membership includes the bimonthly ACF Bulletin. English Draughts Association (EDA). http://home.clara.net/davey. Membership includes the occasional English Draughts Journal. Checkers Irving Chernev, The Compleat Draughts Player, Oxford University Press, 1981. A readable and instructive book including the history of the game, openings, strategies, endgames, problem solving, and classic games. Chernev is one of the few players to reach the title of master in both chess and checkers. Robert Pike, Checker Power: A Game of Problem Solving, Charlesbridge Publishing, 1997. Pike has authored a series of readable books on using checkers for problem solving (and fun). Robert L. Schuffett, Checkers the Tinsley Way, published privately, 1982. Available through the ACF. This book contains over seven hundred of Tinsley’s games, along with pictures, anecdotes, and statistics from his remarkable career. Richard Pask, The Legendary MFT, Checkered Thinking, 2007. Available at http://www.bobnewell.net/nucleus/checkers.php. The most comprehensive collection of material on Tinsley. Richard Fortman, Basic Checkers, seven volumes, privately published. Available from the ACF and at http://home.clara.net/davey/basicche.html. A detailed introduction to the main lines of play of the three-move ballot openings. Computer Checkers History Christopher Strachey, “Digital Computers Applied to Games,” Faster Than Thought, B.V. Bowden (editor), Pitman & Sons, London, 1953. Arthur Samuel, “Some Studies in Machine Learning Using the Game of Checkers,” IBM Journal of Research and Development 3, 1959, pp. 210-229.

CHINOOK the World Man-Machine Checkers Champion

AI Magazine Volume 17 Number 1 (1996) (© AAAI) Articles CHINOOK: The World Man-Machine Checkers Champion. Jonathan Schaeffer, Robert Lake, Paul Lu, and Martin Bryant. ■ In 1992, the seemingly unbeatable World Checker Champion Marion Tinsley defended his title against the computer program CHINOOK. After an intense, tightly contested match, Tinsley fought back from behind to win the match by scoring four wins to CHINOOK’s two, with 33 draws. This match was the first time in history that a human world champion defended his title against a computer. This article reports on the progress of the checkers (8 × 8 draughts) program CHINOOK since 1992. Two years of research and development on the program culminated in a rematch with Tinsley in August 1994. In this match, after six games (all draws), Tinsley withdrew from the match and relinquished the world championship title to CHINOOK, citing health concerns. CHINOOK has since defended its title in two subsequent matches. It is the first time in history that a computer has won [...]

[...] the American Checker Federation (ACF), CHINOOK was allowed to play in the 1990 U.S. championship. This biennial event attracts the best players in the world, with the winner earning the right to play a match for the world championship. CHINOOK came in an undefeated second in the tournament behind the world champion, Marion Tinsley. The four individual games between CHINOOK and Tinsley were drawn. This placing earned CHINOOK the right to challenge Tinsley for the world championship, the first time a computer had earned such a right in any game. Tinsley emerged as a dominant player in the late 1940s.

Best-First and Depth-First Minimax Search in Practice

Best-First and Depth-First Minimax Search in Practice. Aske Plaat, Erasmus University, [email protected]; Jonathan Schaeffer, University of Alberta, [email protected]; Wim Pijls, Erasmus University, [email protected]; Arie de Bruin, Erasmus University, [email protected]. Erasmus University, Department of Computer Science, Room H4-31, P.O. Box 1738, 3000 DR Rotterdam, The Netherlands. University of Alberta, Department of Computing Science, 615 General Services Building, Edmonton, Alberta, Canada T6G 2H1. Abstract: Most practitioners use a variant of the Alpha-Beta algorithm, a simple depth-first procedure, for searching minimax trees. SSS*, with its best-first search strategy, reportedly offers the potential for more efficient search. However, the complex formulation of the algorithm and its alleged excessive memory requirements preclude its use in practice. For two decades, the search efficiency of “smart” best-first SSS* has cast doubt on the effectiveness of “dumb” depth-first Alpha-Beta. This paper presents a simple framework for calling Alpha-Beta that allows us to create a variety of algorithms, including SSS* and DUAL*. In effect, we formulate a best-first algorithm using depth-first search. Expressed in this framework SSS* is just a special case of Alpha-Beta, solving all of the perceived drawbacks of the algorithm. In practice, Alpha-Beta variants typically evaluate fewer nodes than SSS*. A new instance of this framework, MTD(ƒ), out-performs SSS* and NegaScout, the Alpha-Beta variant of choice by practitioners. 1 Introduction Game playing is one of the classic problems of artificial intelligence.
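The idea of a framework that repeatedly calls Alpha-Beta can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes integer leaf scores, a toy nested-list game tree, and a dictionary of bounds keyed by the path to each node (a stand-in for a real transposition table). The names `alpha_beta`, `mtdf`, `table` and `first_guess` are hypothetical.

```python
import math


def alpha_beta(node, alpha, beta, maximizing, table, path=()):
    """Fail-soft Alpha-Beta that remembers a (lower, upper) bound per node."""
    if isinstance(node, int):          # an int is a leaf score
        return node
    lower, upper = table.get(path, (-math.inf, math.inf))
    if lower >= beta:                  # stored bound already causes a cutoff
        return lower
    if upper <= alpha:
        return upper
    alpha, beta = max(alpha, lower), min(beta, upper)
    if maximizing:
        best, a = -math.inf, alpha
        for i, child in enumerate(node):
            best = max(best, alpha_beta(child, a, beta, False, table, path + (i,)))
            a = max(a, best)
            if best >= beta:
                break
    else:
        best, b = math.inf, beta
        for i, child in enumerate(node):
            best = min(best, alpha_beta(child, alpha, b, True, table, path + (i,)))
            b = min(b, best)
            if best <= alpha:
                break
    if best <= alpha:                  # failed low: best is an upper bound
        table[path] = (lower, best)
    elif best >= beta:                 # failed high: best is a lower bound
        table[path] = (best, upper)
    else:                              # exact value inside the window
        table[path] = (best, best)
    return best


def mtdf(root, first_guess=0):
    """Converge on the minimax value using only zero-window searches."""
    g, lower, upper, table = first_guess, -math.inf, math.inf, {}
    while lower < upper:
        beta = g + 1 if g == lower else g
        g = alpha_beta(root, beta - 1, beta, True, table)
        if g < beta:
            upper = g                  # search failed low
        else:
            lower = g                  # search failed high
    return g


print(mtdf([[3, 5], [2, 9], [0, 1]]))
```

Each pass uses a window of width one, so it only answers whether the value is below `beta` or not; the stored bounds let later passes skip work already done. A driver of this shape is what the abstract calls an instance of the framework; different choices of starting bound and update rule would give the other algorithms it mentions.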

Solving Difficult Game Positions

Solving Difficult Game Positions. DISSERTATION to obtain the degree of Doctor at the Universiteit Maastricht, on the authority of the Rector Magnificus, Prof. mr. G.P.M.F. Mols, in accordance with the decision of the Board of Deans, to be defended in public on Wednesday 15 December 2010 at 14:00 by Jahn-Takeshi Saito. Supervisor: Prof. dr. G. Weiss. Co-supervisors: Dr. M.H.M. Winands, Dr. ir. J.W.H.M. Uiterwijk. Members of the assessment committee: Prof. dr. ir. R.L.M. Peeters (chair), Prof. dr. T. Cazenave (Université Paris-Dauphine), Prof. dr. M. Gyssens (Universiteit Hasselt / Universiteit Maastricht), Prof. dr. ir. J.C. Scholtes, Prof. dr. C. Witteveen (Technische Universiteit Delft). The research has been funded by the Netherlands Organisation for Scientific Research (NWO), in the framework of the project Go for Go, grant number 612.066.409. Dissertation Series No. 2010-49. The research reported in this thesis has been carried out under the auspices of SIKS, the Dutch Research School for Information and Knowledge Systems. ISBN: 978-90-8559-164-1. © 2010 J.-T. Saito. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronically, mechanically, photocopying, recording or otherwise, without prior permission of the author. Preface: After receiving my Master's degree in Computational Linguistics and Artificial Intelligence in Osnabrück, I faced the pleasant choice between becoming a Ph.D. student in Osnabrück or Maastricht. The first option would have led me further into the field of Computational Linguistics.

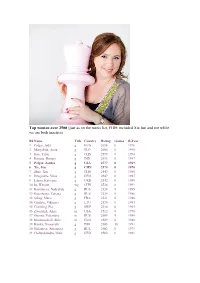

Top Women Over 2500 (Just As on the Men's List, FIDE Included Xie Jun and Me While We Are Both Inactive)

Top women over 2500 (just as on the men's list, FIDE included Xie Jun and me while we are both inactive)

| Rk | Name | Title | Country | Rating | Games | B-Year |
|---|---|---|---|---|---|---|
| 1 | Polgar, Judit | g | HUN | 2698 | 0 | 1976 |
| 2 | Muzychuk, Anna | g | SLO | 2606 | 0 | 1990 |
| 3 | Hou, Yifan | g | CHN | 2599 | 0 | 1994 |
| 4 | Koneru, Humpy | g | IND | 2593 | 0 | 1987 |
| 5 | Polgar, Zsuzsa | g | USA | 2577 | 0 | 1969 |
| 6 | Xie, Jun | g | CHN | 2574 | 0 | 1970 |
| 7 | Zhao, Xue | g | CHN | 2549 | 0 | 1985 |
| 8 | Dzagnidze, Nana | g | GEO | 2547 | 0 | 1987 |
| 9 | Lahno, Kateryna | g | UKR | 2542 | 0 | 1989 |
| 10 | Ju, Wenjun | wg | CHN | 2528 | 0 | 1991 |
| 11 | Kosintseva, Nadezhda | g | RUS | 2524 | 9 | 1985 |
| 12 | Kosintseva, Tatiana | g | RUS | 2524 | 9 | 1986 |
| 13 | Sebag, Marie | g | FRA | 2521 | 0 | 1986 |
| 14 | Cmilyte, Viktorija | g | LTU | 2520 | 0 | 1983 |
| 15 | Cramling, Pia | g | SWE | 2514 | 6 | 1963 |
| 16 | Zatonskih, Anna | m | USA | 2512 | 0 | 1978 |
| 17 | Gunina, Valentina | m | RUS | 2509 | 9 | 1989 |
| 18 | Khotenashvili, Bela | m | GEO | 2509 | 0 | 1988 |
| 19 | Harika, Dronavalli | g | IND | 2505 | 18 | 1991 |
| 20 | Stefanova, Antoaneta | g | BUL | 2502 | 0 | 1979 |
| 21 | Chiburdanidze, Maia | g | GEO | 2500 | 0 | 1961 |

How to Play Chinese Chess. Thursday, March 1, 2012 | BY: THE EDITORS. Chinese chess is never going to suddenly make the transition into becoming a cool pastime, but take it from us: Chinese chess is a lot more fun than its moniker at first lets on. Forget all about those boring queens and bishops; in China you get war elephants and flying cannons.