AI from a Game Player Perspective
Eleonora Giunchiglia

Why?

Recommended publications

A Chess Engine

Paul Dailly, Dominik Gotojuch, Neil Henning, Keir Lawson, Alec Macdonald, Tamerlan Tajaddinov. University of Glasgow, Department of Computing Science, Sir Alwyn Williams Building, Lilybank Gardens, Glasgow G12 8QQ. March 18, 2008.

Abstract. Though many computer chess engines are available, very few of them take an object-oriented approach to the problem. This report documents an implementation of an object-oriented chess engine. Traditionally, the C language is used for implementation in order to gain speed; however, being an older language, it lacks many modern language features. The chess engine documented in this report uses the modern Java language and makes extensive use of features such as reflection and generics, allowing for complex but understandable code. Also of interest are the various depth-first search algorithms used to produce a fast game, and the numerous functions for evaluating different characteristics of the board. These two fundamental components, the evaluator and the analyser, combine to produce a fast and relatively skillful chess engine. We discuss both the design and the implementation of the engine, along with other approaches that could have been taken and ways in which the engine could be extended. We conclude by examining the engine empirically and, from this evaluation, reflecting on the advantages and disadvantages of our chosen approach.

Education Use Consent. We hereby give our permission for this project to be shown to other University of Glasgow students and to be distributed in an electronic format. Please note that you are under no obligation to sign this declaration, but doing so would help future students.
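The abstract above splits the engine into a depth-first search (the "analyser") and a board evaluator. As a rough illustration of how those two parts typically fit together, here is a minimal sketch of depth-first minimax search with alpha-beta pruning in Python. It is not the authors' Java code: the nested-list game tree, the idea that each leaf already holds an evaluator score, and the function name `alphabeta` are all assumptions made for illustration.

```python
from typing import List, Optional, Union

# Hypothetical toy game tree (an assumption, not the report's board model):
# an int is a leaf holding a static evaluation score from the maximizing
# player's point of view; a list holds the positions reachable in one move.
Tree = Union[int, List["Tree"]]


def alphabeta(node: Tree, alpha: float, beta: float, maximizing: bool,
              visited: Optional[List[int]] = None) -> float:
    """Depth-first minimax search with alpha-beta pruning.

    alpha is the best score the maximizer is already guaranteed, beta the
    best score the minimizer is already guaranteed; once alpha >= beta the
    remaining siblings cannot change the result and are skipped.
    """
    if isinstance(node, int):
        # Leaf: stands in for a call to the board evaluator.
        if visited is not None:
            visited.append(node)
        return node

    if maximizing:
        best = float("-inf")
        for child in node:
            best = max(best, alphabeta(child, alpha, beta, False, visited))
            alpha = max(alpha, best)
            if alpha >= beta:  # the minimizer would never allow this line
                break
        return best

    best = float("inf")
    for child in node:
        best = min(best, alphabeta(child, alpha, beta, True, visited))
        beta = min(beta, best)
        if alpha >= beta:  # the maximizer already has a better alternative
            break
    return best


if __name__ == "__main__":
    # Root is a maximizing node; its three children are minimizing nodes.
    tree = [[3, 5], [6, 9], [1, 2]]
    evaluated: List[int] = []
    print(alphabeta(tree, float("-inf"), float("inf"), True, evaluated))
    print(evaluated)
```

Run as a script, this prints `6` (the value of the middle move) and then `[3, 5, 6, 9, 1]`: once the third reply scores 1, which is already worse for the maximizer than the 6 it can secure, the leaf 2 is never evaluated. A real engine would generate moves from a board representation and usually cap the search depth, calling the evaluator at that horizon rather than at true leaves.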

Chess by Vincent Diepeveen

Chess History. Chess originated in India well over 1500 years ago; most sources cite the 6th century A.D., and some date it even earlier. From India it reached Persia (Iran). The rules changed gradually: the queen and bishop became more powerful, and the pawn gained the option of moving two squares. The name "chess" comes from the Persian word for king: shah. Some centuries later, Muslim rulers who conquered Persia spread the game to Europe, and around the 15th century the rules began to resemble today's. While the rules have stayed much the same since then, the appearance of the pieces has not, and it still varies today.

Chess Pieces. In most Western tournaments the standard is the Staunton design, from 19th-century UK (original Staunton set, 1849); replicas already cost around $2000 a set. Modern Russian chess pieces: similar, cheaper sets are common at tournaments in the East, and the set shown already costs a couple of hundred dollars in stores (note the shape, similar to Staunton). Renaissance-style chess pieces: note that there is no cross on the king; it is possible that Staunton deserves that credit. Most chess players find this easier.

Blindfolded Chess. Chess players don't actually need to see the pieces at all: nearly all titled chess players can play blindfolded. Question for the audience: how strongly do titled chess players play blindfolded? Blindfolded strength: players who regularly play blindfolded lose hardly any strength compared with over-the-board (OTB) play. From my own experience, the first few moves after the opening are actually the hardest.

Designing a Pervasive Chess Game

André Pereira, Carlos Martinho, Iolanda Leite, Rui Prada, Ana Paiva. Instituto Superior Técnico, Technical University of Lisbon and INESC-ID, Av. Professor Cavaco Silva, Taguspark, 2780-990 Porto Salvo, Portugal. {Andre.Pereira, Carlos.Martinho, Iolanda.Leite, Rui.Prada}@tagus.ist.utl.pt

Abstract. Chess is an ancient and widely popular tabletop game. Over more than 1500 years of history, chess has survived every civilization it has touched. One of its heirs, computerized chess, was one of the first artificial intelligence research areas and was highly important for the development of A.I. throughout the twentieth century. This shift from a tabletop game to a computerized one has its ups and downs. Computation can be used, for example, to simulate opponents in a virtual scenario, but the real essence of chess, in which two human opponents battle face to face over the familiar black and white chessboard, is lost. Computer chess research usually focuses on improving the effectiveness of chess algorithms. This research, by contrast, tries to bring the essence of real-world chess back to a computerized scenario. To achieve this goal, this paper describes a model inspired by the field of Pervasive Gaming, a field that profits from the mix of real, virtual and social game elements.

Keywords: Chess, Pervasive Gaming, Tangible User Interfaces, Graphical User Interfaces, Design Model, Agents.

1 Introduction
"Pervasive gaming brings computer entertainment back to the real world" [14] and by doing so overcomes some restrictions of conventional computer games.

Solving Difficult Game Positions

Dissertation to obtain the degree of Doctor at Maastricht University, on the authority of the Rector Magnificus, Prof. mr. G.P.M.F. Mols, in accordance with the decision of the Board of Deans, to be defended in public on Wednesday 15 December 2010 at 14:00 hours by Jahn-Takeshi Saito.

Promotor: Prof. dr. G. Weiss. Copromotors: Dr. M.H.M. Winands, Dr. ir. J.W.H.M. Uiterwijk. Members of the assessment committee: Prof. dr. ir. R.L.M. Peeters (chair), Prof. dr. T. Cazenave (Université Paris-Dauphine), Prof. dr. M. Gyssens (Universiteit Hasselt / Universiteit Maastricht), Prof. dr. ir. J.C. Scholtes, Prof. dr. C. Witteveen (Technische Universiteit Delft).

The research has been funded by the Netherlands Organisation for Scientific Research (NWO), in the framework of the project Go for Go, grant number 612.066.409. Dissertation Series No. 2010-49. The research reported in this thesis has been carried out under the auspices of SIKS, the Dutch Research School for Information and Knowledge Systems. ISBN: 978-90-8559-164-1. © 2010 J.-T. Saito. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronically, mechanically, photocopying, recording or otherwise, without prior permission of the author.

Preface. After receiving my Master's degree in Computational Linguistics and Artificial Intelligence in Osnabrück, I faced the pleasant choice between becoming a Ph.D. student in Osnabrück or in Maastricht. The first option would have led me further into the field of Computational Linguistics.

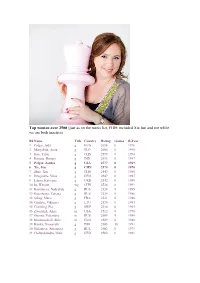

Top Women Over 2500 (Just As on the Men's List, FIDE Included Xie Jun and Me While We Are Both Inactive)

| Rk | Name | Title | Country | Rating | Games | B-Year |
|---|---|---|---|---|---|---|
| 1 | Polgar, Judit | g | HUN | 2698 | 0 | 1976 |
| 2 | Muzychuk, Anna | g | SLO | 2606 | 0 | 1990 |
| 3 | Hou, Yifan | g | CHN | 2599 | 0 | 1994 |
| 4 | Koneru, Humpy | g | IND | 2593 | 0 | 1987 |
| 5 | Polgar, Zsuzsa | g | USA | 2577 | 0 | 1969 |
| 6 | Xie, Jun | g | CHN | 2574 | 0 | 1970 |
| 7 | Zhao, Xue | g | CHN | 2549 | 0 | 1985 |
| 8 | Dzagnidze, Nana | g | GEO | 2547 | 0 | 1987 |
| 9 | Lahno, Kateryna | g | UKR | 2542 | 0 | 1989 |
| 10 | Ju, Wenjun | wg | CHN | 2528 | 0 | 1991 |
| 11 | Kosintseva, Nadezhda | g | RUS | 2524 | 9 | 1985 |
| 12 | Kosintseva, Tatiana | g | RUS | 2524 | 9 | 1986 |
| 13 | Sebag, Marie | g | FRA | 2521 | 0 | 1986 |
| 14 | Cmilyte, Viktorija | g | LTU | 2520 | 0 | 1983 |
| 15 | Cramling, Pia | g | SWE | 2514 | 6 | 1963 |
| 16 | Zatonskih, Anna | m | USA | 2512 | 0 | 1978 |
| 17 | Gunina, Valentina | m | RUS | 2509 | 9 | 1989 |
| 18 | Khotenashvili, Bela | m | GEO | 2509 | 0 | 1988 |
| 19 | Harika, Dronavalli | g | IND | 2505 | 18 | 1991 |
| 20 | Stefanova, Antoaneta | g | BUL | 2502 | 0 | 1979 |
| 21 | Chiburdanidze, Maia | g | GEO | 2500 | 0 | 1961 |

How to Play Chinese Chess
Thursday, March 1, 2012 | By the Editors

Chinese chess is never going to suddenly become a cool pastime, but take it from us: it is a lot more fun than its name at first suggests. Forget all about those boring queens and bishops; in China you get war elephants and flying cannons.

Artificial Intelligence in Modern Society, Michael Holt, Murray State University

Murray State's Digital Commons, Integrated Studies, Center for Adult and Regional Education, Spring 2018.

Recommended Citation: Holt, Michael, "Artificial Intelligence in Modern Society" (2018). Integrated Studies. 138. https://digitalcommons.murraystate.edu/bis437/138

This Thesis is brought to you for free and open access by the Center for Adult and Regional Education at Murray State's Digital Commons. It has been accepted for inclusion in Integrated Studies by an authorized administrator of Murray State's Digital Commons.

Artificial Intelligence in Modern Society. Michael C. Holt, Murray State University.

Abstract. Artificial intelligence is spreading rapidly into diverse areas of modern society. AI can be used in areas such as medical research or the creation of innovative technology, for instance autonomous vehicles. In the medical field, artificial intelligence is used to improve the accuracy of programs that detect health conditions. AI technology is also used in services such as Netflix or Spotify, where it monitors a user's habits and makes recommendations based on their recent activity. Banks use AI systems to monitor activity on members' accounts to check for identity theft, approve loans and maintain online security. Such systems can even be found in call centers, where programs analyze a caller's voice in real time and pass information to the call center staff, helping them build rapport with the caller faster.

Charles Babbage and the “First” Computer Game

"I commenced an examination of a game called 'tit-tat-to'": Charles Babbage and the "First" Computer Game

Devin Monnens, 3300 University Blvd, Winter Park, FL 32792, 303-506-5602

ABSTRACT
This paper examines Charles Babbage's tic-tac-toe automaton using original notes and sketches from Babbage's notebooks. While Babbage's work with games and computers has been mentioned previously by other authors, this is the first attempt to study that work in detail. The paper explains the origins of the automaton, imagines how it would have operated had it been built, describes how it might have functioned, and discusses Babbage's inspirations for building it. The paper concludes with an analysis of Babbage's place in the history of videogames.

Keywords: Ada Lovelace, Alan Turing, Automata, Bagatelle, Chess, Charles Babbage, Claude Shannon, Computer Chess, El Ajedrecista, John Joseph Merlin, Nim, Nimatron, Ralph Baer, The Turk, Tic-Tac-Toe, Torres y Quevedo, Wolfgang von Kempelen

INTRODUCTION
The origins of the computer game can be difficult to trace. Before early games such as Pong (1972), Spacewar! (1962), and Tennis for Two (1958), the history of games on computers becomes deeply entangled with experiments in computer chess, Goldsmith and Mann's 1947 patent for a "cathode ray tube amusement device", and the Nim-playing computer built by Westinghouse in 1939. Montfort (2005, p. 76) identifies Leonardo Torres y Quevedo's chess-playing automaton (1914) as the first computer game. While many of these inventions were not directly tied to the later development of the videogame industry, they stand out as anomalies in mankind's relationship with games and computers.

Games History.Key

Artificial Intelligence: A History of Game-Playing Programs
Nathan Sturtevant, AI for Traditional Games (with thanks to Jonathan Schaeffer)

What does it mean to be intelligent? How can we measure computer intelligence?

When will human intelligence be surpassed?

Human strengths:
• Intuition
• Visual patterns
• Deeply crafted knowledge
• Experience applied to new situations

Computer strengths:
• Fast, precise computation
• Large, perfect memory
• Repetitive, boring tasks

Why games?
• Well defined
• Well known
• Measurable
• Only 60 years ago it was an open question whether computers could play games
• Game play was thought to be a creative, human activity of which machines would be incapable

Focus:
• How did the computer AI win?
• How did the humans react?
• What mistakes were made in overstating performance?

Checkers History
• Arthur Samuel began work on checkers in the late 1950s
• Wrote a program that "learned" to play
• Beat Robert Nealey in 1962
• IBM advertised Nealey as "a former Connecticut checkers champion, and one of the nation's foremost players"
• Nealey won the rematch in 1963
• Nealey didn't win the Connecticut state championship until 1966
• Crushed by human champions in 1966

Reports of success overblown
• "...it seems safe to predict that within ten years, checkers will be a completely decidable game." Richard Bellman, Proceedings of the National Academy of Sciences
• "Computers became unbeatable in checkers several years ago." Thomas Hoover, "Intelligent Machines," Omni magazine, 1979, p.

Human Champ: Marion Tinsley
• Closest thing to a perfect human player

The Drink Tank

That cover is from Mo Starkey, who is a wonderful addition to the Drink Tank family. That kid is all sorts of creepy. The games issue has been in the works for a while. I've been a big game player for ages, especially when we eat lunch together at work. This issue is all about gaming: video, board, et cetera. I've got an article about Computers and Games and why the attempts to mix the two have been so strange. There's also another article from me that explains how I had to compact the idea of com-

The Spiel of a New Machine: Computer Chess, by Chris Garcia

Let's face it: computers were built by white guys in the 1950s. Yes, there were women who played very significant roles, and a few Hispanics and a couple of Japanese, Chinese, Black and Hispanics, but for the most part, they were white dudes in skinny ties. And when it came time to figure out when computers were smarter than us, they did what

...built into the mechanics, or it has to be a very limited system, like for end game problems. El Ajedrecista was a King vs. King and Castle end game player. It still exists, having been built in 1912, but it hasn't been played in several years. The builder, Leonardo Torres y Quevedo, did a lot of things with automata. This wasn't the first attempt, though it was the first not to be a cheat. The first was The Turk, a mechanical device that had a midget chess master inside it.

From the First Chess-Automaton to the Mars Pathfinder

Acta Polytechnica Hungarica, Vol. 13, No. 1, 2016

From the First Chess-Automaton to the Mars Pathfinder

George Kovács, Computer and Automation Research Institute, Hungarian Academy of Sciences, Kende u. 13-17, H-1111 Budapest, Hungary
Alexander Petunin*, Jevgenij Ivanko**: *,**Ural Federal University, ul. Mira 19, 620002 Ekaterinburg, Russia; **Institute of Mathematics and Mechanics, Ural Branch, Russian Academy of Sciences, 16, S. Kovalevskoi, 620990 Ekaterinburg, Russia
Nafissa Yusupova, Ufa State Aviation Technical University, 12 K. Marx Str., 450000 Ufa, Russia

Abstract: This paper aims to highlight the relationship between Artificial Intelligence, the first chess automaton (The Turk), computer chess (Deep Blue), the Mars mission (Pathfinder Sojourner), intelligent robotics and industrial robots. Biographical and technical data are presented in order to evaluate and praise the extraordinary achievements of exceptional talents, starting with two world-class Hungarian innovators: Farkas Kempelen and Antal Bejczy. This paper gives an overview of their lives and contributions, pointing out some interesting connections. A novel method for evaluating and classifying robots is suggested.

Keywords: Mars Rover; Pathfinder; Chess automaton

1 Introduction
Many Hungarians have made major contributions to the most important inventions and scientific milestones of mankind. John von Neumann, József Galamb, Ányos Jedlik, Tódor Kármán, Leó Szilárd and Miksa Déri are only a few of the many famous Hungarian scientists and engineers who played a major role in shaping our world's technology over the past two centuries.

Computer Chess - Wikipedia, the Free Encyclopedia

From Wikipedia, the free encyclopedia (http://en.wikipedia.org/wiki/Computer_chess)

The idea of creating a chess-playing machine dates back to the eighteenth century. Around 1769, the chess-playing automaton called The Turk became famous before being exposed as a hoax. Serious attempts based on automatons, such as El Ajedrecista, were too complex and limited to be useful. The field of mechanical chess research languished until the advent of the digital computer in the 1950s. Since then, chess enthusiasts and computer engineers have built chess-playing machines and computer programs with increasing degrees of seriousness and success. Chess-playing computers are available for negligible cost, and many programs (including free software such as GNU Chess, Amy and Crafty), running on virtually any modern personal computer, play well enough to defeat most master players under tournament conditions, while top commercial programs like Shredder or Fritz have surpassed even world-champion-caliber players at blitz and short time controls.

Motivation
The prime motivations for computerized chess playing have been solo entertainment (allowing players to practice and to amuse themselves when no human opponents are available), aids to chess analysis, computer chess competitions, and research to provide insights into human cognition.

Ceremony Celebrating the Award of an IEEE Milestone to the Telekino of D. Leonardo Torres Quevedo

Early Developments in Remote-Control, 1901: The Telekine
E.T.S. de Ingenieros de Caminos, Canales y Puertos, Universidad Politécnica de Madrid. 16 March 2007, 11:30.

Program

Torres Quevedo Museum (2nd basement)
• Unveiling of the commemorative plaque of the Milestone.

Green Room (first floor)
• Opening of the ceremony and welcome talk by Javier Uceda Antolín, Rector of the Universidad Politécnica de Madrid.
• Keynote speech by Professor Antonio Pérez Yuste, EUIT Telecomunicación.
• Special guest speech by Leonardo Torres Quevedo, Dr. in Civil Engineering (grandson of the inventor).
• Talk by Juan Antonio Santamera Sánchez, Director of the Civil Engineering School (E.T.S.I. Caminos).
• Talk by Dr. Lewis M. Terman, President-Elect of the Institute of Electrical and Electronics Engineers (IEEE).
• Closing of the ceremony by His Excellency