Recommended publications

Classification of Gujarati Documents Using Naïve Bayes Classifier

ISSN (Print): 0974-6846, ISSN (Online): 0974-5645. Indian Journal of Science and Technology, Vol 10(5), DOI: 10.17485/ijst/2017/v10i5/103233, February 2017. Classification of Gujarati Documents using Naïve Bayes Classifier. Rajnish M. Rakholia (School of Computer Science, R. K. University, Rajkot - 360020, Gujarat, India) and Jatinderkumar R. Saini (Narmada College of Computer Application, Bharuch - 392011, Gujarat, India). Abstract. Objectives: Information overload on the web is a major problem faced by institutions and businesses today. Sorting out useful documents from the web that are written in an Indian language is a challenging task due to morphological variance and the language barrier. As of this date, there is no document classifier available for the Gujarati language. Methods: Keyword search is one of the ways to retrieve meaningful documents from the web, but it doesn’t discriminate by context. In this paper we have presented the Naïve Bayes (NB) statistical machine learning algorithm for classification of Gujarati documents. Six pre-defined categories (sports, health, entertainment, business, astrology and spiritual) are used for this work. A corpus of 280 Gujarati documents for each category is used for training and testing the categorizer. We have used k-fold cross validation to evaluate the performance of the Naïve Bayes classifier. Findings: The experimental results show that the accuracy of the NB classifier without and with feature selection was 75.74% and 88.96% respectively. These results prove that the NB classifier contribute … Applications: The proposed research work is very useful for implementing directory search functionality in many web portals to sort useful documents, and for many Information Retrieval (IR) applications.

Numerical Notation: a Comparative History

This book is a cross-cultural reference volume of all attested numerical notation systems (graphic, nonphonetic systems for representing numbers), encompassing more than 100 such systems used over the past 5,500 years. Using a typology that defies progressive, unilinear evolutionary models of change, Stephen Chrisomalis identifies five basic types of numerical notation systems, using a cultural phylogenetic framework to show relationships between systems and to create a general theory of change in numerical systems. Numerical notation systems are primarily representational systems, not computational technologies. Cognitive factors that help explain how numerical systems change relate to general principles, such as conciseness and avoidance of ambiguity, which also apply to writing systems. The transformation and replacement of numerical notation systems relate to specific social, economic, and technological changes, such as the development of the printing press and the expansion of the global world-system. Stephen Chrisomalis is an assistant professor of anthropology at Wayne State University in Detroit, Michigan. He completed his Ph.D. at McGill University in Montreal, Quebec, where he studied under the late Bruce Trigger. Chrisomalis’s work has appeared in journals including Antiquity, Cambridge Archaeological Journal, and Cross-Cultural Research. He is the editor of the Stop: Toutes Directions project and the author of the academic weblog Glossographia.
Numerical Notation: A Comparative History. Stephen Chrisomalis, Wayne State University. CAMBRIDGE UNIVERSITY PRESS. Cambridge, New York, Melbourne, Madrid, Cape Town, Singapore, São Paulo, Delhi, Dubai, Tokyo. Cambridge University Press, The Edinburgh Building, Cambridge CB2 8RU, UK. Published in the United States of America by Cambridge University Press, New York. www.cambridge.org. Information on this title: www.cambridge.org/9780521878180. © Stephen Chrisomalis 2010. This publication is in copyright.

ICCIASH-2020 PROCEEDINGS.Pdf

St. MARTIN’S ENGINEERING COLLEGE, An Autonomous Institute. A Non Minority College | Approved by AICTE | Affiliated to JNTUH, Hyderabad | NAAC-Accredited "A+" Grade | 2(f) & 12(B) status (UGC) | ISO 9001:2008 Certified | NBA Accredited | SIRO (DSIR) | UGC-Paramarsh | Recognized Remote Center of IIT, Bombay. Dhulapally, Secunderabad – 500100, Telangana State, India. www.smec.ac.in. Department of Science & Humanities. International Conference on “Continuity, Consistency and Innovation in Applied Sciences and Humanities” on 13th & 14th August 2020 (ICCIASH – 20). Editor in Chief: Dr. P. Santosh Kumar Patra, Principal, SMEC. Editor: Dr. Ranadheer Reddy Donthi, Professor & Head, Dept. of S&H, SMEC. Editorial Committee: Dr. A. Aditya Prasad, Professor, S&H; Dr. N. Panda, Professor, S&H; Dr. S. Someshwar, Associate Professor, S&H; Dr. Andrea S. C. D’Cruz, Associate Professor, S&H; Dr. Venkanna, Associate Professor, S&H; Mr. S. Malli Babu, Associate Professor, S&H; Mr. K. Sathish, Assistant Professor, S&H. ISBN No. 978-93-88096-42-3. St. MARTIN’S ENGINEERING COLLEGE, Dhulapally, Secunderabad - 500100. NIRF ranked, NAAC A+ ACCREDITED. M. LAXMAN REDDY, CHAIRMAN. MESSAGE: I am extremely pleased to know that the department of Science & Humanities of SMEC is organizing an Online International Conference on “Continuity, Consistency and Innovation in Applied Sciences and Humanities (ICCIASH-2020)” on 13th and 14th August, 2020. I understand that a large number of researchers have submitted their research papers for presentation in the conference and also for publication. The response to this conference from all over India and foreign countries is most encouraging. I am sure all the participants will benefit from their interaction with their fellow researchers and engineers, which will help their research work and subsequently society at large.

Numbering Systems Developed by the Ancient Mesopotamians

Emergent Culture, August 2011 (http://emergent-culture.com/2011/08/). Legend of 2012, Wednesday, August 31, 2011. Cosmic Time Meets Earth Time: The Numbers of Supreme Wholeness and Reconciliation Revealed. In the process of writing about the precessional cycle I fell down a rabbit hole of sorts, and in the process of finding my way around I made what I think are 4 significant discoveries about cycles of time and the numbers that underlie and unify cosmic and earthly time. Discovery number 1: A painting by Salvador Dali. It turns out that clocks are not as bad as we think them to be. The units of time that segment the day into hours, minutes and seconds are in fact reconciled by the units of time that compose the Meso American Calendrical system, or MAC for short. It was a surprise to me because one of the world’s foremost authorities in calendrical science, the late Dr. Jose Arguelles, had vilified the numbers of Western timekeeping as a most grievous error. So much so that he attributed much of the world’s problems to the use of the 12 month calendar and the 24 hour, 60 minute, 60 second day, also known by its handy acronym 12-60 time. I never bought into his argument that the use of those time factors was at fault for our largely miserable human-planetary condition. But I was content to dismiss mechanized time as nothing more than a convenient tool to facilitate the activities of complex societies.

The Writing Revolution

The Writing Revolution: Cuneiform to the Internet. Amalia E. Gnanadesikan. A John Wiley & Sons, Ltd., Publication. This edition first published 2009 © 2009 Amalia E. Gnanadesikan. Blackwell Publishing was acquired by John Wiley & Sons in February 2007. Blackwell’s publishing program has been merged with Wiley’s global Scientific, Technical, and Medical business to form Wiley-Blackwell. Registered Office: John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex, PO19 8SQ, United Kingdom. Editorial Offices: 350 Main Street, Malden, MA 02148-5020, USA; 9600 Garsington Road, Oxford, OX4 2DQ, UK; The Atrium, Southern Gate, Chichester, West Sussex, PO19 8SQ, UK. For details of our global editorial offices, for customer services, and for information about how to apply for permission to reuse the copyright material in this book please see our website at www.wiley.com/wiley-blackwell. The right of Amalia E. Gnanadesikan to be identified as the author of this work has been asserted in accordance with the Copyright, Designs and Patents Act 1988. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, except as permitted by the UK Copyright, Designs and Patents Act 1988, without the prior permission of the publisher. Wiley also publishes its books in a variety of electronic formats. Some content that appears in print may not be available in electronic books. Designations used by companies to distinguish their products are often claimed as trademarks.

3 Writing Systems

3 Writing Systems. PETER T. DANIELS. Chapters on writing systems are very rare in surveys of linguistics – Trager (1974) and Mountford (1990) are the only ones that come to mind. For a century or so – since the realization that unwritten languages are as legitimate a field of study, and perhaps a more important one, than the world’s handful of literary languages – writing systems were (rightly) seen as secondary to phonological systems and (wrongly) set aside as unworthy of study or at best irrelevant to spoken language. The one exception was I. J. Gelb’s attempt (1952, reissued with additions and corrections 1963) to create a theory of writing informed by the linguistics of his time. Gelb said that what he wrote was meant to be the first word, not the last word, on the subject, but no successors appeared until after his death in 1985. Although there have been few linguistic explorations of writing, a number of encyclopedic compilations have appeared, concerned largely with the historical development and diffusion of writing, though various popularizations, both new and old, tend to be less than accurate (Daniels 2000). Daniels and Bright (1996; The World’s Writing Systems: hereafter WWS) includes theoretical and historical materials but is primarily descriptive, providing for most contemporary and some earlier scripts information (not previously gathered together) on how they represent (the sounds of) the languages they record. This chapter begins with a historical-descriptive survey of the world’s writing systems, and elements of a theory of writing follow.

Multi-Class Support Vector Machine Based Continuous Voiced Odia Numerals Recognition

INTERNATIONAL JOURNAL OF SCIENTIFIC & TECHNOLOGY RESEARCH, VOLUME 8, ISSUE 10, OCTOBER 2019, ISSN 2277-8616. Multi-Class Support Vector Machine Based Continuous Voiced Odia Numerals Recognition. Prithviraj Mohanty, Ajit Kumar Nayak. Abstract: With the rapid advancement of automatic speech-recognition technologies, speech-based machine interaction has attracted the attention of many researchers seeking to move their approaches from the research laboratory to real-life applications. A continuous voiced numerals recognition system is useful for physically challenged persons (blind people) or elderly people to hold a telephone conversation, set the PIN for their debit and credit cards, and devise the security code for some applications without physically touching the system. The work presented in this paper emphasizes the recognition of continuous Odia numerals using a multi-class Support Vector Machine (SVM). Three popular feature extraction techniques, PLP, LPC and MFCC, are used to extract the feature parameters from voiced numerals, which are fed as input to the recognition process. Different kernel mapping functions, namely polynomial, sigmoid, Radial Basis Function (RBF) and wavelet, are used to map the non-linear input feature space of framed signals to a linear high-dimensional feature space. To recognize the Odia numerals, multi-class SVM models are constructed using the One-Versus-All (OVA) and Half-Versus-Half (HVH) techniques. For the proposed system, diverse experiments have been performed and the results are analyzed over multi-class SVM models considering different feature parameter techniques with various kernel mapping functions. It has been observed that the OVA SVM model with MFCC for feature extraction and wavelet as the kernel mapping function provides better accuracy than the other variations.

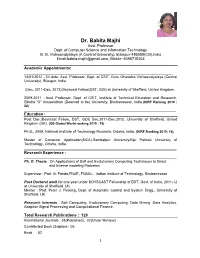

Dr. Babita Majhi Asst

Dr. Babita Majhi, Asst. Professor, Dept. of Computer Science and Information Technology, G. G. Vishwavidyalaya (A Central University), Bilaspur-495009 (CG), India. Mobile: 9098715203. Academic Appointments: 13/01/2012 – till date: Asst. Professor, Dept. of CSIT, Guru Ghasidas Vishwavidyalaya (Central University), Bilaspur, India. Dec. 2011 – Dec. 2012: Boyscast Fellow (DST, GOI) at University of Sheffield, United Kingdom. 2009–2011: Asst. Professor, Dept. of CSIT, Institute of Technical Education and Research, Siksha “O” Anusandhan (Deemed to be) University, Bhubaneswar, India (NIRF Ranking 2019: 24). Education: Post Doc. (Boyscast Fellow, DST, GOI), Dec. 2011 – Dec. 2012, University of Sheffield, United Kingdom (UK) (QS Global World ranking 2019: 78). Ph.D., 2009, National Institute of Technology Rourkela, Odisha, India (NIRF Ranking 2019: 16). Master of Computer Application (MCA), Sambalpur University/Biju Patnaik University of Technology, Odisha, India. Research Experience: Ph.D. Thesis: On Applications of Soft and Evolutionary Computing Techniques to Direct and Inverse modeling Problems. Supervisor: Prof. G. Panda, FNAE, FNASc., Indian Institute of Technology, Bhubaneswar. Post Doctoral work for one year under BOYSCAST Fellowship

An Approach for Automatic Recognition System for Indian Vehicles Numbers Using K-Nearest Neighbours and Decision Tree Classifier

International Journal of Advanced Science and Technology, Vol. 28, No. 9, (2019), pp. 477-492. An Approach for Automatic Recognition System for Indian Vehicles Numbers Using K-Nearest Neighbours and Decision Tree Classifier. Ruchika Bindal, Pradeepta Kumar Sarangi, Gagandeep Kaur, Gamini Dhiman (Chitkara University Institute of Engineering and Technology, Chitkara University, Punjab, India). Abstract: An Automatic Vehicle Number Plate Recognition System is presented in this paper. There are different kinds of motor vehicle number plates in use in India. The actual number plates are not uniform, and most of them deviate from the norms prescribed by the Government of India from time to time. The number plates of Indian vehicles are also written in different languages. The number plate characters vary according to the language of a particular state in the country; however, a large portion of number plates are written in English (legally, all plates are required to be in modern Hindu-Arabic numerals with Latin letters). This diversity matters when recognizing number plates, extracting number plate characters, and segmenting the characters. In this paper we present work on the extraction, segmentation and recognition of number plates in the domain of the English language. A Prewitt filter and thresholding are used for extracting the number plate, and connected component analysis is used for segmentation. For recognizing the characters, neural network, k-nearest neighbor and decision tree classifiers are used. The recognition rate of the neural network is 96.1%, of k-nearest neighbor 95.55% and of the decision tree 91.38%, which is acceptable for future research in this direction.

INDO-EUROPEAN NUMERALS INDO-EUROPEAN "One" and "First"

INDO-EUROPEAN "one" and "first". For Eva Havlová, the first lady of Czech etymology. §1. In Indo-European languages the numeral "one" was formed from one of two roots: A. *oy-, B. *sem-. The ordinal "first", usually derived from the root *per-/*pro-, meant originally "fore, foremost". The most important forms can be projected in the following partial reconstructions allowing their deeper analysis. Indo-Iranian: A. *oy- *oy-ko- "1" > Indo-Aryan *aika- > Mitanni Aryan aika- in a-i-ka-wa-ar-ta-na "one turn" (Puhvel 1984: 14), Old Indic éka-, Pali eka-, ekka-, Prakrit ikka, igga, ěa, Hindi ek, Oriya eka besides e (cf. Prakrit ěa), Sindhi eku etc., Kashmiri akh, Shina ik, Phalura ak, Torwali ek, ě, Tirahi, Kalasha, Dameli ek, Bashkarik, Maiyan ak, Shumashti yak, Wotapuri yek, Gawar yak, Waigali ěk, ew. Kati ev, Ashkun ač, Pashai, Khowar I (cf. Prakrit ěa). Let us mention that the alternative reconstruction *oy-kwo- (Waanders 1992: 370) is also quite legitimate. *oy-wo- "1" > Iranian *aiwa- > Avestan aěuua-, Khwarezmian ěw, Sogdian (Buddhistic) 'yw, (Manichean) lyw, (Christian) yw, Yaghnobi i, Pashto yau, Yidgha, Mundjan, Ishkashim yu, Wakhi lu, Shugni yl(w), Sarikoli T(w), Ossetic Digor ew, Iron íw, Old Persian aiva-, Zoroastrian Pahlavi ěv, ěvak (*aivaka, cf. Modern Persian yak "1" and yekom "1st", already in Zoroastrian Pahlavi ěkom "1st", Baluchi ěyók "single", evak'a "alone", Sanglechi wok, yak, Yazgulam yů(g) "1" and further Khotanese ššúka- "alone", Parthian 'wg id.), Modern Persian dial. yaw, Zaza yau, Talysh i; cf. also Old Indic adv.

Chapter 22, Symbols

The Unicode® Standard Version 13.0 – Core Specification. To learn about the latest version of the Unicode Standard, see http://www.unicode.org/versions/latest/. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. Unicode and the Unicode Logo are registered trademarks of Unicode, Inc., in the United States and other countries. The authors and publisher have taken care in the preparation of this specification, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. The Unicode Character Database and other files are provided as-is by Unicode, Inc. No claims are made as to fitness for any particular purpose. No warranties of any kind are expressed or implied. The recipient agrees to determine applicability of information provided. © 2020 Unicode, Inc. All rights reserved. This publication is protected by copyright, and permission must be obtained from the publisher prior to any prohibited reproduction. For information regarding permissions, inquire at http://www.unicode.org/reporting.html. For information about the Unicode terms of use, please see http://www.unicode.org/copyright.html. The Unicode Standard / the Unicode Consortium; edited by the Unicode Consortium. — Version 13.0. Includes index. ISBN 978-1-936213-26-9 (http://www.unicode.org/versions/Unicode13.0.0/) 1.

Feature Extraction and Dimensionality Reduction in Pattern Recognition Using Handwritten Odia Numerals

Middle-East Journal of Scientific Research 22 (10): 1514-1519, 2014. ISSN 1990-9233. © IDOSI Publications, 2014. DOI: 10.5829/idosi.mejsr.2014.22.10.21487. Feature Extraction and Dimensionality Reduction in Pattern Recognition Using Handwritten Odia Numerals. ¹,²Pradeepta K. Sarangi and Kiran K. Ravulakollu. ¹Apeejay Institute of Technology, Greater Noida, U.P, India; ²Sharda University, Greater Noida, U.P, India. Abstract: Feature extraction is the initial and critical stage that needs to be carried out for any recognition system that uses pattern matching. Many of the existing feature extraction techniques, such as Quadrant-mean, Histogram and Texture extraction, are based on the principle of partitioning the image into small segments. However, as the size of the image increases, the complexity of computation also increases. When it comes to image reconstruction from the feature values, these methods are not successful in generating a complete and valid original image. In addition, as the number of components in the image increases, the dimensionality of the features also increases. In order to reduce the feature extraction complexity, dimensionality reduction is applied to increase the recognition performance. To perform image restoration, transformation of a limited feature vector to a binary image is considered. In this paper, dimensionality reduction and feature extraction are achieved using row-wise decimal conversion for pattern recognition. This signifies the transformation of features (small number) to a normalized state, thereby preserving the quality of the image (numeral). A simple recurrent neural network (RNN) has been used as the classifier and recognition accuracy is reported. The method has been implemented on a dataset consisting of 1500 isolated handwritten Odia numerals, demonstrating an accuracy of 92.4%.