User's Guide: Converged Network Adapters (41000 Series)

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

MLNX_OFED Documentation Rev 5.0-2.1.8.0

MLNX_OFED Documentation Rev 5.0-2.1.8.0. Exported on May/21/2020 06:13 AM. https://docs.mellanox.com/x/JLV-AQ Notice: This document is provided for information purposes only and shall not be regarded as a warranty of a certain functionality, condition, or quality of a product. NVIDIA Corporation (“NVIDIA”) makes no representations or warranties, expressed or implied, as to the accuracy or completeness of the information contained in this document and assumes no responsibility for any errors contained herein. NVIDIA shall have no liability for the consequences or use of such information or for any infringement of patents or other rights of third parties that may result from its use. This document is not a commitment to develop, release, or deliver any Material (defined below), code, or functionality. NVIDIA reserves the right to make corrections, modifications, enhancements, improvements, and any other changes to this document, at any time without notice. Customer should obtain the latest relevant information before placing orders and should verify that such information is current and complete. NVIDIA products are sold subject to the NVIDIA standard terms and conditions of sale supplied at the time of order acknowledgement, unless otherwise agreed in an individual sales agreement signed by authorized representatives of NVIDIA and customer (“Terms of Sale”). NVIDIA hereby expressly objects to applying any customer general terms and conditions with regards to the purchase of the NVIDIA product referenced in this document. No contractual obligations are formed either directly or indirectly by this document. NVIDIA products are not designed, authorized, or warranted to be suitable for use in medical, military, aircraft, space, or life support equipment, nor in applications where failure or malfunction of the NVIDIA product can reasonably be expected to result in personal injury, death, or property or environmental damage.

Storage Administration Guide: SUSE Linux Enterprise Server 12 SP4

Storage Administration Guide, SUSE Linux Enterprise Server 12 SP4. Provides information about how to manage storage devices on a SUSE Linux Enterprise Server. Publication Date: September 24, 2021. SUSE LLC, 1800 South Novell Place, Provo, UT 84606, USA. https://documentation.suse.com Copyright © 2006–2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”. For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof. Contents: About This Guide; 1 Available Documentation; 2 Giving Feedback; 3 Documentation Conventions; 4 Product Life Cycle and Support (Support Statement for SUSE Linux Enterprise Server, Technology Previews). Part I, File Systems and Mounting: 1 Overview

For Immediate Release

SerialTek, an Ellisys company. FOR IMMEDIATE RELEASE. SerialTek Contact: Simon Thomas, Director, Sales and Marketing. Phone: +1-720-204-2140. Email: [email protected]. SerialTek Debuts PCIe x16 Gen5 Protocol Analysis System and Web Application: New Kodiak™ Platform Brings SerialTek Advantages to More Computing and Data Storage Markets. Longmont, CO, USA, February 24, 2021. SerialTek, a leading provider of protocol test solutions for PCI Express®, NVM Express®, Serial Attached SCSI, and Serial ATA, today introduced an advancement in the PCIe® test and analysis market with the release of the Kodiak PCIe x16 Gen5 Analysis System, as well as the industry’s first calibration-free PCIe x16 add-in-card (AIC) interposer and a new web-based BusXpert™ user interface to manage and analyze traces more efficiently than ever. The addition of PCIe x16 Gen5 to the Kodiak analyzer and SI-Fi™ interposer family brings previously unavailable analysis capabilities and efficiencies to computing, datacenter, networking, storage, AI, and other PCIe x16 Gen5 applications. With SerialTek’s proven calibration-free SI-Fi interposer technology, the Kodiak’s innovative state-of-the-art design, and the new BusXpert analyzer software, users can more easily set up the analyzer hardware, more accurately probe PCIe signals, and more efficiently analyze traces. Kodiak PCIe x16 Gen5 Analysis System: At the core of the Kodiak PCIe x16 analyzer is an embedded hardware architecture that delivers substantial and unparalleled advancements in capture, search, and processing acceleration. Interface responsiveness is markedly advanced, searches involving massive amounts of data are fast, and hardware filtering is flexible and powerful. “Once installed in a customer test environment the Kodiak’s features and benefits are immediately obvious,” said Paul Mutschler, CEO of SerialTek.

Course Outline & Schedule

Course Outline & Schedule. Call US 408-759-5074 or UK +44 20 7620 0033. SUSE Linux Advanced System Administration. Curriculum: Linux. Course Code: SLASA. Duration: 5 Day Course. Price: $2,425. Course Description: This instructor-led SUSE Linux Advanced System Administration training course is designed to teach the advanced administration, security, networking and performance tasks required on a SUSE Linux Enterprise system. Targeted to closely follow the official LPI curriculum (generic Linux), this course together with the SUSE Linux System Administration course will enable the delegate to work towards achieving the LPIC-2 qualification. Exercises and examples are used throughout the course to give practical hands-on experience with the techniques covered. Objectives: The delegate will learn and acquire skills as follows: Perform administrative tasks with supplied tools such as YaST; Advanced network configuration; Network troubleshooting and analysing packets; Creating Apache virtual hosts and hosting user web content; Sharing Windows and Linux resources with SAMBA; Configuring a DNS server and configuring DNS logging; Configuring a DHCP server and client; Sharing Linux network resources with NFS; Creating Unit Files; Configuring AutoFS direct and indirect maps; Configuring a secure FTP server; Configuring a SQUID proxy server; Creating Btrfs subvolumes and snapshots; Backing-up and restoring XFS filesystems; Configuring LVM and managing Logical Volumes; Managing software RAID; Centralised storage with iSCSI; Monitoring disk status and reliability with SMART; Perpetual

fstrim, lvm and dmcrypt

synaptic fault mind dumped. How to properly activate TRIM for your SSD on Linux: fstrim, lvm and dmcrypt. Posted on Saturday, 23rd February 2013. Unlike hard disk drives (HDDs), the NAND flash memory that makes up an SSD cannot overwrite existing data. This means that you first have to delete the old data before writing new data. Flash memory is divided into blocks, which are further divided into pages. The minimum write unit is a page, but the smallest erase unit is a block. Data can be written directly into an empty page, but only whole blocks can be erased. Therefore, to reclaim the space taken up by invalid data, all the valid data from one block must first be copied and written into the empty pages of a new block. Only then can the invalid data in the original block be erased, making it ready for new valid data to be written. Do you see the problem? This means that as time goes on, the SSD will internally fragment the blocks among the different pages, until it reaches a point where no empty pages are available.

NVM Express Over Fabrics

NVM Express Over Fabrics. Dave Minturn, Intel Corp. OFADevWorkshop. NVMe in Fabric Environments: • A primary use case for NVMe PCIe SSDs is in an all flash appliance • Hundreds or more SSDs may be attached – too many for PCIe based attach scale-out • Concern: Today remote SSD scale-out over a fabric attach uses SCSI based protocols, requiring protocol translation. Desire best performance and latency from NVMe SSD investment over fabrics like Ethernet with RDMA (iWARP, RoCE), InfiniBand™, and Intel® Omni-Path Architecture. Realizing Benefit of Next Gen NVM over Fabrics: • PCIe NVMe SSD latency may be < 10 µs with Next Generation NVM • Using a SCSI-based protocol for remote NVMe access adds over 100 µs* in latency • Usage models require efficient write mirroring of PCIe Next Gen NVMe SSDs over fabrics. (*Source: Intel measurements.) Concern: Low latency of Next Gen NVM lost in (SCSI) translation. Why NVMe over Fabrics? Simplicity, Efficiency and End-to-End NVMe Model: • Simplicity of protocol enables hardware automated I/O Queues – NVMe transport bridge • No translation to or from another protocol like SCSI (in firmware/software) • Inherent parallelism of NVMe multiple I/O Queues is exposed to the host • NVMe commands and structures are transferred end-to-end • Maintains the NVMe architecture across a range of fabric types • Maintains architecture and software consistency between fabric types by standardizing a common abstraction and encapsulation definition. Performance Goal: Make remote NVMe access over fabrics equivalent to local PCIe attached NVMe,

Delock External Enclosure for M.2 NVMe PCIe SSD with SuperSpeed USB 20 Gbps (USB 3.2 Gen 2x2) USB Type-C™ Female

Delock External Enclosure for M.2 NVMe PCIe SSD with SuperSpeed USB 20 Gbps (USB 3.2 Gen 2x2) USB Type-C™ female. Description: This enclosure by Delock enables the installation of an M.2 PCIe NVMe SSD in 2280 format; it can be connected via USB to the PC or laptop. The robust metal housing with cooling fins ensures an optimum temperature of the memory. SuperSpeed USB 20 Gbps: The enclosure allows a data transfer rate of 20 Gbps on the USB-C™ port. Item no. 42000. EAN: 4043619420001. Country of origin: China. Package: Retail Box. Specification: • Connectors: external: 1 x SuperSpeed USB 20 Gbps (USB 3.2 Gen 2x2) USB Type-C™ female; internal: 1 x 67 pin M.2 key M slot • Chipset: Asmedia ASM2364 • Supports M.2 modules in format 2280 with key M or key B+M based on PCIe (NVMe) • Maximum height of the components on the module: 1.5 mm, application of double-sided assembled modules supported • Supports NVM Express (NVMe) • Data transfer rate up to 20 Gbps • LED indicator for power and access • Metal housing • Dimensions (LxWxH): ca. 99 x 50 x 18 mm • Hot Plug, Plug & Play. System requirements: • Android 9.0 or above • Chrome OS 78.0 or above • Linux Kernel 4.6 or above • Mac OS 10.15.3 or above • Windows 8.1/8.1-64/10/10-64 • PC or laptop with a free USB Type-C™ port • PC or laptop with a free Thunderbolt™ 3 port. Package content: • External enclosure M.2 • Mounting material • 1 x thermal conductive pad • Screwdriver • Cable USB-C™ male to USB-C™ male, length ca.

Certification Report BSI-DSZ-CC-0999-2016

BSI-DSZ-CC-0999-2016 for Red Hat Enterprise Linux Version 7.1 from Red Hat. BSI - Bundesamt für Sicherheit in der Informationstechnik, Postfach 20 03 63, D-53133 Bonn. Phone +49 (0)228 99 9582-0, Fax +49 (0)228 9582-5477, Infoline +49 (0)228 99 9582-111. Certification Report V1.0, CC-Zert-327 V5.14. BSI-DSZ-CC-0999-2016 (*): Operating System Red Hat Enterprise Linux Version 7.1 from Red Hat. SOGIS Recognition Agreement. Common Criteria Recognition Arrangement. PP Conformance: Operating System Protection Profile, Version 2.0, 01 June 2010, BSI-CC-PP-0067-2010; OSPP Extended Package – Advanced Management, Version 2.0, 28 May 2010; OSPP Extended Package – Labeled Security, Version 2.0, 28 May 2010. Functionality: PP conformant plus product specific extensions; Common Criteria Part 2 extended. Assurance: Common Criteria Part 3 conformant; EAL 4 augmented by ALC_FLR.3. The IT Product identified in this certificate has been evaluated at an approved evaluation facility using the Common Methodology for IT Security Evaluation (CEM), Version 3.1 extended by Scheme Interpretations for conformance to the Common Criteria for IT Security Evaluation (CC), Version 3.1. CC and CEM are also published as ISO/IEC 15408 and ISO/IEC 18045. (*) This certificate applies only to the specific version and release of the product in its evaluated configuration and in conjunction with the complete Certification Report and Notification. For details on the validity see Certification Report part A chapter 4. The evaluation has been conducted in accordance with the provisions of the certification scheme of the German Federal Office for Information Security (BSI) and the conclusions of the evaluation facility in the evaluation technical report are consistent with the evidence adduced.

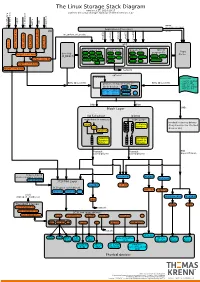

The Linux Storage Stack Diagram

The Linux Storage Stack Diagram, version 3.17, 2014-10-17. Outlines the Linux storage stack as of kernel version 3.17. [Diagram: applications (processes) and system calls; VFS with block-based, network, pseudo, and special-purpose file systems; page cache and direct I/O (O_DIRECT); the LIO target stack; BIOs (block I/O); optional stackable devices on top of "normal" block devices (drbd, LVM, device mapper, mdraid, bcache); the block layer with I/O schedulers and blk-mq software and hardware dispatch queues; BIO-based and request-based drivers; SCSI mid layer, upper level drivers, and transport classes.]

Unbreakable Enterprise Kernel Release Notes for Unbreakable Enterprise Kernel Release 3

Unbreakable Enterprise Kernel Release Notes for Unbreakable Enterprise Kernel Release 3 E48380-10 June 2020 Oracle Legal Notices Copyright © 2013, 2020, Oracle and/or its affiliates. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are "commercial computer software" or "commercial computer software documentation" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. 
As such, the use, reproduction, duplication, release, display, disclosure, modification, preparation of derivative works, and/or adaptation of i) Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs), ii) Oracle computer documentation and/or iii) other Oracle data, is subject to the rights and limitations specified in the license contained in the applicable contract.

Emulex Drivers for Linux User Guide

Emulex® Drivers for Linux User Guide, Release 12.6. Broadcom, DRVLin-UG126-100, February 4, 2020. Broadcom, the pulse logo, Connecting everything, Avago Technologies, Avago, the A logo, Emulex, ExpressLane, and OneCommand are among the trademarks of Broadcom and/or its affiliates in the United States, certain other countries, and/or the EU. Copyright © 2003–2020 Broadcom. All Rights Reserved. The term “Broadcom” refers to Broadcom Inc. and/or its subsidiaries. For more information, please visit www.broadcom.com. Broadcom reserves the right to make changes without further notice to any products or data herein to improve reliability, function, or design. Information furnished by Broadcom is believed to be accurate and reliable. However, Broadcom does not assume any liability arising out of the application or use of this information, nor the application or use of any product or circuit described herein, neither does it convey any license under its patent rights nor the rights of others. Table of Contents: Chapter 1: Introduction; 1.1 Overview; 1.2 Abbreviations; Chapter 2: Installing

Parallel NFS (pNFS)

Red Hat Enterprise 7 Beta File Systems: New Scale, Speed & Features. Ric Wheeler, Director, Red Hat Kernel File & Storage Team, Red Hat Storage Engineering. Agenda: • Red Hat Enterprise Linux 7 Storage Features • Red Hat Enterprise Linux 7 Storage Management Features • Red Hat Enterprise Linux 7 File Systems • What is Parallel NFS? • Red Hat Enterprise Linux 7 NFS. Red Hat Enterprise Linux 7 Storage Foundations. Red Hat Enterprise Linux 6 File & Storage Foundations: • Red Hat Enterprise Linux 6 provides key foundations for Red Hat Enterprise Linux 7: LVM Support for Scalable Snapshots; Device Mapper Thin Provisioned Storage; Expanded options for file systems • Large investment in performance enhancements • First in industry support for Parallel NFS (pNFS). LVM Thinp and Snapshot Redesign: • LVM thin provisioned LV (logical volume): Eliminates the need to pre-allocate space; Logical Volume space allocated from shared pool as needed; Typically a high end, enterprise storage array feature; Makes re-sizing a file system almost obsolete! • New snapshot design, based on thinp: Space efficient and much more scalable; Blocks are allocated from the shared pool for COW operations; Multiple snapshots can have references to same COW data; Scales to many snapshots, snapshots of snapshots. RHEL Storage Provisioning: Improved Resource Utilization & Ease of Use. Avoids wasting space * Less administration * Lower costs. [Figure: Traditional Provisioning vs. RHEL Thin Provisioning, showing allocated, unused, and free space per volume and in the shared storage pool.]