Reliable Storage for HA, DR, Clouds and Containers Philipp Reisner, CEO LINBIT LINBIT - the Company Behind It

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Storage Administration Guide Storage Administration Guide SUSE Linux Enterprise Server 12 SP4

SUSE Linux Enterprise Server 12 SP4 Storage Administration Guide Storage Administration Guide SUSE Linux Enterprise Server 12 SP4 Provides information about how to manage storage devices on a SUSE Linux Enterprise Server. Publication Date: September 24, 2021 SUSE LLC 1800 South Novell Place Provo, UT 84606 USA https://documentation.suse.com Copyright © 2006– 2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”. For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its aliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its aliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof. Contents About This Guide xii 1 Available Documentation xii 2 Giving Feedback xiv 3 Documentation Conventions xiv 4 Product Life Cycle and Support xvi Support Statement for SUSE Linux Enterprise Server xvii • Technology Previews xviii I FILE SYSTEMS AND MOUNTING 1 1 Overview -

The Linux Kernel Module Programming Guide

The Linux Kernel Module Programming Guide Peter Jay Salzman Michael Burian Ori Pomerantz Copyright © 2001 Peter Jay Salzman 2007−05−18 ver 2.6.4 The Linux Kernel Module Programming Guide is a free book; you may reproduce and/or modify it under the terms of the Open Software License, version 1.1. You can obtain a copy of this license at http://opensource.org/licenses/osl.php. This book is distributed in the hope it will be useful, but without any warranty, without even the implied warranty of merchantability or fitness for a particular purpose. The author encourages wide distribution of this book for personal or commercial use, provided the above copyright notice remains intact and the method adheres to the provisions of the Open Software License. In summary, you may copy and distribute this book free of charge or for a profit. No explicit permission is required from the author for reproduction of this book in any medium, physical or electronic. Derivative works and translations of this document must be placed under the Open Software License, and the original copyright notice must remain intact. If you have contributed new material to this book, you must make the material and source code available for your revisions. Please make revisions and updates available directly to the document maintainer, Peter Jay Salzman <[email protected]>. This will allow for the merging of updates and provide consistent revisions to the Linux community. If you publish or distribute this book commercially, donations, royalties, and/or printed copies are greatly appreciated by the author and the Linux Documentation Project (LDP). -

Kubernetes Security Guide Contents

Kubernetes Security Guide Contents Intro 4 CHAPTER 1 Securing your container images and CI/CD pipeline 6 Image scanning 6 What is image scanning 7 Docker image scanning open source tools 7 Open source Docker scanning tool: Anchore Engine 8 Securing your CI/CD pipeline 9 Image scanning in CI/CD 10 CHAPTER 2 Securing Kubernetes Control Plane 14 Kubelet security 14 Access to the kubelet API 15 Kubelet access to Kubernetes API 16 RBAC example, accessing the kubelet API with curl 16 Kubernetes API audit and security log 17 Audit log policies configuration 19 Extending the Kubernetes API using security admission controllers 20 Securing Kubernetes etcd 23 PKI-based authentication for etcd 23 etcd peer-to-peer TLS 23 Kubernetes API to etcd cluster TLS 24 Using a trusted Docker registry 24 Kubernetes trusted image collections: Banning non trusted registry 26 Kubernetes TLS certificates rotation and expiration 26 Kubernetes kubelet TLS certificate rotation 27 Kubernetes serviceAccount token rotation 28 Kubernetes user TLS certificate rotation 29 Securing Kubernetes hosts 29 Kubernetes 2 Security Guide Using a minimal host OS 30 Update system patches 30 Node recycling 30 Running CIS benchmark security tests 31 CHAPTER 3 Understanding Kubernetes RBAC 32 Kubernetes role-based access control (RBAC) 32 RBAC configuration: API server flags 34 How to create Kubernetes users and serviceAccounts 34 How to create a Kubernetes serviceAccount step by step 35 How to create a Kubernetes user step by step 37 Using an external user directory 40 CHAPTER 4 Security -

Running Legacy VM's Along with Containers in Kubernetes!

Running Legacy VM’s along with containers in Kubernetes Delusion or Reality? Kunal Kushwaha NTT Open Source Software Center Copyright©2019 NTT Corp. All Rights Reserved. About me • Work @ NTT Open Source Software Center • Collaborator (Core developer) for libpod (podman) • Contributor KubeVirt, buildkit and other related projects • Docker Community Leader @ Tokyo Chapter Copyright©2019 NTT Corp. All Rights Reserved. 2 Growth of Containers in Companies Adoption of containers in production has significantly increased Credits: CNCF website Copyright©2019 NTT Corp. All Rights Reserved. 3 Growth of Container Orchestration usage Adoption of container orchestrator like Kubernetes have also increased significantly on public as well private clouds. Credits: CNCF website Copyright©2019 NTT Corp. All Rights Reserved. 4 Infrastructure landscape app-2 app-2 app-M app-1 app-2 app-N app-1 app-1 app-N VM VM VM kernel VM Platform VM Platform Existing Products New Products • The application infrastructure is fragmented as most of old application still running on traditional infrastructure. • Fragmentation means more work & increase in cost Copyright©2019 NTT Corp. All Rights Reserved. 5 What keeps applications away from Containers • Lack of knowledge / Too complex to migrate in containers. • Dependency on custom kernel parameters. • Application designed for a custom kernel. • Application towards the end of life. Companies prefer to re-write application, rather than directly migrating them to containers. https://dzone.com/guides/containers-orchestration-and-beyond Copyright©2019 NTT Corp. All Rights Reserved. 6 Ideal World app-2 app-2 app-M app-1 app-2 app-N app-1 app-1 app-N VM VM VM kernel VM Platform • Applications in VM and containers can be managed with same control plane • Management/ Governance Policies like RBAC, Network etc. -

The Xen Port of Kexec / Kdump a Short Introduction and Status Report

The Xen Port of Kexec / Kdump A short introduction and status report Magnus Damm Simon Horman VA Linux Systems Japan K.K. www.valinux.co.jp/en/ Xen Summit, September 2006 Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 1 / 17 Outline Introduction to Kexec What is Kexec? Kexec Examples Kexec Overview Introduction to Kdump What is Kdump? Kdump Kernels The Crash Utility Xen Porting Effort Kexec under Xen Kdump under Xen The Dumpread Tool Partial Dumps Current Status Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 2 / 17 Introduction to Kexec Outline Introduction to Kexec What is Kexec? Kexec Examples Kexec Overview Introduction to Kdump What is Kdump? Kdump Kernels The Crash Utility Xen Porting Effort Kexec under Xen Kdump under Xen The Dumpread Tool Partial Dumps Current Status Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 3 / 17 Kexec allows you to reboot from Linux into any kernel. as long as the new kernel doesn’t depend on the BIOS for setup. Introduction to Kexec What is Kexec? What is Kexec? “kexec is a system call that implements the ability to shutdown your current kernel, and to start another kernel. It is like a reboot but it is indepedent of the system firmware...” Configuration help text in Linux-2.6.17 Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 4 / 17 . as long as the new kernel doesn’t depend on the BIOS for setup. Introduction to Kexec What is Kexec? What is Kexec? “kexec is a system call that implements the ability to shutdown your current kernel, and to start another kernel. -

Anatomy of Linux Loadable Kernel Modules a 2.6 Kernel Perspective

Anatomy of Linux loadable kernel modules A 2.6 kernel perspective Skill Level: Intermediate M. Tim Jones ([email protected]) Consultant Engineer Emulex Corp. 16 Jul 2008 Linux® loadable kernel modules, introduced in version 1.2 of the kernel, are one of the most important innovations in the Linux kernel. They provide a kernel that is both scalable and dynamic. Discover the ideas behind loadable modules, and learn how these independent objects dynamically become part of the Linux kernel. The Linux kernel is what's known as a monolithic kernel, which means that the majority of the operating system functionality is called the kernel and runs in a privileged mode. This differs from a micro-kernel, which runs only basic functionality as the kernel (inter-process communication [IPC], scheduling, basic input/output [I/O], memory management) and pushes other functionality outside the privileged space (drivers, network stack, file systems). You'd think that Linux is then a very static kernel, but in fact it's quite the opposite. Linux can be dynamically altered at run time through the use of Linux kernel modules (LKMs). More in Tim's Anatomy of... series on developerWorks • Anatomy of Linux flash file systems • Anatomy of Security-Enhanced Linux (SELinux) • Anatomy of real-time Linux architectures • Anatomy of the Linux SCSI subsystem • Anatomy of the Linux file system • Anatomy of the Linux networking stack Anatomy of Linux loadable kernel modules © Copyright IBM Corporation 1994, 2008. All rights reserved. Page 1 of 11 developerWorks® ibm.com/developerWorks • Anatomy of the Linux kernel • Anatomy of the Linux slab allocator • Anatomy of Linux synchronization methods • All of Tim's Anatomy of.. -

Kdump, a Kexec-Based Kernel Crash Dumping Mechanism

Kdump, A Kexec-based Kernel Crash Dumping Mechanism Vivek Goyal Eric W. Biederman Hariprasad Nellitheertha IBM Linux NetworkX IBM [email protected] [email protected] [email protected] Abstract important consideration for the success of a so- lution has been the reliability and ease of use. Kdump is a crash dumping solution that pro- Kdump is a kexec based kernel crash dump- vides a very reliable dump generation and cap- ing mechanism, which is being perceived as turing mechanism [01]. It is simple, easy to a reliable crash dumping solution for Linux R . configure and provides a great deal of flexibility This paper begins with brief description of what in terms of dump device selection, dump saving kexec is and what it can do in general case, and mechanism, and plugging-in filtering mecha- then details how kexec has been modified to nism. boot a new kernel even in a system crash event. The idea of kdump has been around for Kexec enables booting into a new kernel while quite some time now, and initial patches for preserving the memory contents in a crash sce- kdump implementation were posted to the nario, and kdump uses this feature to capture Linux kernel mailing list last year [03]. Since the kernel crash dump. Physical memory lay- then, kdump has undergone significant design out and processor state are encoded in ELF core changes to ensure improved reliability, en- format, and these headers are stored in a re- hanced ease of use and cleaner interfaces. This served section of memory. Upon a crash, new paper starts with an overview of the kdump de- kernel boots up from reserved memory and pro- sign and development history. -

Communicating Between the Kernel and User-Space in Linux Using Netlink Sockets

SOFTWARE—PRACTICE AND EXPERIENCE Softw. Pract. Exper. 2010; 00:1–7 Prepared using speauth.cls [Version: 2002/09/23 v2.2] Communicating between the kernel and user-space in Linux using Netlink sockets Pablo Neira Ayuso∗,∗1, Rafael M. Gasca1 and Laurent Lefevre2 1 QUIVIR Research Group, Departament of Computer Languages and Systems, University of Seville, Spain. 2 RESO/LIP team, INRIA, University of Lyon, France. SUMMARY When developing Linux kernel features, it is a good practise to expose the necessary details to user-space to enable extensibility. This allows the development of new features and sophisticated configurations from user-space. Commonly, software developers have to face the task of looking for a good way to communicate between kernel and user-space in Linux. This tutorial introduces you to Netlink sockets, a flexible and extensible messaging system that provides communication between kernel and user-space. In this tutorial, we provide fundamental guidelines for practitioners who wish to develop Netlink-based interfaces. key words: kernel interfaces, netlink, linux 1. INTRODUCTION Portable open-source operating systems like Linux [1] provide a good environment to develop applications for the real-world since they can be used in very different platforms: from very small embedded devices, like smartphones and PDAs, to standalone computers and large scale clusters. Moreover, the availability of the source code also allows its study and modification, this renders Linux useful for both the industry and the academia. The core of Linux, like many modern operating systems, follows a monolithic † design for performance reasons. The main bricks that compose the operating system are implemented ∗Correspondence to: Pablo Neira Ayuso, ETS Ingenieria Informatica, Department of Computer Languages and Systems. -

Ovirt and Docker Integration

oVirt and Docker Integration October 2014 Federico Simoncelli Principal Software Engineer – Red Hat oVirt and Docker Integration, Oct 2014 1 Agenda ● Deploying an Application (Old-Fashion and Docker) ● Ecosystem: Kubernetes and Project Atomic ● Current Status of Integration ● oVirt Docker User-Interface Plugin ● “Dockerized” oVirt Engine ● Docker on Virtualization ● Possible Future Integration ● Managing Containers as VMs ● Future Multi-Purpose Data Center oVirt and Docker Integration, Oct 2014 2 Deploying an Application (Old-Fashion) ● Deploying an instance of Etherpad # yum search etherpad Warning: No matches found for: etherpad No matches found $ unzip etherpad-lite-1.4.1.zip $ cd etherpad-lite-1.4.1 $ vim README.md ... ## GNU/Linux and other UNIX-like systems You'll need gzip, git, curl, libssl develop libraries, python and gcc. *For Debian/Ubuntu*: `apt-get install gzip git-core curl python libssl-dev pkg- config build-essential` *For Fedora/CentOS*: `yum install gzip git-core curl python openssl-devel && yum groupinstall "Development Tools"` *For FreeBSD*: `portinstall node, npm, git (optional)` Additionally, you'll need [node.js](http://nodejs.org) installed, Ideally the latest stable version, be careful of installing nodejs from apt. ... oVirt and Docker Integration, Oct 2014 3 Installing Dependencies (Old-Fashion) ● 134 new packages required $ yum install gzip git-core curl python openssl-devel Transaction Summary ================================================================================ Install 2 Packages (+14 Dependent -

Container and Kernel-Based Virtual Machine (KVM) Virtualization for Network Function Virtualization (NFV)

Container and Kernel-Based Virtual Machine (KVM) Virtualization for Network Function Virtualization (NFV) White Paper August 2015 Order Number: 332860-001US YouLegal Lines andmay Disclaimers not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Copies of documents which have an order number and are referenced in this document may be obtained by calling 1-800-548-4725 or by visiting: http://www.intel.com/ design/literature.htm. Intel technologies’ features and benefits depend on system configuration and may require enabled hardware, software or service activation. Learn more at http:// www.intel.com/ or from the OEM or retailer. Results have been estimated or simulated using internal Intel analysis or architecture simulation or modeling, and provided to you for informational purposes. Any differences in your system hardware, software or configuration may affect your actual performance. For more complete information about performance and benchmark results, visit www.intel.com/benchmarks. Tests document performance of components on a particular test, in specific systems. -

SUSE Linux Enterprise Server 15 SP2 Autoyast Guide Autoyast Guide SUSE Linux Enterprise Server 15 SP2

SUSE Linux Enterprise Server 15 SP2 AutoYaST Guide AutoYaST Guide SUSE Linux Enterprise Server 15 SP2 AutoYaST is a system for unattended mass deployment of SUSE Linux Enterprise Server systems. AutoYaST installations are performed using an AutoYaST control le (also called a “prole”) with your customized installation and conguration data. Publication Date: September 24, 2021 SUSE LLC 1800 South Novell Place Provo, UT 84606 USA https://documentation.suse.com Copyright © 2006– 2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”. For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its aliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its aliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof. Contents 1 Introduction to AutoYaST 1 1.1 Motivation 1 1.2 Overview and Concept 1 I UNDERSTANDING AND CREATING THE AUTOYAST CONTROL FILE 4 2 The AutoYaST Control -

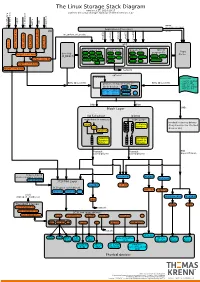

The Linux Storage Stack Diagram

The Linux Storage Stack Diagram version 3.17, 2014-10-17 outlines the Linux storage stack as of Kernel version 3.17 ISCSI USB mmap Fibre Channel Fibre over Ethernet Fibre Channel Fibre Virtual Host Virtual FireWire (anonymous pages) Applications (Processes) LIO malloc vfs_writev, vfs_readv, ... ... stat(2) read(2) open(2) write(2) chmod(2) VFS tcm_fc sbp_target tcm_usb_gadget tcm_vhost tcm_qla2xxx iscsi_target_mod block based FS Network FS pseudo FS special Page ext2 ext3 ext4 proc purpose FS target_core_mod direct I/O NFS coda sysfs Cache (O_DIRECT) xfs btrfs tmpfs ifs smbfs ... pipefs futexfs ramfs target_core_file iso9660 gfs ocfs ... devtmpfs ... ceph usbfs target_core_iblock target_core_pscsi network optional stackable struct bio - sector on disk BIOs (Block I/O) BIOs (Block I/O) - sector cnt devices on top of “normal” - bio_vec cnt block devices drbd LVM - bio_vec index - bio_vec list device mapper mdraid dm-crypt dm-mirror ... dm-cache dm-thin bcache BIOs BIOs Block Layer BIOs I/O Scheduler blkmq maps bios to requests multi queue hooked in device drivers noop Software (they hook in like stacked ... Queues cfq devices do) deadline Hardware Hardware Dispatch ... Dispatch Queue Queues Request Request BIO based Drivers based Drivers based Drivers request-based device mapper targets /dev/nullb* /dev/vd* /dev/rssd* dm-multipath SCSI Mid Layer /dev/rbd* null_blk SCSI upper level drivers virtio_blk mtip32xx /dev/sda /dev/sdb ... sysfs (transport attributes) /dev/nvme#n# /dev/skd* rbd Transport Classes nvme skd scsi_transport_fc network