Comparison of Lossless Compression Schemes for High Rate Electrical Grid

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


FLAC Decoder Using ARM920T Using S3C2440

International Journal of Engineering Research and Development, e-ISSN: 2278-067X, p-ISSN: 2278-800X, www.ijerd.com, Volume 4, Issue 7 (November 2012), pp. 21-24. FLAC Decoder using ARM920T using S3C2440. J. L. DivyaShivani (M.Tech (Embedded Systems) student) and M. Madan Gopal (Assoc. Professor), Aurora’s Technological & Research Institute, Uppal, Hyderabad, India.

Abstract: In this paper, an embedded FLAC decoder system is designed, and an ARM920T embedded development platform is built for the design. The IIS bus of the S3C2440 under Linux is used in the decoder system. Results show that FLAC audio plays well on the decoder system. The decoding solution can be applied to many high-end audio devices.

With the development of multimedia technology and listeners' demand for higher sound quality, lossy audio formats such as MP3 no longer satisfy many music lovers. Many R&D teams have therefore researched lossless audio decoding systems for embedded devices that offer a lower price and better sound quality. FLAC stands for Free Lossless Audio Codec, an audio format similar to MP3 but lossless, meaning that audio is compressed in FLAC without any loss in quality. This is similar to how Zip works, except that FLAC achieves much better compression because it is designed specifically for audio, and compressed FLAC files can be played back in your favorite player (or your car or home stereo) just like an MP3 file. FLAC stands out as the fastest and most widely supported lossless audio codec, and the only one that at once is non-proprietary, is unencumbered by patents, has an open-source reference implementation, has a well-documented format and API, and has several other independent implementations.

Data Compression: Dictionary-Based Coding

Heiko Schwarz (Freie Universität Berlin), Data Compression: Dictionary-based Coding

[Figure: a sliding window around the cursor, with a search buffer of N already coded symbols and a look-ahead buffer of L not yet coded symbols.]

We know the past but cannot control it. We control the future but...

Last Lecture: Predictive Lossless Coding

• Predictive lossless coding: a simple and effective way to exploit dependencies between neighboring symbols / samples
• Optimal predictor: conditional mean (requires storage of large tables)
• Affine and linear prediction: simple structure, low-complexity implementation possible; optimal prediction parameters are given by the solution of the Yule-Walker equations; works very well for real signals (e.g., audio, images, ...)

Efficient Lossless Coding for Real-World Signals

• Affine/linear prediction (often: block-adaptive choice of prediction parameters)
• Entropy coding of prediction errors (e.g., arithmetic coding)
• Using the marginal pmf often already yields good results
• Can be improved by using conditional pmfs (with simple conditions)

Dictionary-Based Coding: Coding of Text Files

• Very high amount of dependencies
• Affine prediction does not work (requires linear dependencies)
• Higher-order conditional coding should work well, but is way too complex (memory)
• Alternative: do not code single characters, but words or phrases

Example: English Texts

• The Oxford English Dictionary lists fewer than 230 000 words (including obsolete words)
• On average, a word contains about 6 characters
• Average codeword length per character would be limited by 1
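The search buffer and look-ahead buffer mentioned in this excerpt are the building blocks of sliding-window (LZ77-style) dictionary coding. The following is a minimal sketch under stated assumptions, not the lecture's own algorithm: the function names, the triple format `(offset, length, next_byte)`, and the default buffer sizes are all illustrative choices.

```python
def lz77_encode(data: bytes, search_size: int = 32, lookahead_size: int = 8):
    """Encode data as (offset, length, next_byte) triples (illustrative format)."""
    triples = []
    pos = 0
    while pos < len(data):
        best_offset, best_length = 0, 0
        window_start = max(0, pos - search_size)
        # Reserve one byte so every triple can carry an explicit literal.
        max_length = min(lookahead_size, len(data) - pos - 1)
        for candidate in range(window_start, pos):
            length = 0
            while (length < max_length
                   and data[candidate + length] == data[pos + length]):
                length += 1
            if length > best_length:
                best_offset, best_length = pos - candidate, length
        triples.append((best_offset, best_length, data[pos + best_length]))
        pos += best_length + 1
    return triples


def lz77_decode(triples) -> bytes:
    """Rebuild the byte string by copying from the already decoded past."""
    out = bytearray()
    for offset, length, next_byte in triples:
        for _ in range(length):
            out.append(out[-offset])  # byte-wise copy handles overlapping matches
        out.append(next_byte)
    return bytes(out)


if __name__ == "__main__":
    message = b"abracadabra abracadabra"
    encoded = lz77_encode(message)
    print(len(message), "bytes ->", len(encoded), "triples")
    print(lz77_decode(encoded) == message)
```

The brute-force match search keeps the sketch short; practical encoders typically use hash chains or similar structures to find matches faster.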

Package 'Brotli'

Package ‘brotli’
May 13, 2018

Type: Package
Title: A Compression Format Optimized for the Web
Version: 1.2
Description: A lossless compressed data format that uses a combination of the LZ77 algorithm and Huffman coding. Brotli is similar in speed to deflate (gzip) but offers more dense compression.
License: MIT + file LICENSE
URL: https://tools.ietf.org/html/rfc7932 (spec), https://github.com/google/brotli#readme (upstream), http://github.com/jeroen/brotli#read (devel)
BugReports: http://github.com/jeroen/brotli/issues
VignetteBuilder: knitr, R.rsp
Suggests: spelling, knitr, R.rsp, microbenchmark, rmarkdown, ggplot2
RoxygenNote: 6.0.1
Language: en-US
NeedsCompilation: yes
Author: Jeroen Ooms [aut, cre] (<https://orcid.org/0000-0002-4035-0289>), Google, Inc [aut, cph] (Brotli C++ library)
Maintainer: Jeroen Ooms <[email protected]>
Repository: CRAN
Date/Publication: 2018-05-13 20:31:43 UTC

brotli: Brotli Compression

Description: Brotli is a compression algorithm optimized for the web, in particular small text documents.

Usage:
brotli_compress(buf, quality = 11, window = 22)
brotli_decompress(buf)

Arguments:
buf: raw vector with data to compress/decompress
quality: value between 0 and 11
window: log of window size

Details: Brotli decompression is at least as fast as for gzip while significantly improving the compression ratio. The price we pay is that compression is much slower than gzip. Brotli is therefore most effective for serving static content such as fonts and html pages. For binary (non-text) data, the compression ratio of Brotli usually does not beat bz2 or xz (lzma), however decompression for these algorithms is too slow for browsers in e.g.

Lossless Audio Codec Comparison

Contents

Introduction
1 CD-audio test
1.1 CD's used
1.2 Results all CD's together
1.3 Interesting quirks
1.3.1 Mono encoded as stereo (Dan Browns Angels and Demons)
1.3.2 Compressibility
1.4 Convergence of the results
2 High-resolution audio
2.1 Nine Inch Nails' The Slip
2.2 Howard Shore's soundtrack for The Lord of the Rings: The Return of the King
2.3 Wasted bits
3 Multichannel audio
3.1 Howard Shore's soundtrack for The Lord of the Rings: The Return of the King
A Motivation for choosing these CDs
B Test setup
B.1 Scripting and graphing
B.2 Codecs and parameters used
B.3 MD5 checksumming
C Revision history
Bibliography

Introduction

While testing the efficiency of lossy codecs can be quite cumbersome (as results differ for each person), comparing lossless codecs is much easier. As the last well-documented and comprehensive test available on the internet dates from a few years ago, I thought it would be a good idea to update it. Besides comparing with CD-audio (which is often done to assess codec performance) and spitting out a grand total, this comparison also looks at extremes that occurred during the test and takes a look at 'high-resolution audio' and multichannel/surround audio. While the comparison was made to update the comparison page on the FLAC website, it aims to be fair and unbiased.

An Introduction to MPEG-4 Audio Lossless Coding

AN INTRODUCTION TO MPEG-4 AUDIO LOSSLESS CODING
Tilman Liebchen, Technical University of Berlin

ABSTRACT

Lossless coding will become the latest extension of the MPEG-4 audio standard. In response to a call for proposals, many companies have submitted lossless audio codecs for evaluation. The codec of the Technical University of Berlin was chosen as reference model for MPEG-4 Audio Lossless Coding (ALS), attaining working draft status in July 2003. The encoder is based on linear prediction, which enables high compression even with moderate complexity, while the corresponding decoder is straightforward. The paper describes the basic elements of the codec, points out envisaged applications, and gives an outline of the standardization process.

1. INTRODUCTION

Lossless audio coding enables the compression of digital audio data without any loss in quality due to a perfect reconstruction of the original signal.

[...] encoding process has to be perfectly reversible without loss of information, several parts of both encoder and decoder have to be implemented in a deterministic way.

The MPEG-4 ALS codec uses forward-adaptive Linear Predictive Coding (LPC) to reduce bit rates compared to PCM, leaving the optimization entirely to the encoder. Thus, various encoder implementations are possible, offering a certain range in terms of efficiency and complexity. This section gives an overview of the basic encoder and decoder functionality.

2.1. Encoder Overview

The MPEG-4 ALS encoder (Figure 1) typically consists of these main building blocks:

• Buffer: Stores one audio frame. A frame is divided into blocks of samples, typically one for each channel.
• Coefficients Estimation and Quantization: Estimates (and quantizes) the optimum predictor coefficients for each
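To illustrate why prediction helps and why the process is perfectly reversible, here is a minimal sketch of lossless predictive coding on integer samples. It assumes a fixed second-order predictor (linear extrapolation from the last two samples) rather than the forward-adaptive, quantized LPC coefficients that the excerpt attributes to MPEG-4 ALS; the function names are hypothetical.

```python
def predict(history):
    """Fixed predictor (an assumption): extrapolate from the last two samples."""
    if len(history) >= 2:
        return 2 * history[-1] - history[-2]
    if len(history) == 1:
        return history[-1]
    return 0


def encode(samples):
    """Replace each sample by its prediction error (residual)."""
    return [x - predict(samples[:n]) for n, x in enumerate(samples)]


def decode(residuals):
    """Run the same predictor on decoded samples and add the residual back."""
    samples = []
    for e in residuals:
        samples.append(e + predict(samples))
    return samples


if __name__ == "__main__":
    pcm = [0, 3, 6, 9, 12, 14, 16, 17, 18, 18]
    residuals = encode(pcm)
    print(residuals)
    print(decode(residuals) == pcm)
```

Because encoder and decoder apply the same deterministic integer predictor, decoding reproduces the input exactly. For a smooth signal the residuals are small, which is what a subsequent entropy coder would exploit.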

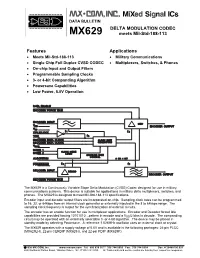

Delta Modulation Codec Meets Mil-Std-188-113

DATA BULLETIN: MX629 Delta Modulation Codec, meets Mil-Std-188-113

Features

• Meets Mil-Std-188-113
• Single Chip Full Duplex CVSD CODEC
• On-chip Input and Output Filters
• Programmable Sampling Clocks
• 3- or 4-bit Companding Algorithm
• Powersave Capabilities
• Low Power, 5.0V Operation

Applications

• Military Communications
• Multiplexers, Switches, & Phones

The MX629 is a Continuously Variable Slope Delta Modulation (CVSD) Codec designed for use in military communications systems. This device is suitable for applications in military delta multiplexers, switches, and phones. The MX629 is designed to meet Mil-Std-188-113 specifications. Encoder input and decoder output filters are incorporated on-chip. Sampling clock rates can be programmed to 16, 32, or 64kbps from an internal clock generator or externally injected in the 8 to 64kbps range. The sampling clock frequency is output for the synchronization of external circuits. The encoder has an enable function for use in multiplexer applications. Encoder and decoder forced idle capabilities are provided, forcing a 10101010… pattern in encode and a VDD/2 bias in decode. The companding circuit may be operated with an externally selectable 3- or 4-bit algorithm. The device may be placed in standby mode by selecting Powersave. A reference 1.024MHz oscillator uses an external clock or crystal. The MX629 operates with a supply voltage of 5.0V and is available in the following packages: 24-pin PLCC (MX629LH), 22-pin CERDIP (MX629J), and 22-pin PDIP (MX629P).

1998 MX-COM, Inc. www.mxcom.com Tel: 800 638 5577, 336 744 5050 Fax: 336 744 5054 Doc. # 20480190.001 4800 Bethania Station Road, Winston-Salem, NC 27105-1201 USA. All trademarks and service marks are held by their respective companies.
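The general idea behind CVSD with a 3-bit companding rule can be sketched in a few lines: one bit per sample says whether the input is above or below a running estimate, and the step size grows when the last three bits agree and shrinks otherwise. This is a simplified illustration only; the step limits, the doubling/halving rule, and all names are assumptions, and it does not model the MX629's filters, leaky integrator, or actual step-size law.

```python
def _update(estimate, step, history, bit, run=3, min_step=1.0, max_step=64.0):
    """Shared state update used by both encoder and decoder (illustrative rule)."""
    history = (history + [bit])[-run:]
    if len(history) == run and len(set(history)) == 1:
        step = min(step * 2.0, max_step)   # slope overload: take bigger steps
    else:
        step = max(step * 0.5, min_step)   # otherwise relax toward the minimum
    estimate += step if bit else -step
    return estimate, step, history


def cvsd_encode(samples):
    """Return the bit stream and the encoder's own reconstruction."""
    estimate, step, history = 0.0, 1.0, []
    bits, track = [], []
    for x in samples:
        bit = 1 if x >= estimate else 0
        estimate, step, history = _update(estimate, step, history, bit)
        bits.append(bit)
        track.append(estimate)
    return bits, track


def cvsd_decode(bits):
    """Rebuild the estimate from bits alone by mirroring the encoder state."""
    estimate, step, history = 0.0, 1.0, []
    out = []
    for bit in bits:
        estimate, step, history = _update(estimate, step, history, bit)
        out.append(estimate)
    return out


if __name__ == "__main__":
    signal = [0, 10, 20, 30, 40, 30, 20, 10, 0]
    bits, track = cvsd_encode(signal)
    print(bits)
    print(cvsd_decode(bits) == track)
```

The decoder needs no side information because the step size is derived from the bit history itself, which both ends can observe.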

Video Codec Requirements and Evaluation Methodology

Video Codec Requirements and Evaluation Methodology
draft-filippov-netvc-requirements-01
Alexey Filippov, Huawei Technologies (www.huawei.com)

Contents

• An overview of applications
• Requirements
• Evaluation methodology
• Conclusions

Applications

• Internet Protocol Television (IPTV)
• Video conferencing
• Video sharing
• Screencasting
• Game streaming
• Video monitoring / surveillance

Internet Protocol Television (IPTV)

• Basic requirements:
  – Random access to pictures: the Random Access Period (RAP) should be kept small enough (approximately 1-15 seconds)
  – Temporal (frame-rate) scalability
  – Error robustness
• Optional requirements:
  – Resolution and quality (SNR) scalability

| Resolution | Frame rate, fps | Picture access mode |
|---|---|---|
| 2160p (4K), 3840x2160 | 60 | RA |
| 1080p, 1920x1080 | 24, 50, 60 | RA |
| 1080i, 1920x1080 | 30 (60 fields per second) | RA |
| 720p, 1280x720 | 50, 60 | RA |
| 576p (EDTV), 720x576 | 25, 50 | RA |
| 576i (SDTV), 720x576 | 25, 30 | RA |
| 480p (EDTV), 720x480 | 50, 60 | RA |
| 480i (SDTV), 720x480 | 25, 30 | RA |

Video conferencing

• Basic requirements:
  – Delay should be kept as low as possible: the preferable and maximum delay values should be less than 100 ms and 350 ms, respectively

Digital Speech Processing— Lecture 17

Speech Coding Methods Based on Speech Models

Waveform Coding versus Block Processing

• Waveform coding
  – sample-by-sample matching of waveforms
  – coding quality measured using SNR
• Source modeling (block processing)
  – block processing of signal => vector of outputs every block
  – overlapped blocks

Model-Based Speech Coding

• we’ve carried waveform coding based on optimizing and maximizing SNR about as far as possible
  – achieved bit rate reductions on the order of 4:1 (i.e., from 128 Kbps PCM to 32 Kbps ADPCM) while at the same time achieving toll quality SNR for telephone-bandwidth speech
• to lower bit rate further without reducing speech quality, we need to exploit features of the speech production model, including:
  – source modeling
  – spectrum modeling
  – use of codebook methods for coding efficiency
• we also need a new way of comparing performance of different waveform and model-based coding methods
  – an objective measure, like SNR, isn’t an appropriate measure for model-based coders since they operate on blocks of speech and don’t follow the waveform on a sample-by-sample basis
  – new subjective measures need to be used that measure user-perceived quality, intelligibility, and robustness to multiple factors

Topics Covered in this Lecture

• Enhancements for ADPCM Coders
  – pitch prediction
  – noise shaping
• Analysis-by-Synthesis Speech Coders
  – multipulse linear prediction coder (MPLPC)
  – code-excited linear prediction (CELP)
• Open-Loop Speech Coders
  – two-state excitation

Cluster-Based Delta Compression of a Collection of Files

Cluster-Based Delta Compression of a Collection of Files. Zan Ouyang, Nasir Memon, Torsten Suel, Dimitre Trendafilov. Department of Computer and Information Science, Technical Report TR-CIS-2002-05, 12/27/2002. CIS Department, Polytechnic University, Brooklyn, NY 11201.

Abstract

Delta compression techniques are commonly used to succinctly represent an updated version of a file with respect to an earlier one. In this paper, we study the use of delta compression in a somewhat different scenario, where we wish to compress a large collection of (more or less) related files by performing a sequence of pairwise delta compressions. The problem of finding an optimal delta encoding for a collection of files by taking pairwise deltas can be reduced to the problem of computing a branching of maximum weight in a weighted directed graph, but this solution is inefficient and thus does not scale to larger file collections. This motivates us to propose a framework for cluster-based delta compression that uses text clustering techniques to prune the graph of possible pairwise delta encodings. To demonstrate the efficacy of our approach, we present experimental results on collections of web pages. Our experiments show that cluster-based delta compression of collections provides significant improvements in compression ratio as compared to individually compressing each file or using tar+gzip, at a moderate cost in efficiency.

A shorter version of this paper appears in the Proceedings of the 3rd International Conference on Web Information Systems Engineering (WISE), December 2002.
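A single pairwise delta step, the primitive that the abstract builds on, can be approximated with Python's standard `zlib` module by passing the reference file as a preset dictionary. This is a stand-in chosen for illustration and is not one of the delta compressors used in the paper; the function names and sample data are hypothetical.

```python
import zlib


def delta_compress(reference: bytes, target: bytes) -> bytes:
    """Compress target so that substrings shared with reference cost little."""
    compressor = zlib.compressobj(level=9, zdict=reference)
    return compressor.compress(target) + compressor.flush()


def delta_decompress(reference: bytes, delta: bytes) -> bytes:
    """Recover target; the same reference must be supplied again."""
    decompressor = zlib.decompressobj(zdict=reference)
    return decompressor.decompress(delta) + decompressor.flush()


if __name__ == "__main__":
    # Hypothetical "old" and "new" versions of a page.
    old_page = b" ".join(b"page-%d" % ((i * 7919) % 1000) for i in range(80))
    new_page = old_page + b" updated"

    delta = delta_compress(old_page, new_page)
    standalone = zlib.compress(new_page, 9)
    print(len(new_page), len(standalone), len(delta))
    print(delta_decompress(old_page, delta) == new_page)
```

DEFLATE only looks back through a 32 KB window, so this approximation is only useful for small files. In the setting the abstract describes, the size of each pairwise delta would serve as an edge weight when deciding which file to encode against which.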

Implementing Object-Based Audio in Radio Broadcasting

Object-based Audio in Radio Broadcast: Implementing Object-based audio in radio broadcasting

Diploma thesis submitted for the award of the academic degree Dipl.-Ing. für technisch-wissenschaftliche Berufe in the master's programme Digitale Medientechnologien at Fachhochschule St. Pölten, master class Audio Design, by Baran Vlad (DM161567). Supervisor and first assessor: FH-Prof. Dipl.-Ing Franz Zotlöterer. Second assessor: FH Lektor Dipl.-Ing Stefan Lainer. [Vienna, 09.09.2019]

Declaration of honour

I affirm that:
- I wrote this thesis independently, used no sources or aids other than those indicated, and did not otherwise make use of any unauthorized assistance.
- I have not previously submitted this topic, domestically or abroad, to an assessor for evaluation or as an examination paper in any form. This thesis is identical to the one evaluated by the assessor.

Place, date; signature

Abstract

The science of object-based audio production deals with a new way of conveying spatial information that moves away from channel-based systems towards an approach that processes audio independently of the device on which it is rendered. These object-based systems treat audio elements as objects that are linked to metadata describing their behaviour. So far, this research has predominantly

Audio Coding for Digital Broadcasting

Recommendation ITU-R BS.1196-7 (01/2019): Audio coding for digital broadcasting. BS Series: Broadcasting service (sound)

Foreword

The role of the Radiocommunication Sector is to ensure the rational, equitable, efficient and economical use of the radio-frequency spectrum by all radiocommunication services, including satellite services, and carry out studies without limit of frequency range on the basis of which Recommendations are adopted. The regulatory and policy functions of the Radiocommunication Sector are performed by World and Regional Radiocommunication Conferences and Radiocommunication Assemblies supported by Study Groups.

Policy on Intellectual Property Right (IPR)

ITU-R policy on IPR is described in the Common Patent Policy for ITU-T/ITU-R/ISO/IEC referenced in Resolution ITU-R 1. Forms to be used for the submission of patent statements and licensing declarations by patent holders are available from http://www.itu.int/ITU-R/go/patents/en where the Guidelines for Implementation of the Common Patent Policy for ITU-T/ITU-R/ISO/IEC and the ITU-R patent information database can also be found.

Series of ITU-R Recommendations (also available online at http://www.itu.int/publ/R-REC/en)

| Series | Title |
|---|---|
| BO | Satellite delivery |
| BR | Recording for production, archival and play-out; film for television |
| BS | Broadcasting service (sound) |
| BT | Broadcasting service (television) |
| F | Fixed service |
| M | Mobile, radiodetermination, amateur and related satellite services |
| P | Radiowave propagation |
| RA | Radio astronomy |
| RS | Remote sensing systems |
| S | Fixed-satellite service |
| SA | Space applications and meteorology |
| SF | Frequency sharing and coordination between fixed-satellite and fixed service systems |
| SM | Spectrum management |
| SNG | Satellite news gathering |
| TF | Time signals and frequency standards emissions |
| V | Vocabulary and related subjects |

Note: This ITU-R Recommendation was approved in English under the procedure detailed in Resolution ITU-R 1.

The Basic Principles of Data Compression

Author: Conrad Chung, 2BrightSparks

Introduction

Internet users who download or upload files from/to the web, or use email to send or receive attachments, will most likely have encountered files in compressed format. In this topic we will cover how compression works, the advantages and disadvantages of compression, as well as types of compression.

What is Compression?

Compression is the process of encoding data more efficiently to achieve a reduction in file size. One type of compression available is referred to as lossless compression. This means the compressed file will be restored exactly to its original state with no loss of data during the decompression process. This is essential to data compression, as the file would be corrupted and unusable should data be lost. Another compression category, which will not be covered in this article, is “lossy” compression, often used in multimedia files for music and images, where data is discarded.

Lossless compression algorithms use statistical modeling techniques to reduce repetitive information in a file. Some of the methods may include removal of spacing characters, representing a string of repeated characters with a single character, or replacing recurring characters with smaller bit sequences.

Advantages/Disadvantages of Compression

Compression of files offers many advantages. When compressed, the quantity of bits used to store the information is reduced. Files that are smaller in size will result in shorter transmission times when they are transferred on the Internet. Compressed files also take up less storage space. File compression can zip up several small files into a single file for more convenient email transmission.
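One of the methods this excerpt names, representing a string of repeated characters with a single character, is usually called run-length encoding. A minimal sketch follows, assuming a simple list of `(character, count)` pairs as the encoded form; the function names and the pair format are illustrative and not taken from the article.

```python
from itertools import groupby


def rle_encode(text: str):
    """Collapse each run of identical characters into (character, run_length)."""
    return [(ch, len(list(run))) for ch, run in groupby(text)]


def rle_decode(pairs) -> str:
    """Expand each (character, run_length) pair back into the original run."""
    return "".join(ch * count for ch, count in pairs)


if __name__ == "__main__":
    original = "aaabccdddd"
    encoded = rle_encode(original)
    print(encoded)                          # [('a', 3), ('b', 1), ('c', 2), ('d', 4)]
    print(rle_decode(encoded) == original)  # True
```

Decoding restores the input exactly, which is the lossless property the excerpt describes. Text with few repeated runs gains nothing from this scheme, which is why it is normally combined with other methods.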