FORMAL ONTOLOGY in INFORMATION SYSTEMS Frontiers in Artificial Intelligence and Applications

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Information Technology Management 14

Information Technology Management 14 Valerie Bryan Practitioner Consultants Florida Atlantic University Layne Young Business Relationship Manager Indianapolis, IN Donna Goldstein GIS Coordinator Palm Beach County School District Information Technology is a fundamental force in • IT as a management tool; reshaping organizations by applying investment in • understanding IT infrastructure; and computing and communications to promote competi- • ȱǯ tive advantage, customer service, and other strategic ęǯȱǻȱǯȱǰȱŗşşŚǼ ȱȱ ȱ¢ȱȱȱ¢ǰȱȱ¢Ȃȱ not part of the steamroller, you’re part of the road. ǻ ȱǼ ȱ ¢ȱ ȱ ȱ ęȱ ȱ ȱ ¢ǯȱȱȱȱȱ ȱȱ ȱ- ¢ȱȱȱȱȱǯȱ ǰȱ What is IT? because technology changes so rapidly, park and recre- ation managers must stay updated on both technological A goal of management is to provide the right tools for ȱȱȱȱǯ ěȱ ȱ ě¢ȱ ȱ ȱ ȱ ȱ ȱ ȱȱȱȱ ȱȱȱǰȱȱǰȱ ȱȱȱȱȱȱǯȱȱȱȱ ǰȱȱȱȱ ȱȱ¡ȱȱȱȱ recreation organization may be comprised of many of terms crucial for understanding the impact of tech- ȱȱȱȱǯȱȱ ¢ȱ ȱ ȱ ȱ ȱ ǯȱ ȱ ȱ ȱ ȱ ȱ ǯȱ ȱ - ȱȱęȱȱȱ¢ȱȱȱȱ ¢ȱǻ Ǽȱȱȱȱ¢ȱ ȱȱȱȱ ǰȱ ȱ ȱ ȬȬ ȱ ȱ ȱ ȱȱǯȱȱȱȱȱ ¢ȱȱǯȱȱ ȱȱȱȱ ȱȱ¡ȱȱȱȱȱǻǰȱŗşŞśǼǯȱ ȱȱȱȱȱȱĞȱȱȱȱ ǻȱ¡ȱŗŚǯŗȱ Ǽǯ ě¢ȱȱȱȱǯ Information technology is an umbrella term Details concerning the technical terms used in this that covers a vast array of computer disciplines that ȱȱȱȱȱȱȬȬęȱ ȱȱ permit organizations to manage their information ǰȱ ȱ ȱ ȱ Ȭȱ ¢ȱ ǯȱ¢ǰȱȱ¢ȱȱȱ ȱȱ ǯȱȱȱȱȱȬȱ¢ȱ a fundamental force in reshaping organizations by applying ȱȱ ȱȱ ȱ¢ȱȱȱȱ investment in computing and communications to promote ȱȱ¢ǯȱ ȱȱȱȱ ȱȱ competitive advantage, customer service, and other strategic ȱ ǻȱ ȱ ŗŚȬŗȱ ęȱ ȱ DZȱ ęȱǻǰȱŗşşŚǰȱǯȱřǼǯ ȱ Ǽǯ ȱ¢ȱǻ Ǽȱȱȱȱȱ ȱȱȱęȱȱȱ¢ȱȱ ȱ¢ǯȱȱȱȱȱ ȱȱ ȱȱ ȱȱȱȱ ȱ¢ȱ ȱȱǯȱ ȱ¢ǰȱ ȱȱ ȱDZ ȱȱȱȱǯȱ ȱȱȱȱǯȱ It lets people learn things they didn’t think they could • ȱȱȱ¢ǵ ȱǰȱȱǰȱȱȱǰȱȱȱȱȱǯȱ • the manager’s responsibilities; ǻȱǰȱȱ¡ȱĜȱȱĞǰȱȱ • information resources; ǷȱǯȱȱȱřŗǰȱŘŖŖŞǰȱ • disaster recovery and business continuity; ȱĴDZȦȦ ǯ ǯǼ Information Technology Management 305 Exhibit 14. -

Graph-Based Reasoning in Collaborative Knowledge Management for Industrial Maintenance Bernard Kamsu-Foguem, Daniel Noyes

Graph-based reasoning in collaborative knowledge management for industrial maintenance Bernard Kamsu-Foguem, Daniel Noyes To cite this version: Bernard Kamsu-Foguem, Daniel Noyes. Graph-based reasoning in collaborative knowledge manage- ment for industrial maintenance. Computers in Industry, Elsevier, 2013, Vol. 64, pp. 998-1013. 10.1016/j.compind.2013.06.013. hal-00881052 HAL Id: hal-00881052 https://hal.archives-ouvertes.fr/hal-00881052 Submitted on 7 Nov 2013 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Open Archive Toulouse Archive Ouverte (OATAO) OATAO is an open access repository that collects the work of Toulouse researchers and makes it freely available over the web where possible. This is an author-deposited version published in: http://oatao.univ-toulouse.fr/ Eprints ID: 9587 To link to this article: doi.org/10.1016/j.compind.2013.06.013 http://www.sciencedirect.com/science/article/pii/S0166361513001279 To cite this version: Kamsu Foguem, Bernard and Noyes, Daniel Graph-based reasoning in collaborative knowledge management for industrial -

Bioinformatics 1

Bioinformatics 1 Bioinformatics School School of Science, Engineering and Technology (http://www.stmarytx.edu/set/) School Dean Ian P. Martines, Ph.D. ([email protected]) Department Biological Science (https://www.stmarytx.edu/academics/set/undergraduate/biological-sciences/) Bioinformatics is an interdisciplinary and growing field in science for solving biological, biomedical and biochemical problems with the help of computer science, mathematics and information technology. Bioinformaticians are in high demand not only in research, but also in academia because few people have the education and skills to fill available positions. The Bioinformatics program at St. Mary’s University prepares students for graduate school, medical school or entry into the field. Bioinformatics is highly applicable to all branches of life sciences and also to fields like personalized medicine and pharmacogenomics — the study of how genes affect a person’s response to drugs. The Bachelor of Science in Bioinformatics offers three tracks that students can choose. • Bachelor of Science in Bioinformatics with a minor in Biology: 120 credit hours • Bachelor of Science in Bioinformatics with a minor in Computer Science: 120 credit hours • Bachelor of Science in Bioinformatics with a minor in Applied Mathematics: 120 credit hours Students will take 23 credit hours of core Bioinformatics classes, which included three credit hours of internship or research and three credit hours of a Bioinformatics Capstone course. BS Bioinformatics Tracks • Bachelor of Science -

A Survey of Top-Level Ontologies to Inform the Ontological Choices for a Foundation Data Model

A survey of Top-Level Ontologies To inform the ontological choices for a Foundation Data Model Version 1 Contents 1 Introduction and Purpose 3 F.13 FrameNet 92 2 Approach and contents 4 F.14 GFO – General Formal Ontology 94 2.1 Collect candidate top-level ontologies 4 F.15 gist 95 2.2 Develop assessment framework 4 F.16 HQDM – High Quality Data Models 97 2.3 Assessment of candidate top-level ontologies F.17 IDEAS – International Defence Enterprise against the framework 5 Architecture Specification 99 2.4 Terminological note 5 F.18 IEC 62541 100 3 Assessment framework – development basis 6 F.19 IEC 63088 100 3.1 General ontological requirements 6 F.20 ISO 12006-3 101 3.2 Overarching ontological architecture F.21 ISO 15926-2 102 framework 8 F.22 KKO: KBpedia Knowledge Ontology 103 4 Ontological commitment overview 11 F.23 KR Ontology – Knowledge Representation 4.1 General choices 11 Ontology 105 4.2 Formal structure – horizontal and vertical 14 F.24 MarineTLO: A Top-Level 4.3 Universal commitments 33 Ontology for the Marine Domain 106 5 Assessment Framework Results 37 F. 25 MIMOSA CCOM – (Common Conceptual 5.1 General choices 37 Object Model) 108 5.2 Formal structure: vertical aspects 38 F.26 OWL – Web Ontology Language 110 5.3 Formal structure: horizontal aspects 42 F.27 ProtOn – PROTo ONtology 111 5.4 Universal commitments 44 F.28 Schema.org 112 6 Summary 46 F.29 SENSUS 113 Appendix A F.30 SKOS 113 Pathway requirements for a Foundation Data F.31 SUMO 115 Model 48 F.32 TMRM/TMDM – Topic Map Reference/Data Appendix B Models 116 ISO IEC 21838-1:2019 -

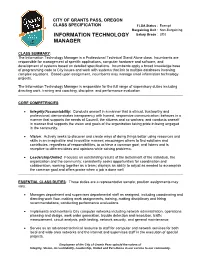

Information Technology Manager Is a Professional Technical Stand Alone Class

CITY OF GRANTS PASS, OREGON CLASS SPECIFICATION FLSA Status : Exempt Bargaining Unit : Non-Bargaining INFORMATION TECHNOLOGY Salary Grade : UD2 MANAGER CLASS SUMMARY: The Information Technology Manager is a Professional Technical Stand Alone class. Incumbents are responsible for management of specific applications, computer hardware and software, and development of systems based on detailed specifications. Incumbents apply a broad knowledge base of programming code to City issues and work with systems that link to multiple databases involving complex equations. Based upon assignment, incumbents may manage small information technology projects. The Information Technology Manager is responsible for the full range of supervisory duties including directing work, training and coaching, discipline, and performance evaluation. CORE COMPETENCIES: Integrity/Accountability: Conducts oneself in a manner that is ethical, trustworthy and professional; demonstrates transparency with honest, responsive communication; behaves in a manner that supports the needs of Council, the citizens and co-workers; and conducts oneself in manner that supports the vision and goals of the organization taking pride in being engaged in the community. Vision: Actively seeks to discover and create ways of doing things better using resources and skills in an imaginative and innovative manner; encourages others to find solutions and contributes, regardless of responsibilities, to achieve a common goal; and listens and is receptive to different ideas and opinions while solving problems. Leadership/United: Focuses on outstanding results of the betterment of the individual, the organization and the community; consistently seeks opportunities for coordination and collaboration, working together as a team; displays an ability to adjust as needed to accomplish the common goal and offers praise when a job is done well. -

Genome Informatics 4–8 September 2002, Wellcome Trust Genome Campus, Hinxton, Cambridge, UK

Comparative and Functional Genomics Comp Funct Genom 2003; 4: 509–514. Published online in Wiley InterScience (www.interscience.wiley.com). DOI: 10.1002/cfg.300 Feature Meeting Highlights: Genome Informatics 4–8 September 2002, Wellcome Trust Genome Campus, Hinxton, Cambridge, UK Jo Wixon1* and Jennifer Ashurst2 1MRC UK HGMP-RC, Hinxton, Cambridge CB10 1SB, UK 2The Wellcome Trust Sanger Institute, Wellcome Trust Genome Campus, Hinxton, Cambridge CB10 1SA, UK *Correspondence to: Abstract Jo Wixon, MRC UK HGMP-RC, Hinxton, Cambridge CB10 We bring you the highlights of the second Joint Cold Spring Harbor Laboratory 1SB, UK. and Wellcome Trust ‘Genome Informatics’ Conference, organized by Ewan Birney, E-mail: [email protected] Suzanna Lewis and Lincoln Stein. There were sessions on in silico data discovery, comparative genomics, annotation pipelines, functional genomics and integrative biology. The conference included a keynote address by Sydney Brenner, who was awarded the 2002 Nobel Prize in Physiology or Medicine (jointly with John Sulston and H. Robert Horvitz) a month later. Copyright 2003 John Wiley & Sons, Ltd. In silico data discovery background set was genes which had a log ratio of ∼0 in liver. Their approach found 17 of 17 known In the first of two sessions on this topic, Naoya promoters with a specificity of 17/28. None of the Hata (Cold Spring Harbor Laboratory, USA) sites they identified was located downstream of a spoke about motif searching for tissue specific TSS and all showed an excess in the foreground promoters. The first step in the process is to sample compared to the background sample. -

Framework for Formal Ontology Barry Smith and Kevin Mulligan

Framework for Formal Ontology Barry Smith and Kevin Mulligan NOTICE: THIS MATERIAL M/\Y BE PROTECTED BY COPYRIGHT LAW (TITLE 17, u.s. CODE) ABSTRACT. The discussions which follow rest on a distinction, or object-relations in the world; nor does it concern itself rust expounded by Husser!, between formal logic and formal specifically with sentences about such objects. It deals, ontology. The former concerns itself with (formal) meaning-struc rather, with sentences in general (including, for example, tures; the latter with formal structures amongst objects and their the sentences of mathematics),2 and where it is applied to parts. The paper attempts to show how, when formal ontological considerations are brought into play, contemporary extensionalist sentences about objects it can take no account of any theories of part and whole, and above all the mereology of Lesniew formal or material object·structures which may be ex ski, can be generalised to embrace not only relations between con hibited amongst the objects pictured. Its attentions are crete objects and object-pieces, but also relations between what we directed, rather, to the relations which obtain between shall call dependent parts or moments. A two-dimensional formal sentences purely in virtue of what we can call their logical language is canvassed for the resultant ontological theory, a language which owes more to the tradition of Euler, Boole and Venn than to complexity (for example the deducibility-relations which the quantifier-centred languages which have predominated amongst obtain between any sentence of the form A & Band analytic philosophers since the time of Frege and Russell. -

Next Generation Logic Programming Systems Gopal Gupta

Next Generation Logic Programming Systems Gopal Gupta In the last 30 years logic programming (LP), with Prolog as the most representative logic programming language, has emerged as a powerful paradigm for intelligent reasoning and deductive problem solving. In the last 15 years several powerful and highly successful extensions of logic programming have been proposed and researched. These include constraint logic programming (CLP), tabled logic programming, inductive logic programming, concurrent/parallel logic programming, Andorra Prolog and adaptations for non- monotonic reasoning such as answer set programming. These extensions have led to very powerful applications in reasoning, for example, CLP has been used in industry for intelligently and efficiently solving large scale scheduling and resource allocation problems, tabled logic programming has been used for intelligently solving large verification problems as well as non- monotonic reasoning problems, inductive logic programming for new drug discoveries, and answer set programming for practical planning problems, etc. However, all these powerful extensions of logic programming have been developed by extending standard logic programming (Prolog) systems, and each one has been developed in isolation from others. While each extension has resulted in very powerful applications in reasoning, considerably more powerful applications will become possible if all these extensions were combined into a single system. This power will come about due to search space being dramatically pruned, reducing the time taken to find a solution given a reasoning problem coded as a logic program. Given the current state-of-the-art, even two extensions are not available together in a single system. Realizing multiple or all extensions in a single framework has been rendered difficult by the enormous complexity of implementing reasoning systems in general and logic programming systems in particular. -

Logic-Based Technologies for Intelligent Systems: State of the Art and Perspectives

information Article Logic-Based Technologies for Intelligent Systems: State of the Art and Perspectives Roberta Calegari 1,* , Giovanni Ciatto 2 , Enrico Denti 3 and Andrea Omicini 2 1 Alma AI—Alma Mater Research Institute for Human-Centered Artificial Intelligence, Alma Mater Studiorum–Università di Bologna, 40121 Bologna, Italy 2 Dipartimento di Informatica–Scienza e Ingegneria (DISI), Alma Mater Studiorum–Università di Bologna, 47522 Cesena, Italy; [email protected] (G.C.); [email protected] (A.O.) 3 Dipartimento di Informatica–Scienza e Ingegneria (DISI), Alma Mater Studiorum–Università di Bologna, 40136 Bologna, Italy; [email protected] * Correspondence: [email protected] Received: 25 February 2020; Accepted: 18 March 2020; Published: 22 March 2020 Abstract: Together with the disruptive development of modern sub-symbolic approaches to artificial intelligence (AI), symbolic approaches to classical AI are re-gaining momentum, as more and more researchers exploit their potential to make AI more comprehensible, explainable, and therefore trustworthy. Since logic-based approaches lay at the core of symbolic AI, summarizing their state of the art is of paramount importance now more than ever, in order to identify trends, benefits, key features, gaps, and limitations of the techniques proposed so far, as well as to identify promising research perspectives. Along this line, this paper provides an overview of logic-based approaches and technologies by sketching their evolution and pointing out their main application areas. Future perspectives for exploitation of logic-based technologies are discussed as well, in order to identify those research fields that deserve more attention, considering the areas that already exploit logic-based approaches as well as those that are more likely to adopt logic-based approaches in the future. -

STUMPF, HUSSERL and INGARDEN of the Application of the Formal Meaningful Categories

Formal Ontology FORMAL ONTOLOGY AS AN OPERATIVE TOOL of theory, categories that must be referred to as the objectual domain, which is determined by the ontological categories. In this way, we IN THE THORIES OF THE OBJECTS OF THE must take into account that, for Husserl, ontological categories are LIFE‐WORLD: formal insofar as they are completely freed from any material domain STUMPF, HUSSERL AND INGARDEN of the application of the formal meaningful categories. Therefore, formal ontology, as developed in the third Logical Investigation, is the corresponding “objective correlate of the concept of a possible theory, 1 Horacio Banega (University of Buenos Aires and National Universi‐ deinite only in respect of form.” 2 ty of Quilmes) Volume XXI of Husserliana provides insight into the theoretical source of Husserlian formal ontology.3 In particular, it strives to deine the theory of manifolds or the debate over the effective nature of what will later be called “set theory.” Thus, what in § of Prolegomena is It is accepted that certain mereological concepts and phenomenolog‐ ical conceptualisations presented in Carl Stumpf’s U ber den psy‐ called a “Theory of Manifolds” (Mannigfaltigkeitslehre) is what Husserl chologischen Ursprung der Raumvorstellung and Tonpsychologie played an important role in the development of the Husserlian formal 1 Edmund Husserl, Logische Untersuchunghen. Zweiter Band, Untersuchungen zur ontology. In the third Logical Investigation, which displays the for‐ Phänomenologie und Theorie der Erkenntnis. Husserliana XIX/ and XIX/, (ed.) U. mal relations between part and whole and among parts that make Panzer (The Hague: Martinus Nijhoff, ), hereafter referred to as Hua XIX/ out a whole, one of the main concepts of contemporary formal ontol‐ and Hua XIX/; tr. -

An Academic Discipline

Information Technology – An Academic Discipline This document represents a summary of the following two publications defining Information Technology (IT) as an academic discipline. IT 2008: Curriculum Guidelines for Undergraduate Degree Programs in Information Technology. (Nov. 2008). Association for Computing Machinery (ACM) and IEEE Computer Society. Computing Curricula 2005 Overview Report. (Sep. 2005). Association for Computing Machinery (ACM), Association for Information Systems (AIS), Computer Society (IEEE- CS). The full text of these reports with details on the model IT curriculum and further explanation of the computing disciplines and their commonalities/differences can be found online: http://www.acm.org/education/education/curricula-recommendations) From IT 2008: Curriculum Guidelines for Undergraduate Degree Programs in Information Technology IT programs aim to provide IT graduates with the skills and knowledge to take on appropriate professional positions in Information Technology upon graduation and grow into leadership positions or pursue research or graduate studies in the field. Specifically, within five years of graduation a student should be able to: 1. Explain and apply appropriate information technologies and employ appropriate methodologies to help an individual or organization achieve its goals and objectives; 2. Function as a user advocate; 3. Manage the information technology resources of an individual or organization; 4. Anticipate the changing direction of information technology and evaluate and communicate the likely utility of new technologies to an individual or organization; 5. Understand and, in some cases, contribute to the scientific, mathematical and theoretical foundations on which information technologies are built; 6. Live and work as a contributing, well-rounded member of society. In item #2 above, it should be recognized that in many situations, "a user" is not a homogeneous entity. -

Compiling a Default Reasoning System Into Prolog

Compiling A Default Reasoning System into Prolog David Poole Department of Computer Science University of British Columbia Vancouver BC Canada VT W p o olecsub cca July Abstract Articial intelligence researchers have b een designing representa tion systems for default and ab ductive reasoning Logic Programming researchers have b een working on techniques to improve the eciency of Horn Clause deduction systems This pap er describ es how one such default and ab ductive reasoning system namely Theorist can b e translated into Horn clauses with negation as failure so that we can use the clarity of ab ductive reasoning systems and the eciency of Horn clause deduction systems Wethus showhowadvances in expressivepower that articial intelligence workers are working on can directly utilise advances in eciency that logic programming re searchers are working on Actual co de from a running system is given Intro duction Many p eople in Articial Intelligence have b een working on default reasoning and ab ductive diagnosis systems The systems implemented so far eg are only prototyp es or have b een develop ed in A Theorist to Prolog Compiler ways that cannot take full advantage in the advances of logic programming implementation technology Many p eople are working on making logic programming systems more ecient These systems however usually assume that the input is in the form of Horn clauses with negation as failure This pap er shows howto implement the default reasoning system Theorist by compiling its input into Horn clauses