Finalist Reports 2016-2017

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Los Alamos' Russian

Reflections, Los Alamos National Laboratory, Vol. 3, No. 3 • April 1998. Los Alamos’ Russian ‘Ambassadors’ … pages 6 and 7. Cover photo illustration by Edwin Vigil. Inside this issue … Ergonomics, Page 3; BEAM robotics, Page 4; Lab, pueblos, Page 5; Russian ‘ambassadors’, Pages 6 and 7; People, Pages 8 and 9; Just for fun, Page 11; Physicist sets sail, Page 12. Correction: The cover story in the March issue of Reflections about TA-21 incorrectly said no one works at the facility. The Ecology Group (ESH-20) is located in a building at DP West, and the gates are open during the day. Let’s get physical: I promised myself a few weeks ago, for the “umpteenth” time, to get more physically fit. I just know I’ll feel better, have more stamina and be better able to deal with on-the-job stresses. That summer is fast approaching, and with it the shedding of concealing jackets and bulky sweaters, only adds urgency to my need to get more fit. But with a little will power, I hope not to have too much trouble reaching my goal. After all, I live in Northern New Mexico ... And I work at the Lab. What does living in Northern New Mexico and working at the Lab have to do with my being able to get more physically fit? They take away my excuses for not exercising regularly. The area’s usually sunny skies and serenely beautiful landscape literally beckon one to get out and walk around or go for a jog. I can step out of my office building in Technical Area 3 during the lunch hour, walk for a short distance in almost any direction and be bombarded -

Inventing Los Alamos: The Growth of an Atomic Community

INVENTING LOS ALAMOS The Growth of an Atomic Community JON HUNNER Also by Jon Hunner (coauthor) Las Cruces (Chicago, 2003) (coauthor) Santa Fe: A Historical Walking Guide (Chicago, 2004) This book is published with the generous assistance of the McCasland Foundation, Duncan, Oklahoma. Library of Congress Cataloging-in-Publication Data Hunner, Jon. Inventing Los Alamos : the growth of an atomic community / Jon Hunner. p. cm. Includes bibliographical references and index. ISBN 0-8061-3634-0 (alk. paper) 1. Los Alamos (N.M.)-History-20th century. 2. Los Alamos (N.M.)-Social life and customs-20th century. 3. Los Alamos (N.M.)-Social conditions-20th century. 4. Family-New Mexico-Los Alamos-History-20th century. 5. Community life-New Mexico-Los Alamos-History-20th century. 6. Nuclear weapons-Social aspects-New Mexico-Los Alamos-History-20th century. 7. Nuclear weapons-Social aspects-United States-History-20th century. 8. Cold War-Social aspects-New Mexico-Los Alamos. 9. Cold War-Social aspects-United States. I. Title. F804.L6H86 2004 978.9'58053-dc22 2004046086 The paper in this book meets the guidelines for permanence and durability of the Committee on Production Guidelines for Book Longevity of the Council on Library Resources, Inc. Copyright © 2004 by the University of Oklahoma Press, Norman, Publishing Division of the University. All rights reserved. Manufactured in the U.S.A. 
CONTENTS LIST OF ILLUSTRATIONS ACKNOWLEDGMENTS INTRODUCTION CHAPTER 1 Rendezvous at Site Y: The Instant City CHAPTER 2 Fishing in the Desert with Fat Man: Civic Tension, Atomic Explosion CHAPTER 3 Postwar Los Alamos: Exodus, New Growth, and Invisible Danger CHAPTER 4 Los Alamos Transformed: Federal Largesse and Red Challenge CHAPTER 5 A Cold War Community Up in Arms: Competition and Conformity CHAPTER 6 Toward Normalizing Los Alamos: Cracking the Gates CHAPTER 7 Atomic City on a Hill: Legacy and Continuing Research NOTES BIBLIOGRAPHY INDEX PHOTOGRAPHS J. -

2001 Presidential Scholars Yearbook (PDF)

2001 PRESIDENTIAL SCHOLARS PROGRAM NATIONAL RECOGNITION WEEK June 23 - June 28, 2001 Washington, DC National Recognition Week is Sponsored by The General Motors Corporation GMAC Financial Services The Merck Company Foundation The Presidential Scholars Program 1964- Through Thirty-Eight Years... The United States Presidential Scholars Program was established in 1964, by Executive Order of the President, to recognize and honor some of our Nation’s most distinguished graduating high school seniors. Each year, up to 141 students are named as Presidential Scholars, one of the Nation’s highest honors for high school students. In honoring the Presidential Scholars, the President of the United States symbolically honors all graduating high school seniors of high potential. From President Lyndon Baines Johnson to George W. Bush, the Presidential Scholars Program has honored more than 5,000 of our nation’s most distinguished graduating high school seniors. Initiated by President Johnson, the Presidential Scholars Program annually selects one male and one female student from each state, the District of Columbia, Puerto Rico, Americans living abroad, 15 at-large students, and up to 20 students in the arts on the basis of outstanding scholarship, service, leadership and creativity through a rigorous selection and review process administered by the U.S. Department of Education. President Johnson opened the first meeting of the White House Commission on Presidential Scholars by stating that the Program was not just a reward for excellence, but a means of nourishing excellence. The Program was intended to stimulate achievement in a way that could be “revolutionary.” During the first National Recognition Week in 1964, the Scholars participated in seminars with Secretary of State Dean Rusk, Astronaut Alan B. -

LOS ALAMOS PUBLIC SCHOOLS 5-Year Facilities Master Plan FINAL • 2019-2023 • # 5382 Table of Contents

LOS ALAMOS PUBLIC SCHOOLS 5-Year Facilities Master Plan FINAL • 2019-2023 • # 5382 Table of Contents SECTION 0: INTRODUCTION Master Plan Team Acronyms and Definitions Executive Summary Requirement Process and Adoption School District Information Facilities Demographics and Enrollment Utilization and Capacity District Financial Information Technology and Preventive Maintenance PSCOC Facilities Assessment Database School District Priorities School District Capital Plan SECTION 1: FACILITY GOALS/PROCESS 1.1 Goals District Mission and Vision Statement District Educational Goals/Program of Instruction District Relationship with the Community District Facilities Alignment to NMAS Long Range District Facility and FMP Goals 1.2 Public Process Decision Making Authority Facilities Master Plan Process FMP Prioritization Schedule 1.3 Issues and Findings SECTION 2: EXISTING & PROJECTED CONDITIONS 2.1 Programs 2.1.1 District Information including: • Total Enrollment • Number of Schools • Types of Schools and Grade Configuration • School Feeder Chart • Pupil to Teacher Ratio • School Grades • Educational Programs 2.1.2 Anticipated Changes in Educational Programs 2.1.3 Shared/Joint Use of Facilities Los Alamos Public Schools • 5-Year Facilities Master Plan TOC.i GS Architecture • 2019 Table of Contents 2.2 Sites/ Facilities 2.2.1 District Site Information • District Maps 2.2.2 District Facilities Inventory 2.3 District Growth District Regional Perspectives • Map of District Region Demographic Trends • County, District, Town Population -

School/Library City Albuquerque Academy Albuquerque

School/Library City Albuquerque Academy Albuquerque Albuquerque School of Excellence Albuquerque Alta Vista Early College High School Anthony Arrowhead Park Early College High School Las Cruces Aztec High School Aztec Bandelier Elementary School Albuquerque Belen Middle School Belen Blanco Elementary School Blanco Chaparral Elementary School Santa Fe Chaparral Middle School Alamogordo Cibola High School Albuquerque Cien Aguas International School Albuquerque Cleveland Middle School Library Albuquerque Corrales International School Albuquerque Cottonwood Classics School Albuquerque Cottonwood Valley Charter School Socorro Dennis Chavez Elementary School Albuquerque Des Moines Municipal Schools Des Moines Eagle Ridge Middle School Rio Rancho East Mountain High School Sandia Park Edgewood Middle School Edgewood Eisenhower Middle School Albuquerque Eldorado High School Albuquerque Estancia Valley Classical Academy Moriarty Eubank Elementary School Albuquerque Farmington High School Farmington Gallup High School Gallup Georgia O'Keeffe Elementary School Albuquerque Grant Middle School Albuquerque Guadalupe Montessori Silver City Herandez Elementary School Española Holloman Middle School Holloman AFB Holy Ghost Catholic School Albuquerque Home School Writing Las Cruces Jefferson Montessori Academy Carlsbad Kennedy Middle School Albuquerque La Cueva High School Albuquerque La Mariposa Montessori School Santa Fe Los Alamos High School Los Alamos Los Alamos Middle School Los Alamos Lovington High School Lovington Lyndon B. Johnson Middle School Albuquerque Magdalena High School Magdalena Magdalena Municipal Schools Magdalena Mesa View Middle School Farmington New Mexico Connections Academy Santa Fe Public Academy for Performing Arts Albuquerque Ramah High School Ramah Ramah Middle School Ramah Ruidoso High School Ruidoso S.Y. 
Jackson Elementary School Albuquerque Salazar Elementary School Santa Fe Santa Fe Waldorf School Santa Fe Sarracino Middle School Socorro School of Dreams Academy Los Lunas Shiprock High School Shiprock Southwest Learning Center Albuquerque St. -

Candidates: U.S. Presidential Scholars Program -- February 6, 2019 (PDF)

Candidates for the U.S. Presidential Scholars Program January 2019 [*] Candidate for U.S. Presidential Scholar in Arts. [**] Candidate for U.S. Presidential Scholar in Career and Technical Education [***] Candidate for U.S. Presidential Scholar and Presidential Scholar in the Arts [****] Candidate for U.S. Presidential Scholar and Presidential Scholar in Career & Technical Education Americans Abroad AA - Sophia M. Adams, FPO - Naples American High School AA - Gian C. Arellano, FPO - David G Farragut High School AA - Annette A. Belleman, APO - Brussels American High School AA - Hana N. Belt, FPO - Nile C. Kinnick High School AA - Carter A. Borland, Alconbury - Alconbury American High School AA - Kennedy M. Campbell, APO - Ramstein American High School AA - Sharan Chawla, St Thomas - Antilles School AA - Victoria C. Chen, DPO - American Cmty Sch Of Abu Dhabi AA - Aimee Cho, Seoul - Humphreys High School AA - Ji Hye Choi, Barrigada - Guam Adventist Academy AA - Yvan F. Chu, Tamuning - John F Kennedy High School AA - Jean R. Clemente, Tamuning - John F Kennedy High School AA - Jacob Corsaro, Seoul - Humphreys High School AA - Ashton Craycraft, APO - Kaiserslautern American High School AA - Mackenzie Cuellar, Kaiserslautern - Kaiserslautern American High School AA - Aimee S. Dastin-Van Rijn, DPO - Saint Johns International Sch AA - Garrett C. Day, APO - Kwajalein Junior-Senior High School AA - Haley Deome, Kaiserslautern - Ramstein American High School AA - Emma B. Driggers, APO - Brussels American High School AA - Connor J. Ennis, APO - Brussels American High School AA - Riki K. Fameli, APO - Zama American High School AA - Talia A. Feshbach, St Thomas - The Peter Gruber International Academy AA - Kyras T. Fort, DPO - Frankfurt International School [**] AA - Kayla Friend, APO, AE - Ramstein American High School AA - Connor P. -

2020 ANHE Program Book

2020 NAfME ALL-NATIONAL HONOR ENSEMBLES Jazz Ensemble, Mixed Choir, Guitar Ensemble, Modern Band, Symphony Orchestra, Concert Band Selected to perform in the 2020 All-National Honor Ensembles are 542 of the most musically talented high school students in the United States. With assistance from their music teachers and directors, these exceptional students have prepared challenging music that they will perform under the leadership of prominent conductors in this annual event. Grateful acknowledgment is given to the parents, families, and friends of the selected students for their cooperation and support as they share in this joyful experience. Special acknowledgment is also given to the following individuals for their leadership, expertise, and assistance in organizing and presenting these concerts. To our 2020 Sponsors and Partners: NAfME acknowledges Jazz at Lincoln Center for their continued support of the All-National Jazz Ensemble. NAfME acknowledges Little Kids Rock for their support of the All- National Modern Band. NAfME would like to thank Conn- Selmer for their support hosting the virtual platform for all events and rehearsals. NAfME would like to thank the Mark Wood Music Foundation for providing content and resources for this year’s string students, and Harman for providing recording equipment for this year's Modern Band. NAfME is also appreciative of our concert production company, GPG Music (Our Virtual Ensemble) for their dedication to music education during the COVID-19 pandemic and for providing educators with a platform for sharing their concerts virtually. 2020 NAfME ALL-NATIONAL HONOR ENSEMBLES 2 NATIONAL ASSOCIATION FOR MUSIC EDUCATION (NAfME) National Executive Board NAfME President and Board Chair Western Division President Mackie V. -

Los Alamos Girls Are Tops in State

INSIDE High schools C-3 Baseball C-4 c Pro basketball C-7 THE SANTA FE NEW MEXICAN Hockey C-10 Tennis C-li Sunday Golf C-12 MAY 14, 2000 Martinez no longer second best win titles Saturday. Los Alam- Elk's pace. Los Alamos junior Brad Skidmore Nick Martinez of > He improves his personal os senior Magdalena Sandoval, was third. Pojoaque crosses best by 3 seconds in winning the winner Friday of the 3,200, TRACK&FIELD "I was feeling kind of shaky because I hadn't the finish line to won the 1,600 while teammate been running well the last couple of days," win the Class AAA 1,600; trio of area athletes also Christina Gonzales successful- Martinez said. "It was ail mental today boys 1,600 meters ly defended her title in the because my legs felt terrible. Sometimes you Saturday. Also win gold at state meets triple jump, and in the process just make them move." taking gold medals set a new state record. Halfway through the race, Skidmore moved at the AAA and By DON JONES In the AAAA championship ^^ into the lead. Down the backstretch, Albu- AAAA state meets The New Mexican meet, held in conjunction with querque Academy's Ismail Kassam took over. were Magdalena the AAA meet at Friends of The University of At that point Martinez was in seventh, but he Sandoval and New Mexico Field, Santa Fe High senior Matt moved up to third with 400 to go. He took the Christina Gonzales ALBUQUERQUE — Pojoaque senior Nick Gonzales won the 3,200. -

Five-Year Facilities Master Plan Update 2015-2020

Los Alamos Public Schools Five-Year Facilities Master Plan Update 2015-2020 FINAL REPORT December 2014 Architectural Research Consultants, Incorporated Albuquerque, New Mexico 505-842-1254 505-766-9269 www.arcplanning.com Acknowledgements Eugene Schmidt, Superintendent Gerry Washburn, Assistant Superintendent School Board President Judy Bjarke-McKenzie Vice President Kevin Honnell Board Secretary Matt Williams Board Member Nancy N. Holmes Board Member Jim Hall FMP Steering Committee Eugene Schmidt, Superintendent Gerry Washburn, Assistant Superintendent Joan M. Ahlers Ted Galvez Herbert E. McLean Lisa Montoya Jeff Sargent PSFA William Sprick, Facilities Master Planner Natalie Diaz, Regional Manager Planning Consultant Architectural Research Consultants, Incorporated Contents Introduction 1 Goals / Process 1.1 Vision, Mission, and Goals 1.2 Process 1.2.1 Capital Planning and Decision Making 1.3 Acronyms, Abbreviations and Definitions 2 Existing and Projected Conditions 2.1 Programs 2.1.1 Number of Schools, Types and Grade Configuration -

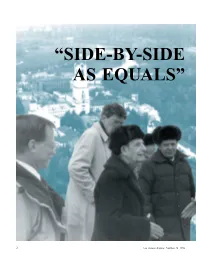

"Side-By-Side As Equals": An Unprecedented Collaboration Between the Russian and American Nuclear Weapons Laboratories To

“SIDE-BY-SIDE AS EQUALS” Los Alamos Science Number 24 1996 An unprecedented collaboration between the Russian and American Nuclear Weapons Laboratories to reduce the nuclear danger “I’ve been waiting forty years for this” Academician Yuli Borisovich Khariton, Scientific Director Emeritus, Arzamas-16 (Sarov) “Side-by-Side as Equals”: a round table Memories tend to be short in this rapidly changing world. It has been only four years since the Soviet Union collapsed and separated into independent states. Yet the U.S.-Soviet superpower struggle and the threat of all-out nuclear war are already matters for historical studies. ... Lugar program was extended to promote stabilization of defense personnel and, where possible, their conversion to civilian activities. This visionary government initiative under DoD leadership has made significant progress in the destruction of delivery systems and missile silos slated for elimination under the Strategic Arms Reduction Treaty, or START I. However, efforts aimed at stabilizing the people and facilities of the Russian nuclear complex and safeguarding the associated nuclear materials initially proved to be difficult. In the context of these highly visible efforts, another smaller and quieter effort was proceeding steadily and with remarkable success. Nuclear weapons scientists from Los Alamos and from Arzamas-16 (the birthplace of the Soviet atomic bomb, now called Sarov) began working together on basic science projects almost immediately after the Cold War ended, and the mutual trust and respect gained through that lab-to-lab scientific effort has become a ... The photo shows the Directors of Los -

County Report LAHS FY21 Q3.Xlsx

FY2021 QUARTERLY REPORT FORM CONTRACTOR: LOS ALAMOS HISTORICAL SOCIETY QUARTER: 3 ‐ Jan ‐ Mar AGR20‐44 Completed by: Elizabeth Martineau FINANCIAL INFORMATION (report on LAC direct funding only) Is annual financial review complete? If yes, date of report Type of Expense Q1 (July‐Sept) Q2 (Oct‐Dec) Q3 (Jan‐Mar) Q4 (Apr‐June) Operations & Management + Museum 24,465.00 24,465.00 24,465.00 Education 11,455.50 11,455.50 11,455.50 Archives 17,080.50 17,080.50 17,080.50 Admission Fee Revenue (20%) (141.00) (304.00) (236.00) TOTAL $52,860.00 $52,697.00 $52,765.00 $0.00 ARCHIVES # of items offered 7,600 # of items accepted for education collection 254 # of items accepted for permanent collection 400 # of items catalogued 74 Book Article/Periodical Film Project Web # of research requests by purpose: 3 11 1 46 7 PROGRAMMING Museum Campus Public Programs School Programs Visitors Tours # of in‐person participants 361 54 # of online participants 241 127 It is difficult to collect identifying data in some programs and such identification is not required, so numbers below may not add up to total participant counts. # reporting as residents 28 4 # reporting as non‐residents 333 50 # of children ‐ 119 32 10 # of adults 241 8 329 44 # of in‐person programs 17 # of online programs 3 7 History Museum Campus Visitors Who Don't Live in New Mexico # who checked in from another # who checked in from another state 310 ‐ country # of new (not renewed) member households 2 total member households @EOQ 369 # of people providing feedback69 # paid staff hours 3,044.00 -

Eric Rombach-Kendall

ERIC ROMBACH-KENDALL Director of Bands: University of New Mexico ADDRESS 1916 Buffalo Dancer Trail NE Albuquerque, NM 87112 (505) 298-5631 Home (505) 277-5545 Office (505) 362-2160 Cell [email protected] EDUCATION The University of Michigan, Ann Arbor, Michigan. 1986-1988. MM (Wind Conducting) The University of Michigan, Ann Arbor, Michigan. 1983-1984. MM (Music Education) University of Puget Sound, Tacoma, Washington. 1975-1979. BM (Music Education), Cum Laude TEACHING EXPERIENCE University of New Mexico, Albuquerque, New Mexico. 1993 to Present. Professor of Music, Director of Bands. Provide leadership and artistic vision for comprehensive band program. Regular Courses: Wind Symphony, Graduate Wind Conducting, Undergraduate Conducting, Instrumental Repertory. Additional Courses: Instrumental Music Methods, Introduction to Music Education. Interim Director of Orchestras 2019-2020. Boston University, Boston, Massachusetts. 1989-1993. Assistant Professor of Music, Conductor Wind Ensemble. Courses: Wind Ensemble, Chamber Winds, Concert Band, Contemporary Music Ensemble, Conducting, Instrumental Literature and Materials, Philosophical Foundations of Music Education. Carleton College, Northfield, Minnesota. 1988-1989. Instructor of Music (sabbatical replacement). Courses: Wind Ensemble, Jazz Ensemble, Chamber Music. The University of Michigan, Ann Arbor, Michigan. 1986-1988. Graduate Assistant: University Bands. Conductor of Campus Band, Assistant Conductor Michigan Youth Band. The University of Michigan, 1984. Graduate Assistant in Music Education. Instructor of wind and percussion techniques for undergraduate music education students. Oak Harbor School District, Oak Harbor, Washington. 1985-1986. Director of Bands, Oak Harbor High School. Courses taught: Wind Ensemble, Symphonic Band, Jazz Band, Marching Band. Peninsula School District, Gig Harbor, Washington. 1979-1983, 1984-1985. Director of Bands, Gig Harbor High School, Goodman Middle School.