Computational Physics Shuai Dong

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Ecamp12 Book Online.Pdf

Industrial Exhibition. Welcome to ECAMP 2016.

Questions/Support: In case of any problems please contact either the conference office or ask the conference staff for help. The staff are wearing easily recognizable “ECAMP 12” T-shirts.

Conference Office: HSZ, 3rd Floor, HZ13
Help Hotline: +49 (0)69 798-19463, +49 (0)1590-8146358 (cell)
WiFi Access:
• Eduroam is available on campus.
• In case you do not have eduroam, a personal WiFi-account can be requested at the conference office.

The ECAMP 12 is organized by the Institut für Kernphysik Frankfurt and hosted by the Goethe-Universität Frankfurt.

ECAMP 12 Local Organizing Committee: Reinhard Dörner, Markus Schöffler, Till Jahnke, Lothar Schmidt, Horst Schmidt-Böcking

Scientific Committee: Dominique Vernhet (Chair), Igor Ryabtsev, Fritz Aumayr (Vice-Chair), Nina Rohringer, Thomas Schlathölter, Pierre Pillet, Reinhard Dörner, Olga Smirnova, Alexander Eisfeld, Tim Softley, Jan-Petter Hansen, Sergio Diaz Tendero, Guglielmo Tino

Campus map: P Parking Lot (no public parking!), H Bus Stop, U Metro Station (Lines: U1, U2, U3, U8). The scientific part of the conference is held at the “HSZ” (designated as “Hörsaalzentrum” on the campus map) located in the middle of the Westend-Campus of Frankfurt University.

Site plan:
• HSZ ground floor: entrance to lecture halls, industrial exhibition and registration (Sunday and Monday)
• HSZ 1st floor: entrance to lecture halls
• HSZ 3rd floor: conference office and poster sessions

Talks: Talks will be held at the HSZ in the lecture halls HZ1 and HZ2. Duration of talks + discussion:
• Plenary talks: 50 + 10 minutes
• Progress reports: 25 + 5 minutes
• Hot topics: 12 + 3 minutes
Please make sure to upload / test your presentation during the break prior to your talk. -

An Introduction to the Ising Model Barry A. Cipra the American

An Introduction to the Ising Model. Barry A. Cipra. The American Mathematical Monthly, Vol. 94, No. 10. (Dec., 1987), pp. 937-959. Stable URL: http://links.jstor.org/sici?sici=0002-9890%28198712%2994%3A10%3C937%3AAITTIM%3E2.0.CO%3B2-V The American Mathematical Monthly is currently published by Mathematical Association of America. Your use of the JSTOR archive indicates your acceptance of JSTOR's Terms and Conditions of Use, available at http://www.jstor.org/about/terms.html. JSTOR's Terms and Conditions of Use provides, in part, that unless you have obtained prior permission, you may not download an entire issue of a journal or multiple copies of articles, and you may use content in the JSTOR archive only for your personal, non-commercial use. Please contact the publisher regarding any further use of this work. Publisher contact information may be obtained at http://www.jstor.org/journals/maa.html. Each copy of any part of a JSTOR transmission must contain the same copyright notice that appears on the screen or printed page of such transmission. JSTOR is an independent not-for-profit organization dedicated to creating and preserving a digital archive of scholarly journals. For more information regarding JSTOR, please contact [email protected]. http://www.jstor.org Mon Apr 30 12:49:36 2007 An Introduction to the Ising Model. BARRY A. CIPRA, St. Olaf College, Northfield, Minnesota 55057. Barry Cipra received a Ph.D. in mathematics from the University of Maryland in 1980. He has been an instructor at M.I.T. and at the Ohio State University, and is currently an assistant professor of mathematics at St. -

The Stochastic Ising and Potts Models at Criticality

Eyal Lubetzky, Courant Institute (NYU)

Introduced by Wilhelm Lenz (1888-1957) in 1920 as a model of ferromagnetism: Place iron in a magnetic field: increase field to maximum, then slowly reduce it to zero. There is a critical temperature $T_c$ (the Curie point) below which the iron retains residual magnetism. Magnetism caused by charged particles spinning or moving in orbit in alignment with each other. How do local interactions between nearby particles affect the global behavior at different temperatures?

Gives random binary values (spins) to vertices accounting for nearest-neighbor interactions. Initially thought to be over-simplified to capture ferromagnetism, but turned out to have a crucial role in understanding phase transitions & critical phenomena (Sociology, Biology, Chemistry, Economics, Physics, …). One of the most studied models in Math. Phys.: more than 10,000 papers over the last 30 years… (About 13,300 results.)

Proposed in 1951 by C. Domb (Cyril Domb, 1920-2012) to his Ph.D. student R. Potts (Renfrey Potts, 1925-2005). Generalization of the Ising model to allow $q > 2$ states per site. Special case $q = 4$ was first studied in 1943 by Ashkin and Teller. Rich critical phenomena: first/second order phase transitions depending on $q$ and the dimension. [Figure taken from: F. Y. Wu, The Potts model, Reviews of Modern Physics, 1982; cited by 2964.]

Underlying geometry: $\Lambda$ = finite 2D grid. Set of possible configurations: $\Omega = \{\pm 1\}^{\Lambda}$ (each site receives a plus/minus spin). Probability of a configuration $\sigma \in \Omega$ given by the Gibbs distribution:

$$\mu_I(\sigma) = \frac{1}{Z(\beta)} \exp\Big(\tfrac{1}{2}\,\beta \sum_{x \sim y} \sigma(x)\,\sigma(y)\Big)$$

Inverse Partition Josiah W. -

Otto Stern Annalen 4.11.11

(To be published by Annalen der Physik in December 2011) Otto Stern (1888-1969): The founding father of experimental atomic physics J. Peter Toennies,1 Horst Schmidt-Böcking,2 Bretislav Friedrich,3 Julian C.A. Lower2 1Max-Planck-Institut für Dynamik und Selbstorganisation Bunsenstrasse 10, 37073 Göttingen 2Institut für Kernphysik, Goethe Universität Frankfurt Max-von-Laue-Strasse 1, 60438 Frankfurt 3Fritz-Haber-Institut der Max-Planck-Gesellschaft Faradayweg 4-6, 14195 Berlin Keywords History of Science, Atomic Physics, Quantum Physics, Stern-Gerlach experiment, molecular beams, space quantization, magnetic dipole moments of nucleons, diffraction of matter waves, Nobel Prizes, University of Zurich, University of Frankfurt, University of Rostock, University of Hamburg, Carnegie Institute. We review the work and life of Otto Stern who developed the molecular beam technique and with its aid laid the foundations of experimental atomic physics. Among the key results of his research are: the experimental test of the Maxwell-Boltzmann distribution of molecular velocities (1920), experimental demonstration of space quantization of angular momentum (1922), diffraction of matter waves comprised of atoms and molecules by crystals (1931) and the determination of the magnetic dipole moments of the proton and deuteron (1933). 1 Introduction Short lists of the pioneers of quantum mechanics featured in textbooks and historical accounts alike typically include the names of Max Planck, Albert Einstein, Arnold Sommerfeld, Niels Bohr, Max von Laue, Werner Heisenberg, Erwin Schrödinger, Paul Dirac, Max Born, and Wolfgang Pauli on the theory side, and of Wilhelm Conrad Röntgen, Ernest Rutherford, Arthur Compton, and James Franck on the experimental side. However, the records in the Archive of the Nobel Foundation as well as scientific correspondence, oral-history accounts and scientometric evidence suggest that at least one more name should be added to the list: that of the “experimenting theorist” Otto Stern. -

A New Look on the Two-Dimensional Ising Model: Thermal Artificial Spins

A new look on the two-dimensional Ising model: thermal artificial spins. 2016 New J. Phys. 18 023008 (http://iopscience.iop.org/1367-2630/18/2/023008), doi:10.1088/1367-2630/18/2/023008. Open access paper. Received 29 October 2015; revised 14 December 2015; accepted for publication 7 January 2016; published 29 January 2016.

Unnar B Arnalds1, Jonathan Chico2, Henry Stopfel2, Vassilios Kapaklis2, Oliver Bärenbold2, Marc A Verschuuren3, Ulrike Wolff4, Volker Neu4, Anders Bergman2 and Björgvin Hjörvarsson2
1 Science Institute, University of Iceland, Dunhaga 3, IS-107 Reykjavik, Iceland
2 Department of Physics and Astronomy, Uppsala University, SE-75120 Uppsala, Sweden
3 Philips Research Laboratories, Eindhoven, The Netherlands
4 IFW Dresden, Institute of Metallic Materials, D-01171 Dresden, Germany
E-mail: [email protected]

Keywords: magnetic ordering, artificial spins, Ising model. Supplementary material for this article is available online. Original content from this work may be used under the terms of the Creative Commons Attribution 3.0 licence. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

Abstract: We present a direct experimental investigation of the thermal ordering in an artificial analogue of an -

The World Around Is Physics

The world around is physics Life in science is hard What we see is engineering Chemistry is harder There is no money in chemistry Future is uncertain There is no need of chemistry Therefore, it is not my option I don’t have to learn chemistry Chemistry is life Chemistry is chemicals Chemistry is memorizing things Chemistry is smell Chemistry is this and that- not sure Chemistry is fumes Chemistry is boring Chemistry is pollution Chemistry does not excite Chemistry is poison Chemistry is a finished subject Chemistry is dirty Chemistry - stands on the legacy of giants Antoine-Laurent Lavoisier (1743-1794) Marie Skłodowska Curie (1867- 1934) John Dalton (1766- 1844) Sir Humphrey Davy (1778 – 1829) Michael Faraday (1791 – 1867) Chemistry – our legacy Mendeleev's Periodic Table Modern Periodic Table Dmitri Ivanovich Mendeleev (1834-1907) Joseph John Thomson (1856 –1940) Great experimentalists Ernest Rutherford (1871-1937) Jagadish Chandra Bose (1858 –1937) Chandrasekhara Venkata Raman (1888-1970) Chemistry and chemical bond Gilbert Newton Lewis (1875 –1946) Harold Clayton Urey (1893- 1981) Glenn Theodore Seaborg (1912- 1999) Linus Carl Pauling (1901– 1994) Master craftsmen Robert Burns Woodward (1917 – 1979) Chemistry and the world Fritz Haber (1868 – 1934) Machines in science R. E. Smalley Great teachers Graduate students : Other students : 1. Werner Heisenberg 1. Herbert Kroemer 2. Wolfgang Pauli 2. Linus Pauling 3. Peter Debye 3. Walter Heitler 4. Paul Sophus Epstein 4. Walter Romberg 5. Hans Bethe 6. Ernst Guillemin 7. Karl Bechert 8. Paul Peter Ewald 9. Herbert Fröhlich 10. Erwin Fues 11. Helmut Hönl 12. Ludwig Hopf 13. Walther Kossel 14. -

Wolfgang Pauli 1900 to 1930: His Early Physics in Jungian Perspective

Wolfgang Pauli 1900 to 1930: His Early Physics in Jungian Perspective A Dissertation Submitted to the Faculty of the Graduate School of the University of Minnesota by John Richard Gustafson In Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy Advisor: Roger H. Stuewer Minneapolis, Minnesota July 2004 i © John Richard Gustafson 2004 ii To my father and mother Rudy and Aune Gustafson iii Abstract Wolfgang Pauli's philosophy and physics were intertwined. His philosophy was a variety of Platonism, in which Pauli’s affiliation with Carl Jung formed an integral part, but Pauli’s philosophical explorations in physics appeared before he met Jung. Jung validated Pauli’s psycho-philosophical perspective. Thus, the roots of Pauli’s physics and philosophy are important in the history of modern physics. In his early physics, Pauli attempted to ground his theoretical physics in positivism. He then began instead to trust his intuitive visualizations of entities that formed an underlying reality to the sensible physical world. These visualizations included holistic kernels of mathematical-physical entities that later became for him synonymous with Jung’s mandalas. I have connected Pauli’s visualization patterns in physics during the period 1900 to 1930 to the psychological philosophy of Jung and displayed some examples of Pauli’s creativity in the development of quantum mechanics. By looking at Pauli's early physics and philosophy, we gain insight into Pauli’s contributions to quantum mechanics. His exclusion principle, his influence on Werner Heisenberg in the formulation of matrix mechanics, his emphasis on firm logical and empirical foundations, his creativity in formulating electron spinors, his neutrino hypothesis, and his dialogues with other quantum physicists, all point to Pauli being the dominant genius in the development of quantum theory. -

5 X-Ray Crystallography

Introductory biophysics A. Y. 2016-17 5. X-ray crystallography and its applications to the structural problems of biology. Edoardo Milotti, Dipartimento di Fisica, Università di Trieste

The interatomic distance in a metallic crystal can be roughly estimated as follows. Take, e.g., iron:
• density: 7.874 g/cm³
• atomic weight: 56
• molar volume: $V_M$ = 7.1 cm³/mole
then the interatomic distance is roughly

$$d \approx \sqrt[3]{\frac{V_M}{N_A}} \approx 0.23\ \text{nm}$$

The atomic lattice can be used as a sort of diffraction grating for short-wavelength radiation, about 100 times shorter than visible light which is in the range 400-750 nm. Since

$$E_\gamma = \frac{hc}{\lambda} \approx \frac{2\cdot 10^{-25}\ \text{J m}}{\lambda} \approx \frac{1.24\ \text{eV µm}}{\lambda}$$

1 nm radiation corresponds to about 1 keV photon energy.

Max von Laue
Born: 9 October 1879, Pfaffendorf, Germany
Died: April 1960, Berlin, West Germany
Nobel Prize in Physics in 1914 "for his discovery of the diffraction of X-rays by crystals"
Initially he studied with Röntgen, and then with Max Planck in Berlin, where he habilitated in 1906 with a thesis "Über die Interferenzerscheinungen an planparallelen Platten". Then he worked at the Institute of Theoretical Physics, headed by Arnold Sommerfeld, until he was appointed full professor at the University of Frankfurt in 1914, when he also received his Nobel Prize.

Arnold Sommerfeld (1868-1951) ... Four of Sommerfeld's doctoral students, Werner Heisenberg, Wolfgang Pauli, Peter Debye, and Hans Bethe went on to win Nobel Prizes, while others, most notably, Walter Heitler, Rudolf Peierls, Karl Bechert, Hermann Brück, Paul Peter Ewald, Eugene Feenberg, Herbert Fröhlich, Erwin Fues, Ernst Guillemin, Helmut Hönl, Ludwig Hopf, Adolf Kratzer, Otto Laporte, Wilhelm Lenz, Karl Meissner, Rudolf Seeliger, Ernst C. -
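The iron estimate in the X-ray crystallography excerpt above is a short piece of arithmetic, so it can be checked directly. The following is a minimal sketch, not part of the original slides: the variable and function names are hypothetical, Avogadro's number is the standard SI value, and the 1.24 eV µm constant is the rounded value quoted in the excerpt. With these inputs the cube root comes out at roughly 0.23 nm (about 2.3 Å).

```python
# Worked check of the interatomic-distance and photon-energy estimates.
# All names here are illustrative; only the input numbers come from the excerpt.

AVOGADRO = 6.02214076e23        # atoms per mole (SI defined value)
DENSITY_G_PER_CM3 = 7.874       # iron, as quoted
ATOMIC_WEIGHT_G_PER_MOL = 56.0  # iron, as quoted
HC_EV_UM = 1.24                 # h*c in eV·µm, rounded as in the excerpt

# Molar volume V_M = atomic weight / density (cm^3 per mole).
molar_volume_cm3 = ATOMIC_WEIGHT_G_PER_MOL / DENSITY_G_PER_CM3

# Volume available to one atom, then the edge of the equivalent cube.
volume_per_atom_cm3 = molar_volume_cm3 / AVOGADRO
spacing_nm = volume_per_atom_cm3 ** (1.0 / 3.0) * 1e7  # 1 cm = 1e7 nm


def photon_energy_ev(wavelength_nm: float) -> float:
    """Photon energy E = h*c / lambda, with lambda given in nanometres."""
    wavelength_um = wavelength_nm * 1e-3
    return HC_EV_UM / wavelength_um


if __name__ == "__main__":
    print(f"molar volume   : {molar_volume_cm3:.2f} cm^3/mol")
    print(f"atomic spacing : {spacing_nm:.3f} nm")
    print(f"E(1 nm photon) : {photon_energy_ev(1.0):.0f} eV")
```

The same `photon_energy_ev` helper can be evaluated at the computed spacing to see which photon energies match the lattice scale.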

Otto Stern Annalen 22.9.11

September 22, 2011 Otto Stern (1888-1969): The founding father of experimental atomic physics J. Peter Toennies,1 Horst Schmidt-Böcking,2 Bretislav Friedrich,3 Julian C.A. Lower2 1Max-Planck-Institut für Dynamik und Selbstorganisation Bunsenstrasse 10, 37073 Göttingen 2Institut für Kernphysik, Goethe Universität Frankfurt Max-von-Laue-Strasse 1, 60438 Frankfurt 3Fritz-Haber-Institut der Max-Planck-Gesellschaft Faradayweg 4-6, 14195 Berlin Keywords History of Science, Atomic Physics, Quantum Physics, Stern-Gerlach experiment, molecular beams, space quantization, magnetic dipole moments of nucleons, diffraction of matter waves, Nobel Prizes, University of Zurich, University of Frankfurt, University of Rostock, University of Hamburg, Carnegie Institute. We review the work and life of Otto Stern who developed the molecular beam technique and with its aid laid the foundations of experimental atomic physics. Among the key results of his research are: the experimental determination of the Maxwell-Boltzmann distribution of molecular velocities (1920), experimental demonstration of space quantization of angular momentum (1922), diffraction of matter waves comprised of atoms and molecules by crystals (1931) and the determination of the magnetic dipole moments of the proton and deuteron (1933). 1 Introduction Short lists of the pioneers of quantum mechanics featured in textbooks and historical accounts alike typically include the names of Max Planck, Albert Einstein, Arnold Sommerfeld, Niels Bohr, Werner Heisenberg, Erwin Schrödinger, Paul Dirac, Max Born, and Wolfgang Pauli on the theory side, and of Konrad Röntgen, Ernest Rutherford, Max von Laue, Arthur Compton, and James Franck on the experimental side. However, the records in the Archive of the Nobel Foundation as well as scientific correspondence, oral-history accounts and scientometric evidence suggest that at least one more name should be added to the list: that of the “experimenting theorist” Otto Stern. -

The Fate of Ernst Ising and the Fate of His Model

The Fate of Ernst Ising and the Fate of his Model. Ising, T, Folk, R, Kenna, R, Berche, B & Holovatch, Y. Published PDF deposited in Coventry University’s Repository. Original citation: Ising, T, Folk, R, Kenna, R, Berche, B & Holovatch, Y 2017, 'The Fate of Ernst Ising and the Fate of his Model' Journal of Physical Studies, vol 21, no. 3, 3002. http://physics.lnu.edu.ua/jps/2017/3/abs/a3002-19.html ISSN 1027-4642 ESSN 2310-0052 Publisher: National University of Lviv. Copyright © and Moral Rights are retained by the author(s) and/ or other copyright owners. A copy can be downloaded for personal non-commercial research or study, without prior permission or charge. This item cannot be reproduced or quoted extensively from without first obtaining permission in writing from the copyright holder(s). The content must not be changed in any way or sold commercially in any format or medium without the formal permission of the copyright holders. ЖУРНАЛ ФIЗИЧНИХ ДОСЛIДЖЕНЬ т. 21, № 3 (2017) 3002(19 с.) JOURNAL OF PHYSICAL STUDIES v. 21, No. 3 (2017) 3002(19 p.) THE FATE OF ERNST ISING AND THE FATE OF HIS MODEL∗ T. Ising1, R. Folk2, R. Kenna3,1, B. Berche4,1, Yu. Holovatch5,1 1 L4 Collaboration & Doctoral College for the Statistical Physics of Complex Systems, Leipzig-Lorraine-Lviv-Coventry 2 Institute for Theoretical Physics, Johannes Kepler University Linz, A-4040, Linz, Austria 3 Applied Mathematics Research Centre, Coventry University, Coventry, CV1 5FB, United Kingdom 4 Statistical Physics Group, Laboratoire de Physique et Chimie Théoriques, Université de Lorraine, F-54506 Vandœuvre-les-Nancy Cedex, France 5 Institute for Condensed Matter Physics, National Acad. -

The Development of the Quantum-Mechanical Electron Theory of Metals: 1928-1933

The development of the quantum-mechanical electron theory of metals: 1928-1933. Lillian Hoddeson and Gordon Baym, Department of Physics, University of Illinois at Urbana-Champaign, Urbana, Illinois 61801. Michael Eckert, Deutsches Museum, Postfach 260102, D-8000 Munich 26, Federal Republic of Germany.

We trace the fundamental developments and events, in their intellectual as well as institutional settings, of the emergence of the quantum-mechanical electron theory of metals from 1928 to 1933. This paper continues an earlier study of the first phase of the development, from 1926 to 1928, devoted to finding the general quantum-mechanical framework. Solid state, by providing a large and ready number of concrete problems, functioned during the period treated here as a target of application for the recently developed quantum mechanics; a rush of interrelated successes by numerous theoretical physicists, including Bethe, Bloch, Heisenberg, Peierls, Landau, Slater, and Wilson, established in these years the network of concepts that structure the modern quantum theory of solids. We focus on three examples: band theory, magnetism, and superconductivity, the former two immediate successes of the quantum theory, the latter a persistent failure in this period. The history revolves in large part around the theoretical physics institutes of the Universities of Munich, under Sommerfeld, Leipzig under Heisenberg, and the Eidgenössische Technische Hochschule (ETH) in Zurich under Pauli. The year 1933 marked both a climax and a transition; as the laying of foundations reached a temporary conclusion, attention began to shift from general formulations to computation of the properties of particular solids.

CONTENTS: Introduction; I.

mechanics of electrons in a crystal lattice (Bloch, 1928); these were followed by the further development in 1928-1933 of the quantum-mechanical basis of the -

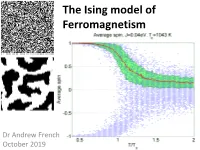

Ising Model of Ferromagnetism

The Ising Model of Ferromagnetism. Dr Andrew French, October 2019.

All atoms will respond in some fashion to magnetic fields. The angular momentum (and spin) properties of electrons imply a circulating charge, which means they will be subject to a Lorentz force in a magnetic field. However, the effects of diamagnetism, paramagnetism and anti-ferromagnetism are typically very small. Ferromagnetic materials (iron, cobalt, nickel, some rare earth metal compounds) respond strongly to magnetic fields and can intensify them by orders of magnitude, i.e. the relative permeability can be tens or hundreds, or possibly thousands.

The Ising model (Ernst Ising, 1900-1998) is a simplified model of a ferromagnet which exhibits a phase transition at the Curie temperature. Below this, magnetic dipole alignment will tend to cluster into domains, and it is these micro-scale groupings which give rise to ferromagnetic behaviour.

“Soft” magnetism - Ferromagnets: Unlike permanent “hard” magnets, once the applied field is removed, the domain alignment will randomize again, effectively zeroing the net magnetism.

Magnetic domains: The Ising model can be used to demonstrate spontaneous mass alignment of magnetic dipoles, and possibly a mechanism for domain formation. Perhaps the simplest model which yields characteristic behaviour is an N x N square grid, where each square is initially randomly assigned a +1 or -1 value, with equal probability. The +/-1 values correspond to a single direction of magnetic dipole moment in a rectangular lattice of ferromagnetic atoms, or in the case of individual electrons, spin. [Figure: 10 x 10 grid and 100 x 100 grid; white squares represent +1, black squares represent -1.]

Original Metropolis algorithm 1.
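The N x N grid of random +1/-1 values described above, together with the Metropolis algorithm the excerpt names, can be sketched in a few lines. The excerpt's own algorithm steps are cut off, so what follows is the textbook single-spin-flip Metropolis rule and not necessarily the author's version. It assumes periodic boundaries, coupling J = 1, no external field, and an inverse temperature `beta`; every function name is hypothetical.

```python
import math
import random


def random_grid(n, rng):
    """N x N grid with each site set to +1 or -1 with equal probability."""
    return [[rng.choice((-1, 1)) for _ in range(n)] for _ in range(n)]


def neighbour_sum(grid, i, j):
    """Sum of the four nearest neighbours, wrapping around at the edges."""
    n = len(grid)
    return (grid[(i - 1) % n][j] + grid[(i + 1) % n][j]
            + grid[i][(j - 1) % n] + grid[i][(j + 1) % n])


def metropolis_sweep(grid, beta, rng):
    """One sweep: N*N attempted single-spin flips (assumed J = 1, zero field)."""
    n = len(grid)
    for _ in range(n * n):
        i, j = rng.randrange(n), rng.randrange(n)
        # Energy change if spin (i, j) is flipped, for E = -sum over bonds s_i * s_j.
        delta_e = 2 * grid[i][j] * neighbour_sum(grid, i, j)
        # Accept downhill moves always, uphill moves with probability exp(-beta * dE).
        if delta_e <= 0 or rng.random() < math.exp(-beta * delta_e):
            grid[i][j] = -grid[i][j]


def magnetisation(grid):
    """Mean spin per site, between -1 and +1."""
    n = len(grid)
    return sum(map(sum, grid)) / (n * n)


if __name__ == "__main__":
    rng = random.Random(0)
    grid = random_grid(10, rng)
    for _ in range(200):
        metropolis_sweep(grid, beta=1.0, rng=rng)
    print("magnetisation per site:", magnetisation(grid))
```

Lowering `beta` (higher temperature) should leave the grid disordered with magnetisation near zero, while large `beta` tends to produce aligned domains. The outcome of any single run depends on the random seed.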