Safety First for Automated Driving I Authors

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

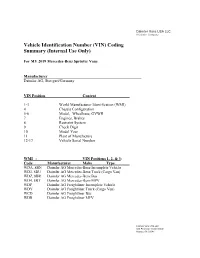

Vehicle Identification Number (VIN) Coding Summary (Internal Use Only)

Daimler Vans USA LLC, A Daimler Company. 303 Perimeter Center North, Atlanta, GA 30346

Vehicle Identification Number (VIN) Coding Summary (Internal Use Only) for MY 2019 Mercedes-Benz Sprinter Vans

Manufacturer: Daimler AG, Stuttgart/Germany

| VIN Position | Content |
| --- | --- |
| 1-3 | World Manufacturer Identification (WMI) |
| 4 | Chassis Configuration |
| 5-6 | Model, Wheelbase, GVWR |
| 7 | Engines, Brakes |
| 8 | Restraint System |
| 9 | Check Digit |
| 10 | Model Year |
| 11 | Plant of Manufacture |
| 12-17 | Vehicle Serial Number |

WMI - VIN Positions 1, 2, & 3:

| Code | Manufacturer | Make | Type |
| --- | --- | --- | --- |
| WDA, 8BN | Daimler AG | Mercedes-Benz | Incomplete Vehicle |
| WD3, 8BU | Daimler AG | Mercedes-Benz | Truck (Cargo Van) |
| WDZ, 8BR | Daimler AG | Mercedes-Benz | Bus |
| WD4, 8BT | Daimler AG | Mercedes-Benz | MPV |
| WDP | Daimler AG | Freightliner | Incomplete Vehicle |
| WDY | Daimler AG | Freightliner | Truck (Cargo Van) |
| WCD | Daimler AG | Freightliner | Bus |
| WDR | Daimler AG | Freightliner | MPV |

Chassis Configuration - VIN Position 4:

| Code | Chassis Configuration / Intended Market |
| --- | --- |
| P | All 4x2 Vehicle Types / U.S. |
| B | All 4x2 Vehicle Types / Canada |
| F | All 4x4 Vehicle Types / U.S. |
| C | All 4x4 Vehicle Types / Canada |

Model, Wheelbase, GVWR - VIN Positions 5 & 6:

| Code | Model | Wheelbase | GVWR |
| --- | --- | --- | --- |
| E7 | C1500/C2500/P1500 | 3665 mm / 144 in. | 8,000 lbs. to 9,000 lbs. Class G |
| E8 | C2500/P2500 | 4325 mm / 170 in. | 8,000 lbs. to 9,000 lbs. Class G |
| F0 | C2500/C3500 | 3665 mm / 144 in. | 9,000 lbs. to 10,000 lbs. Class H |
| F1 | C2500/C3500 | 4325 mm / 170 in. | 9,000 lbs. to 10,000 lbs. Class H |
| F3 | C4500/C3500 | 3665 mm / 144 in. | |
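The position layout in the coding summary above (positions 1-3 for the WMI through positions 12-17 for the serial number) can be illustrated with a minimal Python sketch that slices a 17-character VIN into those fields. The names `VinFields` and `split_vin` are hypothetical, and the sample VIN is made up for illustration: only its `WD3`, `P`, and `E7` codes come from the summary, and its check digit has not been validated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VinFields:
    """One attribute per row of the VIN position table (hypothetical names)."""
    wmi: str                    # positions 1-3
    chassis_configuration: str  # position 4
    model_wheelbase_gvwr: str   # positions 5-6
    engines_brakes: str         # position 7
    restraint_system: str       # position 8
    check_digit: str            # position 9
    model_year: str             # position 10
    plant: str                  # position 11
    serial_number: str          # positions 12-17


def split_vin(vin: str) -> VinFields:
    """Slice a VIN by position; this does not verify the check digit."""
    vin = vin.strip().upper()
    if len(vin) != 17:
        raise ValueError(f"expected 17 characters, got {len(vin)}")
    return VinFields(
        wmi=vin[0:3],
        chassis_configuration=vin[3],
        model_wheelbase_gvwr=vin[4:6],
        engines_brakes=vin[6],
        restraint_system=vin[7],
        check_digit=vin[8],
        model_year=vin[9],
        plant=vin[10],
        serial_number=vin[11:17],
    )


# Made-up VIN for illustration only; not a real vehicle.
fields = split_vin("WD3PE7AB1K0123456")
print(fields.wmi, fields.chassis_configuration, fields.model_wheelbase_gvwr)
```

Each sliced code would then be looked up in the corresponding table, for example `WD3` in the WMI table and `P` in the chassis configuration table.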

Songs by Artist

Reil Entertainment Songs by Artist Karaoke by Artist Title Title &, Caitlin Will 12 Gauge Address In The Stars Dunkie Butt 10 Cc 12 Stones Donna We Are One Dreadlock Holiday 19 Somethin' Im Mandy Fly Me Mark Wills I'm Not In Love 1910 Fruitgum Co Rubber Bullets 1, 2, 3 Redlight Things We Do For Love Simon Says Wall Street Shuffle 1910 Fruitgum Co. 10 Years 1,2,3 Redlight Through The Iris Simon Says Wasteland 1975 10, 000 Maniacs Chocolate These Are The Days City 10,000 Maniacs Love Me Because Of The Night Sex... Because The Night Sex.... More Than This Sound These Are The Days The Sound Trouble Me UGH! 10,000 Maniacs Wvocal 1975, The Because The Night Chocolate 100 Proof Aged In Soul Sex Somebody's Been Sleeping The City 10Cc 1Barenaked Ladies Dreadlock Holiday Be My Yoko Ono I'm Not In Love Brian Wilson (2000 Version) We Do For Love Call And Answer 11) Enid OS Get In Line (Duet Version) 112 Get In Line (Solo Version) Come See Me It's All Been Done Cupid Jane Dance With Me Never Is Enough It's Over Now Old Apartment, The Only You One Week Peaches & Cream Shoe Box Peaches And Cream Straw Hat U Already Know What A Good Boy Song List Generator® Printed 11/21/2017 Page 1 of 486 Licensed to Greg Reil Reil Entertainment Songs by Artist Karaoke by Artist Title Title 1Barenaked Ladies 20 Fingers When I Fall Short Dick Man 1Beatles, The 2AM Club Come Together Not Your Boyfriend Day Tripper 2Pac Good Day Sunshine California Love (Original Version) Help! 3 Degrees I Saw Her Standing There When Will I See You Again Love Me Do Woman In Love Nowhere Man 3 Dog Night P.S. -

Daimler AG, Formerly Known As

UNITED STATES DISTRICT COURT FOR THE DISTRICT OF COLUMBIA

UNITED STATES SECURITIES AND EXCHANGE COMMISSION, 100 F Street, NE, Washington, DC 20549-6030, Plaintiff, v. DAIMLER AG, Mercedesstrasse 137, 70327 Stuttgart, Germany, Defendant.

Case: 1:10-cv-00473. Assigned To: Leon, Richard J. Assign. Date: 3/22/2010. Description: General Civil

COMPLAINT

Plaintiff United States Securities and Exchange Commission ("Commission") alleges that:

SUMMARY OF THE ACTION

1. From at least 1998 through 2008, Daimler AG, formerly known as DaimlerChrysler AG ("Daimler" or the "Company"), and certain of its subsidiaries and affiliates, violated the anti-bribery, books and records and internal controls provisions of the Foreign Corrupt Practices Act (the "FCPA") by making illicit payments, directly or indirectly, to foreign government officials in order to secure and maintain business worldwide.

2. During this time period, Daimler paid bribes to government officials to further government sales in Asia, Africa, Eastern Europe and the Middle East. In connection with at least 51 transactions, Daimler violated the anti-bribery provision of the FCPA by paying tens of millions of dollars in corrupt payments to foreign government officials to secure business in Russia, China, Vietnam, Nigeria, Hungary, Latvia, Croatia and Bosnia. These corrupt payments were made through the use of U.S. mails or the means or instrumentality of U.S. interstate commerce.

3. Daimler also violated the FCPA's books and records and internal controls provisions in connection with the 51 transactions and at least an additional 154 transactions, in which it made improper payments totaling at least $56 million to secure business in 22 countries, including, among others, Russia, China, Nigeria, Vietnam, Egypt, Greece, Hungary, North Korea, and Indonesia.

Chapter 3 Review Questions

Chapter 3 - Learning to Drive, PA Driver’s Manual

CHAPTER 3 REVIEW QUESTIONS

1. TEENAGE DRIVERS ARE MORE LIKELY TO BE INVOLVED IN A CRASH WHEN:
   - A. They are driving with their pet as a passenger
   - B. They are driving with adult passengers
   - C. They are driving with teenage passengers
   - D. They are driving without any passengers
2. DRIVERS WHO EAT AND DRINK WHILE DRIVING:
   - A. Have no driving errors
   - B. Have trouble driving slow
   - C. Are better drivers because they are not hungry
   - D. Have trouble controlling their vehicles
3. PREPARING TO SMOKE AND SMOKING WHILE DRIVING:
   - A. Do not affect driving abilities
   - B. Help maintain driver alertness
   - C. Are distracting activities
   - D. Are not distracting activities
4. THE TOP MAJOR CRASH TYPE FOR 16 YEAR OLD DRIVERS IN PENNSYLVANIA IS:
   - A. Single vehicle/run-off-the-road
   - B. Being sideswiped on an interstate
   - C. Driving in reverse on a side street
   - D. Driving on the shoulder of a highway
5. WHEN PASSING A BICYCLIST, YOU SHOULD:
   - A. Blast your horn to alert the bicyclist
   - B. Move as far left as possible
   - C. Remain in the center of the lane
   - D. Put on your four-way flashers
6. WHEN YOU DRIVE THROUGH AN AREA WHERE CHILDREN ARE PLAYING, YOU SHOULD EXPECT THEM:
   - A. To know when it is safe to cross
   - B. To stop at the curb before crossing the street
   - C. To run out in front of you without looking
   - D. Not to cross unless they are with an adult
7. IF YOU ARE DRIVING BEHIND A MOTORCYCLE, YOU MUST: A. -

Retirement Strategy Fund 2060 Description Plan 3S DCP & JRA

Retirement Strategy Fund 2060, June 30, 2020

Note: Numbers may not always add up due to rounding.

% Invested For Each Plan

| Description | Plan 3s | DCP & JRA |
| --- | --- | --- |
| ACTIVIA PROPERTIES INC REIT | 0.0137% | 0.0137% |
| AEON REIT INVESTMENT CORP REIT | 0.0195% | 0.0195% |
| ALEXANDER + BALDWIN INC REIT | 0.0118% | 0.0118% |
| ALEXANDRIA REAL ESTATE EQUIT REIT USD.01 | 0.0585% | 0.0585% |
| ALLIANCEBERNSTEIN GOVT STIF SSC FUND 64BA AGIS 587 | 0.0329% | 0.0329% |
| ALLIED PROPERTIES REAL ESTAT REIT | 0.0219% | 0.0219% |
| AMERICAN CAMPUS COMMUNITIES REIT USD.01 | 0.0277% | 0.0277% |
| AMERICAN HOMES 4 RENT A REIT USD.01 | 0.0396% | 0.0396% |
| AMERICOLD REALTY TRUST REIT USD.01 | 0.0427% | 0.0427% |
| ARMADA HOFFLER PROPERTIES IN REIT USD.01 | 0.0124% | 0.0124% |
| AROUNDTOWN SA COMMON STOCK EUR.01 | 0.0248% | 0.0248% |
| ASSURA PLC REIT GBP.1 | 0.0319% | 0.0319% |
| AUSTRALIAN DOLLAR | 0.0061% | 0.0061% |
| AZRIELI GROUP LTD COMMON STOCK ILS.1 | 0.0101% | 0.0101% |
| BLUEROCK RESIDENTIAL GROWTH REIT USD.01 | 0.0102% | 0.0102% |
| BOSTON PROPERTIES INC REIT USD.01 | 0.0580% | 0.0580% |
| BRAZILIAN REAL | 0.0000% | 0.0000% |
| BRIXMOR PROPERTY GROUP INC REIT USD.01 | 0.0418% | 0.0418% |
| CA IMMOBILIEN ANLAGEN AG COMMON STOCK | 0.0191% | 0.0191% |
| CAMDEN PROPERTY TRUST REIT USD.01 | 0.0394% | 0.0394% |
| CANADIAN DOLLAR | 0.0005% | 0.0005% |
| CAPITALAND COMMERCIAL TRUST REIT | 0.0228% | 0.0228% |
| CIFI HOLDINGS GROUP CO LTD COMMON STOCK HKD.1 | 0.0105% | 0.0105% |
| CITY DEVELOPMENTS LTD COMMON STOCK | 0.0129% | 0.0129% |
| CK ASSET HOLDINGS LTD COMMON STOCK HKD1.0 | 0.0378% | 0.0378% |
| COMFORIA RESIDENTIAL REIT IN REIT | 0.0328% | 0.0328% |
| COUSINS PROPERTIES INC REIT USD1.0 | 0.0403% | 0.0403% |
| CUBESMART REIT USD.01 | 0.0359% | 0.0359% |

DAIWA OFFICE INVESTMENT -

DMV Driver Manual

New Hampshire Driver Manual

State of New Hampshire, DEPARTMENT OF SAFETY, DIVISION OF MOTOR VEHICLES

MESSAGE FROM THE DIVISION OF MOTOR VEHICLES

Driving a motor vehicle on New Hampshire roadways is a privilege and as motorists, we all share the responsibility for safe roadways. Safe drivers and safe vehicles make for safe roadways and we are pleased to provide you with this driver manual to assist you in learning New Hampshire’s motor vehicle laws, rules of the road, and safe driving guidelines, so that you can begin your journey of becoming a safe driver.

The information in this manual will not only help you navigate through the process of obtaining a New Hampshire driver license, but it will highlight safe driving tips and techniques that can help prevent accidents and may even save a life. One of your many responsibilities as a driver will include being familiar with the New Hampshire motor vehicle laws. This manual includes a review of the laws, rules and regulations that directly or indirectly affect you as the operator of a motor vehicle.

Driving is a task that requires your full attention. As a New Hampshire driver, you should be prepared for changes in the weather and road conditions, which can be a challenge even for an experienced driver. This manual reviews driving emergencies and actions that the driver may take in order to avoid a major collision. No one knows when an emergency situation will arise and your ability to react to a situation depends on your alertness. Many factors, such as impaired vision, fatigue, alcohol or drugs will impact your ability to drive safely.

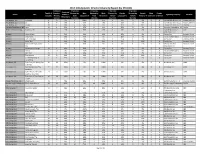

2017 DGA Episodic Director Diversity Report (By STUDIO)

2017 DGA Episodic Director Diversity Report (by STUDIO) Combined # Episodes # Episodes # Episodes # Episodes Combined Total # of Female + Directed by Male Directed by Male Directed by Female Directed by Female Male Female Studio Title Female + Signatory Company Network Episodes Minority Male Caucasian % Male Minority % Female Caucasian % Female Minority % Unknown Unknown Minority % Episodes Caucasian Minority Caucasian Minority A+E Studios, LLC Knightfall 2 0 0% 2 100% 0 0% 0 0% 0 0% 0 0 Frank & Bob Films II, LLC History Channel A+E Studios, LLC Six 8 4 50% 4 50% 1 13% 3 38% 0 0% 0 0 Frank & Bob Films II, LLC History Channel A+E Studios, LLC UnReal 10 4 40% 6 60% 0 0% 2 20% 2 20% 0 0 Frank & Bob Films II, LLC Lifetime Alameda Productions, LLC Love 12 4 33% 8 67% 0 0% 4 33% 0 0% 0 0 Alameda Productions, LLC Netflix Alcon Television Group, Expanse, The 13 2 15% 11 85% 2 15% 0 0% 0 0% 0 0 Expanding Universe Syfy LLC Productions, LLC Amazon Hand of God 10 5 50% 5 50% 2 20% 3 30% 0 0% 0 0 Picrow, Inc. Amazon Prime Amazon I Love Dick 8 7 88% 1 13% 0 0% 7 88% 0 0% 0 0 Picrow Streaming Inc. Amazon Prime Amazon Just Add Magic 26 7 27% 19 73% 0 0% 4 15% 1 4% 0 2 Picrow, Inc. Amazon Prime Amazon Kicks, The 9 2 22% 7 78% 0 0% 0 0% 2 22% 0 0 Picrow, Inc. Amazon Prime Amazon Man in the High Castle, 9 1 11% 8 89% 0 0% 0 0% 1 11% 0 0 Reunion MITHC 2 Amazon Prime The Productions Inc. -

SAE International® PROGRESS in TECHNOLOGY SERIES Downloaded from SAE International by Eric Anderson, Thursday, September 10, 2015

Downloaded from SAE International by Eric Anderson, Thursday, September 10, 2015

Connectivity and the Mobility Industry. Edited by Dr. Andrew Brown, Jr. SAE International® PROGRESS IN TECHNOLOGY SERIES

Connectivity and the Mobility Industry

Other SAE books of interest:

- Green Technologies and the Mobility Industry, by Dr. Andrew Brown, Jr. (Product Code: PT-146)
- Active Safety and the Mobility Industry, by Dr. Andrew Brown, Jr. (Product Code: PT-147)
- Automotive 2030 – North America, by Bruce Morey (Product Code: T-127)
- Multiplexed Networks for Embedded Systems, by Dominique Paret (Product Code: R-385)

For more information or to order a book, contact SAE International at 400 Commonwealth Drive, Warrendale, PA 15096-0001, USA; phone 877-606-7323 (U.S. and Canada) or 724-776-4970 (outside U.S. and Canada); fax 724-776-0790; e-mail [email protected]; website http://store.sae.org.

Connectivity and the Mobility Industry, by Dr. Andrew Brown, Jr. Warrendale, Pennsylvania, USA. Copyright © 2011 SAE International. eISBN: 978-0-7680-7461-1

400 Commonwealth Drive, Warrendale, PA 15096-0001 USA. E-mail: [email protected] Phone: 877-606-7323 (inside USA and Canada), 724-776-4970 (outside USA). Fax: 724-776-0790

Copyright © 2011 SAE International. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, distributed, or transmitted, in any form or by any means without the prior written permission of SAE. -

Grounding Human-To-Vehicle Advice for Self-Driving Vehicles

Grounding Human-to-Vehicle Advice for Self-driving Vehicles

Jinkyu Kim¹, Teruhisa Misu², Yi-Ting Chen², Ashish Tawari², and John Canny¹

¹EECS, UC Berkeley, ²Honda Research Institute USA, Inc.

¹{jinkyu.kim, canny}@berkeley.edu, ²{tmisu,ychen,atawari}@honda-ri.com

Abstract

Recent success suggests that deep neural control networks are likely to be a key component of self-driving vehicles. These networks are trained on large datasets to imitate human actions, but they lack semantic understanding of image contents. This makes them brittle and potentially unsafe in situations that do not match training data. Here, we propose to address this issue by augmenting training data with natural language advice from a human. Advice includes guidance about what to do and where to attend. We present a first step toward advice giving, where we train an end-to-end vehicle controller that accepts advice. The controller adapts the way it attends to the scene (visual attention) and the control (steering and speed). Attention mechanisms tie controller behavior to salient objects in the advice. We evaluate our model on a novel advisable driving dataset with manually annotated human-to-vehicle advice called Honda Research Institute-Advice Dataset (HAD).

[Figure 1 panel labels: end-user; human-to-vehicle advice, e.g., “pedestrians are in crosswalk”; input image; visual encoder; textual encoder; vehicle controller; control commands; visualizing model’s attention without advice and with advice]

Figure 1: Our model takes human-to-vehicle advice as an input, i.e., “pedestrians are in crosswalk”, and grounds it into the vehicle controller, which then predicts a sequence of control commands, i.e., a steering wheel angle and a ve-

Heart & Vascular Institute

Sydell and Arnold Miller Family Heart & Vascular Institute

9500 Euclid Avenue, Cleveland, OH 44195. ClevelandClinic.org

2016 Outcomes. 17-OUT-413

Measuring Outcomes Promotes Quality Improvement

This project would not have been possible without the commitment and expertise of a team led by Umesh Khot, MD; Mouin Abdallah, MD; Sandra Hays; and Jagina McIntyre. Graphic design and photography were provided by Brian Kohlbacher and Cleveland Clinic’s Center for Medical Art and Photography.

© The Cleveland Clinic Foundation 2017

Measuring and understanding outcomes of medical treatments promotes quality improvement. Cleveland Clinic has created a series of Outcomes books similar to this one for its clinical institutes. Designed for a physician audience, the Outcomes books contain a summary of many of our surgical and medical treatments, with a focus on outcomes data and a review of new technologies and innovations.

The Outcomes books are not a comprehensive analysis of all treatments provided at Cleveland Clinic, and omission of a particular treatment does not necessarily mean we do not offer that treatment. When there are no recognized clinical outcome measures for a specific treatment, we may report process measures associated with improved outcomes. When process measures are unavailable, we may report volume measures; a relationship has been demonstrated between volume and improved outcomes for many treatments, particularly those involving surgical and -

Anti-Texting Law Facts

Anti-Texting Law Facts

Pennsylvania’s anti-texting law, effective March 8, 2012, encourages motorists to put their full focus on driving.

What the Law Does

- The law prohibits as a primary offense any driver from using an Interactive Wireless Communication Device (IWCD) to send, read or write a text-based communication while his or her vehicle is in motion.
- Defines an IWCD as a wireless phone, personal digital assistant, smart phone, portable or mobile computer or similar devices that can be used for texting, instant messaging, emailing or browsing the Internet.
- Defines a text-based communication as a text message, instant message, email or other written communication composed or received on an IWCD.
- Institutes a $50 fine for convictions under this section.
- Makes clear that this law supersedes and preempts any local ordinances restricting the use of interactive wireless devices by drivers.

The penalty is a summary offense with a $50 fine, plus court costs and other fees. The violation carries no points as a penalty and will not be recorded on the driver record for non-commercial drivers. It will be recorded on commercial drivers’ records as a non-sanction violation.

The texting ban does NOT include the use of a GPS device, a system or device that is physically or electronically integrated into the vehicle, or a communications device that is affixed to a mass transit vehicle, bus or school bus. The law does not authorize the seizure of an IWCD.

Background, Nationwide Perspective

In 2010, there were 13,846 crashes in Pennsylvania where distracted driving played a role.

Provisions Governing the Disposition of State Motor-Vehicle and Motor-Carrier Receipts 1

PROVISIONS GOVERNING THE DISPOSITION OF STATE MOTOR-VEHICLE AND MOTOR-CARRIER RECEIPTS 1/ BASED ON INFORMATION OBTAINED FROM STATE TABLE MV-106 AUTHORITIES AND FROM STATE LAW CODES STATUS AS OF JANUARY 1, 1998 CLASSIFICATION NAME OF FUND AMOUNT OR STATE CODE OF FEE 2/ OR AGENCY PROPORTION OBJECTS OF EXPENDITURE REMARKS SECTION ALABAMA 1 Counties 35.25 percent of 42.16 percent is allocated equally among counties. 57.84 percent is 40-12-270 additional truck allocated on the basis of population. registration fee State Public Road and 64.75 percent of 40-12-270 Bridge Fund additional truck registration fee Remainder Distributed as follows: State Treasurer 5 percent For collection, administration and cost of tags. 40-12-269 County Probate Judges 2.5 percent Collection and administration. 40-12-269 County Probate Judges $1.25 per registration Collection and administration. Service fee charged by local officials. 40-12-271 Department of Public Additional car, light Traffic regulation and enforcement of State traffic $10.00 per car; $10.00 per truck under 8001 lbs.; $8.00 per motorcycle. This is 40-12-274 Safety truck, and and motor vehicle laws. an additional tax to the regular license tax or registration fee and is paid to motorcycle State General Fund for the Department of Public Safety. registration fees Remainder Distributed as follows: Municipalities and 21 percent Administration, construction, maintenance and Distributed to municipality where motor vehicle resides or is registered or to 40-12-270 counties debt service on bonds for highways. county where fee is paid if not registered or residing in an incorporated municipality.