Flexible Architecture Methods for Graphics Processing

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Wind River Vxworks Platforms 3.8

Wind River VxWorks Platforms 3.8 The market for secure, intelligent, Table of Contents Build System ................................ 24 connected devices is constantly expand- Command-Line Project Platforms Available in ing. Embedded devices are becoming and Build System .......................... 24 VxWorks Edition .................................2 more complex to meet market demands. Workbench Debugger .................. 24 New in VxWorks Platforms 3.8 ............2 Internet connectivity allows new levels of VxWorks Simulator ....................... 24 remote management but also calls for VxWorks Platforms Features ...............3 Workbench VxWorks Source increased levels of security. VxWorks Real-Time Operating Build Configuration ...................... 25 System ...........................................3 More powerful processors are being VxWorks 6.x Kernel Compatibility .............................3 considered to drive intelligence and Configurator ................................. 25 higher functionality into devices. Because State-of-the-Art Memory Host Shell ..................................... 25 Protection ..................................3 real-time and performance requirements Kernel Shell .................................. 25 are nonnegotiable, manufacturers are VxBus Framework ......................4 Run-Time Analysis Tools ............... 26 cautious about incorporating new Core Dump File Generation technologies into proven systems. To and Analysis ...............................4 System Viewer ........................ -

Reviving the Development of Openchrome

Reviving the Development of OpenChrome Kevin Brace OpenChrome Project Maintainer / Developer XDC2017 September 21st, 2017 Outline ● About Me ● My Personal Story Behind OpenChrome ● Background on VIA Chrome Hardware ● The History of OpenChrome Project ● Past Releases ● Observations about Standby Resume ● Developmental Philosophy ● Developmental Challenges ● Strategies for Further Development ● Future Plans 09/21/2017 XDC2017 2 About Me ● EE (Electrical Engineering) background (B.S.E.E.) who specialized in digital design / computer architecture in college (pretty much the only undergraduate student “still” doing this stuff where I attended college) ● Graduated recently ● First time conference presenter ● Very experienced with Xilinx FPGA (Spartan-II through 7 Series FPGA) ● Fluent in Verilog / VHDL design and verification ● Interest / design experience with external communication interfaces (PCI / PCIe) and external memory interfaces (SDRAM / DDR3 SDRAM) ● Developed a simple DMA engine for PCI I/F validation w/Windows WDM (Windows Driver Model) kernel device driver ● Almost all the knowledge I have is self taught (university engineering classes were not very useful) 09/21/2017 XDC2017 3 Motivations Behind My Work ● General difficulty in obtaining meaningful employment in the digital hardware design field (too many students in the field, difficulty obtaining internship, etc.) ● Collects and repairs abandoned computer hardware (It’s like rescuing puppies!) ● Owns 100+ desktop computers and 20+ laptop computers (mostly abandoned old stuff I -

Intel® Atom™ Processor E6x5c Series-Based Platform for Embedded Computing

PlAtfOrm brief Intel® Atom™ Processor E6x5C Series Embedded Computing Intel® Atom™ Processor E6x5C Series-Based Platform for Embedded Computing Platform Overview Available with industrial and commercial The Intel® Atom™ processor E6x5C series temperature ranges, this processor series delivers, in a single package, the benefits of provides embedded lifecycle support and the Intel® Atom™ processor E6xx combined is supported by the broad Intel® archi- with a Field-Programmable Gate Array tecture ecosystem as well as standard (FPGA) from Altera. This series offers Altera development tools. Additionally, a exceptional flexibility to incorporate a compatible, dedicated Power Management wide range of standard and user-defined Integrated Circuit (PMIC) solution may be I/O interfaces, high-speed connectivity, obtained from leading PMIC suppliers to memory interfaces, and process accelera- help minimize platform part count and tion to meet the requirements of a variety reduce bill of material costs and design of embedded applications in industrial, medi- complexity. Options include separate PMIC cal, communication, vision systems, voice and clock generator chips (available from over Internet protocol (VoIP), military, high- ROHM Co., Ltd.) or a single-chip solution performance programmable logic control- that integrates the voltage regulator and lers (PLCs) and embedded computers. clock generator (available from Dialog Semiconductor). The Intel Atom processor E6x5C series is a multi-chip, single-package device that Product Highlights reduces board footprint, lowers compo- • Single-package: A compact 37.5 x nent count, and simplifies inventory 37.5 mm, 0.8 mm ball pitch, multi-chip control and manufacturing. This compact device internally connects the Intel Atom design offers single-vendor support while processor E6xx with a user-programma- providing Intel Atom processors for ble FPGA. -

Intel Atom® Processor C2000 Product Family for Microservers

Intel® Atom™ Processor C2000 Product Family for Microservers PRODUCT BRIEF Maximize Efficiency for Your Lightweight Scale-Out Workloads Extreme Density and Energy-Efficiency for Low-End, Scale-Out Workloads With a need to rapidly deliver new services, cope with massive data growth, and contain costs, cloud service providers and hosters seek increasingly efficient ways to handle the demands on their infrastructure. Today’s servers based on Intel® Xeon® processors provide leadership performance and performance per watt with the flexibility to handle a wide range of workloads and peak demands. 2nd generation 64-bit However, certain lightweight, scale-out workloads—such as basic dedicated hosting, low-end static Intel® Atom™ Processor web serving, and simple content delivery can sometimes be hosted more efficiently on larger C2000 Product Family numbers of smaller servers built for extreme power efficiency. for microservers To address this need, Intel worked with a broad giving you the flexibility to right-size your infra- • Up to 7x higher7 perfor- ecosystem of leading server manufacturers to structure without limiting software mobility and mance, up to 6x better8 develop and deliver a variety of extreme low-power interoperability as your applications evolve. systems to support an emerging server category— performance/watt Optimized Platform Support microservers. With up to a 1,000 nodes1 per Intel provides complete microserver platform 9 rack and shared power, cooling, and networking • 6-20 watt TDP solutions that simplify implementation, improve resources, microservers can help you improve data overall efficiency and enable higher node density. • Up to 1000+ server center efficiency by right-sizing infrastructure for New innovations include: 10 relatively light processing requirements. -

GPU Developments 2018

GPU Developments 2018 2018 GPU Developments 2018 © Copyright Jon Peddie Research 2019. All rights reserved. Reproduction in whole or in part is prohibited without written permission from Jon Peddie Research. This report is the property of Jon Peddie Research (JPR) and made available to a restricted number of clients only upon these terms and conditions. Agreement not to copy or disclose. This report and all future reports or other materials provided by JPR pursuant to this subscription (collectively, “Reports”) are protected by: (i) federal copyright, pursuant to the Copyright Act of 1976; and (ii) the nondisclosure provisions set forth immediately following. License, exclusive use, and agreement not to disclose. Reports are the trade secret property exclusively of JPR and are made available to a restricted number of clients, for their exclusive use and only upon the following terms and conditions. JPR grants site-wide license to read and utilize the information in the Reports, exclusively to the initial subscriber to the Reports, its subsidiaries, divisions, and employees (collectively, “Subscriber”). The Reports shall, at all times, be treated by Subscriber as proprietary and confidential documents, for internal use only. Subscriber agrees that it will not reproduce for or share any of the material in the Reports (“Material”) with any entity or individual other than Subscriber (“Shared Third Party”) (collectively, “Share” or “Sharing”), without the advance written permission of JPR. Subscriber shall be liable for any breach of this agreement and shall be subject to cancellation of its subscription to Reports. Without limiting this liability, Subscriber shall be liable for any damages suffered by JPR as a result of any Sharing of any Material, without advance written permission of JPR. -

The ASCI Red TOPS Supercomputer

The ASCI Red TOPS Supercomputer http://www.sandia.gov/ASCI/Red/RedFacts.htm The ASCI Red TOPS Supercomputer Introduction The ASCI Red TOPS Supercomputer is the first step in the ASCI Platforms Strategy, which is aimed at giving researchers the five-order-of-magnitude increase in computing performance over current technology that is required to support "full-physics," "full-system" simulation by early next century. This supercomputer, being installed at Sandia National Laboratories, is a massively parallel, MIMD computer. It is noteworthy for several reasons. It will be the world's first TOPS supercomputer. I/O, memory, compute nodes, and communication are scalable to an extreme degree. Standard parallel interfaces will make it relatively simple to port parallel applications to this system. The system uses two operating systems to make the computer both familiar to the user (UNIX) and non-intrusive for the scalable application (Cougar). And it makes use of Commercial Commodity Off The Shelf (CCOTS) technology to maintain affordability. Hardware The ASCI TOPS system is a distributed memory, MIMD, message-passing supercomputer. All aspects of this system architecture are scalable, including communication bandwidth, main memory, internal disk storage capacity, and I/O. Artist's Concept The TOPS Supercomputer is organized into four partitions: Compute, Service, System, and I/O. The Service Partition provides an integrated, scalable host that supports interactive users, application development, and system administration. The I/O Partition supports a scalable file system and network services. The System Partition supports system Reliability, Availability, and Serviceability (RAS) capabilities. Finally, the Compute Partition contains nodes optimized for floating point performance and is where parallel applications execute. -

Intel Quartus Prime Pro Edition User Guide: Programmer Send Feedback

Intel® Quartus® Prime Pro Edition User Guide Programmer Updated for Intel® Quartus® Prime Design Suite: 21.2 Subscribe UG-20134 | 2021.07.21 Send Feedback Latest document on the web: PDF | HTML Contents Contents 1. Intel® Quartus® Prime Programmer User Guide..............................................................4 1.1. Generating Primary Device Programming Files........................................................... 5 1.2. Generating Secondary Programming Files................................................................. 6 1.2.1. Generating Secondary Programming Files (Programming File Generator)........... 7 1.2.2. Generating Secondary Programming Files (Convert Programming File Dialog Box)............................................................................................. 11 1.3. Enabling Bitstream Security for Intel Stratix 10 Devices............................................ 18 1.3.1. Enabling Bitstream Authentication (Programming File Generator)................... 19 1.3.2. Specifying Additional Physical Security Settings (Programming File Generator).............................................................................................. 21 1.3.3. Enabling Bitstream Encryption (Programming File Generator).........................22 1.4. Enabling Bitstream Encryption or Compression for Intel Arria 10 and Intel Cyclone 10 GX Devices.................................................................................................. 23 1.5. Generating Programming Files for Partial Reconfiguration......................................... -

A Superscalar Out-Of-Order X86 Soft Processor for FPGA

A Superscalar Out-of-Order x86 Soft Processor for FPGA Henry Wong University of Toronto, Intel [email protected] June 5, 2019 Stanford University EE380 1 Hi! ● CPU architect, Intel Hillsboro ● Ph.D., University of Toronto ● Today: x86 OoO processor for FPGA (Ph.D. work) – Motivation – High-level design and results – Microarchitecture details and some circuits 2 FPGA: Field-Programmable Gate Array ● Is a digital circuit (logic gates and wires) ● Is field-programmable (at power-on, not in the fab) ● Pre-fab everything you’ll ever need – 20x area, 20x delay cost – Circuit building blocks are somewhat bigger than logic gates 6-LUT6-LUT 6-LUT6-LUT 3 6-LUT 6-LUT FPGA: Field-Programmable Gate Array ● Is a digital circuit (logic gates and wires) ● Is field-programmable (at power-on, not in the fab) ● Pre-fab everything you’ll ever need – 20x area, 20x delay cost – Circuit building blocks are somewhat bigger than logic gates 6-LUT 6-LUT 6-LUT 6-LUT 4 6-LUT 6-LUT FPGA Soft Processors ● FPGA systems often have software components – Often running on a soft processor ● Need more performance? – Parallel code and hardware accelerators need effort – Less effort if soft processors got faster 5 FPGA Soft Processors ● FPGA systems often have software components – Often running on a soft processor ● Need more performance? – Parallel code and hardware accelerators need effort – Less effort if soft processors got faster 6 FPGA Soft Processors ● FPGA systems often have software components – Often running on a soft processor ● Need more performance? – Parallel -

EDN Magazine, December 17, 2004 (.Pdf)

ᮋ HE BEST 100 PRODUCTS OF 2004 encompass a range of architectures and technologies Tand a plethora of categories—from analog ICs to multimedia to test-and-measurement tools. All are innovative, but, of the thousands that manufacturers announce each year and the hundreds that EDN reports on, only about 100 hot products make our readers re- ally sit up and take notice. Here are the picks from this year's crop. We present the basic info here. To get the whole scoop and find out why these products are so compelling, go to the Web version of this article on our Web site at www.edn.com. There, you'll find links to the full text of the articles that cover these products' dazzling features. ANALOG ICs Power Integrations COMMUNICATIONS NetLogic Microsystems Analog Devices LNK306P Atheros Communications NSE5512GLQ network AD1954 audio DAC switching power converter AR5005 Wi-Fi chip sets search engine www.analog.com www.powerint.com www.atheros.com www.netlogicmicro.com D2Audio Texas Instruments Fulcrum Microsystems Parama Networks XR125 seven-channel VCA8613 FM1010 six-port SPI-4,2 PNI8040 add-drop module eight-channel VGA switch chip multiplexer www.d2audio.com www.ti.com www.fulcrummicro.com www.paramanet.com International Rectifier Wolfson Microelectronics Motia PMC-Sierra IR2520D CFL ballast WM8740 audio DAC Javelin smart-antenna IC MSP2015, 2020, 4000, and power controller www.wolfsonmicro.com www.motia.com 5000 VoIP gateway chips www.irf.com www.pmc-sierra.com www.edn.com December 17, 2004 | edn 29 100 Texas Instruments Intel DISCRETE SEMICONDUCTORS -

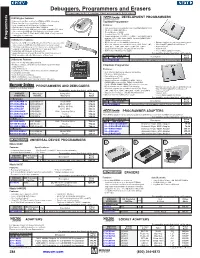

Debuggers, Programmers and Erasers Products May Be Rohs Compliant

Debuggers, Programmers and Erasers Products may be RoHS compliant. Check mouser.com for RoHS status. USB2Wiggler Features: DEVELOPMENT PROGRAMMERS • Universal Serial Bus interface for JTAG and BDM debugging TopMaxII Programmer • Faster than the classic parallel port Wiggler • Fully compatible with all Macraigor Systems software Features: • Operates up to Hi-Speed USB rates (480Mb/s) • High speed device programmer and TTL/LOGIC/DRAM tester • One side interfaces to USB port of host IBM compatible PC, other • PC driven: USB 2.0 interface side connects to OCD (On-Chip Debug) port of target system • Device libraries > 18000 • Port may be JTAG, E-JTAG, OnCE, COP, BDM, or any of several • Standard 48-pin DIL ZIF socket other types of connections • Supported devices: EPROM, EEPROM, FLASH (NOR/NAND) Programmers memory, PLD, FPGA, Serial PROM, Parallel PROM, CMOS USB2Demon Features: PROM, PIC and microcontrollers • Interfaces to USB 1.1 or USB 2.0 port of host PC on one side, other • B/P/V takes 65 sec. for 64Mbit flash memory • External start key for auto programming mode side connects to OCD (On-Chip Debug) port on target system • All socket adapters are available in stock (USA): PLCC, SOP, • Win 95/98/NT/2000/XP/Vista/Windows 7 • Simultaneously debugs up to 255 devices on a single scan chain SOIC, QFP, TSOP, SON, BGA, TSSOP, and TSOPII • Approved by CE • Supports configurable JTAG/BDM clock rates up to 20MHz • Supported low-voltages: 1.8/2.0/2.7/3.0/3.3/5.0 volt • Made in USA • Compatible with Windows and Linux hosts • Built-in AC (110-240) power supply • Free software update for lifetime • Supported versions of Linux: Red Hat 7.2-9 and Fedora Core 2 For quantities greater than listed, call for quote. -

Intel® Quartus® Prime Design Suite Version 18.1 Update Release Notes

Intel® Quartus® Prime Design Suite Version 18.1 Update Release Notes Updated for Intel® Quartus® Prime Design Suite: 18.1.1 Standard Edition Subscribe RN-01080-18.1.1.0 | 2019.04.17 Send Feedback Latest document on the web: PDF | HTML Contents Contents 1. Intel® Quartus® Prime Design Suite Version 18.1 Update Release Notes........................ 3 2. Issues Addressed in Update 1......................................................................................... 4 2.1. Intel Quartus Prime Pro Edition Software.................................................................. 4 2.2. Intel Quartus Prime Standard Edition Software.......................................................... 7 2.3. IP and IP Cores..................................................................................................... 8 2.4. DSP Builder for Intel FPGAs...................................................................................12 2.5. Intel High Level Synthesis Compiler........................................................................12 2.6. Intel FPGA SDK for OpenCL*................................................................................. 13 3. Issues Addressed in Update 2....................................................................................... 15 3.1. Intel Quartus Prime Pro Edition Software.................................................................15 3.2. IP and IP Cores................................................................................................... 15 3.3. Intel FPGA SDK for OpenCL.................................................................................. -

2017 HPC Annual Report Team Would Like to Acknowledge the Invaluable Assistance Provided by John Noe

sandia national laboratories 2017 HIGH PERformance computing The 2017 High Performance Computing Annual Report is dedicated to John Noe and Dino Pavlakos. Building a foundational framework Editor in high performance computing Yasmin Dennig Contributing Writers Megan Davidson Sandia National Laboratories has a long history of significant contributions to the high performance computing Mattie Hensley community and industry. Our innovative computer architectures allowed the United States to become the first to break the teraflop barrier—propelling us to the international spotlight. Our advanced simulation and modeling capabilities have been integral in high consequence US operations such as Operation Burnt Frost. Strong partnerships with industry leaders, such as Cray, Inc. and Goodyear, have enabled them to leverage our high performance computing capabilities to gain a tremendous competitive edge in the marketplace. Contributing Editor Laura Sowko As part of our continuing commitment to provide modern computing infrastructure and systems in support of Sandia’s missions, we made a major investment in expanding Building 725 to serve as the new home of high performance computer (HPC) systems at Sandia. Work is expected to be completed in 2018 and will result in a modern facility of approximately 15,000 square feet of computer center space. The facility will be ready to house the newest National Nuclear Security Administration/Advanced Simulation and Computing (NNSA/ASC) prototype Design platform being acquired by Sandia, with delivery in late 2019 or early 2020. This new system will enable continuing Stacey Long advances by Sandia science and engineering staff in the areas of operating system R&D, operation cost effectiveness (power and innovative cooling technologies), user environment, and application code performance.