DirectX Chas Boyd

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


DRAFT - DATED MATERIAL THE CONTENTS OF THIS REVIEWER’S GUIDE IS INTENDED SOLELY FOR REFERENCE WHEN REVIEWING Rev A VERSIONS OF VOODOO5 5500 REFERENCE BOARDS

Voodoo5™ 5500 for the PC Reviewer’s Guide DRAFT - DATED MATERIAL THE CONTENTS OF THIS REVIEWER’S GUIDE IS INTENDED SOLELY FOR REFERENCE WHEN REVIEWING Rev A VERSIONS OF VOODOO5 5500 REFERENCE BOARDS. THIS INFORMATION WILL BE REGULARLY UPDATED, AND REVIEWERS SHOULD CONTACT THE PERSONS LISTED IN THIS GUIDE FOR UPDATES BEFORE EVALUATING ANY VOODOO5 5500 BOARD. 3dfx Interactive, Inc. 4435 Fortran Dr. San Jose, CA 95134 408-935-4400 Brian Burke 214-570-2113 [email protected] PT Barnum 214-570-2226 [email protected] Bubba Wolford 281-578-7782 [email protected] www.3dfx.com www.3dfxgamers.com Copyright 2000 3dfx Interactive, Inc. All Rights Reserved. All other trademarks are the property of their respective owners. Visit the 3dfx Virtual Press Room at http://www.3dfx.com/comp/pressweb/index.html. Voodoo5 5500 Reviewers Guide xxxx 2000 Table of Contents Benchmarking the Voodoo5 5500 Page 5 • Cures for common benchmarking and image quality mistakes • Tips for benchmarking • Overclocking Guide INTRODUCTION Page 11 • Optimized for DVD Acceleration • Scalable Performance • Trend-setting Image Quality Features • Texture Compression • Glide 2.x and 3.x Compatibility: The Most Titles • World-class Windows GUI/Video Acceleration SECTION 1: Voodoo5 5500 Board Overview Page 12 • General Features • 3D Feature Set • 2D Feature Set • Voodoo5 Video Subsystem • SLI • Texture compression • Fill Rate vs T&L • T-Buffer introduction • Alternative APIs (Al Reyes on Mac, Linux, BeOS and more) • Pricing & Availability • Warranty • Technical Support • Photos, screenshots, -

Rasterized Color

US006825851B1 (12) United States Patent (10) Patent No.: US 6,825,851 B1 Leather (45) Date of Patent: Nov. 30, 2004 (54) METHOD AND APPARATUS FOR ENVIRONMENT-MAPPED BUMP-MAPPING IN A GRAPHICS SYSTEM (75) Inventor: Mark M. Leather, Saratoga, CA (US) (73) Assignee: Nintendo Co., Ltd., Kyoto (JP) (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 369 days. (21) Appl. No.: 09/722,381 (22) Filed: Nov. 28, 2000 Related U.S. Application Data (60) Provisional application No. 60/226,893, filed on Aug. 23, 2000. (51) Int. Cl.7: G09G 5/00; G06T 15/60 (52) U.S. Cl.: 345/584; 345/581; 345/426; 345/848 (58) Field of Search: 345/426, 581-582, 584, 501, 506, 427, 522, 848 (74) Attorney, Agent, or Firm: Nixon & Vanderhye PC (57) ABSTRACT A graphics system including a custom graphics and audio processor produces exciting 2D and 3D graphics and surround sound. The system includes a graphics and audio processor including a 3D graphics pipeline and an audio digital signal processor. Realistic looking surfaces on rendered images are generated by EMBM using an indirect texture lookup to a “bump map” followed by an environment or light mapping. Apparatus and example methods for environment-mapped style of bump-mapping (EMBM) are provided that use a pre-completed bump-map texture accessed as an indirect texture along with pre-computed object surface normals (i.e., the Normal, Tangent and Binormal vectors) from each vertex of rendered polygons to effectively generate a new perturbed Normal vector per vertex. -

3Dfx Oral History Panel Gordon Campbell, Scott Sellers, Ross Q. Smith, and Gary M. Tarolli

3dfx Oral History Panel Gordon Campbell, Scott Sellers, Ross Q. Smith, and Gary M. Tarolli Interviewed by: Shayne Hodge Recorded: July 29, 2013 Mountain View, California CHM Reference number: X6887.2013 © 2013 Computer History Museum 3dfx Oral History Panel Shayne Hodge: OK. My name is Shayne Hodge. This is July 29, 2013 at the afternoon in the Computer History Museum. We have with us today the founders of 3dfx, a graphics company from the 1990s of considerable influence. From left to right on the camera-- I'll let you guys introduce yourselves. Gary Tarolli: I'm Gary Tarolli. Scott Sellers: I'm Scott Sellers. Ross Smith: Ross Smith. Gordon Campbell: And Gordon Campbell. Hodge: And so why don't each of you take about a minute or two and describe your lives roughly up to the point where you need to say 3dfx to continue describing them. Tarolli: All right. Where do you want us to start? Hodge: Birth. Tarolli: Birth. Oh, born in New York, grew up in rural New York. Had a pretty uneventful childhood, but excelled at math and science. So I went to school for math at RPI [Rensselaer Polytechnic Institute] in Troy, New York. And there is where I met my first computer, a good old IBM mainframe that we were just talking about before [this taping], with punch cards. So I wrote my first computer program there and sort of fell in love with computer. So I became a computer scientist really. So I took all their computer science courses, went on to Caltech for VLSI engineering, which is where I met some people that influenced my career life afterwards. -

(12) United States Patent (10) Patent No.: US 7,003,588 B1

US007003588B1 (12) United States Patent (10) Patent No.: US 7,003,588 B1 Takeda et al. (45) Date of Patent: Feb. 21, 2006 (54) PERIPHERAL DEVICES FOR A VIDEO GAME SYSTEM (75) Inventors: Genyo Takeda, Kyoto (JP); Ko Shiota, Kyoto (JP); Munehito Oira, Kyoto (JP); Satoshi Nishiumi, Kyoto (JP) (73) Assignee: Nintendo Co., Ltd., Kyoto (JP) (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 0 days. (21) Appl. No.: 10/225,488 (22) Filed: Aug. 22, 2002 Related U.S. Application Data (60) Provisional application No. 60/313,812, filed on Aug. 22, 2001. (51) Int. Cl. A63F 13/00 (2006.01) (52) U.S. Cl. ... (58) Field of Classification Search: 710/100 References cited: 4,567,516 A 1/1986 Scherer et al. 358/114; 4,570,233 A 2/1986 Yan et al.; 4,589,089 A 5/1986 Frederiksen 364/900; 4,592,012 A 5/1986 Braun 364/900; 4,725,831 A 2/1988 4/1987 Coleman; 4,829,295 4,799,635 A 5/1989 1/1989 Hiroyuki Nakagawa 364/900; 4,837,488 A 6/1989 Donahue 324/66; 4,850,591 A 7/1989 Takezawa et al. 273/85 (Continued) FOREIGN PATENT DOCUMENTS CA 2070934 12/1993 (Continued) OTHER PUBLICATIONS “Pinouts for Various Connectors in Real Life (tm)”, [online], retrieved from the Internet, http://repairfaq.ece.drexel.edu/REPAIR/F, Pinoutsl.html, updated May 20, 1997 (per document). (Continued) Primary Examiner: Christopher Shin (74) Attorney, Agent, or Firm: Nixon & Vanderhye, P.C. -

Linux Hardware Compatibility HOWTO

Linux Hardware Compatibility HOWTO Steven Pritchard Southern Illinois Linux Users Group / K&S Pritchard Enterprises, Inc. <[email protected]> 3.2.4 Copyright © 2001−2007 Steven Pritchard Copyright © 1997−1999 Patrick Reijnen 2007−05−22 This document attempts to list most of the hardware known to be either supported or unsupported under Linux. Copyright This HOWTO is free documentation; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 2 of the license, or (at your option) any later version. Linux Hardware Compatibility HOWTO Table of Contents 1. Introduction (1); 1.1. Notes on binary−only drivers (1); 1.2. Notes on proprietary drivers (1); 1.3. System architectures (1); 1.4. Related sources of information (2); 1.5. Known problems with this document (2); 1.6. New versions of this document (2) -

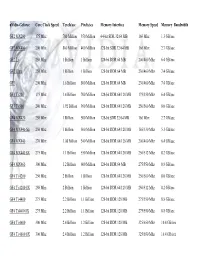

Nvidia-Geforce GF2 MX200 GF2 MX400 GF2 Ti GF2 Ultra

| nVidia-Geforce | Core Clock Speed | Texels/sec | Pixels/sec | Memory Interface | Memory | Memory Speed | Memory Bandwidth |
|---|---|---|---|---|---|---|---|
| GF2 MX200 | 175 MHz | 700 Million | 350 Million | 64-bit SDR | 32/64 MB | 166 MHz | 1.3 GB/sec |
| GF2 MX400 | 200 MHz | 800 Million | 400 Million | 128-bit SDR | 32/64 MB | 166 MHz | 2.7 GB/sec |
| GF2 Ti | 250 MHz | 1 Billion | 1 Billion | 128-bit DDR | 64 MB | 200/400 MHz | 6.4 GB/sec |
| GF2 Ultra | 250 MHz | 1 Billion | 1 Billion | 128-bit DDR | 64 MB | 230/460 MHz | 7.4 GB/sec |
| GF3 | 200 MHz | 1.6 Billion | 800 Million | 128-bit DDR | 64 MB | 230/460 MHz | 7.4 GB/sec |
| GF3 Ti 200 | 175 MHz | 1.4 Billion | 700 Million | 128-bit DDR | 64/128 MB | 175/350 MHz | 6.4 GB/sec |
| GF3 Ti 500 | 240 MHz | 1.92 Billion | 960 Million | 128-bit DDR | 64/128 MB | 250/500 MHz | 8.0 GB/sec |
| GF4 MX420 | 250 MHz | 1 Billion | 500 Million | 128-bit SDR | 32/64 MB | 166 MHz | 2.7 GB/sec |
| GF4 MX440-SE | 250 MHz | 1 Billion | 500 Million | 128-bit DDR | 64/128 MB | 165/330 MHz | 5.3 GB/sec |
| GF4 MX440 | 270 MHz | 1.08 Billion | 540 Million | 128-bit DDR | 64/128 MB | 200/400 MHz | 6.4 GB/sec |
| GF4 MX440 8X | 275 MHz | 1.1 Billion | 550 Million | 128-bit DDR | 64/128 MB | 256/512 MHz | 8.2 GB/sec |
| GF4 MX460 | 300 MHz | 1.2 Billion | 600 Million | 128-bit DDR | 64 MB | 275/550 MHz | 8.8 GB/sec |
| GF4 Ti 4200 | 250 MHz | 2 Billion | 1 Billion | 128-bit DDR | 64/128 MB | 250/500 MHz | 8.0 GB/sec |
| GF4 Ti 4200 8X | 250 MHz | 2 Billion | 1 Billion | 128-bit DDR | 64/128 MB | 256/512 MHz | 8.2 GB/sec |
| GF4 Ti 4400 | 275 MHz | 2.2 Billion | 1.1 Billion | 128-bit DDR | 128 MB | 275/550 MHz | 8.8 GB/sec |
| GF4 Ti4400 8X | 275 MHz | 2.2 Billion | 1.1 Billion | 128-bit DDR | 128 MB | 275/550 MHz | 8.8 GB/sec |
| GF4 Ti 4600 | 300 MHz | 2.4 Billion | 1.2 Billion | 128-bit DDR | 128 MB | 325/650 MHz | 10.4 GB/sec |

GF4 Ti 4600 8X 300 MHz 2.4 -
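Most of the memory bandwidth figures in the listing above are consistent with a simple relationship: bus width in bytes multiplied by the effective memory transfer rate, where DDR memory transfers twice per base clock. The following Python sketch illustrates that arithmetic under that assumption; the function name and parameters are hypothetical and do not come from the source document.

```python
def memory_bandwidth_gb_per_sec(bus_width_bits, base_clock_mhz, ddr=False):
    """Estimate peak memory bandwidth in GB/sec (1 GB = 10**9 bytes).

    Assumption (not stated in the source): bandwidth equals the bus width
    in bytes times the number of transfers per second, and DDR memory
    performs two transfers per base clock cycle.
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_sec = base_clock_mhz * 1_000_000 * (2 if ddr else 1)
    return bytes_per_transfer * transfers_per_sec / 1_000_000_000


# A few rows taken from the listing: (name, bus bits, base clock MHz, DDR?)
rows = [
    ("GF2 MX200", 64, 166, False),
    ("GF2 MX400", 128, 166, False),
    ("GF2 Ti", 128, 200, True),
    ("GF4 Ti 4600", 128, 325, True),
]

for name, bits, clock, is_ddr in rows:
    estimate = memory_bandwidth_gb_per_sec(bits, clock, is_ddr)
    print(f"{name}: {estimate:.1f} GB/sec")
```

For these four rows the estimates round to 1.3, 2.7, 6.4 and 10.4 GB/sec, which agrees with the listed values. This is a peak theoretical figure only; it says nothing about sustained throughput.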

Video Adapters and Accelerators

Bigelow / Troubleshooting, Maintaining & Repairing PCs / Bigelow / 2686-8 / Chapter 43. VIDEO ADAPTERS AND ACCELERATORS. CHAPTER AT A GLANCE: Understanding Conventional Video Adapters 1515 (Text vs. Graphics; ROM BIOS (Video BIOS)). Reviewing Video Display Hardware 1517 (MDA (Monochrome Display Adapter, 1981); CGA (Color Graphics Adapter, 1981); EGA (Enhanced Graphics Adapter, 1984); PGA (Professional Graphics Adapter, 1984); MCGA (Multi-Color Graphics Array, 1987); VGA (Video Graphics Array, 1987); 8514 (1987); SVGA (Super Video Graphics Array); XGA (1990)). Understanding Graphics Accelerators 1522 (Video Speed Factors). 3D Graphics Accelerator Issues 1531 (The 3D Process; Key 3D Speed Issues; Improving 3D Performance Through Hardware). Understanding DirectX 1536 (Pieces of a Puzzle; More on DirectDraw; More on DirectSound; More on DirectInput; More on Direct3D; More on DirectPlay; Determining the Installed Version of DirectX). Replacing/Updating a Video Adapter 1540 (Removing the Old Device Drivers; Exchanging the Video Adapter; Installing the New Software; Checking the Installation). Video Feature Connectors 1543 (AMI Multimedia Channel). AGP Overclocking 1547 (AGP and BIOS Settings). Troubleshooting Video Adapters 1549 (Isolating the Problem Area; Multiple Display Support Guide; Unusual Hardware Issues; Award Video BIOS Glitch; Video Symptoms). Further Study 1570. The monitor itself is merely an output device, a “peripheral”, that translates synchronized analog or TTL (Transistor to Transistor Logic) video signals into a visual -

What We Will Cover

What we will cover: Contour Tracking, Surface Rendering, Direct Volume Rendering, Isosurface Rendering, Optimizing DVR, Pre-Integrated DVR, Splatting, Unstructured Volume Rendering, GPU-based Volume Rendering, Rendering using Multi-GPU. History of Multi-Processor Rendering: In 1993 SGI announced the Onyx series of graphics servers, the first commercial system with a multi-processor graphics subsystem; it cost up to a million dollars. 3DFx Interactive (1995) introduced the first gaming accelerator, called 3DFx Voodoo Graphics. The Voodoo Graphics chipset consisted of two chips: the Frame Buffer Interface (FBI) was responsible for the frame buffer and the Texture Mapping Unit (TMU) processed textures. The chipset could scale the performance up by adding more TMUs, up to three TMUs per one FBI. In early 1998 3dfx introduced its next chipset, Voodoo2, the first mass product to officially support the option of increasing the performance by uniting two Voodoo2-based graphics cards with SLI (Scan-Line Interleave) technology. The cost of the system was too high. In 1999 NVIDIA released its new graphics chip TNT2, whose Ultra version could challenge the speed of the Voodoo2 SLI but was less expensive. 3DFx Interactive was devoured by NVIDIA in 2000. Voodoo, Voodoo 2 SLI, Voodoo 5 SLI. Rendering: Voodoo 5 5500 - Two Single Board SLI. History of Multi-Processor Rendering: ATI Technologies (1999) MAXX: put two chips on one PCB and make them render different frames simultaneously and then output the frames on the screen alternately. Failed due to technical issues (sync and performance problems). NVIDIA SLI (200?): Scalable Link Interface, a Voodoo2-style dual board implementation. 3Dfx SLI was PCI based and had nowhere near the bandwidth of the PCIe used by NVIDIA SLI. -

GPU Multiprocessing

GPU multiprocessing Manuel Ujaldón Martínez Computer Architecture Department University of Malaga (Spain) Outline 1. Multichip solutions [10 slides] 2. Multicard solutions [2 slides] 3. Multichip + multicard [3] 4. Performance on matrix decompositions [2] 5. CUDA programming [5] 6. Scalability on 3DFD [4] A world of possibilities From lower to higher cost, we have: 1. Multichip: Voodoo5 (3Dfx), 3D1 (Gigabyte). 2. Multicard: SLI (Nvidia) / CrossFire (ATI). 3. Combination: Two chips/card and/or two cards/connector. Evans & Sutherland (2004) Gigabyte (2005) NVIDIA (2007) ATI (2007) NVIDIA (2008) 3 I. Multichip solutions 4 First choice: Multichip. A retrospective: Voodoo 5 Rage Fury 5500 Maxx 3Dfx ATI (1999) (2000) Volari V8 2 Rad9800 Duo (prototype) XGI Sapphire (2002) (2003) 5 First choice: Multichip. Example 1: 3D1 (Gigabyte - 2005). A double GeForce 6600GT GPU on the same card (December 2005). Each GPU endowed with 128 MB of memory and a 128-bit bus width. 6 First choice: Multichip. Example 2: GeForce 7950 GX2 (Nvidia – 2006) 7 First choice: Multichip. Example 3: GeForce 9800 GX2 (Nvidia - 2008). Double GeForce 8800 GPU, double printed circuit board and double video memory of 512 MB. A single PCI-express connector. 8 First choice: Multichip. 3D1 (Gigabyte). Cost and performance 3DMark 2003 3DMark 2005 Card 1024x768 1600x1200 1024x768 1600x1200 GeForce 6600 GT 8234 2059 3534 2503 3D1 using a single GPU 8529 2063 3572 2262 GeForce 6800 GT 11493 3846 4858 3956 GeForce 6600 GT SLI 14049 3924 6122 3542 3D1 using two GPUs 14482 4353 6307 3609 Cost: row 3 > row 4 > row 5 > row 1 > row 1 9 First choice: Multichip. -

(12) United States Patent (10) Patent No.: US 7,538,772 B1 Fouladi et al.

US007538772B1 (12) United States Patent (10) Patent No.: US 7,538,772 B1 Fouladi et al. (45) Date of Patent: May 26, 2009 (54) GRAPHICS PROCESSING SYSTEM WITH ENHANCED MEMORY CONTROLLER (75) Inventors: Farhad Fouladi, Los Altos Hills, CA (US); Winnie W. Yeung, San Jose, CA (US); Howard Cheng, Sammamish, WA (US) (73) Assignee: Nintendo Co., Ltd., Kyoto (JP) (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 1082 days. (21) Appl. No.: 09/726,220 (22) Filed: Nov. 28, 2000 Related U.S. Application Data (60) Provisional application No. 60/226,894, filed on Aug. ... (51) Int. Cl. G06F 13/18 (2006.01); G09G 5/39 (2006.01); G06F 13/00 (2006.01) (52) U.S. Cl.: 345/535; 345/531; 711/100 (58) Field of Search: ... 711/154 ... References cited: 4,463,380 A 7/1984 Hooks, Jr.; 4,491,836 A 1/1985 Collmeyer et al.; 4,570,233 A 2/1986 Yan et al. (Continued) FOREIGN PATENT DOCUMENTS CA 2070934 12/1993 (Continued) OTHER PUBLICATIONS Photograph of Sony PlayStation II System. (Continued) Primary Examiner: Kee M Tung Assistant Examiner: Joni Hsu (74) Attorney, Agent, or Firm: Nixon & Vanderhye, PC (57) ABSTRACT A graphics system including a custom graphics and audio processor produces exciting 2D and 3D graphics and surround sound. The system includes a graphics and audio processor including a 3D graphics pipeline and an audio digital signal processor. A memory controller performs a wide range of memory control related functions including arbitrating between various competing resources seeking access to main memory, handling memory latency and bandwidth requirements of the resources requesting memory access, buffering writes to reduce bus turn around, refreshing main memory, and protecting main memory using programmable registers. -

Voodoo5™ 5500

Voodoo 5™ 5500 AGP 64MB Dual-Chip SLI 2D/3D Accelerator Preliminary Specifications Voodoo 5 5500 from 3dfx is the next stage in the evolution of ultra-high resolution gaming. Utilizing a revolutionary scalable architecture, the Voodoo 5 5500 features dual 3dfx VSA-100 processors for more 3D horsepower. Working in parallel, these processors combine to produce over 667 Megatexels per second to create extraordinary 3D worlds in vivid 32-bit color. Boasting state-of-the-art Real-Time Full-Scene HW Anti-Aliasing, the exclusive T-Buffer™ Digital Cinematic Effects engine, 64MB of graphics memory for top performance and support for 2D resolutions as high as 2048x1536, the Voodoo 5 5500 gives today’s PC gamers the raw 3D power and premium 2D performance they crave. Product Features • Fully-integrated 128-bit 2D/3D/Video Accelerator • 667-733 Megapixels/second • 64MB of Graphics Memory • 32-bit color rendering • Real-Time Full-Scene HW Anti-Aliasing • Exclusive T-Buffer™ Digital Cinematic Effects • 3dfx FXT1™ and DirectX® Texture Compression • 2K x 2K Textures • AGP with full sideband support • 350MHz RAMDAC for resolutions up to 2048 x 1536 • Windows 95, 98, NT4.0, Windows 2000 drivers • Fully software-compatible with 3dfx Voodoo3 Corporate Headquarters: 4435 Fortran Drive, San Jose CA 95134 Sales Division: 3400 Waterway Parkway, Richardson, TX 75080 Ph: 972.234.8750 Voodoo 5™ 5500 AGP 64MB Dual-Chip SLI 2D/3D Accelerator Preliminary Specifications 3D Acceleration • 4 fully-featured pixels/clock • DirectX® and FXT1™ Texture Compression support • Real-Time -


(12) United States Patent (10) Patent No.: US 7,701,461 B2 Fouladi et al.

US007701461B2 (12) United States Patent (10) Patent No.: US 7,701,461 B2 Fouladi et al. (45) Date of Patent: Apr. 20, 2010 (54) METHOD AND APPARATUS FOR BUFFERING GRAPHICS DATA IN A GRAPHICS SYSTEM (75) Inventors: Farhad Fouladi, Los Altos Hills, CA (US); Robert Moore, Heathrow, FL (US) (73) Assignee: Nintendo Co., Ltd., Kyoto (JP) (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 0 days. (21) Appl. No.: 11/709,750 (22) Filed: Feb. 23, 2007 (65) Prior Publication Data US 2007/0165043 A1 Jul. 19, 2007 Related U.S. Application Data (62) Division of application No. 09/726,215, filed on Nov. 28, 2000, now Pat. No. 7,196,710. FOREIGN PATENT DOCUMENTS EP 0 637 813 A2 2/1995; EP 1 074 945 2/2001 (Continued) OTHER PUBLICATIONS Torborg, John G.; “A Parallel Processor Architecture for Graphics Arithmetic Operations”; Computer Graphics, vol. 21, No. 4; Jul. 1987; pp. 197-204.* (Continued) Primary Examiner: Joni Hsu (74) Attorney, Agent, or Firm: Nixon & Vanderhye PC (57) ABSTRACT A graphics system including a custom graphics and audio processor produces exciting 2D and 3D graphics and surround sound. The system includes a graphics and audio processor including a 3D graphics pipeline and an audio digital signal processor. Techniques for efficiently buffering graphics data between a producer and a consumer within a low-cost graphics systems such as a 3D home video game overcome the problem that a small-sized FIFO buffer in the graphics hardware may not adequately load balance a producer and (60) Provisional application No.