IBM Data Center Networking Planning for Virtualization and Cloud Computing

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Understanding Full Virtualization, Paravirtualization, and Hardware Assist

VMware: Understanding Full Virtualization, Paravirtualization, and Hardware Assist. Contents: Introduction; Overview of x86 Virtualization; CPU Virtualization; The Challenges of x86 Hardware Virtualization; Technique 1 - Full Virtualization using Binary Translation; Technique 2 - OS Assisted Virtualization or Paravirtualization; Technique 3 - Hardware Assisted Virtualization; Memory Virtualization; Device and I/O Virtualization; Summarizing the Current State of x86 Virtualization Techniques; Full Virtualization with Binary Translation is the Most Established Technology Today; Hardware Assist is the Future of Virtualization, but the Real Gains Have -

Enabling Intel® Virtualization Technology Features and Benefits

WHITE PAPER Intel® Virtualization Technology Enterprise Server Enabling Intel® Virtualization Technology Features and Benefits Maximizing the benefits of virtualization with Intel’s new CPUs and chipsets EXECUTIVE SUMMARY Although virtualization has been accepted in most data centers, some users have not yet taken advantage of all the virtualization features available to them. This white paper describes the features available in Intel® Virtualization Technology (Intel® VT) that work with Intel’s new CPUs and chipsets, showing how they can benefit the end user and how to enable them. Intel® Virtualization Technology Feature Brief and Usage Model Intel VT combines with software-based virtualization solutions to provide maximum system utilization by consolidating multiple environments into a single server or PC. By abstracting the software away from the underlying hardware, a world of new usage models opens up that can reduce costs, increase management efficiency, and strengthen security, all while making your computing infrastructure more resilient in the event of a disaster. During the last four years, Intel has introduced several new features to Intel VT. Most of these features are well known, but others may not be. Goldberg. Thus, developers found it difficult to implement a virtual machine platform on the x86 architecture without significant overhead on the host machine. In 2005 and 2006, Intel and AMD, working independently, each resolved this by creating new processor extensions to the x86 architecture. Although the actual implementation of processor extensions differs between AMD and Intel, both achieve the same goal of allowing a virtual machine hypervisor to run an unmodified operating system without incurring significant emulation performance penalties. Intel VT is Intel’s hardware virtualization for -

PCI DSS Virtualization Guidelines

Standard: PCI Data Security Standard (PCI DSS). Version: 2.0. Date: June 2011. Author: Virtualization Special Interest Group, PCI Security Standards Council. Information Supplement: PCI DSS Virtualization Guidelines. Table of Contents: 1 Introduction; 1.1 Audience; 1.2 Intended Use; 2 Virtualization Overview; 2.1 Virtualization Concepts and Classes; 2.2 Virtual System Components and Scoping Guidance; 3 Risks for Virtualized Environments; 3.1 Vulnerabilities in the Physical Environment Apply in a Virtual Environment; 3.2 Hypervisor Creates New Attack Surface; 3.3 Increased Complexity of Virtualized Systems and Networks; 3.4 More Than One Function per Physical System; 3.5 Mixing VMs of -

Cloud Computing: a Taxonomy of Platform and Infrastructure-Level Offerings David Hilley College of Computing Georgia Institute of Technology

Cloud Computing: A Taxonomy of Platform and Infrastructure-level Offerings David Hilley College of Computing Georgia Institute of Technology April 2009 1 Introduction Cloud computing is a buzzword and umbrella term applied to several nascent trends in the turbulent landscape of information technology. Computing in the “cloud” alludes to ubiquitous and inexhaustible on-demand IT resources accessible through the Internet. Practically every new Internet-based service from Gmail [1] to Amazon Web Services [2] to Microsoft Online Services [3] to even Facebook [4] has been labeled a “cloud” offering, either officially or externally. Although cloud computing has garnered significant interest, factors such as unclear terminology, non-existent product “paper launches”, and opportunistic marketing have led to a significant lack of clarity surrounding discussions of cloud computing technology and products. The need for clarity is well-recognized within the industry [5] and by industry observers [6]. Perhaps more importantly, due to the relative infancy of the industry, currently-available product offerings are not standardized. Neither providers nor potential consumers really know what a “good” cloud computing product offering should look like and what classes of products are appropriate. Consequently, products are not easily comparable. The scopes of various product offerings differ and overlap in complicated ways – for example, Amazon’s EC2 service [7] and Google’s App Engine [8] partially overlap in scope and applicability. EC2 is more flexible but also lower-level, while App Engine subsumes some functionality in the Amazon Web Services suite of offerings [2] external to EC2. -

Oracle® Enterprise Manager Ops Center Security

Oracle® Enterprise Manager Ops Center Security 12c Release 4 (12.4.0.0.0) F17675-01 April 2019 Copyright © 2007, 2019, Oracle and/or its affiliates. All rights reserved. Primary Author: Krithika Gangadhar This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs. -

Virtualization and Shared Infrastructure Data Storage for IT in Kosovo Institutions Gani Zogaj

Rochester Institute of Technology RIT Scholar Works Theses Thesis/Dissertation Collections 2012 Virtualization and shared Infrastructure data storage for IT in Kosovo institutions Gani Zogaj Follow this and additional works at: http://scholarworks.rit.edu/theses Recommended Citation Zogaj, Gani, "Virtualization and shared Infrastructure data storage for IT in Kosovo institutions" (2012). Thesis. Rochester Institute of Technology. Accessed from This Master's Project is brought to you for free and open access by the Thesis/Dissertation Collections at RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. AMERICAN UNIVERSITY IN KOSOVO MASTER OF SCIENCE IN PROFESSIONAL STUDIES Virtualization and Shared Infrastructure Data Storage for IT in Kosovo institutions “Submitted as a Capstone Project Report in partial fulfillment of a Master of Science degree in Professional Studies at American University in Kosovo” By Gani ZOGAJ November, 2012 ACKNOWLEDGEMENTS First, I would like to thank God for giving me health for completing my Master’s Degree studies. I would like to express my gratitude to my supervisor of capstone proposal, Bryan, for his support and for giving me suggestions and recommendations throughout the Capstone Project work. Finally, I would like to thank my wife, Shkendije, and my lovely daughters, Elisa and Erona, who I love so much, for their understanding while I was preparing the Capstone Project and I couldn’t spend enough time with them. Table of Contents: Figures and Tables; List of Acronyms; Executive Summary; Chapter 1; 1. -

System Virtualization Support in Sun Java System Products

System Virtualization Support in Sun Java System Products October 2009 This document is maintained by the Sun Java System team. Software Products Covered by this Statement This document summarizes Sun support for Sun Java System products when used in conjunction with system virtualization products and features. It applies to Sun products contained in the following Sun Java System suites: ■ Sun GlassFish Portfolio ■ Sun Java Application Platform Suite ■ Sun Java Identity Management Suite ■ Sun Java Composite Application Platform Suite ■ Sun B2B Suite ■ Sun ESB Suite ■ Sun MDM Suite ■ Sun Java Web Infrastructure Suite ■ Sun Java Communications Suite Refer to the Sun Java Enterprise System (Java ES) and Communications Suite product pages for more information on these suites. The Sun Java Availability Suite and Solaris Cluster are not addressed in this support statement. Refer to the Solaris Cluster product information for further details on Solaris Cluster's support for operating system virtualization. Introduction A core capability of system virtualization offerings is the ability to execute multiple operating system (OS) instances on shared hardware. Functionally, an application deployed to an OS hosted in a virtualized environment is generally unaware that the underlying platform has been virtualized. Sun performs testing of its Sun Java System products on select system virtualization and OS combinations to help validate that the Sun Java System products continue to function on properly sized and configured virtualized environments as they do on non-virtualized systems. System Resource Sizing The combination of being able to deploy multiple OS instances and applications on a single system and the ease by which system resources can be allocated to OS instances increases the likelihood of realizing undersized environments for your applications. -

BRUNEL: Welcome to Another Episode of Getting the Most

BRUNEL: Welcome to another episode of Getting the Most Out of IBM U2. I'm Kenny Brunel, and I'm your host for today's episode, and today we're going to talk about IBM U2's latest technology, U2.NET. First of all, what is .NET? So I'm going to turn my attention, first of all, to a guest that I have in the studio with me today in Denver, Dave Peters. Dave is the product manager for the U2 data servers and the client tools, and Dave has been with IBM for over 12 years. What can you tell us about U2.NET? But first of all could you give us a brief rundown or a description of what .NET is itself.

PETERS: Sure, Kenny. .NET is really a development framework architected by Microsoft. This is the framework that Microsoft hopes that will be the development choice for any new development on Windows. It consists of user-defined interfaces, data access, database connectivity, cryptography, Web application development and communications. IBM has three options for U2 developers that want to access the U2 data servers using .NET. The first I'll mention is [UniObjects] for .NET or UO.NET. UO.NET is a MultiValue API for use in the .NET applications. It's very familiar within the constructs and was introduced to help Basic programmers get to .NET very easily. And one good thing is it's simple to get. UO.NET is available on the client CD that comes with either UniVerse or UniData. The second option is, the long name is IBM database add-ins for Visual Studio or as we refer to it as IBM .NET. -

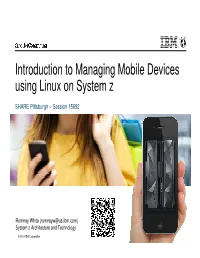

Introduction to Managing Mobile Devices Using Linux on System Z

Introduction to Managing Mobile Devices using Linux on System z. SHARE Pittsburgh – Session 15692. Romney White ([email protected]), System z Architecture and Technology. © 2014 IBM Corporation

Mobile devices are 80% of devices sold to access the Internet

[Figure: Worldwide Shipment of Internet Access Devices, 2013 and 2017; segments: PC (Desktop & Notebook), PC (Ultrabook), Tablet, Phone]

Worldwide Devices Shipments by Segment (Thousands of Units)

| Device Type | 2012 | 2013 | 2014 | 2017 |
| --- | --- | --- | --- | --- |
| PC (Desk-Based and Notebook) | 341,263 | 315,229 | 302,315 | 271,612 |
| PC (Ultrabooks) | 9,822 | 23,592 | 38,687 | 96,350 |
| Tablet | 116,113 | 197,202 | 265,731 | 467,951 |
| Mobile Phone | 1,746,176 | 1,875,774 | 1,949,722 | 2,128,871 |
| Total | 2,213,373 | 2,411,796 | 2,556,455 | 2,964,783 |

Source: Gartner (April 2013)

Mobile Internet users will surpass PC internet users by 2015

The number of people accessing the Internet from smartphones, tablets and other mobile devices will surpass the number of users connecting from a home or office computer by 2015, according to a September 2013 study by market analyst firm IDC. PC is the new Legacy!

Five mobile trends with significant implications for the enterprise

Mobile is primary: 91% of mobile users keep their device within arm’s reach 100% of the time. Mobile enables the Internet of Things: Global Machine-to-machine connections will increase from 2 billion in 2011 to 18 billion at the end of 2022. Mobile must create a continuous brand experience. Insights from mobile -

System Tools Reference Manual for Filenet Image Services

IBM FileNet Image Services Version 4.2 System Tools Reference Manual SC19-3326-00 Note Before using this information and the product it supports, read the information in “Notices” on page 1439. This edition applies to version 4.2 of IBM FileNet Image Services (product number 5724-R95) and to all subsequent releases and modifications until otherwise indicated in new editions. © Copyright IBM Corporation 1984, 2019. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. May 2011, FileNet Image Services System Tools Reference Manual, Version 4.2. Contents: About this manual; Manual Organization; Document revision history; What to Read First; Related Documents; Accessing IBM FileNet Documentation; IBM FileNet Education; Feedback; Documentation feedback; Product consumability feedback; Introduction; Tools Overview; Subsection Descriptions; Description; Use; Syntax; Flags and Options; Commands; Examples or Sample Output; Checklist; Procedure; Related Topics; Running Image Services Tools Remotely; How an Image Services Server can hang; Best Practices; Why an intermediate server works; Cross Reference; Backup Preparation and Analysis; Batches; Cache; Configuration; Core Files; Databases; Data Dictionary; Document Committal; Document Deletion; Document Services; Document Retrieval; Enterprise Backup/Restore (EBR) -

Congressional Record, United States of America: Proceedings and Debates of the 108th Congress, Second Session

Congressional Record United States of America PROCEEDINGS AND DEBATES OF THE 108th CONGRESS, SECOND SESSION Vol. 150 WASHINGTON, WEDNESDAY, DECEMBER 8, 2004 No. 139 House of Representatives The House was not in session today. Its next meeting will be held on Tuesday, January 4, 2005, at 12 noon. Senate WEDNESDAY, DECEMBER 8, 2004 The Senate met at 9:30 a.m. and was called to order by the President pro tempore (Mr. STEVENS). PRAYER The Chaplain, Dr. Barry C. Black, offered the following prayer: Let us pray. Faithful God, who stretches out the Earth above the waters, Your Name is great and Your goodness extends to all generations. Thank You for Your protection. You make wars to cease, destroying the weapons of those who fight against Your purposes. Today, guide our lawmakers with Your justice and keep them as the apple of Your eye. Instruct them in Your wisdom and hide them under the shadow of Your wings. Help them to find light in Your laws and knowledge in Your instructions. Give them patience as they grapple with issues and wisdom to seek Your guidance. Bless and strengthen the many staffers who provide the wind beneath the wings of our leaders. Bring to them a bountiful harvest for their many months of faithful toil. Bless all who mourn the loss of Stan Kimmitt. He will be greatly missed. We pray this in Your holy Name. Amen. NOTICE If the 108th Congress, 2d Session, adjourns sine die on or before December 10, 2004, a final issue of the Congressional Record for the 108th Congress, 2d Session, will be published on Monday, December 20, 2004, in order to permit Members to revise and extend their remarks. -

Secure Service Provisioning in a Public Cloud

Mälardalen University Press Licentiate Theses No. 157 SECURE SERVICE PROVISIONING IN A PUBLIC CLOUD Mudassar Aslam 2012 School of Innovation, Design and Engineering Copyright © Mudassar Aslam, 2012 ISBN 978-91-7485-081-9 ISSN 1651-9256 Printed by Mälardalen University, Västerås, Sweden Popular Science Summary The development of cloud technologies enables the use of IT resources over the Internet and can bring many benefits to companies as well as private individuals. However, this new model for using resources raises security issues that did not exist in traditional resource management on computers. In this thesis we focus on security issues that concern a user of cloud services (e.g. an organization, a government agency, etc.) when the user wants to lease cloud services in the form of virtual machines (VMs) from a public Infrastructure-as-a-Service (IaaS) provider. There are many security areas in cloud systems: keeping data confidential, ensuring that the resources are correct, that the service is the one promised, that security can be verified, etc. In this thesis we focus on security problems that result in a lack of trust between the actors in cloud systems, and that thereby prevent security-sensitive users from using cloud services. Starting from a requirements analysis from a security perspective, we propose solutions that enable trust in public IaaS clouds. Our solutions mainly concern secure life-cycle management of virtual machines, including mechanisms for secure launch and secure migration of virtual machines. The solutions ensure that the user's VM is always protected in the cloud by allowing it to execute only on trusted platforms. This is done using techniques for so-called trusted computing, which means that the user can remotely verify whether the platform is trustworthy or not.