Lecture 14: Randomized Classes 1 Introduction and Examples

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Complexity Zoo

The Complexity Zoo. Scott Aaronson, www.ScottAaronson.com. LaTeX translation by Chris Bourke. 417 classes and counting.

Contents: 1 About This Document; 2 Introductory Essay (2.1 Recommended Further Reading, 2.2 Other Theory Compendia, 2.3 Errors?); 3 Pronunciation Guide; 4 Complexity Classes; 5 Special Zoo Exhibit: Classes of Quantum States and Probability Distributions; 6 Acknowledgements; 7 Bibliography.

1 About This Document

What is this? Well, it's a PDF version of the website www.ComplexityZoo.com typeset in LaTeX using the complexity package. Well, what's that? The original Complexity Zoo is a website created by Scott Aaronson which contains a (more or less) comprehensive list of complexity classes studied in the area of theoretical computer science known as computational complexity. I took on the (mostly painless, thank god for regular expressions) task of translating the Zoo's HTML code to LaTeX for two reasons. First, as a regular Zoo patron, I thought, "what better way to honor such an endeavor than to spruce up the cages a bit and typeset them all in beautiful LaTeX." Second, I thought it would be a perfect project to develop complexity, a LaTeX package I've created that defines commands to typeset (almost) all of the complexity classes you'll find here (along with some handy options that allow you to conveniently change the fonts with a single option parameter). To get the package, visit my own home page at http://www.cse.unl.edu/~cbourke/.

The Polynomial Hierarchy

THE POLYNOMIAL HIERARCHY

Although the complexity classes we shall study now are in one sense byproducts of our definition of NP, they have a remarkable life of their own.

17.1 OPTIMIZATION PROBLEMS

Optimization problems have not been classified in a satisfactory way within the theory of P and NP; it is these problems that motivate the immediate extensions of this theory beyond NP. Let us take the traveling salesman problem as our working example. In the problem TSP we are given the distance matrix of a set of cities; we want to find the shortest tour of the cities. We have studied the complexity of the TSP within the framework of P and NP only indirectly: We defined the decision version TSP (D), and proved it NP-complete (corollary to Theorem 9.7). For the purpose of understanding better the complexity of the traveling salesman problem, we now introduce two more variants. EXACT TSP: Given a distance matrix and an integer B, is the length of the shortest tour equal to B? Also, TSP COST: Given a distance matrix, compute the length of the shortest tour. The four variants can be ordered in "increasing complexity" as follows: TSP (D); EXACT TSP; TSP COST; TSP. Each problem in this progression can be reduced to the next. For the last three problems this is trivial; for the first two one has to notice that the reduction in the corollary to Theorem 9.7 proving that TSP (D) is NP-complete can be used with DP.
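The "trivial" reductions among the last three variants can be sketched in a few lines. This is a minimal illustration, not the book's construction: the function names are hypothetical, the solver is an exponential-time brute force, and TSP (D) is assumed here to ask whether some tour has length at most B (the excerpt does not restate its definition). Note that `tsp_d` is answered via TSP COST for convenience; the reduction from TSP (D) to EXACT TSP that the excerpt mentions is the non-trivial one and is not shown.

```python
from itertools import permutations


def tsp(dist):
    """TSP: return (shortest tour, its length) by brute force.

    Exponential time; for illustration on tiny instances only.
    """
    n = len(dist)
    best_tour, best_len = None, float("inf")
    # Fix city 0 as the start so rotations of a tour are not re-examined.
    for perm in permutations(range(1, n)):
        tour = (0,) + perm
        length = sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))
        if length < best_len:
            best_tour, best_len = tour, length
    return best_tour, best_len


def tsp_cost(dist):
    """TSP COST reduces to TSP: solve TSP and report the tour's length."""
    return tsp(dist)[1]


def exact_tsp(dist, b):
    """EXACT TSP reduces to TSP COST: compare the optimal cost with B."""
    return tsp_cost(dist) == b


def tsp_d(dist, b):
    """TSP (D), assumed to mean: is there a tour of length at most B?"""
    return tsp_cost(dist) <= b


# Four cities on a cycle with unit edges; the two "diagonals" cost 9.
example = [
    [0, 1, 9, 1],
    [1, 0, 1, 9],
    [9, 1, 0, 1],
    [1, 9, 1, 0],
]
```

For the `example` matrix, the cheapest tour follows the unit-cost cycle, so each variant can be checked by hand against the brute-force answer.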

Ex. 8 Complexity 1. By Savitch's Theorem NL ⊆ DSPACE(log² n)

Ex. 8 Complexity

1. By Savitch's theorem, NL ⊆ DSPACE(log² n) ⊆ DSPACE(n). We saw in one of the previous exercises that DSPACE(n² f(n)) ≠ DSPACE(f(n)); we also saw NL ⊂ PSPACE, so we conclude NL ⊂ DSPACE(n) ⊂ DSPACE(n³) ⊂ PSPACE, but DSPACE(n) ≠ DSPACE(n³), as needed.

2. (a) We emulate the running of the s-t-conn algorithm in parallel for two paths (that is, we maintain two pointers, both starting at s, and guess separately the next step for each of them). We raise a flag as soon as the two pointers differ. We stop updating each of the two pointers as soon as it gets to t. If both got to t and the flag is raised, we return true. (b) As A ∈ NL, by the Immerman–Szelepcsényi theorem (NL = co-NL) we know that A ∈ NL. As C = A ∩ s-t-conn and s-t-conn ∈ NL, we get that A ∈ NL.

3. If L ∈ NICE, then taking "quit" as reject we get an NP machine for L (when x ∉ L we always reject), and by taking "quit" as accept we get a coNP machine for L (if x ∈ L we always accept), and so NICE ⊆ NP ∩ coNP. For the other direction, if L is in NP ∩ coNP, then there is one NP machine M1(x, y) solving L, and another NP machine M2(x, z) solving coL. We can therefore define a new machine that guesses the guess y for M1, and the guess z for M2.

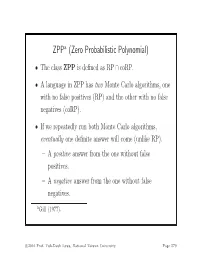

ZPP (Zero Probabilistic Polynomial)

ZPP (Zero Probabilistic Polynomial; Gill, 1977)

• The class ZPP is defined as RP ∩ coRP.
• A language in ZPP has two Monte Carlo algorithms, one with no false positives (RP) and the other with no false negatives (coRP).
• If we repeatedly run both Monte Carlo algorithms, eventually one definite answer will come (unlike RP).
  – A positive answer from the one without false positives.
  – A negative answer from the one without false negatives.

© 2016 Prof. Yuh-Dauh Lyuu, National Taiwan University

The ZPP Algorithm (Las Vegas)

    {Suppose L ∈ ZPP.}
    {N1 has no false positives, and N2 has no false negatives.}
    while true do
        if N1(x) = "yes" then
            return "yes";
        end if
        if N2(x) = "no" then
            return "no";
        end if
    end while

ZPP (concluded)

• The expected running time for the correct answer to emerge is polynomial.
  – The probability that a run of the 2 algorithms does not generate a definite answer is 0.5 (why?).
  – Let p(n) be the running time of each run of the while-loop.
  – The expected running time for a definite answer is $\sum_{i=1}^{\infty} 0.5^{i}\, i\, p(n) = 2p(n)$.
• Essentially, ZPP is the class of problems that can be solved, without errors, in expected polynomial time.

Large Deviations

• Suppose you have a biased coin.
• One side has probability 0.5 + ε to appear and the other 0.5 − ε, for some 0 < ε < 0.5.
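The Las Vegas loop in the ZPP excerpt can be sketched in Python as follows. This is a toy under stated assumptions, not an algorithm from the slides: `make_monte_carlo_pair` is a hypothetical helper that fakes the two Monte Carlo algorithms N1 and N2 by consulting a ground-truth predicate and a coin, so that each gives its definite answer with probability 1/2. A real RP or coRP algorithm would of course not have access to the ground truth.

```python
import random


def make_monte_carlo_pair(in_language, rng):
    """Build toy stand-ins for N1 (RP-style) and N2 (coRP-style).

    in_language is a ground-truth predicate used only to simulate the
    one-sided error behaviour; it is an assumption of this sketch.
    """

    def n1(x):
        # No false positives: "yes" only when x is in L, with probability 1/2.
        return "yes" if in_language(x) and rng.random() < 0.5 else "no"

    def n2(x):
        # No false negatives: "no" only when x is not in L, with probability 1/2.
        return "no" if (not in_language(x)) and rng.random() < 0.5 else "yes"

    return n1, n2


def zpp_decide(x, n1, n2):
    """Run N1 and N2 repeatedly until one gives a definite answer.

    Returns (answer, number of loop iterations used). The answer is never
    wrong; only the running time is random.
    """
    rounds = 0
    while True:
        rounds += 1
        if n1(x) == "yes":
            return "yes", rounds
        if n2(x) == "no":
            return "no", rounds


rng = random.Random(0)
n1, n2 = make_monte_carlo_pair(lambda x: x % 2 == 0, rng)
results = [zpp_decide(x, n1, n2) for x in range(20)]
```

With these toy success probabilities, each iteration ends the loop with probability 1/2, so the expected number of iterations is 2, in line with the 2p(n) bound in the excerpt.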

The Weakness of CTC Qubits and the Power of Approximate Counting

The weakness of CTC qubits and the power of approximate counting

Ryan O'Donnell* and A. C. Cem Say†

April 7, 2015

Abstract

We present results in structural complexity theory concerned with the following interrelated topics: computation with postselection/restarting, closed timelike curves (CTCs), and approximate counting. The first result is a new characterization of the lesser known complexity class BPPpath in terms of more familiar concepts. Precisely, BPPpath is the class of problems that can be efficiently solved with a nonadaptive oracle for the Approximate Counting problem. Similarly, PP equals the class of problems that can be solved efficiently with nonadaptive queries for the related Approximate Difference problem. Another result is concerned with the computational power conferred by CTCs; or equivalently, the computational complexity of finding stationary distributions for quantum channels. Using the above-mentioned characterization of PP, we show that any poly(n)-time quantum computation using a CTC of O(log n) qubits may as well just use a CTC of 1 classical bit. This result essentially amounts to showing that one can find a stationary distribution for a poly(n)-dimensional quantum channel in PP.

*Department of Computer Science, Carnegie Mellon University. Work performed while the author was at the Boğaziçi University Computer Engineering Department, supported by Marie Curie International Incoming Fellowship project number 626373.
†Boğaziçi University Computer Engineering Department.

1 Introduction

It is well known that studying "non-realistic" augmentations of computational models can shed a great deal of light on the power of more standard models. The study of nondeterminism and the study of relativization (i.e., oracle computation) are famous examples of this phenomenon.

The Computational Complexity Column by V

The Computational Complexity Column by V. Arvind, Institute of Mathematical Sciences, CIT Campus, Taramani, Chennai 600113, India. http://www.imsc.res.in/~arvind

The mid-1960s to the early 1980s marked the early epoch in the field of computational complexity. Several ideas and research directions which shaped the field at that time originated in recursive function theory. Some of these made a lasting impact and still have interesting open questions. The notion of lowness for complexity classes is a prominent example. Uwe Schöning was the first to study lowness for complexity classes and proved some striking results. The present article by Johannes Köbler and Jacobo Torán, written on the occasion of Uwe Schöning's soon-to-come 60th birthday, is a nice introductory survey on this intriguing topic. It explains how lowness gives a unifying perspective on many complexity results, especially about problems that are of intermediate complexity. The survey also points to the several questions that still remain open.

Lowness results: the next generation

Johannes Köbler, Humboldt Universität zu Berlin
Jacobo Torán, Universität Ulm

Abstract

Our colleague and friend Uwe Schöning, who has helped to shape the area of Complexity Theory in many decisive ways, is turning 60 this year. As a little birthday present we survey in this column some of the newer results related to the concept of lowness, an idea that Uwe translated from the area of Recursion Theory in the early eighties. Originally this concept was applied to the complexity classes in the polynomial time hierarchy.

Quantum Zero-Error Algorithms Cannot Be Composed

Quantum Zero-Error Algorithms Cannot be Composed

Harry Buhrman (CWI and U. of Amsterdam) and Ronald de Wolf (CWI)

Abstract

We exhibit two black-box problems, both of which have an efficient quantum algorithm with zero-error, yet whose composition does not have an efficient quantum algorithm with zero-error. This shows that quantum zero-error algorithms cannot be composed. In oracle terms, we give a relativized world where ZQP^ZQP ≠ ZQP, while classically we always have ZPP^ZPP = ZPP.

Keywords: Analysis of algorithms. Quantum computing. Zero-error computation.

1 Introduction

We can define a "zero-error" algorithm of complexity T in two different but essentially equivalent ways: either as an algorithm that always outputs the correct value with expected complexity T (expectation taken over the internal randomness of the algorithm), or as an algorithm that outputs the correct value with probability at least 1/2, never outputs an incorrect value, and runs in worst-case complexity T. Expectation is linear, so we can compose two classical algorithms that have an efficient expected complexity to get another algorithm with efficient expected complexity. If algorithm A uses an expected number of a applications of B and an expected number of a′ other operations, then using a subroutine for B that has an expected number of b operations gives A an expected number of a · b + a′ operations. In terms of complexity classes, we have ZPP^ZPP = ZPP, where ZPP is the class of problems that can be solved by a polynomial-time classical zero-error algorithm.
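The composition bound can be spelled out as a short calculation. This is a sketch under assumptions that the excerpt does not state explicitly: N denotes the (random) number of calls A makes to B, so that E[N] = a; X_i denotes the cost of the i-th call; and the subroutine for B is assumed to use fresh randomness on each call and to have expected cost at most b on every input, so that the expectation of X_i is at most b whatever happened before the call.

```latex
\begin{align*}
\mathbb{E}[\text{cost of } A]
  &= \mathbb{E}\Big[\sum_{i \ge 1} X_i \,\mathbf{1}[N \ge i]\Big] + a' \\
  &= \sum_{i \ge 1} \mathbb{E}\big[X_i \,\mathbf{1}[N \ge i]\big] + a'
     && \text{(linearity of expectation)} \\
  &\le \sum_{i \ge 1} b \cdot \Pr[N \ge i] + a'
     && \text{(whether } N \ge i \text{ is settled before call } i\text{)} \\
  &= b \cdot \mathbb{E}[N] + a' \;=\; a \cdot b + a'.
\end{align*}
```

If the subroutine's expected cost is exactly b on every input, the inequality becomes an equality, matching the a · b + a′ count in the excerpt.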

A Detailed Examination of the Principles of Ion Gauge Calibration

A DETAILED EXAMINATION OF THE PRINCIPLES OF ION GAUGE CALIBRATION

Wayne B. Nottingham, Research Laboratory of Electronics, Massachusetts Institute of Technology
Franklin L. Torney, Jr., N. R. C. Equipment Corporation, Newton Highlands, Massachusetts

Technical Report 379, October 25, 1960
Massachusetts Institute of Technology, Research Laboratory of Electronics, Cambridge, Massachusetts

The Research Laboratory of Electronics is an interdepartmental laboratory of the Department of Electrical Engineering and the Department of Physics. The research reported in this document was made possible in part by support extended the Massachusetts Institute of Technology, Research Laboratory of Electronics, jointly by the U.S. Army (Signal Corps), the U.S. Navy (Office of Naval Research), and the U.S. Air Force (Office of Scientific Research, Air Research and Development Command), under Signal Corps Contract DA36-039-sc-78108, Department of the Army Task 3-99-20-001 and Project 3-99-00-000.

Abstract

As ionization gauges are adapted to a wider variety of applications, including in particular space research, the calibration accuracy becomes more important. One of the best standards for calibration is the McLeod gauge. Its use must be better understood and better experimental methods applied for satisfactory results. These details are discussed. The theory of the ionization gauge itself is often simplified to the point that a gauge "constant" is often determined in terms of a single measurement as $K = \frac{1}{p}\,\frac{I_+}{I_-}$. Experiments described show that, in three typical gauges of the Bayard-Alpert type, K is not a constant but depends on both $p$ and $I_-$.

Observation of the Distribution of Zn Protoporphyrin IX (ZPP) in Parma Ham by Using Purple LED and Image Analysis

Title: Observation of the distribution of Zn protoporphyrin IX (ZPP) in Parma ham by using purple LED and image analysis
Author(s): Wakamatsu, J.; Odagiri, H.; Nishimura, T.; Hattori, A.
Citation: Meat Science, 74(3), 594-599. https://doi.org/10.1016/j.meatsci.2006.05.011
Issue Date: 2006-11
Doc URL: http://hdl.handle.net/2115/14762
Type: article (author version)
File Information: MS74-3.pdf
Hokkaido University Collection of Scholarly and Academic Papers: HUSCAP

Observation of the distribution of zinc protoporphyrin IX (ZPP) in Parma ham by using purple LED and image analysis

J. Wakamatsu a,b,*, H. Odagiri b, T. Nishimura a,b, A. Hattori a,b

a Division of Bioresource and Product Science, Graduate School of Agriculture, Hokkaido University, Sapporo, Hokkaido 060-8589, Japan
b Department of Animal Science, Faculty of Agriculture, Hokkaido University, Sapporo, Hokkaido 060-8589, Japan

*Corresponding author (J. Wakamatsu). Tel.: +81 11 706 2547; Fax: +81 11 706 2547. Meat Science Laboratory, Division of Bioresource and Product Science, Graduate School of Agriculture, Hokkaido University, N-9 W-9, Kita-ku, Sapporo, Hokkaido 060-8589, Japan

Abstract

We investigated the distribution of zinc protoporphyrin IX (ZPP) in Parma ham by using purple LED light and image analysis in order to elucidate the mechanism of ZPP formation. Autofluorescence spectra of Parma ham revealed that ZPP was present in both lean meat and fat, while red emission other than that of ZPP was hardly detected.

In Search of an Easy Witness: Exponential Time Vs. Probabilistic

In Search of an Easy Witness: Exponential Time vs. Probabilistic Polynomial Time

Russell Impagliazzo, Department of Computer Science, University of California, San Diego, La Jolla, CA (russell@cs.ucsd.edu)
Valentine Kabanets, Department of Computer Science, University of California, San Diego, La Jolla, CA (kabanets@cs.ucsd.edu)
Avi Wigderson, Department of Computer Science, Hebrew University, Jerusalem, Israel, and Institute for Advanced Study, Princeton, NJ (avi@ias.edu)

Abstract

Restricting the search space {0,1}^n to the set of truth tables of "easy" Boolean functions on log n variables, as well as using some known hardness-randomness tradeoffs, we establish a number of results relating the complexity of exponential-time and probabilistic polynomial-time complexity classes. In particular, we show that NEXP ⊂ P/poly ⟺ NEXP = MA; this can be interpreted as saying that no derandomization of MA (and, hence, of promise-BPP) is possible unless NEXP contains a hard Boolean function. We also prove several downward closure results for ZPP, RP, BPP, and MA; e.g., we show EXP = BPP ⟺ EE = BPE, where EE is the double-exponential time class and BPE is the exponential-time analogue of BPP.

Introduction

One of the most important questions in complexity theory is whether probabilistic algorithms are more powerful than their deterministic counterparts. A concrete formulation is the open question of whether BPP = P. Despite growing evidence that BPP can be derandomized (i.e., simulated deterministically without a significant increase in the running time), so far it has not been ruled out that NEXP

Quantum Complexity Theory

Quantum Complexity Theory II: Quantum Interactive Proof Systems

John Watrous, Department of Computer Science, University of Calgary

Classes of Problems

Computational problems can be classified in many different ways. Examples of classes:
• problems solvable in polynomial time by some deterministic Turing machine
• problems solvable by boolean circuits having a polynomial number of gates
• problems solvable in polynomial space by some deterministic Turing machine
• problems that can be reduced to integer factoring in polynomial time

Commonly Studied Classes

• P: class of problems solvable in polynomial time on a deterministic Turing machine
• NP: class of problems solvable in polynomial time on some nondeterministic Turing machine. Informally: problems with efficiently checkable solutions
• PSPACE: class of problems solvable in polynomial space on a deterministic Turing machine
• BPP: class of problems solvable in polynomial time on a probabilistic Turing machine (with "reasonable" error bounds)
• L: class of problems solvable by some deterministic Turing machine that uses only logarithmic work space

SL, RL, NL, PL, LOGCFL, NC, SC, ZPP, R, P/poly, MA, SZK, AM, PP, PH, EXP, NEXP, EXPSPACE, …

The list goes on…

…, #P, #L, AC, SPP, SAC, WPP, NE, AWPP, FewP, CZK, PCP(r(n),q(n)), D#P, NPO, GapL, GapP, LIN, ModP, NLIN, k-BPB, P^NP[log], P^PP, PrHSPACE(s), S2P, C=P, APX, DET, DisNP, EE, ELEMENTARY, mL, NISZK,

Quantum Circuits

Meeting G. Classical warm-ups to quantum computing:
I. Probabilistic classical computing: BPP and MA
II. Reversible classical computing
Next time: quantum circuits, BQP and QMA

Axioms of quantum mechanics lead to important problems if we want to use quantum mechanical systems to build a computer, even in the ideal case of a noiseless system:

1. The classical information extracted via measurement is a probability distribution. How can we formalize complexity theory around this?
2. If we want to use a Hilbert space of some system as a "quantum memory register", the (quantum) transformations must be unitary, hence, in particular, invertible (reversible). Is reversible computation feasible?

Goals: answer these questions in classical warm-up cases. The classical analogs won't address all of the issues in the quantum case. E.g., quantum states are not just classical probability distributions, and the unitary group U(k) is uncountable, infinite.

Also important later: non-idealized quantum computing. Need a theory of quantum error correction and fault tolerance.

I. Probabilistic classical computing

Informally, a probabilistic classical algorithm is any algorithm that is allowed access to coin flips or, equivalently, random bit strings.

Two equivalent ways to make this more formal:
1. Extend the definition of Turing machine so the transition function can, in addition to using the machine's internal state and read of the memory, toss a fair coin.
2. Resolve a non-deterministic Turing machine by flipping a coin to decide how to branch.

Remarks:
1. It doesn't matter so much if the coin is fair, but if it isn't, the probability of heads should at least be a reasonable number.