Knowledge Inn (In Nature)

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

Dynamic Behavior of Constrained Back-Propagation Networks

Dynamic Behavior of Constrained Back-Propagation Networks Yves Chauvin (also in the Psychology Department, Stanford University, Stanford, CA. 94305) Thomson-CSF, Inc. 630 Hansen Way, Suite 250 Palo Alto, CA. 94304 ABSTRACT The learning dynamics of the back-propagation algorithm are investigated when complexity constraints are added to the standard Least Mean Square (LMS) cost function. It is shown that loss of generalization performance due to overtraining can be avoided when using such complexity constraints. Furthermore, "energy," hidden representations and weight distributions are observed and compared during learning. An attempt is made at explaining the results in terms of linear and non-linear effects in relation to the gradient descent learning algorithm. 1 INTRODUCTION It is generally admitted that generalization performance of back-propagation networks (Rumelhart, Hinton & Williams, 1986) will depend on the relative size of the training data and of the trained network. By analogy to curve-fitting and for theoretical considerations, the generalization performance of the network should decrease as the size of the network and the associated number of degrees of freedom increase (Rumelhart, 1987; Denker et al., 1987; Hanson & Pratt, 1989). This paper examines the dynamics of the standard back-propagation algorithm (BP) and of a constrained back-propagation variation (CBP), designed to adapt the size of the network to the training data base. The performance, learning dynamics and the representations resulting from the two algorithms are compared. 2 GENERALIZATION PERFORMANCE 2.1 STANDARD BACK-PROPAGATION In Chauvin (In Press), the generalization performance of a back-propagation network was observed for a classification task from spectrograms into phonemic categories (single speaker. -

ARCHITECTS of INTELLIGENCE for Xiaoxiao, Elaine, Colin, and Tristan ARCHITECTS of INTELLIGENCE

MARTIN FORD ARCHITECTS OF INTELLIGENCE: THE TRUTH ABOUT AI FROM THE PEOPLE BUILDING IT For Xiaoxiao, Elaine, Colin, and Tristan Copyright © 2018 Packt Publishing All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing or its dealers and distributors, will be held liable for any damages caused or alleged to have been caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. Acquisition Editors: Ben Renow-Clarke Project Editor: Radhika Atitkar Content Development Editor: Alex Sorrentino Proofreader: Safis Editing Presentation Designer: Sandip Tadge Cover Designer: Clare Bowyer Production Editor: Amit Ramadas Marketing Manager: Rajveer Samra Editorial Director: Dominic Shakeshaft First published: November 2018 Production reference: 2201118 Published by Packt Publishing Ltd. Livery Place 35 Livery Street Birmingham B3 2PB, UK ISBN 978-1-78913-151-2 www.packt.com Contents: Introduction; A Brief Introduction to the Vocabulary of Artificial Intelligence; How AI Systems Learn; Yoshua Bengio; Stuart J. -

Cogsci Little Red Book.Indd

OUTSTANDING QUESTIONS IN COGNITIVE SCIENCE A SYMPOSIUM HONORING TEN YEARS OF THE DAVID E. RUMELHART PRIZE IN COGNITIVE SCIENCE August 14, 2010 Portland, Oregon, USA David E. Rumelhart ABOUT THE RUMELHART PRIZE AND THE 10 YEAR SYMPOSIUM At the August 2000 meeting of the Cognitive Science Society, Drs. James L. McClelland and Robert J. Glushko presented the initial plan to honor the intellectual contributions of David E. Rumelhart to Cognitive Science. Rumelhart had retired from Stanford University in 1998, suffering from Pick’s disease, a degenerative neurological illness. The plan involved honoring Rumelhart through an annual prize of $100,000, funded by the Robert J. Glushko and Pamela Samuelson Foundation. McClelland was a close collaborator of Rumelhart, and, together, they had written numerous articles and books on parallel distributed processing. Glushko, who had been Rumelhart’s Ph.D. student in the late 1970s and a Silicon Valley entrepreneur in the 1990s, is currently an adjunct professor at the University of California – Berkeley. The David E. Rumelhart prize was conceived to honor outstanding research in formal approaches to human cognition. Rumelhart’s own seminal contributions to cognitive science included both connectionist and symbolic models, employing both computational and mathematical tools. These contributions progressed from his early work on analogies and story grammars to the development of backpropagation and the use of parallel distributed processing to model various cognitive abilities. Critically, Rumelhart believed that future progress in cognitive science would depend upon researchers being able to develop rigorous, formal theories of mental structures and processes. -

Introspection: Accelerating Neural Network Training by Learning Weight Evolution

Published as a conference paper at ICLR 2017 (arXiv:1704.04959v1 [cs.LG] 17 Apr 2017) INTROSPECTION: ACCELERATING NEURAL NETWORK TRAINING BY LEARNING WEIGHT EVOLUTION Abhishek Sinha∗, Department of Electronics and Electrical Comm. Engg., IIT Kharagpur, West Bengal, India, abhishek.sinha94 at gmail dot com; Mausoom Sarkar, Adobe Systems Inc, Noida, Uttar Pradesh, India, msarkar at adobe dot com; Aahitagni Mukherjee∗, Department of Computer Science, IIT Kanpur, Uttar Pradesh, India, ahitagnimukherjeeam at gmail dot com; Balaji Krishnamurthy, Adobe Systems Inc, Noida, Uttar Pradesh, India, kbalaji at adobe dot com ABSTRACT Neural Networks are function approximators that have achieved state-of-the-art accuracy in numerous machine learning tasks. In spite of their great success in terms of accuracy, their large training time makes it difficult to use them for various tasks. In this paper, we explore the idea of learning weight evolution pattern from a simple network for accelerating training of novel neural networks. We use a neural network to learn the training pattern from MNIST classification and utilize it to accelerate training of neural networks used for CIFAR-10 and ImageNet classification. Our method has a low memory footprint and is computationally efficient. This method can also be used with other optimizers to give faster convergence. The results indicate a general trend in the weight evolution during training of neural networks. 1 INTRODUCTION Deep neural networks have been very successful in modeling high-level abstractions in data. However, training a deep neural network for any AI task is a time-consuming process. This is because a large number of parameters need to be learnt using training examples. -

Herrmann Collection Books Pertaining to Human Memory

Special Collections Department Cunningham Memorial Library Indiana State University September 28, 2010 Herrmann Collection Books Pertaining to Human Memory Gift 1 (10/30/01), Gift 2 (11/20/01), Gift 3 (07/02/02) Gift 4 (10/21/02), Gift 5 (01/28/03), Gift 6 (04/22/03) Gift 7 (06/27/03), Gift 8 (09/22/03), Gift 9 (12/03/03) Gift 10 (02/20/04), Gift 11 (04/29/04), Gift 12 (07/23/04) Gift 13 (09/15/10) 968 Titles Abercrombie, John. Inquiries Concerning the Intellectual Powers and the Investigation of Truth. Ed. Jacob Abbott. Revised ed. New York: Collins & Brother, c1833. Gift #9. ---. Inquiries Concerning the Intellectual Powers, and the Investigation of Truth. Harper's Stereotype ed., from the second Edinburgh ed. New York: J. & J. Harper, 1832. Gift #8. ---. Inquiries Concerning the Intellectual Powers, and the Investigation of Truth. Boston: John Allen & Co.; Philadelphia: Alexander Tower, 1835. Gift #6. ---. The Philosophy of the Moral Feelings. Boston: Otis, Broaders, and Company, 1848. Gift #8. Abraham, Wickliffe, C., Michael Corballis, and K. Geoffrey White, eds. Memory Mechanisms: A Tribute to G. V. Goddard. Hillsdale, New Jersey: Lawrence Erlbaum Associates, 1991. Gift #9. Adams, Grace. Psychology: Science or Superstition? New York: Covici Friede, 1931. Gift #8. Adams, Jack A. Human Memory. McGraw-Hill Series in Psychology. New York: McGraw-Hill Book Company, c1967. Gift #11. ---. Learning and Memory: An Introduction. Homewood, Illinois: The Dorsey Press, 1976. Gift #9. Adams, John. The Herbartian Psychology Applied to Education Being a Series of Essays Applying the Psychology of Johann Friederich Herbart. -

CUA V04 1913 14 16.Pdf (6.493Mb)

OFFICIAL PUBLICATIONS OF CORNELL UNIVERSITY VOLUME IV NUMBER 16 CATALOGUE NUMBER 1912-13 AUGUST 1, 1913 PUBLISHED BY CORNELL UNIVERSITY ITHACA, NEW YORK CALENDAR First Term, 1913-14 Sept. 12, Friday, Entrance examinations begin. Sept. 22, Monday, Academic year begins. Registration of new students. Scholarship examinations begin. Sept. 23, Tuesday, Registration of new students. Registration in the Medical College in N. Y. City. Sept. 24, Wednesday, Registration of old students. Sept. 25, Thursday, Instruction begins in all departments of the University at Ithaca. President’s annual address to the students at 12 m. Sept. 27, Saturday, Registration, Graduate School. Oct. 14, Tuesday, Last day for payment of tuition. Nov. 11, Tuesday, Winter Courses in Agriculture begin. Nov. — , Thursday and Friday, Thanksgiving Recess. Dec. 1, Monday, Latest date for announcing subjects of theses for advanced degrees. Dec. 20, Saturday, Instruction ends; Jan. 5, Monday, Instruction resumed (Christmas Recess). Jan. 10, Saturday, The ’94 Memorial Prize Competition. Jan. 11, Sunday, Founder’s Day. Jan. 24, Saturday, Instruction ends. Jan. 26, Monday, Term examinations begin. Second Term, 1913-14 Feb. 7, Saturday, Registration, undergraduates. Feb. 9, Monday, Registration, Graduate School. Feb. 9, Monday, Instruction begins. Feb. 13, Friday, Winter Courses in Agriculture end. Feb. 27, Friday, Last day for payment of tuition. Mar. 16, Monday, The latest date for receiving applications for Fellowships and Scholarships in the Graduate School. April 1, Wednesday, Instruction ends; April 9, Thursday, Instruction resumed (Spring Recess). -

Techofworld.In

Techofworld.In YouTube Mock Test Previous Year PDF Best Exam Books Check Here Check Here Check Here Check Here Previous Year CT & B.Ed Cut Off Marks (2019) Items CT Entrance B.Ed Arts B.Ed Science Cut Off Check Now Check Now Check Now Best Book For CT & B.Ed Entrance 2020 Items CT Entrance B.Ed Arts B.Ed Science Book (Amazon) Check Now Check Now Check Now Mock Test Related Important Links- Mock Test Features (Video) Watch Now Watch Now How To Register For Mock Test (Video) Watch Now Watch Now How To Give Mock (Video) Watch Now Watch Now P a g e | 1 Click Here- Online Test Series For CT, B.Ed, OTET, Railway, Odisha Police, OSSSC, B.Ed Arts (2018 & 2019) Teaching Aptitude Total Shifts – 13 ( By Techofworld.In ) 8596976190 P a g e | 2 Click Here- Online Test Series For CT, B.Ed, OTET, Railway, Odisha Police, OSSSC, 03-June-2019_Batch1 B) Project method 21) One of the five components of the centrally C) Problem solving method sponsored Scheme of Restructuring and D) Discussion method Reorganization of Teacher Education under the Government of India was establishment of DIETs. What is the full form of DIET? 25) Focus of the examination on rank ordering A) Dunce Institute of Educational Training students or declaring them failed tilts the classroom climate and the school ethos towards B) Deemed Institute of Educational Training A) vicious competition C) District Institute of Educational Training B) passive competition D) Distance Institute of Educational Training C) healthy competition D) joyful competition 22) One of the maxims of teaching -

Behavioral Finance Micro 19 CHAPTER 3 Incorporating Investor Behavior Into the Asset Allocation Process 39

Behavioral Finance and Wealth Management How to Build Optimal Portfolios That Account for Investor Biases MICHAEL M. POMPIAN John Wiley & Sons, Inc. Founded in 1807, John Wiley & Sons is the oldest independent publishing company in the United States. With offices in North America, Europe, Australia, and Asia, Wiley is globally committed to developing and marketing print and electronic products and services for our customers’ professional and personal knowledge and understanding. The Wiley Finance series contains books written specifically for finance and investment professionals as well as sophisticated individual investors and their financial advisors. Book topics range from portfolio management to e-commerce, risk management, financial engineering, valuation, and financial instrument analysis, as well as much more. For a list of available titles, please visit our web site at www.WileyFinance.com. Copyright © 2006 by Michael M. Pompian. All rights reserved. Published by John Wiley & Sons, Inc., Hoboken, New Jersey. Published simultaneously in Canada.
No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permitted under Section 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600, or on the web at www.copyright.com. -

Backpropagation: 1Th E Basic Theory

Backpropagation: The Basic Theory David E. Rumelhart, Richard Durbin, Richard Golden, Yves Chauvin Department of Psychology, Stanford University INTRODUCTION Since the publication of the PDP volumes in 1986, learning by backpropagation has become the most popular method of training neural networks. The reason for the popularity is the underlying simplicity and relative power of the algorithm. Its power derives from the fact that, unlike its precursors, the perceptron learning rule and the Widrow-Hoff learning rule, it can be employed for training nonlinear networks of arbitrary connectivity. Since such networks are often required for real-world applications, such a learning procedure is critical. Nearly as important as its power in explaining its popularity is its simplicity. The basic idea is old and simple; namely define an error function and use hill climbing (or gradient descent if you prefer going downhill) to find a set of weights which optimize performance on a particular task. The algorithm is so simple that it can be implemented in a few lines of code, and there have been no doubt many thousands of implementations of the algorithm by now. The name back propagation actually comes from the term employed by Rosenblatt (1962) for his attempt to generalize the perceptron learning algorithm to the multilayer case. There were many attempts to generalize the perceptron learning procedure to multiple layers during the 1960s and 1970s, but none of them were especially successful. There appear to have been at least three independent inventions of the modern version of the back-propagation algorithm: Paul Werbos developed the basic idea in 1974 in a Ph.D. -
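The excerpt's core recipe (define an error function, then follow its gradient downhill through a nonlinear network) can be illustrated with a minimal sketch. Everything specific here is an assumption for illustration, not taken from the chapter: the XOR task, the network size, the sigmoid units, the half squared error, the learning rate, and the function name `train_xor` are all hypothetical choices.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train_xor(hidden=3, lr=0.5, epochs=2000, seed=0):
    """Batch gradient descent on a 2-hidden-1 sigmoid network (illustrative only)."""
    rng = random.Random(seed)
    data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
            ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
    # w1[j] = [weight from x0, weight from x1, bias] for hidden unit j
    w1 = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(hidden)]
    # w2 = one weight per hidden unit, followed by the output bias
    w2 = [rng.uniform(-1, 1) for _ in range(hidden + 1)]
    losses = []
    for _ in range(epochs):
        g1 = [[0.0] * 3 for _ in range(hidden)]
        g2 = [0.0] * (hidden + 1)
        loss = 0.0
        for (x0, x1), target in data:
            # Forward pass
            h = [sigmoid(w[0] * x0 + w[1] * x1 + w[2]) for w in w1]
            y = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + w2[hidden])
            loss += 0.5 * (y - target) ** 2  # the error function being minimized
            # Backward pass: propagate the output error back to each weight
            delta_out = (y - target) * y * (1.0 - y)
            for j in range(hidden):
                g2[j] += delta_out * h[j]
                delta_h = delta_out * w2[j] * h[j] * (1.0 - h[j])
                g1[j][0] += delta_h * x0
                g1[j][1] += delta_h * x1
                g1[j][2] += delta_h
            g2[hidden] += delta_out
        losses.append(loss)
        # Gradient descent step: move every weight against its gradient
        for j in range(hidden):
            for k in range(3):
                w1[j][k] -= lr * g1[j][k]
        for j in range(hidden + 1):
            w2[j] -= lr * g2[j]
    return losses


if __name__ == "__main__":
    history = train_xor()
    print("first-epoch error:", history[0], "last-epoch error:", history[-1])
```

The returned list holds the summed error measured at the start of each epoch, so comparing its first and last entries shows whether descent made progress; whether XOR is actually solved depends on the random initialization.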

Teaching Guide for the History of Psychology Instructor Compiled by Jennifer Bazar, Elissa Rodkey, and Jacy Young

Psychology’s Feminist Voices in the Classroom (www.feministvoices.com) A Teaching Guide for the History of Psychology Instructor Compiled by Jennifer Bazar, Elissa Rodkey, and Jacy Young One of the goals of Psychology’s Feminist Voices is to serve as a teaching resource. To facilitate the process of incorporating PFV into the History of Psychology course, we have created this document. Below you will find two primary sections: (1) Lectures and (2) Assignments. (1) Lectures: In this section you will find subject headings of topics often covered in History of Psychology courses. Under each heading, we have provided an example of the relevant career, research, and/or life experiences of a woman featured on the Psychology’s Feminist Voices site that would augment a lecture on that particular topic. Below this description is a list of additional women whose histories would be well-suited to lectures on that topic. (2) Assignments: In this section you will find several suggestions for assignments that draw on the material and content available on Psychology’s Feminist Voices that you can use in your History of Psychology course. The material in this guide is intended only as a suggestion and should not be read as a “complete” list of all the ways Psychology’s Feminist Voices could be used in your courses. We would love to hear all of the different ideas you think of for how to include the site in your classroom - please share your thoughts with us by emailing Alexandra Rutherford, our project coordinator, -

Voice Identity Finder Using the Back Propagation Algorithm of An

Available online at www.sciencedirect.com ScienceDirect Procedia Computer Science 95 (2016) 245–252 Complex Adaptive Systems, Publication 6 Cihan H. Dagli, Editor in Chief Conference Organized by Missouri University of Science and Technology 2016 - Los Angeles, CA Voice Identity Finder Using the Back Propagation Algorithm of an Artificial Neural Network Roger Achkar*, Mustafa El-Halabi*, Elie Bassil*, Rayan Fakhro*, Marny Khalil* *Department of Computer and Communications Engineering, American University of Science and Technology, AUST Beirut, Lebanon Abstract Voice recognition systems are used to distinguish different sorts of voices. However, recognizing a voice is not always successful due to the presence of different parameters. Hence, there is a need to create a set of estimation criteria and a learning process using Artificial Neural Network (ANN). The learning process performed using ANN allows the system to mimic how the brain learns to understand and differentiate among voices. The key to undergo this learning is to specify the free parameters that will be adapted through this process of simulation. Accordingly, this system will store the knowledge processed after performing the back propagation learning and will be able to identify the corresponding voices. The proposed learning allows the user to enter a number of different voices to the system through a microphone. © 2016 The Authors. Published by Elsevier B.V. This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/). Peer-review under responsibility of scientific committee of Missouri University of Science and Technology. Keywords: Frequency; Neural Network; Backpropagation; Voice Recognition; Multi-layer Perceptrons. -

Intelligence & Testing

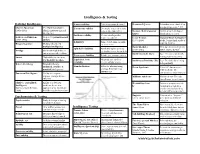

Intelligence & Testing

Defining Intelligence
Charles Spearman (1863-1945): Used factor analysis to identify g-factor (general intelligence)
Louis Leon Thurstone (1887-1955): Proposed 7 primary mental abilities
Howard Gardner: Modular theory of 8 multiple intelligences
Prodigy: Child with high ability in one area; normal in others
Savant: High ability in one area; low/disability in others
Robert Sternberg: Triarchic theory: analytical, creative, & practical intelligences
Emotional Intelligence: Ability to recognize,

Content validity: Is the test comprehensive?
Concurrent validity: Do results match other tests done at the same time?
Predictive validity: Do test results predict future outcomes?
Reliability: Same object, same measure → same score
Split-half reliability: Randomly split test items → similar scores for each ½
Test-retest reliability: Retake test; compare scores
Equivalent form reliability: Alternate test versions receive similar scores
Standardization (norms): Rules for administering, scoring, & interpreting test

Deviation IQ score: Individual score divided by average group score * 100
Normal / Bell / Gaussian Curve: Symmetrical bell-shaped distribution of scores
Lewis Terman (1877-1956): Stanford-Binet Intelligence Scale; longitudinal study of high IQ children
David Wechsler (1896-1981): Developed several IQ tests; WAIS, WISC, WPPSI
Intellectual Giftedness: IQ ≥ 130; associated with many positive outcomes
Intellectual Disability (ID): IQ ≤ 70; difficulties living independently
Down Syndrome: Extra 21st chromosome; mild/moderate ID, characteristic
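The split-half reliability entry above (randomly split the test items, then check that the two halves give similar scores) can be sketched in a few lines. This is a rough illustration under assumptions of my own: "similar" is measured here with a Pearson correlation between the two half-test totals, and the names `pearson` and `split_half_reliability` and the input layout (one list of item scores per test-taker) are hypothetical, not from the sheet.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a half has no variance")
    return cov / math.sqrt(var_x * var_y)


def split_half_reliability(item_scores, seed=0):
    """item_scores[p][i] is the score of test-taker p on item i (assumed layout)."""
    n_items = len(item_scores[0])
    order = list(range(n_items))
    random.Random(seed).shuffle(order)  # random split of the items
    half = n_items // 2
    first, second = order[:half], order[half:]
    totals_first = [sum(person[i] for i in first) for person in item_scores]
    totals_second = [sum(person[i] for i in second) for person in item_scores]
    # A high correlation means the two halves rank test-takers similarly
    return pearson(totals_first, totals_second)


if __name__ == "__main__":
    scores = [[1, 0, 1, 1], [0, 0, 1, 0], [1, 1, 1, 1], [0, 1, 0, 0]]
    print(split_half_reliability(scores))
```

A value near 1 suggests the halves agree; the exact figure depends on which random split the seed produces.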