Controllable Text Generation Should Machines Reflect the Way Humans

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Star Channels, May 26-June 1

MAY 26 - JUNE 1, 2019 staradvertiser.com BRIDGING THE GAP The formula of the police procedural gets a spiritual new twist on The InBetween. The drama series follows Cassie Bedford (Harriet Dyer), who experiences uncontrollable visions of the future and the past and visits from spirits desperately seeking her help. To make use of her unique talents, she assists her father, Det. Tom Hackett (Paul Blackthorne), and his former FBI partner as they tackle complicated crimes. Premieres Wednesday, May 29 on NBC. WE EMPOWER YOUR VOICE, BY EMPOWERING YOU. Tell your story by learning how to shoot, edit and produce your own show. Start your video training today at olelo.org/training olelo.org

ON THE COVER | THE INBETWEEN Crossing over: Medium drama ‘The InBetween’ premieres on NBC. By Sarah Passingham, TV Media. The formula of the police procedural gets a spiritual new twist when “The InBetween” [...] As for Dyer, she may be a new face to North American audiences, but she has a long list of acting credits, including dramatic and comedic roles in her home country of Australia. She is best known for portraying Patricia Saunders in the hospital drama “Love Child” and April in the cop comedy series “No Activity,” which was adapted for North American audiences by CBS All Access in 2017. [...] first time channelling a cop character; he starred as Det. Kyle Craig in the “Training Day” series inspired by the 2001 film of the same name. Everything old really is new again. There was a heyday for psychic, clairvoyant and medium-centred television in the mid-2000s, with shows like “Medium” and “Ghost Whisperer,” -

Dissecting the Sundance Curse: Exploring Discrepancies Between Film Reviews by Professional and Amateur Critics

Dissecting the Sundance Curse: Exploring Discrepancies Between Film Reviews by Professional and Amateur Critics Lucas Buck Cinema and Television Arts Elon University Submitted in partial fulfillment of the requirements in an undergraduate senior capstone course in communications Abstract There has been a growing discrepancy between professional-critic film reviews and audience-originating film reviews. In fact, these occurrences have become so routine, industry writers often reference a “Sundance Curse” – when a buzzy festival-circuit film bombs with the general public, commercially or critically. This study examines this inconsistency to determine which aspects of a film tend to draw the most attention from each respective type of critic. A qualitative content analysis of 20 individual reviews was conducted to determine which elements present in a film garnered the most attention from the reviewers, and whether that attention was positive, negative or neutral. This study indicates that audience film reviewers overwhelmingly focused on the “emotional response” gleaned from their movie-going experience above all other aspects of the film, whereas professional critics focused attention to more tangible – above-the-line contributions, such as direction, performances, and writing. I. Introduction As one of the most talked-about films of the 2018 Sundance Film Festival, the A24-released Hereditary became the breakout horror film of the year, opening in nearly 3,000 theaters and raking in $79 million while produced on just a $10 million production budget (Cusumano, 2018). Despite the obvious box office success, Hereditary’s word-of-mouth power seemed to have mostly been driven by glowing critical reviews, rather than by the opinion of audiences who paid to see the film. -

Prohibiting Product Placement and the Use of Characters in Marketing to Children by Professor Angela J. Campbell Georgetown Univ

PROHIBITING PRODUCT PLACEMENT AND THE USE OF CHARACTERS IN MARKETING TO CHILDREN BY PROFESSOR ANGELA J. CAMPBELL¹ GEORGETOWN UNIVERSITY LAW CENTER (DRAFT September 7, 2005)

¹ Professor Campbell thanks Natalie Smith for her excellent research assistance, Russell Sullivan for pointing out examples of product placements, and David Vladeck, Dale Kunkel, Jennifer Prime, and Marvin Ammori for their helpful suggestions.

Introduction
I. Product Placements
   A. The Practice of Product Placement
   B. The Regulation of Product Placements
II. Character Marketing
   A. The Practice of Celebrity Spokes-Character Marketing
   B. The Regulation of Spokes-Character Marketing
      1. FCC Regulation of Host-Selling
      2. CARU Guidelines
      3. Federal Trade Commission -

REAL STEEL (PG-13) Ebert: Users: You: Rate This Movie Right Now

REAL STEEL (PG-13) By Roger Ebert / October 5, 2011 Cast & credits: Charlie Kenton: Hugh Jackman; Max: Dakota Goyo; Bailey: Evangeline Lilly; Finn: Anthony Mackie; Ricky: Kevin Durand; Deborah: Hope Davis; Marvin: James Rebhorn. DreamWorks presents a film directed by Shawn Levy. Written by John Gatins, based in part on the short story “Steel” by Richard Matheson. "Real Steel" imagines a near future when human boxers have been replaced by robots. Well, why not? Matches between small fighting robot machines are popular enough to be on television, but in "Real Steel," these robots are towering, computer-controlled machines with nimble footwork and instinctive balance. (In the real world, 'bots can be rendered helpless on their backs, like turtles.) It also must be said that in color and design, the robots of -

Factualwritingfinal.Pdf

Introduction to Disney There is a long history behind Disney and many surprising facts that not many people know. Over the years Disney has evolved as a company and its films have evolved with it, for many reasons. So why and how has Disney changed? I have researched Disney from the beginning to see how the company started and how it got its ideas. This all led up to what happened in the company, including the involvement of Pixar, and how the Disney films changed in regard to content and reception. Now, with the relevant facts, you can make up your own mind about when Disney was at its best. Walt Disney Studios was founded in 1923 by brothers Walt Disney and Roy O. Disney. Originally Disney was called ‘Disney Brothers Cartoon Studio’ and produced a series of short animated films called the Alice Comedies. The first Alice Comedy was a ten-minute reel called Alice’s Wonderland. In 1926 the company name was changed to the Walt Disney Studio. Disney then developed a cartoon series with the character Oswald the Lucky Rabbit; this was distributed by Winkler Pictures, so Disney didn’t make a lot of money. Winkler’s husband took over the company in 1928 and Disney ended up losing the contract for Oswald. He was unsuccessful in trying to take over Disney, so he instead started his own animation studio called Snappy Comedies and hired away four of Disney’s primary animators to do this. Disney planned to get back on track by coming up with the idea of a mouse character, which he originally named Mortimer, but when Lillian (Disney’s wife) disliked the name, the mouse was renamed Mickey Mouse. -

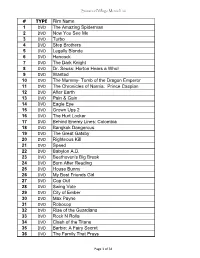

DVD LIST 05-01-14 Xlsx

Seawood Village Movie List

| # | Type | Film Name |
|---|------|-----------|
| 1 | DVD | The Amazing Spiderman |
| 2 | DVD | Now You See Me |
| 3 | DVD | Turbo |
| 4 | DVD | Step Brothers |
| 5 | DVD | Legally Blonde |
| 6 | DVD | Hancock |
| 7 | DVD | The Dark Knight |
| 8 | DVD | Dr. Seuss: Horton Hears a Who! |
| 9 | DVD | Wanted |
| 10 | DVD | The Mummy- Tomb of the Dragon Emperor |
| 11 | DVD | The Chronicles of Narnia: Prince Caspian |
| 12 | DVD | After Earth |
| 13 | DVD | Pain & Gain |
| 14 | DVD | Eagle Eye |
| 15 | DVD | Grown Ups 2 |
| 16 | DVD | The Hurt Locker |
| 17 | DVD | Behind Enemy Lines: Colombia |
| 18 | DVD | Bangkok Dangerous |
| 19 | DVD | The Great Gatsby |
| 20 | DVD | Righteous Kill |
| 21 | DVD | Speed |
| 22 | DVD | Babylon A.D. |
| 23 | DVD | Beethoven's Big Break |
| 24 | DVD | Burn After Reading |
| 25 | DVD | House Bunny |
| 26 | DVD | My Best Friends Girl |
| 27 | DVD | Cop Out |
| 28 | DVD | Swing Vote |
| 29 | DVD | City of Ember |
| 30 | DVD | Max Payne |
| 31 | DVD | Robocop |
| 32 | DVD | Rise of the Guardians |
| 33 | DVD | Rock N Rolla |
| 34 | DVD | Clash of the Titans |
| 35 | DVD | Barbie: A Fairy Secret |
| 36 | DVD | The Family That Preys |
| 37 | DVD | Open Season 2 |
| 38 | DVD | Lakeview Terrace |
| 39 | DVD | Fire Proof |
| 40 | DVD | Space Buddies |
| 41 | DVD | The Secret Life of Bees |
| 42 | DVD | Madagascar: Escape 2 Africa |
| 43 | DVD | Nights in Rodanthe |
| 44 | DVD | Skyfall |
| 45 | DVD | Changeling |
| 46 | DVD | House at the End of the Street |
| 47 | DVD | Australia |
| 48 | DVD | Beverly Hills Chihuahua |
| 49 | DVD | Life of Pi |
| 50 | DVD | Role Models |
| 51 | DVD | The Twilight Saga: Twilight |
| 52 | DVD | Pinocchio 70th Anniversary Edition |
| 53 | DVD | The Women |
| 54 | DVD | Quantum of Solace |
| 55 | DVD | Courageous |
| 56 | DVD | The Wolfman |
| 57 | DVD | Hugo |
| 58 | DVD | Real Steel |
| 59 | DVD | Change of Plans |
| 60 | DVD | Sisterhood of the Traveling Pants |
| 61 | DVD | Hansel & Gretel: Witch Hunters |
| 62 | DVD | The Cold Light of Day |
| 63 | DVD | Bride & Prejudice |
| 64 | DVD | The Dilemma |
| 65 | DVD | Flight |
| 66 | DVD | E.T. - |

New Cultural Horizons: 3D

The Turkish Online Journal of Design, Art and Communication - TOJDAC April 2012 Volume 2 Issue 2 NEW CULTURAL HORIZONS: 3D Ioana MISCHIE I.L. Caragiale National University of Theatre and Film, Scriptwriting, Bucharest, Romania [email protected] ABSTRACT Nowadays, we are witnessing new horizons in the contemporary cinema: the 3D technique’s giant step. The question is: is 3D a valid criteria? The filmmaker wants you to live with the characters, offering Cinema an incredible flexibility as a multicultural platform. We have no universal scale at our disposal to measure the quality of a good story, of a memorable film, or even of an innovative technique. It is more a matter of communis opinio: what is generally accepted as good quality. At the start of the film you have to engage the minds and hearts of the audience. It’s crucial, it is a hyatus. The presentation is based on individual film analysis, exemplified by interviews with James Cameron (the director of “Avatar”), with Wim Wenders (the director of the 3D documentary “Pina”) and as well, will take into consideration a case study focused on how the Romanian public understands 3D. Keywords: 3D, Cinema, New cultural wave, Storytelling 1. INTRODUCTION In his book “The Century”, Alain Badiou asks himself : “How many years has century? A hundred years?” Is a century really defined by the number of years or by something more intrinsic than that? His question is, in fact: are our criteria efficient enough? Extrapolating, how can we measure three-dimensionality, culture, new media, digitalization, democracy, fairness or happiness? Which one is our main criteria? Box office? Google searches? Or maybe… something deeply connected to our inner selves, our inner perception, our own cultural imago mundi. -

Sing. Dance. Laugh. Repeat!

Sing. Dance. Laugh. Repeat! BRING HOME HAPPY WITH DREAMWORKS TROLLS ON DIGITAL HD JAN. 24 AND BLU-RAY™ & DVD FEB. 7 DREAMWORKS TROLLS PARTY EDITION INCLUDES NEVER-BEFORE-SEEN SING-ALONG, DANCE-ALONG “PARTY MODE”, AND IS PACKED WITH FUN, COLORFUL EXTRAS, PERFECT FOR YOUR FAMILY MOVIE NIGHT! LOS ANGELES, CA (Jan. 10, 2016) – A hit with audiences and critics alike, DreamWorks Animation’s TROLLS, is the “feel-good” movie of the year receiving an outstanding audience reaction with a coveted “A” CinemaScore® and a Certified Fresh rating on RottenTomatoes.com. DreamWorks TROLLS, the fresh, music-filled adventure packed with humor and heart dances onto Digital HD on Jan. 24 and Blu-ray™ and DVD on Feb. 7 from Twentieth Century Fox Home Entertainment. The colorful, richly textured Troll Village is full of optimistic Trolls, who are always ready to sing, dance and party. When the comically pessimistic Bergens invade, Poppy (Anna Kendrick), the happiest Troll ever born, and the overly-cautious, curmudgeonly Branch (Justin Timberlake) set off on an epic journey to rescue her friends. Their mission is full of adventure and mishaps, as this mismatched duo try to tolerate each other long enough to get the job done. From Shrek franchise veterans Mike Mitchell (Shrek Forever After) and Walt Dohrn (Shrek 2, Shrek the Third), TROLLS features the voice talents of Golden Globe® nominee Anna Kendrick (Pitch Perfect, The Accountant), Grammy and Emmy® Award winner and Golden Globe® Award nominee Justin Timberlake (Friends with Benefits, Shrek the Third), Golden -

The Baylor Men Are Ranked, and the Lariat Previews In-Depth. Page B4

FRIDAY | NOVEMBER 4, 2011 Sports www.baylorlariat.com Saving the day Pressing on Baylor has had one of its best seasons, The No. 1 Lady Bears start their season and the keeper is a big reason why with two easy exhibition victories Vol. 112 No. 37 Dazed & Confused© 2011, Baylor University >> After two straight blowout losses on the road, can Baylor return to glory in front of the homecoming crowd? Page B3 >> The Baylor secondary has faced its share of challenges this season. Page B5 >> The Baylor men are ranked, and the Lariat previews ABOVE: ASSOCIATED PRESS in-depth. Page B4 LEFT: MATTHEW MCCARROLL | LARIAT PHOTOGRAPHER FRIDAY | NOVEMBER 4, 2011 | the Sports B2 Baylor Lariat www.baylorlariat.com Big 12 Weekly Review Key matchup’s early hype downgrades after disappointing start ago. Saturday the Cowboys will touchdown with an 8-yard rush. meant Baylor couldn’t propel past Justin Blackmon - That in itself should add plenty host the Kansas State Wildcats. Tech’s offense was on the field Oklahoma State. Junior receiver of fuel to the offensive fire for a Offensively, Oklahoma Statefor less than 20 minutes. This Saturday is homecoming Sooner squad that will take on should be able to control their own It’s hard to tell which Red for Baylor, which brings extra touchdown. Texas A&M Saturday. destiny. Raider team will show up in Austin motivation to get a W. I o w a On the other side of the ball, on Saturday; the team who won Missouri is calling Griffin III an S t a t e Texas A&M (5-3, 3-2) the number one priority needs in Norman or the team left in iPhone because he can do it all. -

Real Steel: Make Way for the Steel Giants in the Ring

ARTICLE by Cécile Dard | October 10, 2011 Real Steel: make way for the steel giants in the ring Children's films often feature robots; here is one that is perfectly suited to parents! Highly realistic, this film deals with separation, career troubles, family ties and the crowd's passion for fights. It is a world into which Max and his dad Charlie, a very sexy dad, lead us with flair. A family that comes back together Real Steel is the story of a father and son who reconnect through a shared passion: robot fights. Hugh Jackman plays a "bad father" who struggles to remember the age of his son Max, tries to sell him, feeds him hamburgers, and lives off scams and brawls. This lost man finds a future again thanks to the love of his son, who has lost his mother. The story unfolds against a backdrop of disturbingly realistic robot fights, with spectacular scenes. Watch the trailer: Hello Mom, Robot Halfway between Rocky and Astro Boy, Real Steel is a fine film for ages 7 and up, since it contains many impressive fight scenes. The blend of real actors and virtual robots is entirely successful. It is enough to set little boys who play with transforming robots dreaming! Well directed and staged, the film impresses with its skillful blend of today's world and what tomorrow's might be. -

Copyright by Alexander Joseph Brannan 2021

Copyright by Alexander Joseph Brannan 2021 The Thesis Committee for Alexander Joseph Brannan Certifies that this is the approved version of the following Thesis: Artful Scares: A24 and the Elevated Horror Cycle APPROVED BY SUPERVISING COMMITTEE: Thomas Schatz, Supervisor Alisa Perren Artful Scares: A24 and the Elevated Horror Cycle by Alexander Joseph Brannan Thesis Presented to the Faculty of the Graduate School of The University of Texas at Austin in Partial Fulfillment of the Requirements for the Degree of Master of Arts The University of Texas at Austin May 2021 Acknowledgements This project would not have been completed were it not for the aid and support of a number of fine folks. First and foremost, my committee members Thomas Schatz and Alisa Perren, who with incisive notes have molded my disparate web of ideas into a legible, linear thesis. My fellow graduate students, who have kept me sane during the COVID-19 pandemic with virtual happy hours and (socially distant) meetups in the park serving as brief respites from the most trying of academic years. In addition, I am grateful to the University of Texas at Austin and the department of Radio-Television-Film in the Moody College of Communications for all of the resources and opportunities they have provided to me. Finally, my parents, who have enough pride on my behalf to trump my doubts. Here’s hoping they don’t find these chapters too mind-numbingly dull. Onward and upward! iv Abstract Artful Scares: A24 and the Elevated Horror Cycle Alexander Joseph Brannan, M.A. The University of Texas at Austin, 2021 Supervisor: Thomas Schatz One notable cycle of production in horror cinema in the 2010s was so-called “elevated horror.” The independent company A24 has contributed heavily to this cycle. -

21st Century Film Criticism: The Evolution of Film Criticism from Professional Intellectual Analysis to a Democratic Phenomenon, Asher Weiss

Claremont Colleges Scholarship @ Claremont CMC Senior Theses CMC Student Scholarship 2018 21st Century Film Criticism: The Evolution of Film Criticism from Professional Intellectual Analysis to a Democratic Phenomenon Asher Weiss Recommended Citation Weiss, Asher, "21st Century Film Criticism: The Evolution of Film Criticism from Professional Intellectual Analysis to a Democratic Phenomenon" (2018). CMC Senior Theses. 1910. http://scholarship.claremont.edu/cmc_theses/1910 This Open Access Senior Thesis is brought to you by Scholarship@Claremont. It has been accepted for inclusion in this collection by an authorized administrator. For more information, please contact [email protected]. Claremont McKenna College 21st Century Film Criticism: The Evolution of Film Criticism from Professional Intellectual Analysis to a Democratic Phenomenon Submitted to Professor James Morrison By Asher Weiss For Senior Thesis Spring 2018 April 23, 2018 “In the arts, the critic is the only independent source of information. The rest is advertising.” – Pauline Kael “Good films by real filmmakers aren’t made to be decoded, consumed or instantly comprehended.” – Martin Scorsese “Firms and aggregators have set a tone that is hostile to serious filmmakers, suggesting that they have conditioned viewers to be less interested in complex offerings.” – Martin Scorsese Acknowledgements I would like to thank my reader, Professor James Morrison for his continued mentorship and support over the last four years which has greatly improved my film knowledge and writing. Thank you Professor Morrison, for always challenging me to look at films in new ways and encouraging me to express my true opinions.