Detecting Malicious Accounts Showing Adversarial Behavior in Permissionless Blockchains

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Reasons and Decision: in the Matter of 3Iq Corp. and the Bitcoin Fund

Ontario Commission des 22nd Floor 22e étage Securities valeurs mobilières 20 Queen Street West 20, rue Queen Ouest Commission de l’Ontario Toronto ON M5H 3S8 Toronto ON M5H 3S8 Citation: 3iQ Corp (Re), 2019 ONSEC 37 Date: 2019-10-29 File No. 2019-7 IN THE MATTER OF 3IQ CORP. and THE BITCOIN FUND REASONS AND DECISION (Section 8 of the Securities Act, RSO 1990, c S.5) Hearing: June 3, 6, 7 and July 24, 2019 Decision: October 29, 2019 Panel: Lawrence P. Haber Commissioner and Chair of the Panel Appearances: Christopher Naudie For 3iQ Corp. and The Bitcoin Fund Lori Stein Evan Thomas Louis Tsilivis Michelle Vaillancourt For Staff of the Ontario Securities Alvin Qian Commission TABLE OF CONTENTS I. OVERVIEW .............................................................................................. 1 II. BACKGROUND.......................................................................................... 2 A. The Application ............................................................................... 2 B. Bitcoin ........................................................................................... 3 III. PRELIMINARY ISSUES ............................................................................... 4 IV. ISSUES ................................................................................................... 5 V. IS BITCOIN AN ILLIQUID ASSET SUCH THAT THE FUND WILL NOT BE COMPLIANT WITH THE NI 81-102 RESTRICTIONS ON ILLIQUID ASSETS? ......... 6 A. Law on Liquidity ............................................................................. -

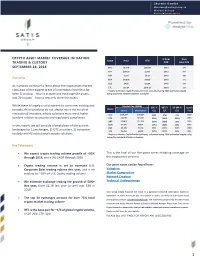

Crypto Asset Market Coverage Initiation: Trading

Sherwin Dowlat [email protected] Michael Hodapp [email protected] CRYPTO ASSET MARKET COVERAGE INITIATION: % from Days Name Price ATH TRADING & CUSTODY ATH Since ATH SEPTEMBER 18, 2018 BTC $6,270 $20,089 (69%) 274 ETH $197.86 $1,432 (86%) 247 XRP $.273 $3.84 (93%) 256 Overview BCH $420.06 $4,330 (90%) 271 EOS $4.90 $22.89 (79%) 141 As investors continue to learn about the cryptoasset market LTC $52.34 $375.29 (86%) 272 class, two of the biggest areas of uncertainty have thus far * Refers to Market Capitalization estimate, calculated using 2050 estimated supply using respective network inflation schedules been 1) trading – how to acquire and exchange the assets, and 2) custody – how to securely store the assets. While there is largely a solid market for consumer trading and Market Cap ($MM) 30D % 90D % 52-Wk % Launch Name custody, these products do not always meet the needs of Current 2050 Implied* G/L G/L G/L Year institutional investors, whose solutions must meet higher BTC $108,304 $131,567 (3%) (7%) 45% 2009 burdens relative to security and regulatory compliance. ETH $20,186 $29,081 (35%) (62%) (30%) 2015 XRP $10,874 $27,316 (19%) (50%) 38% 2013 In this report, we will provide a breakdown of the current BCH $7,290 $8,814 (27%) (53%) (2%) 2017 $4,438 $7,150 (6%) (54%) 643% 2018 landscape for 1) exchanges, 2) OTC providers, 3) consumer EOS LTC $3,053 $4,390 (10%) (47%) (4%) 2011 custody and 4) institutional custody solutions. * Refers to Market Capitalization estimate, calculated using 2050 estimated supply using respective network inflation schedules. -

List of Bitcoin Companies

List of bitcoin companies This is a list of Wikipedia articles about for-profit companies with notable commercial activities related to bitcoin. Common services are cryptocurrency wallet providers, bitcoin exchanges, payment service providers[a] and venture capital. Other services include mining pools, cloud mining, peer-to-peer lending, exchange-traded funds, over-the-counter trading, gambling, micropayments, affiliates and prediction markets. Headquarters Company Founded Service Notes Refs Country City bitcoin exchange, wallet Binance 2017 Japan Tokyo [1] provider bitcoin exchange, wallet Bitcoin.com [data unknown/missing] Japan Tokyo provider bitcoin exchange, digital Hong currency exchange, Bitfinex 2012 Kong electronic trading platform United San multisignature security BitGo 2013 States Francisco platform for bitcoin ASIC-based bitcoin BitMain 2013 China Beijing miners cryptocurrency BitMEX 2014 derivatives trading Seychelles platform United payment service BitPay 2011 Atlanta States provider Bitstamp 2011 bitcoin exchange Luxembourg bitcoin debit card, Bitwala 2015 Berlin international transfers, [2] Germany bitcoin wallet Blockchain.com 2011 wallet provider Luxembourg United San Blockstream 2014 software States Francisco shut down by the United BTC-e 2011 Russia bitcoin exchange States government in July 2017 Canaan ASIC-based bitcoin 2013 China Beijing Creative miners United Circle 2013 Boston wallet provider States United San wallet provider, bitcoin Coinbase 2012 States Francisco exchange bitcoin/ether exchange, wallet provider, -

Investing in Cryptocurrency, the Labyrinth of Tax Reporting

Investing in Cryptocurrency, the Labyrinth of Tax Reporting This article is an extract of the CH Alliance yearly publication This article is an extract of the CH Alliance yearly publication on Innovation for Financial Services. This 2019 edition includes several articles on the “Tokenization” of the economy, expressed by the Initial Coin Offering (ICO) wave, which is now becoming a semi-regulated activity in major financial centers - the first step to global recognition. The remaining articles provide insights on hot topics for several Financial Services sub industries: AI for Wealth Management, chatbots in B2C Banking, autonomous vehicles and catastrophe bonds in Insurance, hot Fintech for Real Estate finance management and data visualization in CIB. CH&Co. is a leading Swiss consulting firm focused exclusively on the Financial Services Industry. With 7 offices worldwide and over 300 professionals, we proudly serve the industry’s major banks, insurance companies, wealth managers and investment funds. To read more please visit Chappuishalder.com 1 Tokenisation challenges and opportunities Investing in Cryptocurrency, the Labyrinth of Tax Reporting CRYPTOCURRENCY, A NEW ERA OF INVESTMENTS Like all investments, undisclosed Cryptocurrency markets gained significant profits will be sanctioned and power over the past three years. With their now more than ever, with the rapid surges and collapses in value, virtu- emergence of cryptocurrencies, al currencies have shifted into the public eye regulators are eager to catch in recent years. Numerous investors started uncompliant investors with brand trading cryptocurrencies on brand new online new regulations. platforms with the hope of making some good money quickly. But this craze also woke up the interest of regulators and tax administrations. -

Asia's Crypto Landscape

A MESSARI REPORT Asia’s Crypto Landscape The key exchanges, funds, and market makers that define crypto in China, Japan, Korea, Hong Kong, Singapore, and Southeast Asia, with commentary on regulatory and investment trends. SPONSORED BY Author Mira Christanto RESEARCH ANALYST AT MESSARI Mira is a Research Analyst at Messari. Previously, she was a Senior Portfolio Manager at APG Asset Management, managing a US $7 billion fund focused on Real Estate equity investments across Asia Pacific. Prior to APG, Mira worked at $15 billion hedge fund TPG-Axon Capital Management, an affiliate of Texas Pacific Group, where she was a private and public market investor across multiple sectors and geographies. Before that, Mira was in Credit Suisse’s Investment Banking Division in the Leveraged Finance and Restructuring group in New York and worked on a variety of mergers & acquisitions, leveraged buyouts and restructuring deals. She received a BA in Economics and Mathematical Methods in the Social Sciences from Northwestern University. Never miss an update Real-time monitoring and alerts for all the assets you support Built for: • Funds • Exchanges • Custodians • Infrastructure Providers Learn More Asia’s Crypto Landscape 2 © 2021 Messari Table of Contents Introduction 5 SBI Group 67 SBI Virtual Currencies Trade 68 1.0 The Countries 8 TaoTao 69 GMO Coin 69 China 8 Bitpoint 69 Hong Kong 13 DMM Bitcoin 69 Japan 15 Rakuten Wallet 70 LVC (BITMAX) 70 South Korea 20 B Dash Ventures & B Cryptos 71 Singapore 23 Rest of Southeast Asia 25 South Korea 72 Philippines -

BITCOIN at the Tipping Point

BITCOIN At the Tipping Point Citi GPS: Global Perspectives & Solutions March 2021 Data provided by Citi is one of the world’s largest financial institutions, operating in all major established and emerging markets. Across these world markets, our employees conduct an ongoing multi-disciplinary conversation – accessing information, analyzing data, developing insights, and formulating advice. As our premier thought leadership product, Citi GPS is designed to help our readers navigate the global economy’s most demanding challenges and to anticipate future themes and trends in a fast-changing and interconnected world. Citi GPS accesses the best elements of our global conversation and harvests the thought leadership of a wide range of senior professionals across our firm. This is not a research report and does not constitute advice on investments or a solicitations to buy or sell any financial instruments. For more information on Citi GPS, please visit our website at www.citi.com/citigps. Citi GPS: Global Perspectives & Solutions March 2021 Sandy Kaul Richard Webley Global Head of Citi Business Advisory Head of Citi Global Data Insights Services +1-212-723-4576 | [email protected] +1-212-723-5118 | [email protected] Jonathan Klein Shobhit Maini Associate, Citi Business Advisory Services Markets & Securities Services Transformation +1-212-723-2622 | [email protected] +44-20-7508-7470 | [email protected] Omid Malekan Ioana Niculcea Blockchain Innovation Expert FinTech & Emerging Technology Lead, Citi Ventures Citi Business Advisory Services Adjunct Professor, Columbia Business School +1-212-723-5075 | [email protected] [email protected] March 2021 Citi GPS: Global Perspectives & Solutions 3 BITCOIN At the Tipping Point Kathleen Boyle, CFA The first Citi GPS venture into digital currency was back in 2014 when we featured it Managing Editor, Citi GPS in an article in our second Disruptive Innovations report. -

Detecting Fake Trading Volume on Cryptocurrency Exchange By

Detecting Fake Trading Volume on Cryptocurrency Exchange by Yuting Wu An honors thesis submitted in partial fulfillment of the requirements for the degree of Bachelor of Science Undergraduate College Leonard N. Stern School of Business New York University May 2019 Professor Marti G. Subrahmanyam Professor David L. Yermack Faculty Adviser Thesis Adviser 1 Introduction To date, there are more than 500 cryptocurrency exchanges that support active trading of cryptocurrencies and the combined 24-hour volume of the whole market is up to billions of dollars. However, most exchanges were founded within the last five years and can be founded by anybody without any inspection, regulation, or warranty. About 120 new crypto exchanges were created in 2018 and also made themselves known to the public, but the estimated total number of new exchanges exceeds 2001. In fact, getting the total count of cryptocurrency exchanges around the world is nearly impossible. Many exchanges have failed or bailed, and some exchanges are created just to scam investors. The only channel for customers to compare and know exchanges is through exchange ranking websites, and CoinMarketCap is the most popular one which ranks exchanges by their daily trading volume. As a higher trading volume means better liquidity, popularity, and recognition from the public, for these exchanges, a higher ranking can serve as a better propaganda than any advertisement or social media campaign. As CoinMarketCap takes data directly from exchange's website, the trading volume is completely self-reported. Therefore, many exchanges start using bots to fake trades and create fraudulent high volume to get better ranking and exposure to the public. -

Address Clustering Heuristics for Ethereum

Address clustering heuristics for Ethereum Friedhelm Victor[0000−0001−8329−3133] Technical University of Berlin, Straße des 17. Juni 135, 10623 Berlin, Germany [email protected] Abstract. For many years, address clustering for the identification of entities has been the basis for a variety of graph-based investigations of the Bitcoin blockchain and its derivatives. Especially in the field of fraud detection it has proven to be useful. With the popularization and increasing use of alternative blockchains, the question arises how to rec- ognize entities in these new systems. Currently, there are no heuristics that can directly be applied to Ethereum's account balance model. This drawback also applies to other smart contract platforms like EOS or NEO, for which previous transaction network analyses have been lim- ited to address graphs. In this paper, we show how addresses can be clustered in Ethereum, yielding entities that are likely in control of mul- tiple addresses. We propose heuristics that exploit patterns related to deposit addresses, multiple participation in airdrops and token autho- rization mechanisms. We quantify the applicability of each individual heuristic over the first 4 years of the Ethereum blockchain and illustrate identified entities in a sample token network. Our results show that we can cluster 17.9% of all active externally owned account addresses, indi- cating that there are more than 340,000 entities that are likely in control of multiple addresses. Comparing the heuristics, we conclude that the deposit address heuristic is currently the most effective approach. Keywords: Blockchain · Accounts · Ethereum · Network Analysis. 1 Introduction Since the introduction and popularization of Bitcoin [22] in 2009, blockchain and cryptocurrency analysis has gained a foothold in science as well as in business. -

21 Cryptos Magazine 2018

MARCH 2018 N] EdItIo [ReBrAnD RYPTOS 21 C ZINE MAGA NdAr OiN CaLe t sIs + c MeN AnAlY NtErTaIn s lEs + e sSoN ArTiC aDiNg lE s + tR ViEwS GuIdE iNtEr iCkS + PrO PPAGE 1 21 CRYPTOS ISSUE 5 THE 21C PRO SQUAD (In alphabetical order) INDEX ANBESSA @anbessa100 BEASTLORION @Beastlyorion WELCOME 02-04 BITCOIN DAD @bitcoin_dad PARABULLIC TROLL @Crypto_God CRYPTO CATCH-UP 05-07 BULLY @cryptobully CRYPTO BULLDOG @cryptobulld0g TOP PICKS 21-16 08-14 CRYPTO CHIEF @cryptochief_ IVAN S. (CRYPTOGAT) @cryptogat A LESSON WITH THE TUTOR 15-17 CRYPTOMANIAC @happywithcrypto LESSON 4 CRYPTORCA @cryptorca CRYPTO RAND @crypto_rand TOP PICKS 15-11 18-22 CRYPTOTUTOR @cryptotutor D. DICKERSON @dickerson_des JOE’S ICOS 23-26 FLORIAN @Marsmensch JOE @CryptoSays TOP PICKS 10- 6 27-31 JOE CRYPTO @cryptoridy MISSNATOSHI @missnatoshi FLORIAN’S SECURITY 32-36 MOCHO17 @cryptomocho NOTSOFAST @notsofast MISSNATOSHI’S CORNER 37-40 PAMELA PAIGE @thepinkcrypto PATO PATINYO @CRYPTOBANGer TOP 5 PICKS 41-46 THEBITCOINBEAR @thebitcoinbare UNCLE YAK @yakherders PINK PAGES 47 NEEDACOIN @needacoin PINKCOIN’S CREATIVE @elypse_pink DESIGN DIRECTOR COLLEEN’S NOOB JOURNEY 48-51 CEO & EDITOR-IN-CHIEF THE COIN BRIEF V PAMELA’S AMA 52-58 @GameOfCryptos BURGER’S ANALYSIS 59-60 PRODUCTION MANAGER NOTSOFAST’S MINING PART 2 61-64 ÍRIS @n00bqu33n CRYPTO ALL- STARS 65-69 ART DIRECTOR BEAST’S TRADING DOJO 70-73 ANANKE @anankestudio BULLDOG’S FA 74-80 EDITOR COLLEEN G DECENTRALADIES @cryptonoobgirl IRIS ASKS 81-88 DESIGNER PAMELA’S DIARY 89-91 TIFF CRYPTO CULTURE 92-95 @tiffchau SOCIAL MEDIA MANAGER EVENT CALENDAR 96-102 JAMIE SEE YA NEXT MONTH! 103 @CryptoHulley PAGE 1 21 CRYPTOS ISSUE 5 DISCLAIMER Here’s a bit of the boring disclaimery type stuff. -

(Upbit Market Index) Methodology Book

UpbitIndexLaboratory UBMI(UpbitMarketIndex) MethodologyBook version1.1 UpbitMarketIndex(UBMI)is ThestructureofindexesthatcomprisedigitalassetslistedonUpbitExchangeandiscalculatedin realtime.Itisdesignedtocalculatetheindexesthatrepresentthedigitalassetmarketusingthe transactiondataofthedigitalassetslistedontheExchange.ItisannouncedontheUpbitIndex page(https://ubcindex.com)dailyandinrealtime. Methodologyandrules|UpbitIndexLaboratory 목차 1. Index Overview.......................................................................................................................3 1.1CompositionStructure...................................................................................................4 1.2ListofIndexes................................................................................................................5 2. Composite Market Index.........................................................................................................6 2.1IndexComponents.........................................................................................................8 2.2WeightingMethod.........................................................................................................9 2.3PriceandCirculatingSupply...................................................................................10 Price............................................................................................................................10 CriculatingSupply........................................................................................................10 -

Huobi 19 Robinhood 20 Shapeshift 20 Tzero 21 Custodians 21 Other 22 IV

Security Token Economy & Investments #3 Review of important news and content New Bermuda Legislation Will Create a Novel Class of Bank to Service Fintech Companies. www.zeus.exchange List of content Introduction 2 I. Legal status in the world 3 Bermuda 3 China 3 EU 3 Hong Kong 4 India 4 Iran 4 Japan 5 Malta 5 Netherlands 5 Philippines 5 Poland 5 Singapore 6 South Korea 6 Switzerland 6 Thailand 6 UK 7 USA 7 II. Tokenization 10 III. Cryptoexchanges & Trading platforms 14 Binance 14 Bitmex 14 Bithumb 15 Bittrex 16 Coinbase 16 eToro 19 Huobi 19 Robinhood 20 Shapeshift 20 tZero 21 Custodians 21 Other 22 IV. Top investments in blockchain startups 25 Argo Blockchain IPO 25 Atlas Protocol 25 Audius 25 Australian State Government 25 Zeus.Exchange - We Tokenize Stock Markets 0 Binance 26 Bitmain 26 Blockchain Startups 26 Dfinity startup 27 Decentraland 27 Golden Gate Ventures 27 Hong Kong University 27 LINE 28 LuneX Ventures 28 NFL Players Association 28 Pantera Capital 28 Review.Network 29 SBI Holdings 29 South Korea 29 SFOX 29 V. Top content (trading & tokenization) 31 Security Tokens 31 Tokenization 31 Сonclusions 32 Legal disclaimer 33 Zeus.Exchange - We Tokenize Stock Markets 1 Introduction Dear Reader! We are glad to welcome you in our second issue of the analytical edition of the New Blockchain Economy and Investment, where we will regularly publish an overview of the industry news. In this issues we will describe top August news following topics: Legislation News - news across the world regarding the legislation of the blockchain industry and cryptocurrency. -

Digital Asset Law of Thailand After Three Years

DIGITAL ASSET LAW OF THAILAND AFTER THREE YEARS It has been almost three years since the Emergency Decree on Digital Asset Business Operation B.E. 2561 of Thailand (“DA Law”) came into force on 14th May 2018. Since then several implementation rules have been issued by the Ministry of Finance (“MOF”), the Securities and Exchange Commission Thailand (“SEC”) and the SEC Office. This article gives an overview of the implementation and practice of the DA Law from May 2018 to February 2021. 1. Allowed Cryptocurrencies On 11th September 2018, the SEC Office announced a list of 7 cryptocurrencies that have been allowed for ICO investments and transactions and base trading pairs. They are Bitcoin, Bitcoin Cash, Ethereum, Ethereum Classic, Litecoin, Ripple and Stellar. However, the SEC did not recognize or certify that they are a legal tender. Also six months later, the SEC removed Bitcoin Cash, Ethereum Classic and Litecoin from the list. 2. Digital Asset Business Licenses As of 15th February 2021, the MOF has issued 14 licenses to 11 operators as follows:- No. Licensed Operators Type of Permitted Digital Websites Licenses Assets 1 Bitcoin Co., Ltd. Exchange Cryptocurrencies www.bx.in.th (“CC”) and Digital Tokens (“DT”) 2 Bitkub Online Co., Ltd. Exchange CC and DT www.bitkub.com 3 Satang Corporation Co., Ltd. Exchange CC and DT www.satang.pro 4 Huobi (Thailand) Co., Ltd. Exchange CC and DT www.huobi.co.th 5 ER X Co., Ltd. Exchange DT www.er-x.io 6 Zipmex Co., Ltd, Exchange CC and DT www.zipmex.co.th 7 Upbit Exchange (Thailand) Exchange CC and DT www.th.upbit.com Co., Ltd.