A Neurophysiologic Study of Visual Fatigue in Stereoscopic Related Displays

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Rapport AI Internationale Verkenning Van De Sociaaleconomische

REPORT ON ARTIFICIAL INTELLIGENCE: INTERNATIONAL EXPLORATION OF THE SOCIO-ECONOMIC IMPACT. STATE OF PLAY AT THE END OF OCTOBER 2020. 5. AI as an octopus with tentacles in various sectors: an illustration. 5.1 General purpose: sustainable development (SDGs). AI is a general-purpose technology and can be used to promote the general welfare of society [28]. The contribution of AI to achieving the UN Sustainable Development Goals (SDGs) in areas such as education, health care, transport, agriculture and sustainable cities is the best evidence of this. Many public and private organisations, including the World Bank, a number of United Nations agencies and the OECD, want to harness AI to make progress towards the SDGs. The McKinsey Global Institute (MGI) database of AI applications [29] shows that AI can be deployed for each of the 17 Sustainable Development Goals. The figure below shows the number of AI use cases (applications) in the MGI database that can support each of the UN SDGs. Figure 1: AI use cases supporting the SDGs [30]. [28] Steels, L., Berendt, B., Pizurica, A., Van Dyck, D., Vandewalle, J., Artificiële intelligentie. Naar een vierde industriële revolutie?, Standpunt nr. 53, KVAB, 2017. [29] McKinsey Global Institute, Notes from the AI frontier: applying AI for social good, Discussion paper, December 2018; Chui, M., Chung, R., van Heteren, A., Using AI to help achieve sustainable development goals, UNDP, January 21, 2019. [30] McKinsey note: This chart reflects the number and distribution of use cases and should not be read as a comprehensive evaluation of AI potential for each SDG; if an SDG has a low number of cases, that is a reflection of our library rather than of AI applicability to that SDG.

Stereo Capture and Display At

Creation of a Complete Stereoscopic 3D Workflow for SoFA. Allison Hettinger and Ian Krassner. 1. Introduction. 1.1 3D Trend. Stereoscopic 3D motion pictures have recently risen to popularity once again following the success of films such as James Cameron’s Avatar. More and more films are being converted to 3D but few films are being shot in 3D. Currently available technology and knowledge of that technology (along with cost) are preventing most films from being shot in 3D. Shooting in 3D is an advantage because two slightly different images are produced that mimic the two images the eyes see in normal vision. Many take the cheaper route of shooting in 2D and converting to 3D. This results in a 3D image, but usually nowhere near the quality of a film originally shot in 3D. This is because a computer has to create the second image, which can result in errors. It is also important to note that a 3D image does not necessarily mean a stereo image. 3D can be used to describe images that have an appearance of depth, such as 3D animations. Stereo images refer to images that make use of retinal disparity to create the illusion of objects going out of and into the screen plane. Stereo images are optical illusions that make use of several cues that the brain uses to perceive a scene. Examples of monocular cues are relative size and position, texture gradient, perspective and occlusion. These cues help us determine the relative depth positions of objects in an image. Binocular cues such as retinal disparity and convergence are what give the illusion of depth.

Appearance-Based Gaze Estimation with Deep Learning: a Review and Benchmark

MANUSCRIPT, APRIL 2021. Appearance-based Gaze Estimation With Deep Learning: A Review and Benchmark. Yihua Cheng (1), Haofei Wang (2), Yiwei Bao (1), Feng Lu (1, 2, *). (1) State Key Laboratory of Virtual Reality Technology and Systems, SCSE, Beihang University, China. (2) Peng Cheng Laboratory, Shenzhen, China. Abstract: Gaze estimation reveals where a person is looking. It is an important clue for understanding human intention. The recent development of deep learning has revolutionized many computer vision tasks, and appearance-based gaze estimation is no exception. However, it lacks a guideline for designing deep learning algorithms for gaze estimation tasks. In this paper, we present a comprehensive review of the appearance-based gaze estimation methods with deep learning. We summarize the processing pipeline and discuss these methods from four perspectives: deep feature extraction, deep neural network architecture design, personal calibration as well as device and platform. Since the data pre-processing and post-processing methods are crucial for gaze estimation, we also survey face/eye detection method, data rectification method, 2D/3D gaze conversion method, and gaze origin conversion method. To fairly compare the performance of various gaze estimation approaches, we characterize all the publicly available gaze estimation datasets and collect the code of typical gaze estimation algorithms. We implement these codes and set up a benchmark of converting the results of different methods … Fig. 1 (panel labels: Deep learning algorithms, Chip, Platform, Calibration, Feature, Model). Deep learning based gaze estimation relies on simple devices and complex deep learning algorithms to estimate human gaze. It usually uses off-the-shelf cameras to capture facial appearance, and employs deep learning algorithms to regress gaze from the appearance.

Through the Eyes of the Beholder: Simulated Eye-Movement Experience (“SEE”) Modulates Valence Bias in Response to Emotional Ambiguity

Emotion, 2018, Vol. 18, No. 8, 1122–1127. © 2018 American Psychological Association. 1528-3542/18/$12.00 http://dx.doi.org/10.1037/emo0000421 BRIEF REPORT. Through the Eyes of the Beholder: Simulated Eye-Movement Experience (“SEE”) Modulates Valence Bias in Response to Emotional Ambiguity. Maital Neta and Michael D. Dodd, University of Nebraska–Lincoln. Although some facial expressions provide clear information about people’s emotions and intentions (happy, angry), others (surprise) are ambiguous because they can signal both positive (e.g., surprise party) and negative outcomes (e.g., witnessing an accident). Without a clarifying context, surprise is interpreted as positive by some and negative by others, and this valence bias is stable across time. When compared to fearful expressions, which are consistently rated as negative, surprise and fear share similar morphological features (e.g., widened eyes) primarily in the upper part of the face. Recently, we demonstrated that the valence bias was associated with a specific pattern of eye movements (positive bias associated with faster fixation to the lower part of the face). In this follow-up, we identified two participants from our previous study who had the most positive and most negative valence bias. We used their eye movements to create a moving window such that new participants viewed faces through the eyes of one of our previous participants (subjects saw only the areas of the face that were directly fixated by the original participants in the exact order they were fixated; i.e., Simulated Eye-movement Experience). The input provided by these windows modulated the valence ratings of surprise, but not fear faces.

Modulation of Saccadic Curvature by Spatial Memory and Associative Learning

Modulation of Saccadic Curvature by Spatial Memory and Associative Learning. Dissertation for the award of the degree of Doctor of Natural Sciences (Dr. rer. nat.), submitted to the Department of Psychology of the Philipps-Universität Marburg by Stephan König from Arnsberg. Marburg/Lahn, October 2010. Accepted as a dissertation by the Department of Psychology of the Philipps-Universität Marburg on _________________. First reviewer: Prof. Dr. Harald Lachnit, Philipps-Universität Marburg. Second reviewer: Prof. Dr. John Pearce, Cardiff University. Date of the oral examination: 27.10.2010. Acknowledgment. I would like to thank Prof. Dr. Harald Lachnit for providing the opportunity for my work on this thesis. I would like to thank Prof. Dr. Harald Lachnit, Dr. Anja Lotz and Prof. Dr. John Pearce for proofreading, reviewing and providing many valuable suggestions during the process of writing. I would like to thank Peter Nauroth, Sarah Keller, Gloria-Mona Knospe, Sara Lucke and Anja Riesner who helped in recruiting participants as well as collecting data. I would like to thank the Deutsche Forschungsgemeinschaft for their support through the graduate program NeuroAct (DFG 885/1), and project Blickbewegungen als Indikatoren assoziativen Lernens (DFG LA 564/20-1) granted to Prof. Dr. Harald Lachnit. Summary. The way the eye travels during a saccade typically does not follow a straight line but rather shows some curvature instead. Converging empirical evidence has demonstrated that curvature results from conflicting saccade goals when multiple stimuli in the visual periphery compete for selection as the saccade target (Van der Stigchel, Meeter, & Theeuwes, 2006). Curvature away from a competing stimulus has been proposed to result from the inhibitory deselection of the motor program representing the saccade towards that stimulus (Sheliga, Riggio, & Rizzolatti, 1994; Tipper, Howard, & Houghton, 2000).

A Survey of Autonomous Driving: Common Practices and Emerging Technologies

Accepted March 22, 2020. Digital Object Identifier 10.1109/ACCESS.2020.2983149. A Survey of Autonomous Driving: Common Practices and Emerging Technologies. EKIM YURTSEVER (1) (Member, IEEE), JACOB LAMBERT (1), ALEXANDER CARBALLO (1) (Member, IEEE), AND KAZUYA TAKEDA (1, 2) (Senior Member, IEEE). (1) Nagoya University, Furo-cho, Nagoya, 464-8603, Japan. (2) Tier4 Inc., Nagoya, Japan. Corresponding author: Ekim Yurtsever. ABSTRACT: Automated driving systems (ADSs) promise a safe, comfortable and efficient driving experience. However, fatalities involving vehicles equipped with ADSs are on the rise. The full potential of ADSs cannot be realized unless the robustness of state-of-the-art is improved further. This paper discusses unsolved problems and surveys the technical aspect of automated driving. Studies regarding present challenges, high-level system architectures, emerging methodologies and core functions including localization, mapping, perception, planning, and human machine interfaces, were thoroughly reviewed. Furthermore, many state-of-the-art algorithms were implemented and compared on our own platform in a real-world driving setting. The paper concludes with an overview of available datasets and tools for ADS development. INDEX TERMS: Autonomous Vehicles, Control, Robotics, Automation, Intelligent Vehicles, Intelligent Transportation Systems. I. INTRODUCTION. According to a recent technical report by the National Highway Traffic Safety Administration (NHTSA), 94% of road accidents are caused by human errors [1]. Against this backdrop, Automated Driving Systems (ADSs) are being developed with the promise of … necessary here. Eureka Project PROMETHEUS [11] was carried out in Europe between 1987-1995, and it was one of the earliest major automated driving studies. The project led to the development of VITA II by Daimler-Benz, which succeeded in automatically driving on highways [12].

Virtual Reality and Visual Perception by Jared Bendis

Virtual Reality and Visual Perception by Jared Bendis. Introduction. Goldstein (2002) defines perception as a “conscious sensory experience” (p. 6), and as scientists investigate how the human perceptual system works they also find themselves investigating how the human perceptual system doesn’t work and how that system can be fooled, exploited, and even circumvented. The pioneers in the ability to control the human perceptual system have been in the field of Virtual Reality. In Simulated and Virtual Realities – Elements of Perception, Carr (1995) defines Virtual Reality as “…the stimulation of human perceptual experience to create an impression of something which is not really there” (p. 5). Heilig (2001) refers to this form of “realism” as “experience”, and in his 1955 article “The Cinema of the Future” he addresses the need to look carefully at perception and breaks down the precedence of perceptual attention as: Sight 70%, Hearing 20%, Smell 5%, Touch 4%, Taste 1% (p. 247). Not surprisingly, sight is considered the most important of the senses, as Leonardo da Vinci observed: “The eye deludes itself less than any of the other senses, because it sees by none other than the straight lines which compose a pyramid, the base of which is the object, and the lines conduct the object to the eye… But the ear is strongly subject to delusions about the location and distance of its objects because the images [of sound] do not reach it in straight lines, like those of the eye, but by tortuous and reflexive lines. … The sense of smell is even less able to locate the source of an odour.

Intraocular Lenses and Spectacle Correction

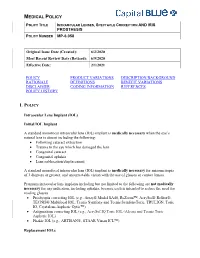

MEDICAL POLICY. POLICY TITLE: INTRAOCULAR LENSES, SPECTACLE CORRECTION AND IRIS PROSTHESIS. POLICY NUMBER: MP-6.058. Original Issue Date (Created): 6/2/2020. Most Recent Review Date (Revised): 6/9/2020. Effective Date: 2/1/2021. POLICY; PRODUCT VARIATIONS; DESCRIPTION/BACKGROUND; RATIONALE; DEFINITIONS; BENEFIT VARIATIONS; DISCLAIMER; CODING INFORMATION; REFERENCES; POLICY HISTORY. I. POLICY. Intraocular Lens Implant (IOL). Initial IOL Implant: A standard monofocal intraocular lens (IOL) implant is medically necessary when the eye’s natural lens is absent, including the following: following cataract extraction; trauma to the eye which has damaged the lens; congenital cataract; congenital aphakia; lens subluxation/displacement. A standard monofocal intraocular lens (IOL) implant is medically necessary for anisometropia of 3 diopters or greater, and uncorrectable vision with the use of glasses or contact lenses. Premium intraocular lens implants including but not limited to the following are not medically necessary for any indication, including aphakia, because each is intended to reduce the need for reading glasses: Presbyopia correcting IOL (e.g., Array® Model SA40, ReZoom™, AcrySof® ReStor®, TECNIS® Multifocal IOL, Tecnis Symfony and Tecnis SymfonyToric, TRULIGN, Toric IO, Crystalens Aspheric Optic™); Astigmatism correcting IOL (e.g., AcrySof IQ Toric IOL (Alcon) and Tecnis Toric Aspheric IOL); Phakic IOL (e.g., ARTISAN®, STAAR Visian ICL™). Replacement IOLs

Passenger Response to Driving Style in an Autonomous Vehicle

Passenger Response to Driving Style in an Autonomous Vehicle by Nicole Belinda Dillen. A thesis presented to the University of Waterloo in fulfillment of the thesis requirement for the degree of Master of Mathematics in Computer Science. Waterloo, Ontario, Canada, 2019. © Nicole Dillen 2019. I hereby declare that I am the sole author of this thesis. This is a true copy of the thesis, including any required final revisions, as accepted by my examiners. I understand that my thesis may be made electronically available to the public. Abstract. Despite rapid advancements in automated driving systems (ADS), current HMI research tends to focus more on the safety driver in lower level vehicles. That said, the future of automated driving lies in higher level systems that do not always require a safety driver to be present. However, passengers might not fully trust the capability of the ADS in the absence of a safety driver. Furthermore, while an ADS might have a specific set of parameters for its driving profile, passengers might have different driving preferences, some more defensive than others. Taking these preferences into consideration is, therefore, an important issue which can only be accomplished by understanding what makes a passenger uncomfortable or anxious. In order to tackle this issue, we ran a human study in a real-world autonomous vehicle. Various driving profile parameters were manipulated and tested in a scenario consisting of four different events. Physiological measurements were also collected along with self-report scores, and the combined data was analyzed using Linear Mixed-Effects Models. The magnitude of a response was found to be situation dependent: the presence and proximity of a lead vehicle significantly moderated the effect of other parameters.

Gaze Shifts As Dynamical Random Sampling

GAZE SHIFTS AS DYNAMICAL RANDOM SAMPLING. Giuseppe Boccignone, Dipartimento di Scienze dell’Informazione, Università di Milano, Via Comelico 39/41, 20135 Milano, Italy. Mario Ferraro, Dipartimento di Fisica Sperimentale, Università di Torino, via Pietro Giuria 1, 10125 Torino, Italy. ABSTRACT: We discuss how gaze behavior of an observer can be simulated as a Monte Carlo sampling of a distribution obtained from the saliency map of the observed image. To such end we propose the Levy Hybrid Monte Carlo algorithm, a dynamic Monte Carlo method in which the walk on the distribution landscape is modelled through Levy flights. Some preliminary results are presented comparing with data gathered by eye-tracking human observers involved in an emotion recognition task from facial expression displays. Index Terms: Gaze analysis, Active vision, Hybrid Monte Carlo, Levy flights. … so called “focus of attention” (FOA), to extract detailed information from the visual environment. An average of three eye fixations per second generally occurs, intercalated by saccades, during which vision is suppressed. The succession of gaze shifts is referred to as a scanpath [1]. Beyond the field of computational vision [2], [3], [4], [5] and robotics [6], [7], much research has been performed in the image/video coding [8], [9], [10], [11], [12], and image/video retrieval domains [13], [14]. However, one issue which is not addressed by most models [15] is the “noisy”, idiosyncratic variation of the random exploration exhibited by different observers when viewing the same scene, or even by the same subject along different trials [16] (see Fig.

Stereo 3D (Depth) from 2D

Stereo 3D (Depth) from 2D
• 3D information is lost by projection.
• How do we recover 3D information?
Viewing Stereo: Stereograms, Autostereograms, Depth from Stereo (Image, 3D Model).
Depth Cues: Shadows, Occlusions, Shading, Size Constancy, Perspective, Illusions (perspective), Height in Plane, Texture Gradient.
3D from 2D +
• Accommodation (Focus)
• Eye Vergence
• Motion
• Stereo
Accommodation
• Change in lens curvature according to object depth.
• Effective depth: 20-300 cm.
Eye Vergence
• Change in lens curvature according to object depth.
• Effective depth: up to 6 m.
Motion
Stereo Vision
• In a system with 2 cameras (eyes), 2 different images are captured.
• The "disparity" between the images is larger for closer objects: $\text{disp} \propto \frac{1}{\text{depth}}$
• "Fusion" of these 2 images gives disparities depth information.
Image Separation for Stereo
• Special Glasses
• Red/green images with red/green glasses.
• Orthogonal Polarization
• Alternating Shuttering
Optic System: Parlor Stereo Viewer (1850), Viewmaster (1939), ViduTech (2011).
Active Shutter System
Red/Green Filters: Anaglyphs, How they Work.
Orthogonal Polarization
• Linear Polarizers: 2 polarized projectors are used (or alternating polarization).
• Circular Polarizers: Left handed, Right handed.
TV and Computer Screens: Polarized Glasses
• Circular Polarizer Glasses: Same as polarizers, but reverse light direction (Left handed, Right handed).
Glasses Free TV and Computer Screens
• Parallax Stereogram (Parallax Barrier display): Uses vertical slits. Blocks part of screen from each eye.
• Lenticular lens method: Uses lens arrays to send a different image to each eye.
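The inverse relation between disparity and depth stated in the Stereo Vision slide above can be illustrated with a minimal Python sketch. It assumes a rectified pinhole stereo pair, for which depth = focal length × baseline / disparity; that model, the function name and the camera values (`focal_length_px`, `baseline_m`) are illustrative assumptions and are not taken from the slides.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres for a rectified pinhole stereo pair (assumed model).

    depth = focal_length * baseline / disparity, so disparity is
    proportional to 1 / depth: closer objects give larger disparities.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical setup: 700 px focal length, 6.5 cm between the cameras.
    for disparity in (5.0, 10.0, 20.0):
        depth = depth_from_disparity(disparity, 700.0, 0.065)
        print(f"disparity {disparity:5.1f} px -> depth {depth:.3f} m")
```

Doubling the disparity halves the computed depth, which is the proportionality the slide describes.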

New Methodology to Explore the Role of Visualisation in Aircraft Design Tasks

Int. J. Agile Systems and Management, Vol. 7, Nos. 3/4, 2014, p. 220. New methodology to explore the role of visualisation in aircraft design tasks. Evelina Dineva*, Arne Bachmann, Erwin Moerland, Björn Nagel and Volker Gollnick, Deutsches Zentrum für Luft- und Raumfahrt e.V. (DLR), German Aerospace Center, Blohmstr. 18, 21079 Hamburg, Germany. *Corresponding author. Abstract: A toolbox for the assessment of engineering performance in a realistic aircraft design task is presented. Participants solve a multidisciplinary optimisation (MDO) task by means of a graphical user interface (GUI). The quantity and quality of visualisation may be systematically varied across experimental conditions. The GUI also allows tracking behavioural responses like cursor trajectories and clicks. Behavioural data together with evaluation of the generated aircraft design can help uncover details about the underlying decision making process. The design and the evaluation of the experimental toolbox are presented. Pilot studies with two novice and two advanced participants confirm and help improve the GUI functionality. The selection of the aircraft design task is based on a numerical analysis that helped to identify a ‘sweet spot’ where the task is neither too easy nor too difficult. Keywords: empirical performance evaluation; visualisation; aircraft design; multidisciplinary optimisation; MDO; multidisciplinary collaboration; collaborative engineering. Reference to this paper should be made as follows: Dineva, E., Bachmann, A., Moerland, E., Nagel, B. and Gollnick, V. (2014) ‘New methodology to explore the role of visualisation in aircraft design tasks’, Int.