Multi-Modal Semantic Inconsistency Detection in Social Media News Posts

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Alex Pareene: Pundit of the Century

Alex Pareene: Pundit of the Century Alex Pareene, first of Wonkette, then Gawker, then Salon, then back to Gawker, then a stillborn First Run Media project, and now Splinter News is a great pundit. In fact, he is a brilliant pundit and criminally underrated. His talent is generally overlooked because he has by-and-large written for outlets derided by both the right and the center. Conservatives have treated Salon as a punching bag for years now, and Gawker—no matter how biting or insightful it got—was never treated as serious by the mainstream because of their willingness to sneer, and even cuss at, the powers that be. If instead Mr. Pareene had been blogging at Mother Jones or Slate for the last ten years, he would be delivering college commencement speeches by now. In an attempt to make the world better appreciate this elucidating polemicist, here are some of his best hits. Mr. Pareene first got noticed, rightfully, for his “Hack List” feature when he was still with Salon. Therein, he took mainstream pundits both “left” and right to task for, well, being idiots. What is impressive about the list is that although it was written years ago, when America’s political landscape was dramatically different from what it is today, it still holds up. In 2012, after noting that while The New York Times has good reporting and that not all of their opinion columns were bad… most of them were. Putting it succinctly: “Ross Douthat is essentially a parody of the sort of conservative Times readers would find palatable, now that David Brooks is a sad shell of his former self, listlessly summarizing random bits of social science and pretending the Republican Party is secretly moderate and reasonable.” Mr. -

Online Media and the 2016 US Presidential Election

Partisanship, Propaganda, and Disinformation: Online Media and the 2016 U.S. Presidential Election The Harvard community has made this article openly available. Please share how this access benefits you. Your story matters. Citation: Faris, Robert M., Hal Roberts, Bruce Etling, Nikki Bourassa, Ethan Zuckerman, and Yochai Benkler. 2017. Partisanship, Propaganda, and Disinformation: Online Media and the 2016 U.S. Presidential Election. Berkman Klein Center for Internet & Society Research Paper. Citable link: http://nrs.harvard.edu/urn-3:HUL.InstRepos:33759251 Terms of Use: This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#LAA AUGUST 2017 PARTISANSHIP, PROPAGANDA, & DISINFORMATION: Online Media & the 2016 U.S. Presidential Election. Robert Faris, Hal Roberts, Bruce Etling, Nikki Bourassa, Ethan Zuckerman, Yochai Benkler ACKNOWLEDGMENTS This paper is the result of months of effort and has only come to be as a result of the generous input of many people from the Berkman Klein Center and beyond. Jonas Kaiser and Paola Villarreal expanded our thinking around methods and interpretation. Brendan Roach provided excellent research assistance. Rebekah Heacock Jones helped get this research off the ground, and Justin Clark helped bring it home. We are grateful to Gretchen Weber, David Talbot, and Daniel Dennis Jones for their assistance in the production and publication of this study. This paper has also benefited from contributions of many outside the Berkman Klein community. The entire Media Cloud team at the Center for Civic Media at MIT’s Media Lab has been essential to this research. -

2018 Evaluation Report

Youth Engagement Fund - 2018 Evaluation Report, February 2019. PO Box 7748, Albuquerque, NM 87194. Table of Contents: Executive Summary; Background; Objectives; Methodology; Approach; Metrics; Findings; Voter Registration; Get Out The Vote (GOTV) -

The Structural Determinants of Media Contagion by Cameron Alexander Marlow

The Structural Determinants of Media Contagion by Cameron Alexander Marlow B.S. Computer Science, University of Chicago, 1999 M.S. Media Arts and Sciences, Massachusetts Institute of Technology, 2001 SUBMITTED TO THE PROGRAM IN MEDIA ARTS AND SCIENCES, SCHOOL OF ARCHITECTURE AND PLANNING, IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY AT THE MASSACHUSETTS INSTITUTE OF TECHNOLOGY SEPTEMBER, 2005 © 2005 MASSACHUSETTS INSTITUTE OF TECHNOLOGY. ALL RIGHTS RESERVED. Author: Department of Media Arts and Sciences, August 2, 2005 Certified by: Walter Bender, Senior Research Scientist, Department of Media Arts and Sciences, Thesis Supervisor Accepted by: Andrew Lippman, Chairman, Departmental Committee for Graduate Students, Department of Media Arts and Sciences Thesis Committee Thesis Supervisor: Walter Bender, Senior Research Scientist, Massachusetts Institute of Technology, Department of Media Arts and Sciences Thesis Reader: Keith Hampton, Assistant Professor, Massachusetts Institute of Technology, Department of Urban Studies and Planning Thesis Reader: Tom Valente, Associate Professor, University of Southern California, Department of Preventative Medicine The Structural Determinants of Media Contagion by Cameron Alexander Marlow Submitted to the Program in Media Arts and Sciences, School of Architecture and Planning, in partial fulfillment of the requirements for the degree of Doctor of Philosophy ABSTRACT Informal exchanges between friends, family and acquaintances play a crucial role in the dissemination of news and opinion. These casual interactions are embedded in a network of communication that spans our society, allowing information to spread from any one person to another via some set of intermediary ties. -

3 PM 8 Journalism Should Not Be Sponsored

The Society of Professional Journalists Board of Directors Zoom Meeting Feb. 1, 2020 Noon – 3 p.m. EST Improving and Protecting Journalism Since 1909 The Society of Professional Journalists is the nation’s largest and most broad-based journalism organization, dedicated to encouraging the free practice of journalism and stimulating high standards of ethical behavior. Founded in 1909 as Sigma Delta Chi, SPJ promotes the free flow of information vital to a well-informed citizenry, works to inspire and educate the next generation of journalists, and protects First Amendment guarantees of freedom of speech and press. AGENDA SOCIETY OF PROFESSIONAL JOURNALISTS BOARD OF DIRECTORS MEETING DATE: FEB. 1, 2020 TIME: NOON-3 P.M. EST JOIN VIA ZOOM AT https://zoom.us/j/250975465 (Meeting ID # 250 975 465) 1. Call to order – Newberry 2. Roll call – Aguilar • Patricia Gallagher Newberry • Matt Hall • Rebecca Aguilar • Lauren Bartlett • Erica Carbajal • Tess Fox • Taylor Mirfendereski • Mike Reilley • Yvette Walker • Andy Schotz (parliamentarian) 3. Minutes from meetings of 10/19/19 and 11/3/19 – Newberry 4. President’s report (attached) – Newberry 5. Executive director’s report (attached) – John Shertzer 6. Update on Strategic Planning Task Force (attached) – Hall 7. Update on EIJ Sponsorship Task Force (attached) – Nerissa Young 8. First Quarter FY20 report (attached) – Shertzer 9. Update on EIJ20 planning – Shertzer/Newberry 10. Committee reports: • Awards & Honors Committee (attached) – Sue Kopen Katcef • Membership Committee (attached) – Colin DeVries • FOI Committee (attached) – Paul Fletcher • Bylaws Committee (attached) – Bob Becker 11. Public comment Enter Executive Session 1 12. SPJ partnership agreements 13. Data management contract 14. -

Feeds That Matter: a Study of Bloglines Subscriptions

Feeds That Matter: A Study of Bloglines Subscriptions Akshay Java, Pranam Kolari, Tim Finin, Anupam Joshi, Tim Oates Department of Computer Science and Electrical Engineering University of Maryland, Baltimore County Baltimore, MD 21250, USA {aks1, kolari1, finin, joshi, oates}@umbc.edu Abstract As the Blogosphere continues to grow, finding good quality feeds is becoming increasingly difficult. In this paper we present an analysis of the feeds subscribed by a set of publicly listed Bloglines users. Using the subscription information, we describe techniques to induce an intuitive set of topics for feeds and blogs. These topic categories, and their associated feeds, are key to a number of blog-related applications, including the compilation of a list of feeds that matter for a given topic. The site FTM! (Feeds That Matter) was implemented to help users browse and subscribe to an automatically generated catalog of popular feeds for different [...] manually. Recent efforts in tackling these problems have resulted in Share your OPML, a site where you can upload an OPML feed to share it with other users. This is a good first step but the service still does not provide the capability of finding good feeds topically. An alternative is to search for blogs by querying blog search engines with generic keywords related to the topic. However, blog search engines present results based on the freshness. Query results are typically ranked by a combination of how well the blog post content matches the query and how recent it is. Measures of the blog’s authority, if they are used, are mostly based on the number of inlinks. -

What Blogs Cost American Business

WHAT BLOGS COST AMERICAN BUSINESS In 2005, Employees Will Waste 551,000 Years Reading Them October 24, 2005 QwikFIND ID: AAR05Y By Bradley Johnson LOS ANGELES (AdAge.com) -- Blog this: U.S. workers in 2005 will waste the equivalent of 551,000 years reading blogs. About 35 million workers -- one in four people in the labor force -- visit blogs and on average spend 3.5 hours, or 9%, of the work week engaged with them, according to Advertising Age’s analysis. Time spent in the office on non-work blogs this year will take up the equivalent of 2.3 million jobs. Forget lunch breaks -- blog readers essentially take a daily 40-minute blog break. [Caption: Currently, the time employees spend reading non-work blogs is the equivalent of 2.3 million jobs.] Bogged down in blogs While blogs are becoming an accepted part of the media sphere, and are increasingly being harnessed by marketers -- American Express last week paid a handful of bloggers to discuss small business, following other marketers like General Motors Corp. and Microsoft Corp. into the blogosphere -- they are proving to be competition for traditional media messages and are sapping employees’ time. Case in point: Gawker Media, blog home of Gawker (media), Wonkette (politics) and Fleshbot (porn). -

Infographic by Ben Fry; Data by Technorati There Are Upwards of 27 Million Blogs in the World. to Discover How They Relate to On

There are upwards of 27 million blogs in the world. To discover how they relate to one another, we’ve taken the most-linked-to 50 and mapped their connections. Each arrow represents a hypertext link that was made sometime in the past 90 days. Think of those links as votes in an endless global popularity poll. Many blogs vote for each other: “blogrolling.” Some top-50 sites don’t have any links from the others shown here, usually because they are big in Japan, China, or Europe—regions still new to the phenomenon. Key: tech, politics, gossip, other. [Infographic: link map of the 50 most-linked-to blogs, from 1. Boing Boing to 50. Andrew Sullivan.] 1. Boing Boing A herald from the 6. -

“The Duty of Comedy Is to Amuse Men by Correcting Them”: Analyzing the Value and Effectiveness of Using Comedy As an Informational Tool

“The Duty of Comedy is to Amuse Men by Correcting Them”: Analyzing the Value and Effectiveness of Using Comedy as an Informational Tool A Thesis Submitted to the Faculty of the Graduate School of Arts and Sciences of Georgetown University in partial fulfillment of the requirements for the degree of Master of Arts in Communication, Culture, and Technology By David Jacobs Garr, B.A. Washington, DC May 1, 2009 Copyright © 2009 by David Jacobs Garr All Rights Reserved “The Duty of Comedy is to Amuse Men by Correcting Them”: Analyzing the Value and Effectiveness of Using Comedy as an Informational Tool David Jacobs Garr, B.A. Thesis Advisor: Diana Owen, Ph.D. ABSTRACT Often in today’s culture, individuals seek information from comedy sources. From cable television programs to Internet sources, as well as interpersonal communication, the role and prominence of comedy content as a vehicle for news and information has been steadily growing throughout the past decade. Throughout my research I look into the communication areas of news—specifically political news—and education to determine the effectiveness and value of using comedy to convey information. Employing secondary analysis research, communication theory investigation, research in two elementary school classrooms, an online survey, and interviews with prominent members of communication industries, I look into questions of how and why humor is an effective method for conveying information. Among the research findings are that the power of the joke, as well as the attentive nature of comedy, contribute to the effectiveness of comedy as a vehicle of relevant news and information. -

All the World's a Blog | HuffPost

All the World’s a Blog By Marty Kaplan 03/23/2006 12:01 pm ET | Updated May 25, 2011 This week I moderated a panel with Kevin Drum, Kevin Roderick and the original Wonkette, Ana Marie Cox. The topic - Have bloggers replaced newspapers, and should we care? - elicited a unanimous yawn from the panelists; without big city dailies, they agreed, there would be no political blogosphere. One unexpected theme that emerged, though, got me wondering. It’s the idea that political blogs are a self-consciously theatrical space, where bloggers believe they must stylize themselves, exaggerate, play stock roles. Kind of like Kabuki, or the WWE. In this formulation, bloggers take public positions more extreme, less nuanced, easier to stereotype, than they would over beer with friends (you know, in “real” life). It’s as though there’s something about the Web, and the attention economy, and maybe polarized contemporary politics, that requires bloggers to slip on these costumes before they enter the fray. Online is onstage. It’s not that they’re inauthentic, or pretending to believe things they don’t; it’s that the medium itself elicits the kind of gestures and voice projection that play more to the balcony than to people you’d actually have a conversation with. Maybe the right analogy is to the difference between the banter of the commentariat in the green room, and their bloviation when the camera light is on. In any event, it’s kind of surprising (to me, anyway) how something that people can notoriously do in their pajamas can seem to require such ritualistic and mannered discourse. -

Newstex Blogs Available on Lexisnexis

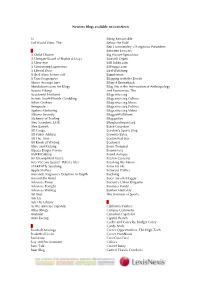

Newstex Blogs available on LexisNexis 3i Being Reasonable 3rd World View, The Below the Fold Ben Hammersley's Dangerous Precedent A Between Lawyers A Child Chosen Big Picture Speculator A Chequer-Board of Nights & Days Biofuels Digest A Clear eye BillHobbs.com A Consuming Experience Billtrippe.com A Liberal Dose Bird Watching A shel of my former self Bizinformer A True Progressive Blogging with the Devils Above Average Jane Blogs 4 Brownback Absolutearts.com Art Blogs Blog Sits at the Intersection of Anthropology Access Vikings and Economics, The Accidental Hedonist Blogcritics.org Action: South Florida Gambling Blogcritics.org Culture Adam Graham Blogcritics.org Music Aeroposte Blogcritics.org Politics Ageless Marketing Blogcritics.org Video Albanys Insanity BlogginWallStreet Alchemy of Trading Blogspotter Alec Saunders .LOG BluegrassReport.org Alex Barnett Boise Guardian All Cougs, Bordow's Sports Blog All Poker Addicts Brownie Bytes All The Time Boston Red Sox All Kinds of Writing Bostonist Alive and Kicking Brain Terminal Alpaca Burger Forum Brainwaves AMERICAblog Brand Autopsy An Unamplified Voice Brazen Careerist Ana Veciana Suarez' What a life! Breaking the Mirror aPARENTly Speaking Brew Ha Ha Apple Matters Broward Politics Armando Salguero's Dolphins in Depth Buckdog Around the Bend Bucs' Swash-Blogger Arkansas Times Burnett's Urban Etiquette Arkansas Tonight Business Pundit Arkansas Weblog Bunker Mentality Art Beat The Business of Sports Ask Us Ask The Editors C As the universe expands California Yankee Atlas Shrugs Campus Commons -

The Available Means of Imagination : Personal Narrative, Public Rhetoric, and Circulation

University of Louisville ThinkIR: The University of Louisville's Institutional Repository Electronic Theses and Dissertations 8-2016 The available means of imagination : personal narrative, public rhetoric, and circulation. Stephanie D. Weaver University of Louisville Follow this and additional works at: https://ir.library.louisville.edu/etd Part of the Rhetoric Commons Recommended Citation Weaver, Stephanie D., "The available means of imagination : personal narrative, public rhetoric, and circulation." (2016). Electronic Theses and Dissertations. Paper 2551. https://doi.org/10.18297/etd/2551 This Doctoral Dissertation is brought to you for free and open access by ThinkIR: The University of Louisville's Institutional Repository. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of ThinkIR: The University of Louisville's Institutional Repository. This title appears here courtesy of the author, who has retained all other copyrights. For more information, please contact [email protected]. THE AVAILABLE MEANS OF IMAGINATION: PERSONAL NARRATIVE, PUBLIC RHETORIC, AND CIRCULATION By Stephanie D. Weaver B.A., Middle Tennessee State University, 2009 M.A., Miami University, 2011 A Dissertation Submitted to the Faculty of the College of Arts and Sciences of the University of Louisville in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy in English/Rhetoric and Composition Department of English University of Louisville Louisville, Kentucky August 2016 Copyright 2016 by Stephanie D. Weaver All Rights Reserved THE AVAILABLE MEANS OF PERSUASION: PERSONAL NARRATIVE, PUBLIC RHETORIC, AND CIRCULATION By Stephanie D. Weaver B.A., Middle Tennessee State University, 2009 M.A., Miami University, 2011 A Dissertation Approved on July 18, 2016 By the following Dissertation Committee: Dissertation Director Dr.