Towards a Discipline for Agile Requirements

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Rugby - a Process Model for Continuous Software Engineering

Institut für Informatik der Technischen Universität München, Forschungs- und Lehreinheit I Angewandte Softwaretechnik Rugby - A Process Model for Continuous Software Engineering Stephan Tobias Krusche Complete reprint of the dissertation approved by the Fakultät für Informatik of the Technische Universität München for the award of the academic degree of Doktor der Naturwissenschaften (Dr. rer. nat.). Chair: Univ.-Prof. Dr. Helmut Seidl Examiners of the dissertation: 1. Univ.-Prof. Bernd Brügge, Ph.D. 2. Prof. Dr. Jürgen Börstler, Blekinge Institute of Technology, Karlskrona, Sweden The dissertation was submitted to the Technische Universität München on 28.01.2016 and accepted by the Fakultät für Informatik on 29.02.2016. Abstract Software is developed in increasingly dynamic environments. Organizations need the capability to deal with uncertainty and to react to unexpected changes in requirements and technologies. Agile methods already improve the flexibility towards changes and with the emergence of continuous delivery, regular feedback loops have become possible. The abilities to maintain high code quality through reviews, to regularly release software, and to collect and prioritize user feedback, are necessary for continuous software engineering. However, there exists no uniform process model that handles the increasing number of reviews, releases and feedback reports. In this dissertation, we describe Rugby, a process model for continuous software engineering that is based on a meta model, which treats development activities as parallel workflows and which allows tailoring, customization and extension. Rugby includes a change model and treats changes as events that activate workflows. It integrates review management, release management, and feedback management as workflows. As a consequence, Rugby handles the increasing number of reviews, releases and feedback and at the same time decreases their size and effort. -

Agile Testing Practices

Agile Testing Practices Megan S. Sumrell Director of Transformation Services Valtech Introductions About us… Now about you… Your name Your company Your role Your experience with Agile or Scrum? Personal Expectations Agenda Introductions Agile Overview Traditional QA Teams Traditional Automation Approaches Role of an Agile Tester Testing Activities Refine Acceptance Criteria TDD Manual / Exploratory Testing Defect Management Documentation Performance Testing Regression Testing Agenda Continued Test Automation on Agile Teams Testing on a Greenfield Project Testing on a Legacy Application Estimation Sessions Sprint Planning Meetings Retrospectives Infrastructure Skills and Titles Closing Agile Overview Agile Manifesto "We are uncovering better ways of developing software by doing it and helping others do it. Through this work we have come to value: Individuals and interactions over processes and tools Working software over comprehensive documentation Customer collaboration over contract negotiation Responding to change over following a plan That is, while there is value in the items on the right, we value the items on the left more." Scrum Terms and Definitions User Story: high level requirements Product Backlog: list of prioritized user stories Sprint : one cycle or iteration (usually 2 or 4 weeks in length) Daily Stand-up: 15 minute meeting every day to review status Scrum Master: owns the Scrum process and removes impediments Product Owner: focused on ROI and owns priorities on the backlog Pigs and Chickens Traditional QA Teams How are you organized? When do you get involved in the project? What does your “test phase” look like? What testing challenges do you have? Traditional Test Automation Automation Challenges Cost of tools Hard to learn Can’t find time Maintenance UI dependent Only a few people can run them Traditional Test Pyramid UNIT TESTS Business Rules GUI TESTS Will these strategies work in an Agile environment? Food for Thought……. -

User Stories.Book

User Stories Applied for Agile Software Development Mike Cohn Boston • San Francisco • New York • Toronto • Montreal London • Munich • Paris • Madrid Capetown • Sydney • Tokyo • Singapore • Mexico City Chapter 2 Writing Stories In this chapter we turn our attention to writing the stories. To create good stories we focus on six attributes. A good story is: • Independent • Negotiable • Valuable to users or customers • Estimatable • Small • Testable Bill Wake, author of Extreme Programming Explored and Refactoring Workbook, has suggested the acronym INVEST for these six attributes (Wake 2003a). Independent As much as possible, care should be taken to avoid introducing dependencies between stories. Dependencies between stories lead to prioritization and planning problems. For example, suppose the customer has selected as high priority a story that is dependent on a story that is low priority. Dependencies between stories can also make estimation much harder than it needs to be. For example, suppose we are working on the BigMoneyJobs website and need to write stories for how companies can pay for the job openings they post to our site. We could write these stories: 1. A company can pay for a job posting with a Visa card. 2. A company can pay for a job posting with a MasterCard. 3. A company can pay for a job posting with an American Express card. Suppose the developers estimate that it will take three days to support the first credit card type (regardless of which it is) and then one day each for the second and third. -

User-Stories-Applied-Mike-Cohn.Pdf

User Stories Applied The Addison-Wesley Signature Series The Addison-Wesley Signature Series provides readers with practical and authoritative information on the latest trends in modern technology for computer professionals. The series is based on one simple premise: great books come from great authors. Books in the series are personally chosen by expert advisors, world-class authors in their own right. These experts are proud to put their signatures on the covers, and their signatures ensure that these thought leaders have worked closely with authors to define topic coverage, book scope, critical content, and overall uniqueness. The expert signatures also symbolize a promise to our readers: you are reading a future classic. The Addison-Wesley Signature Series Signers: Kent Beck and Martin Fowler Kent Beck has pioneered people-oriented technologies like JUnit, Extreme Programming, and patterns for software development. Kent is interested in helping teams do well by doing good: finding a style of software development that simultaneously satisfies economic, aesthetic, emotional, and practical constraints. His books focus on touching the lives of the creators and users of software. Martin Fowler has been a pioneer of object technology in enterprise applications. His central concern is how to design software well. He focuses on getting to the heart of how to build enterprise software that will last well into the future. He is interested in looking behind the specifics of technologies to the patterns, practices, and principles that last for many years; these books should be usable a decade from now. -

Selecting a Development Process: Choosing Among the Leading Alternatives Mike Cohn Mountain Goat Software [email protected]

Selecting a Development Process: Choosing Among the Leading Alternatives Mike Cohn Mountain Goat Software [email protected] Copyright Mountain Goat Software, LLC Mike Cohn - background Today’s agenda Considerations Team Software Process Scrum Extreme Programming OpenUP/Basic Rational Unified Process Ceremony • The amount of formalism in a process • Documentation, method weight, reviews Few documents Many documents Few steps Formal steps Cycles Sequential • Number and length of iterations Few documents Many documents Few steps Formal steps Many short iterations (5 days) Placing the processes Sequential Few documents Many documents Few steps Formal steps Many short iterations (5 days) Team Software Process (TSP) • Created by Watts Humphrey • Of Software Engineering Institute and Capability Maturity Model (CMM) • Builds on his Personal Software Process • High discipline, highly defined • A “cyclic development strategy” • Another way of saying “iterative and incremental” Goals of the TSP 1. Build on the Personal Software Process 2. Develop products in cycles 3. Establish standard measures for quality and performance 4. Provide precise measures -

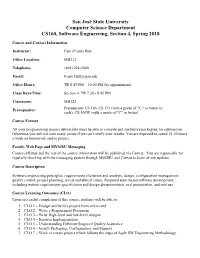

San José State University Computer Science Department CS160, Software Engineering, Section 4, Spring 2018

San José State University Computer Science Department CS160, Software Engineering, Section 4, Spring 2018 Course and Contact Information Instructor: Fain (Frank) Butt Office Location: MH212 Telephone: (408) 924-5060 Email: [email protected] Office Hours: TR 8:45 PM – 10:00 PM (by appointment) Class Days/Time: Section 4: TR 7:30 - 8:45 PM Classroom: MH222 Prerequisites: CS 146, CS 151 (with a grade of "C-" or better in each); CS 100W (with a grade of "C" or better) Course Format All your programming project deliverables must compile and run before packaging for submission. Otherwise you will not earn many points if we can’t verify your results. You are expected to spend 15-20 hours a week on homework and/or project. Faculty Web Page and MYSJSU Messaging Course syllabus and the rest of the course information will be published via Canvas. You are responsible for regularly checking with the messaging system through MySJSU and Canvas to learn of any updates. Course Description Software engineering principles, requirements elicitation and analysis, design, configuration management, quality control, project planning, social and ethical issues. Required team-based software development, including written requirements specification and design documentation, oral presentation, and tool use. Course Learning Outcomes (CLO) Upon successful completion of this course, students will be able to: 1. CLO 1 – Design and build a project from end to end 2. CLO 2 – Write a Requirement Document 3. CLO 3 – Write High-level and low-level designs 4. CLO 4 – Iterative Implementation 5. CLO 5 – Understanding Different Stages of Quality Assurance 6. -

Best Agile Articles of 2017

Best Agile Articles of 2017 Editors: Michael de la Maza, CEC & Cherie Silas, CEC Copyright ©2018 by Michael de la Maza All rights reserved. Printed in the United States of America. Cover design by Christopher Kyle Wilson The text of this book is set in Warnock Pro & Myriad Pro Book layout by THDesign, Inc. First Edition: November 2018 Table of Contents Foreword; Pete Behrens: Lean Startup has Changed Nothing!; Sonja Blignaut: If you want to innovate, don’t say so; Melissa Boggs: At the Intersection of Culture & Strategy; Zach Bonaker: Scrum Guide Sliders; Braz Brandt: Agile in Highly Regulated Environments; Maxime Castera: What Kids Taught Me About Being Agile -

To View Or Download This Issue PDF File, 670 K

METHODS & TOOLS Global knowledge source for software development professionals ISSN 1661-402X Fall 2005 (Volume 13 - number 3) Man-Machine Interface The reduction of the transformation activities between man thoughts and executable software has been the quest of the software development world for a very long time. Behind this objective is the fact that the translation process to executable instructions is where distortions and errors are created. Among the solutions adopted to try to solve this problem, we can mention the approach that wants to express computer instructions in syntax as close as possible to "natural language" and the efforts to transform specifications automatically in executable code. With the natural language approach, you meet quickly the barrier of giving a clear meaning to natural language. If you have tried to build a general interpreter, you have seen how difficult it is to manage the input of natural language. In a similar approach, attempts have been made to create programming languages with "verbose" syntax so that they could be understood easily by non-programmers. The "automatic transformation" road is close to the previous approach but recognise that a special language is needed to express requirements. Its difficulties are the creation of a specification language that can be understood by the end-users and the detail of specifications needed before code generation. Maintaining different levels of abstraction is not easy and you could simply end by writing your code in a high level language. Both solutions generate even more complexity if you try to implement them so that they could be applicable to all problem domains. -

Release Planning Meeting

John Blanco Agile Transformation Consultant The Eliassen Group Playing the Pointing Game “Before you commission a painter to decorate your home or a mechanic to fix your car, you get an estimate from them, right? You need to know how much it’s likely to cost and how long it might take. It’s just common sense.” - David Morris, Estimating on Agile Projects: What’s the story, what’s the point? Agenda Old vs. New Where we came from and why we need to change Why Points? Why Fibonacci? Understanding basic units of measure The Art of Relative Sizing Some Ceremonies around pointing SAFe: Normalized Pointing Why Time matters Complexity Clusters Helping the team thru the transition Balancing Buffers, Invisible Load Q&A Where we came from and why we need to change BUSINESS Products VALUE Customer Delight Services Self Worth Accountability Cadence Quality Self-governing Teams Velocity Reusability Goal Oriented Metrics TDD/ATDD/CI Clarity Predictability DevOps Synchronization PEOPLE PROCESS TECHNOLOGY culture engineering Scrum XP Services Roles Kanban Platforms Rituals Lean Startup Business Applications Responsibilities SAFe Development/Support Individuals and interactions over processes and tools Working software over comprehensive documentation Customer collaboration over contract negotiation AGILE Responding to change over following a plan principles Foundation and Philosophy structure Where we came from and why we need to change we started with this… Front end planning Sizing using task duration Sizing done by the elite Uncertainty vs. Time (Boehm) …and we forgot about this People are fickle – ideation & creativity can not be frozen Requirements harden as the product evolves Where we came from and why we need to change we started with this… Front end planning Sizing using task duration Sizing done by the elite Uncertainty vs. -

The 7Th International Conference on Extreme Programming and Agile

ESPOO 2006 VTT SYMPOSIUM 241 This proceedings is a collection of all the tutorials, workshops, activities and keynote speeches of the 7th International Conference on eXtreme Programming and Agile Processes in Software Engineering (XP 2006) held in June 17–22, 2006, Oulu, Finland. Outi Salo, Pekka Abrahamsson & Päivi Järing (eds.) The 7th International Conference on eXtreme Programming and Agile Processes in Software Engineering Tutorials, Workshops, Activities, and Keynote Speeches This publication is available from VTT, P.O. Box 1000, FI-02044 VTT, Finland Phone internat. +358 20 722 4404 Fax +358 20 722 4374 ISBN 951–38–6305–0 (soft back ed.) ISBN 951–38–6306–9 (URL: http://www.vtt.fi/inf/pdf/) ISSN 0357–9387 (soft back ed.) ISSN 1455–0873 (URL: http://www.vtt.fi/inf/pdf/) Keywords: software engineering, eXtreme programming, agile processes, adaptive processes, agile development, software development, XP projects, testing Oulu, Finland, June 17–22, 2006 Edited by Outi Salo, Pekka Abrahamsson & Päivi Jaring VTT Technical Research Centre of Finland Organised by VTT Technical Research Centre of Finland University of Oulu ISBN 951–38–6306–9 (URL: http://www.vtt.fi/publications/index.jsp) ISSN 1455–0873 (URL: http://www.vtt.fi/publications/index.jsp) Copyright © VTT Technical Research Centre of Finland 2006 JULKAISIJA – UTGIVARE – PUBLISHER VTT, Vuorimiehentie 3, PL 1000, 02044 VTT puh. -

Impact of Unified User-Story-Based Modeling on Agile Methods: Aspects on Requirements, Design and Life Cycle Management by Samedi Heng

Impact of Unified User-Story-Based Modeling on Agile Methods: Aspects on Requirements, Design and Life Cycle Management by Samedi Heng A thesis submitted in fulfillment of the requirements for the degree of Doctor in Economics and Management Sciences of the Université catholique de Louvain Examination Committee: Prof. Manuel Kolp (UCLouvain), Advisor Prof. Yves Wautelet (KULeuven), Co-Advisor Prof. Jean Vanderdonckt (UCLouvain), Examiner Prof. Isabelle Mirbel (Université Nice Sophia Antipolis), Examiner Prof. Vincent Englebert (UNamur), Reader Prof. Per Agrell (UCLouvain), President of the jury February 2017 To my parents, for your hard work and sacrifices to support our family and, more importantly, your vision that only better education can improve our standards of living to increased enjoyment and happiness. Acknowledgements As long as I remember, writing a PhD thesis has always been a dream. It has been a unique experience comparable to no others I have had before. It took me six years to finally made it to the end. This would not have been possible without the precious help and encouragements from the people I thank hereafter. First, I would like to express my sincere gratitude to my supervisors Profs. Manuel Kolp and Yves Wautelet. Yves once told me that the path to the PhD is made of ups and downs; downs can be the periods when most of the improvements are there to put yourself into question which is, by nature, the essence of any scientific work. My personal path has also been made of these with a down peak in 2013. Both of you helping me back on my feet in a hard but fair manner made of interventions and coaching but also encouragements, advices, and amusement. -

Analysis of Requirements Incompleteness Using Metamodel Specification

Analysis of requirements incompleteness using metamodel specification Ao Li University of Tampere School of Information Sciences Computer Science / Software Development M.Sc. thesis Supervisor: Zheying Zhang June 2015 Ao Li: Analysis of requirements incompleteness using metamodel M.Sc. thesis, 52 pages and 3 index and appendix pages Abstract. Incompleteness of requirements has been treated as a huge challenge in software development projects. Since it is hard to obtain all required information before software design and implementation starts, the software modeling process may start with an incomplete requirements specification. In order to help analyzing the incompleteness in requirements, I propose a metamodel approach for detecting the missing requirements that are needed for constructing conceptual models for a software system, and implement it in MetaEdit+. The detected missing information in a conceptual model is reported in natural language, which is easy to understand. Furthermore, the conceptual modelers can identify the potential problems indicated by the report to analyze and update the model. The contribution of my thesis is twofold, i.e. analyzing the link between business rules and the ER models, and implementing a method to automatically detect and show the incompleteness in ER models. Keywords: Requirements incompleteness, business rules, metamodel, MetaEdit+. Contents Introduction