The Influence of Open Access on Advanced Placement Achievement

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Relationship of PSAT/NMSQT Scores and AP Examination Grades

Research Notes, Office of Research and Development, RN-02, November 1997. The Relationship of PSAT/NMSQT Scores and AP® Examination Grades

The PSAT/NMSQT, which measures developed verbal and quantitative reasoning, as well as writing skills generally associated with academic achievement in college, is administered each October to nearly two million students, the vast majority of whom are high school juniors and sophomores. PSAT/NMSQT information has been used by high school counselors to assist in advising students in college planning, high school course selection, and for scholarship awards. Information from the PSAT/NMSQT can also be very useful for high schools in identifying additional students who may be successful in Advanced Placement courses, and assisting schools in determining whether to offer additional Advanced Placement courses.

Recent analyses have shown that student performance on the PSAT/NMSQT can be useful in identifying additional students who may be successful in AP courses. PSAT/NMSQT scores can identify students who may not have been initially considered for an AP course through teacher or self-nomination or other local procedures. For many AP courses, students with moderate scores on the PSAT/NMSQT have a high probability of success on the examinations. For example, a majority of students with PSAT/NMSQT verbal scores of 46–50 received grades of 3 or above on nearly all of the 29 AP Examinations studied, while over one-third of students with scores of 41–45 achieved grades of 3 or above on five AP Examinations. There are substantial variations across AP subjects that must be considered.

Curriculum Overview Physics/Pre-AP 2018-2019 1St Nine Weeks

Curriculum Overview Physics/Pre-AP 2018-2019 1st Nine Weeks

RESOURCES: Essential Physics (Ergopedia, online book); Physics Classroom http://www.physicsclassroom.com/; PHET Simulations https://phet.colorado.edu/

ONGOING TEKS: 1A, 1B, 2A, 2B, 2C, 2D, 2F, 2G, 2H, 2I, 2J, 3E

1) SAFETY TEKS 1A, 1B

Vocabulary: Fume hood, fire blanket, fire extinguisher, goggle sanitizer, eye wash, safety shower, impact goggles, chemical safety goggles, fire exit, electrical safety cut off, apron, broken glass container, disposal alert, biological hazard, open flame alert, thermal safety, sharp object safety, fume safety, electrical safety, plant safety, animal safety, radioactive safety, clothing protection safety, fire safety, explosion safety, eye safety, poison safety, chemical safety

Key Concepts: The student will be able to determine if a situation in the physics lab is a safe practice and what appropriate safety equipment and safety warning signs may be needed in a physics lab. The student will be able to determine the proper disposal or recycling of materials in the physics lab.

Essential Questions: 1. How are safe practices in school, home or job applied? 2. What are the consequences for not using safety equipment or following safe practices?

2) SCIENCE OF PHYSICS: Glossary, Pages 35, 39 TEKS 2B, 2C

Vocabulary: Matter, energy, hypothesis, theory, objectivity, reproducibility, experiment, qualitative, quantitative, engineering, technology, science, pseudo-science, non-science

Key Concepts: The student will know that scientific hypotheses are tentative and testable statements that must be capable of being supported or not supported by observational evidence. The student will know that scientific theories are based on natural and physical phenomena and are capable of being tested by multiple independent researchers.

AP Physics 2 Student Sample Responses To

2017 AP Physics 2: Algebra-Based Sample Student Responses and Scoring Commentary

Inside: Free Response Question 3; Scoring Guideline; Student Samples; Scoring Commentary

© 2017 The College Board. College Board, Advanced Placement Program, AP, AP Central, and the acorn logo are registered trademarks of the College Board. Visit the College Board on the Web: www.collegeboard.org. AP Central is the official online home for the AP Program: apcentral.collegeboard.org

AP® PHYSICS 2017 SCORING GUIDELINES

General Notes About 2017 AP Physics Scoring Guidelines

1. The solutions contain the most common method of solving the free-response questions and the allocation of points for this solution. Some also contain a common alternate solution. Other methods of solution also receive appropriate credit for correct work.
2. The requirements that have been established for the paragraph length response in Physics 1 and Physics 2 can be found on AP Central at https://secure-media.collegeboard.org/digitalServices/pdf/ap/paragraph-length-response.pdf.
3. Generally, double penalty for errors is avoided. For example, if an incorrect answer to part (a) is correctly substituted into an otherwise correct solution to part (b), full credit will usually be awarded. One exception to this may be cases when the numerical answer to a later part should be easily recognized as wrong, e.g., a speed faster than the speed of light in vacuum.
4. Implicit statements of concepts normally receive credit. For example, if use of the equation expressing a particular concept is worth one point, and a student’s solution embeds the application of that equation to the problem in other work, the point is still awarded.

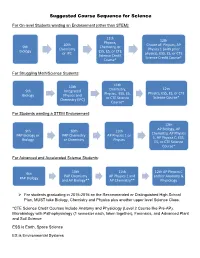

Suggested Course Sequence for Science

Suggested Course Sequence for Science

For On-level Students wanting an Endorsement (other than STEM):
9th: Biology. 10th: Chemistry or IPC. 11th: Physics, Chemistry, or ESS, ES, or CTE Science Credit Course*. 12th: Choice of: Physics, AP Physics 1 (with prior physics), ESS, ES, or CTE Science Credit Course*.

For Struggling Math/Science Students:
9th: Biology. 10th: Integrated Physics and Chemistry (IPC). 11th: Chemistry, Physics, ESS, ES, or CTE Science Course*. 12th: Physics, ESS, ES, or CTE Science Course*.

For Students wanting a STEM Endorsement:
9th: PAP Biology or Biology. 10th: PAP Chemistry or Chemistry. 11th: AP Physics 1 or Physics. 12th: AP Biology, AP Chemistry, AP Physics 1, AP Physics C, ESS, ES, or CTE Science Course*.

For Advanced and Accelerated Science Students:
9th: PAP Biology. 10th: PAP Chemistry and AP Biology**. 11th: AP Physics 1 and AP Chemistry**. 12th: AP Physics C and/or Anatomy & Physiology.

For students graduating in 2015-2016 on the Recommended or Distinguished High School Plan, MUST take Biology, Chemistry and Physics plus another upper level Science Class.

*CTE Science Credit Courses include Anatomy and Physiology (Level 2 Course like Pre-AP), Microbiology with Pathophysiology (1 semester each, taken together), Forensics, and Advanced Plant and Soil Science. ESS is Earth, Space Science. ES is Environmental Systems.

**Double enrollment in science classes can begin at any point in the sequence for advanced students.

A note about mathematics and science: Many upper division science courses require varying degrees of use of mathematics. For students seeking to get the most out of their science courses, use the following suggested pre- and co-requisites.

Ap Physics(B) Syllabus

AP PHYSICS (B) SYLLABUS

Text: Physics, Sixth Edition by Cutnell and Johnson, ISBN 0471-15183-1, Wiley and Sons, 2004

COURSE OVERVIEW

Advanced Placement Physics is an intensive and rigorous college-level introductory course in algebra-based physics. This course provides an opportunity for high school students to pursue and receive credit for college-level course work undertaken while in high school. This course will be covered in two semesters. The emphasis in the course is to utilize guided inquiry and student-centered learning to develop conceptual understanding and critical thinking skills so that the students may be able to do the following (abbreviated from the 2005-2006 College Board AP Physics Course Description): 1) to reason, to think critically, and to solve particular physical phenomenon or problems; 2) to use the scientific method to analyze a physical situation or problem; 3) to apply mathematical tools as an aid to understanding those relationships; 4) to perform experiments and to interpret the results of observations and communicate those results while making an assessment of experimental uncertainties. Laboratory work will be covered as an integral part of this course. After the AP exam, the class will culminate with an underwater ROV (remote operated vehicle) project.

GRADING

40 % Tests and quizzes
30 % Lab activities and lab reports
30 % Homework

COURSE OUTLINE

The following course outline is adapted from the Physics B outline as presented in the 2006 Course Description for AP Physics by the College Board. The outline includes a time line and chapters in our textbook.

SEMESTER 1

I. Newtonian Mechanics
A. Kinematics including vectors, vector algebra, components of vectors (Chapter 1, 2, 3 in 4 weeks)
1.

AP Physics B Review Ch 23: Optics 1. How Does Do Compare to Di for A

AP Physics B Review Ch 23: Optics

1. How does do compare to di for a plane mirror?
2. What type of image is formed in a plane mirror?
3. If a light ray is incident on a plane mirror at 24°, what is the reflected ray? _____ What is the angle between the incident ray and the reflected ray? ____
4. A concave mirror with radius of curvature 40 cm creates a real image 60 cm from the mirror. What is the object distance?
5. A concave mirror has a radius of curvature of 40 cm. What distance is the focal length from the mirror?
6. Draw a ray diagram for a concave mirror with do > 2f using 3 rays in the space below:
7. What does negative mean for di?
8. What does negative mean for m?
9. A convex mirror has a focal length of -15 cm. An object is placed 10 cm in front of the mirror on the principal axis. Where is the image located?
10. What is the speed of light through a material with an index of refraction, n = 1.33?
11. If light enters a substance from air at an angle of 38°, and continues at an angle of 25°, what is the index of refraction of the material?
12. What is the critical angle, θc, for any material? (definition and equation)
13. What is the critical angle for light traveling from glass (n = 1.52) into air (n = 1.003)?
14. The critical angle for an optic fiber is 23°. What happens to all light rays with an angle of incidence larger than this?
15.
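The numeric review questions above rely on three standard relations: the mirror equation 1/f = 1/do + 1/di (with f = R/2 for a spherical mirror), the definition n = c/v, and Snell's law n1 sin θ1 = n2 sin θ2, from which the critical angle follows as sin θc = n2/n1. The following is a minimal Python sketch of those relations, not part of the original worksheet. The function names are hypothetical, the sign convention (real distances positive, convex focal length negative) is assumed, and c is rounded to 3.0e8 m/s.

```python
import math

C = 3.0e8  # speed of light in vacuum in m/s (rounded; an assumption)


def focal_length(radius_of_curvature):
    """Focal length of a spherical mirror: f = R / 2."""
    return radius_of_curvature / 2.0


def object_distance(f, d_i):
    """Solve the mirror equation 1/f = 1/d_o + 1/d_i for d_o."""
    return 1.0 / (1.0 / f - 1.0 / d_i)


def image_distance(f, d_o):
    """Solve the mirror equation 1/f = 1/d_o + 1/d_i for d_i."""
    return 1.0 / (1.0 / f - 1.0 / d_o)


def speed_in_medium(n):
    """Speed of light in a medium with index of refraction n: v = c / n."""
    return C / n


def refractive_index(theta_in_deg, theta_out_deg, n_in=1.0):
    """Snell's law solved for the second medium's index.

    n_in defaults to 1.0, an assumed value for air.
    """
    return n_in * math.sin(math.radians(theta_in_deg)) / math.sin(
        math.radians(theta_out_deg)
    )


def critical_angle_deg(n_from, n_to):
    """Critical angle in degrees for light going from n_from into n_to.

    Only defined when n_from > n_to.
    """
    return math.degrees(math.asin(n_to / n_from))


if __name__ == "__main__":
    f = focal_length(40.0)                    # question 5: R = 40 cm
    print(object_distance(f, 60.0))           # question 4: about 30 cm
    print(image_distance(-15.0, 10.0))        # question 9: about -6 cm (virtual)
    print(speed_in_medium(1.33))              # question 10: about 2.26e8 m/s
    print(refractive_index(38.0, 25.0))       # question 11: about 1.46
    print(critical_angle_deg(1.52, 1.003))    # question 13: about 41.3 degrees
```

A negative image distance in this convention indicates a virtual image behind the mirror, which is the point of question 7.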

Science Courses

Science Courses

| CREDIT | COURSE | COURSE TITLE | PREREQUISITE | OFFERED |
| --- | --- | --- | --- | --- |
| .5 | 3000 | Integrated Physical Science, S1 | None | 9 |
| .5 | 3002 | Integrated Physical Science, S2 | | |
| .5 | 3004 | Integrated Physical Science Honors, S1 | A or B in 8th grade Algebra and A or B in 8th grade Earth Science | 9 |
| .5 | 3006 | Integrated Physical Science Honors, S2 | | |
| .5 | 3008 | Principles of Chemistry, S1 | Successful completion of Physical Science | 10-12 |
| .5 | 3010 | Principles of Chemistry, S2 | | |
| .5 | 3012 | Chemistry, S1 | C or better in Physical Science or Physical Science Honors | 10-12 |
| .5 | 3014 | Chemistry, S2 | | |
| .5 | 3016 | Chemistry Honors, S1 | A or B in Physical Science Honors, A in Physical Science or Physics 1. Concurrently enrolled in Higher Algebra or beyond. | 10-12 |
| .5 | 3018 | Chemistry Honors, S2 | | |
| .5 | T7202S | Pre-AP Chemistry, summer, Tonka Online | Students should be registered for AP Chemistry in the fall; optional course for preceding summer, elective credit | 9-12 |
| .5 | AP304 | AP Chemistry, S1 | A or B in Physical Science Honors with teacher recommendation and A or B in Higher Algebra | 10-12 |
| .5 | AP306 | AP Chemistry, S2 | | |
| .5 | T3100 | AP Environmental Science, part 1, Tonka Online. Select Term: T3100S / T3102F. This course may also be taken through VANTAGE course #V300 (grades 11-12) | Chemistry, Physical Science. | 11-12 |
| .5 | T3102 | AP Environmental Science, part 2, Tonka Online. Select Term: T3102S / T3102F / T3102W | | |
| .5 | T3023 | Fundamentals of Neuroscience, Tonka Online | None | 9-12 |
| .5 | 3020 | Human Anatomy & Physiology 1 | Physical Science or Physical Science Honors, Chemistry | 10-12 |
| .5 | T3020 | Human Anatomy & Physiology 1, Tonka Online. This course may also be taken through VANTAGE #V200 (grades 11-12) | | |
| .5 | 3022 | Human Anatomy & Physiology 2 | Human Anatomy and Physiology 1 or VANTAGE Health Sciences Human Anatomy, Physical Science, and Chemistry | 11-12 |
| .5 | 3024 | Earth & Space Systems | Physical Science or Physical Science Honors | 10-12 |
| .5 | 3026 | Astronomy | None | 10-12 |

Applying AP & IB Courses to the SAT Subject Tests

Applying AP & IB Courses to the SAT Subject Tests

How much strategy and content can students take away from AP & IB courses to utilize on the SAT Subject Tests?

www.arborbridge.com | [email protected]

What’s in this book?

Oftentimes students have already built up an arsenal of strategy and content to tackle the SAT Subject Tests from AP or IB courses in school. In this book, we break down nine Subject Tests and their corresponding AP and IB (SL and HL) courses by four dimensions: Strategy Covered, Strategy Gap, Content Covered and Content Gap. Additionally, we provided students with the specific topics in each Subject where Strategy and Content are inconsistent to ensure more targeted studying. We’ll cover the following Subject Tests:

• Biology (Ecological)
• Biology (Molecular)
• Chemistry
• Literature
• Math Level 1
• Math Level 2
• Physics
• World History
• U.S. History

Plus we have added... Subject Test Quick Guides

Your go-to guides for each of the SAT Subject Tests. Get to know exam format, content, question types, and key test-taking strategies from ArborBridge test prep experts! Flip to the next page to check them out.

Biology Subject Test Quick Guide

Format
• Students can choose to take either the Ecological (E) or Molecular (M) section. Pick and master one!
• 80 questions
• Time: 60 minutes (1 hour)
• Question type: Multiple choice

Scoring
• Raw score converted to scaled score from 200–800
• Average score (Biology E): 618
• Average score (Biology M): 650

Content

60 of the 80 questions are “Common Core” Biology questions.

Course Description for AP Physics C at BCC Aims/Objectives of The

Course Description for AP Physics C at BCC

Aims/Objectives of the Course

The goal of AP Physics C is to replicate a two-semester, first-year college physics course series for science or engineering majors. In many colleges and universities, science and engineering majors must take a “Physics 101” and “Physics 102” course. The high school AP Physics C course covers the same content, with the same difficulty, while emphasizing laboratory experiments. The AP Physics C course builds upon the introductory, algebra-based Honors Physics course. Students in AP Physics C will learn how to add calculus into their physical models, and to analyze more complex systems than what is encountered in Honors Physics. Because the AP Physics C course moves quickly, students will need to be familiar with most of the concepts at an introductory level before the course begins. Therefore, a prerequisite for taking AP Physics C is the completion of Honors Physics. The AP Physics C course at BCC currently uses the Young & Freedman University Physics textbook, which is also one of the most commonly used physics textbooks at the college level.

Course Content

The first part of the course covers mechanics, which includes velocity, acceleration, and the forces that cause acceleration. Nonconstant forces are analyzed, as well as air resistance. Other topics in mechanics include energy, momentum, gravitation, oscillatory motion, and rotational motion. The second part of the course covers electricity & magnetism. Topics covered include electric forces, electric fields, Gauss’s Law, magnetic forces, magnetic fields, induced currents, voltage, current, series/parallel circuits, capacitors, dielectrics, resistors, transformers, and inductors.

AP Physics C Course Syllabus This Course Prepares Students for the AP

AP Physics C Course Syllabus

This course prepares students for the AP® Exams, Physics C: Mechanics and Physics C: Electricity & Magnetism. The Mechanics portion will provide instruction in each of the following six content areas assessed on the AP® Exam: Kinematics; Newton’s laws of motion; work, energy, and power; systems of particles and linear momentum; circular motion and rotation; and oscillations and gravitation. The Electricity & Magnetism portion will provide instruction in each of the following five content areas assessed on the AP® Exam: Electrostatics; conductors, capacitors, and dielectrics; electric circuits; magnetic fields; and electromagnetism. Additional topics of modern physics are also included in the course curriculum. The course will utilize guided inquiry and student-centered learning to foster the development of critical-thinking skills. It will use introductory differential and integral calculus throughout the course. This course meets for three 44-minute periods per week, plus two 90-minute lab periods.

Prerequisites: Students who have successfully completed Honors Physics with a “B” minimum and have already completed or are taking concurrently AP® Calculus or Calculus.

Text: Fundamentals of Physics, Extended, 9th ed., by Halliday, Resnick, and Walker, 2010, John Wiley & Sons. This is a standard text for calculus-based physics teaching at both the AP® and university levels. The text will be supplemented by additional material when appropriate.

Teaching Strategies: Students will be assigned all of the end-of-chapter conceptual questions for the chapters covered. These will be followed by student-centered roundtable discussions to assure understanding by all. Students will also proceed, in groups and individually, to work through an intense selection of the end-of-chapter problems.

AP Physics B Or C

Physics: Which course is right for you?

Of course the question is “which one”, not “whether?”

• Bob Foley • Nick Swanson • [email protected] • [email protected]

Junior Physics Options: Which Physics Course?

AP Physics I
• suited for those interested in majoring in a STEM in college
• equivalent to a College Physics course
• weighted, +1
• college credit is usually given for scoring > 4/5 on the AP test

Physics I
• seen as a survey course of physics topics
• two projects: catapult and Rube Goldberg

Junior Physics Topics: Physics I and AP Physics 1
• Translational Kinematics
• Newtonian Mechanics
• Gravity and Planetary Motion
• Rotational Motion
• Linear Momentum and Impulse
• Work, Power, and Energy
• Oscillations and Waves
• Electrostatics
• Electrical Current and Simple Circuits
• Other topics as time permits

AP topics much deeper & faster-paced than regular physics

Junior Physics Expectations

AP Physics I
• Math corequisite of precalculus
• 5 hours of work each week
• pacing is very fast

Physics I
• math corequisite of Algebra II
• ~ 30 min. of homework each block
• pacing is slower
• Regular Physics also does 2 projects per year: Catapult & Rube Goldberg

Senior Physics Options
• AP Physics 2 - overview of a wide spectrum of physics topics using algebra/trigonometry. Pre-calculus is the minimum pre/co-requisite
• AP Physics C - an in-depth, rigorous study of mechanics using Calculus. Calculus AB is the minimum pre/co-requisite
• Both require Physics 1 (Regular or AP) as a prerequisite
• Recommended 11th Grade Math & Physics

AP Score Credit Guide

AP Score Credit Guide

Unless otherwise listed, 100-Level Free Electives (FREE 1XX) are always granted at the value of 3.0 semester

| AP Courses | Grade Earned | Course Conversion |
| --- | --- | --- |
| **AP Capstone** | | |
| AP Research | N/A | No credit |
| AP Seminar | N/A | No credit |
| **Arts** | | |
| AP Art History | 4, 5 | FREE 1XX |
| AP Music Theory | 4, 5 | FREE 1XX |
| AP Studio Art: 2-D Design | 4, 5 | FREE 1XX |
| AP Studio Art: 3-D Design | 4, 5 | FREE 1XX |
| AP Studio Art: Drawing | 4, 5 | FREE 1XX |
| **English** | | |
| AP English Lang. & Comp. | 3, 4, 5 | FREE 1XX |
| AP English Lit. & Comp. | 3, 4, 5 | FREE 1XX |
| **History & Social Science** | | |
| AP Comparative Gov’t & Politics | 4, 5 | FREE 1XX |
| AP European History | 4, 5 | FREE 1XX |
| AP Human Geography | 4, 5 | FREE 1XX |
| AP Macroeconomics | 4, 5 | FREE 1XX - EBGN 201 if credit also received for AP Micro. |
| AP Microeconomics | 4, 5 | FREE 1XX - EBGN 201 if credit also received for AP Macro. |
| AP Psychology | 3, 4, 5 | FREE 1XX |
| AP U.S. Gov. & Politics | 3, 4, 5 | FREE 1XX |
| AP United States History | 3, 4, 5 | FREE 1XX |
| AP World History | 3, 4, 5 | FREE 1XX |
| **Math & Computer Science** | | |
| AP Calculus AB | 3 | FREE 1XX |
| AP Calculus AB | 4, 5 | MATH 111 |
| AP Calculus BC | 4 | MATH 111, Only if AB subscore of also a 4 |
| AP Calculus BC | 5 | MATH 111 and MATH 112, MATH 112 upon successful completion of MATH 113 |
| AP Computer Science A | 4, 5 | CSCI 261 |
| AP Comp. Sci. Principles | 4, 5 | CSCI 101 |
| AP Statistics | 3, 4, 5 | FREE 1XX |
| **Sciences** | | |
| AP Biology | 3 | FREE 1XX |
| AP Biology | 4 | Challenge Exam, Biology 1, If student does not pass the Challenge Exam, FREE 1XX for 3 credit hours will be awarded |
| AP Biology | 5 | CBEN 110, CBEN 120 |
| AP Chemistry | 3 | FREE 1XX |
| AP Chemistry | 4 | CHGN 121 |
| AP Chemistry | 5 | CHGN 121, CHGN 122 |
| AP Environmental Science | 4, 5 | FREE 1XX |
| AP Physics C: Electricity & Magnetism | 4, 5 | Challenge Exam, PHGN 200, If student does not pass the Challenge Exam, FREE 1XX for 3 credit hours will be awarded |
| AP Physics C: Mechanics | 4 | Challenge exam, PHGN 100, If student does not pass the Challenge Exam, FREE 1XX for 3 credit hours will be awarded |
| AP Physics C: Mechanics | 5 | PHGN 100 |
| AP Physics 1: Algebra-Based | N/A | No credit |
| AP Physics 2: Algebra-Based | N/A | No credit |
| **World Lang.** | | |