1 Functions, Bijections, and Counting

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Section 4.2 Counting

CS 130 – Discrete Structures

Counting: Multiplication Principle
• The idea is to find out how many members are present in a finite set.
• Multiplication Principle: If there are n possible outcomes for a first event and m possible outcomes for a second event, then there are n·m possible outcomes for the sequence of the two events.
• From the multiplication principle, it follows that for two sets A and B, $|A \times B| = |A| \cdot |B|$, where $A \times B$ consists of all ordered pairs with first component from A and second component from B.

Examples
• How many four-digit numbers can there be if repetition of digits is allowed?
• And if repetition of digits is not allowed?
• If a man has 4 suits, 8 shirts and 5 ties, how many outfits can he put together?

Counting: Addition Principle
• Addition Principle: If A and B are disjoint events with n and m outcomes, respectively, then the total number of possible outcomes for the event “A or B” is n + m.
• If A and B are disjoint sets, then $|A \cup B| = |A| + |B|$ by the addition principle.
• Example: A customer wants to purchase a vehicle from a dealer. The dealer has 23 autos and 14 trucks in stock. How many selections does the customer have?

More On Addition Principle
• If A and B are disjoint sets, then $|A \cup B| = |A| + |B|$.
• Prove that if A and B are finite sets, then $|A - B| = |A| - |A \cap B|$, and $|A - B| = |A| - |B|$ if $B \subseteq A$.

Writing $B'$ for the complement of B in the universal set U, we have $(A - B) \cup (A \cap B) = (A \cap B') \cup (A \cap B) = A \cap (B' \cup B) = A \cap U = A$. Also, $A - B$ and $A \cap B$ are disjoint sets, so by the addition principle $|A| = |(A - B) \cup (A \cap B)| = |A - B| + |A \cap B|$. Hence $|A - B| = |A| - |A \cap B|$. If $B \subseteq A$, then $A \cap B = B$, and hence $|A - B| = |A| - |B|$.

Using Principles Together
• How many four-digit numbers begin with a 4 or a 5?
• How many three-digit integers (numbers between 100 and 999 inclusive) are even?
• Suppose the last four digits of a telephone number must include at least one repeated digit.
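
The counts asked for in the excerpt above can be checked numerically. The following Python sketch is not part of the slides; it assumes that a "four-digit number" may not start with 0 (if leading zeros were allowed, the first factor would be 10 instead of 9), and the variable and function names are illustrative only.

```python
from itertools import product

# Multiplication principle (assumption: the leading digit is 1-9).
with_repetition = 9 * 10 * 10 * 10      # four-digit numbers, digits may repeat
without_repetition = 9 * 9 * 8 * 7      # four-digit numbers, all digits distinct
outfits = 4 * 8 * 5                     # suits x shirts x ties

# Addition principle: autos and trucks are disjoint choices.
vehicles = 23 + 14

# Both principles together.
starts_with_4_or_5 = 1000 + 1000        # 10*10*10 endings for each leading digit
even_three_digit = 9 * 10 * 5           # hundreds x tens x even units digit


def brute_force_four_digit(distinct):
    """Count four-digit numbers by enumeration, optionally with distinct digits."""
    count = 0
    for digits in product(range(10), repeat=4):
        if digits[0] == 0:
            continue  # not a four-digit number under our assumption
        if distinct and len(set(digits)) < 4:
            continue
        count += 1
    return count


print(with_repetition, without_repetition, outfits, vehicles)
print(starts_with_4_or_5, even_three_digit)
```

The brute-force helper is only there as a cross-check on the products; for larger problems the closed-form products are the point of the two principles.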

180: Counting Techniques

In the following exercise we demonstrate the use of a few fundamental counting principles, namely the addition, multiplication, complementary, and inclusion-exclusion principles. While none of the principles is particularly complicated in its own right, it does take some practice to become familiar with them and to recognise when they are applicable. I have attempted to indicate where alternate approaches are possible (and reasonable).

Problem: Assume $n \ge 2$ and $m \ge 1$. Count the number of functions $f : [n] \to [m]$
(i) in total.
(ii) such that $f(1) = 1$ or $f(2) = 1$.
(iii) with $\min_{x \in [n]} f(x) \le 5$.
(iv) such that $f(1) \ge f(2)$.
(v) that are strictly increasing; that is, whenever $x < y$, $f(x) < f(y)$.
(vi) that are one-to-one (injective).
(vii) that are onto (surjective).
(viii) that are bijections.
(ix) such that $f(x) + f(y)$ is even for every $x, y \in [n]$.
(x) with $\max_{x \in [n]} f(x) = \min_{x \in [n]} f(x) + 1$.

Solution:
(i) A function $f : [n] \to [m]$ assigns to every integer $1 \le x \le n$ an integer $1 \le f(x) \le m$. For each integer x, we have m options. As we make n such choices (independently), the total number of functions is $m \times m \times \dots \times m = m^n$.
(ii) (We assume $n \ge 2$.) Let $A_1$ be the set of functions with $f(1) = 1$, and $A_2$ the set of functions with $f(2) = 1$. Then $A_1 \cup A_2$ represents those functions with $f(1) = 1$ or $f(2) = 1$, which is precisely what we need to count. We have $|A_1 \cup A_2| = |A_1| + |A_2| - |A_1 \cap A_2|$.
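
For small n and m, parts (i), (ii) and (vi) can be checked by listing every function. The sketch below is an illustration, not part of the original solution: it represents $f$ as the tuple $(f(1), \dots, f(n))$, and the closed forms $2m^{n-1} - m^{n-2}$ for (ii) and $m!/(m-n)!$ for (vi) are filled in here from the inclusion-exclusion setup and the standard injection count rather than quoted from the excerpt.

```python
from itertools import product
from math import perm


def functions(n, m):
    """Yield every function f: [n] -> [m] as a tuple (f(1), ..., f(n))."""
    return product(range(1, m + 1), repeat=n)


def count(n, m, predicate=lambda f: True):
    """Count the functions [n] -> [m] that satisfy the predicate."""
    return sum(1 for f in functions(n, m) if predicate(f))


n, m = 3, 4  # small illustrative values with n >= 2

total = count(n, m)                                                     # part (i)
first_or_second_is_one = count(n, m, lambda f: f[0] == 1 or f[1] == 1)  # part (ii)
injective = count(n, m, lambda f: len(set(f)) == n)                     # part (vi)

print(total, m ** n)
print(first_or_second_is_one, 2 * m ** (n - 1) - m ** (n - 2))
print(injective, perm(m, n))
```

Enumeration grows as $m^n$, so this is only a sanity check on the formulas for small inputs.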

Useful Relations in Permutations and Combination 1. Useful Relations

1. Useful Relations - Factorial: $n! = n \cdot (n-1)!$
2. ${}^{n}C_{r} = {}^{n}P_{r} / r!$
3. ${}^{n}P_{r} = n \cdot {}^{n-1}P_{r-1}$
4. Useful Relations - Combinations
   1. ${}^{n}C_{r} = {}^{n}C_{n-r}$. Example: ${}^{8}C_{6} = {}^{8}C_{2} = \dfrac{8 \times 7}{2 \times 1} = 28$
   2. ${}^{n}C_{n} = 1$
   3. ${}^{n}C_{0} = 1$
   4. ${}^{n}C_{0} + {}^{n}C_{1} + {}^{n}C_{2} + \dots + {}^{n}C_{n} = 2^{n}$. Example: ${}^{4}C_{0} + {}^{4}C_{1} + {}^{4}C_{2} + {}^{4}C_{3} + {}^{4}C_{4} = 1 + 4 + 6 + 4 + 1 = 16 = 2^{4}$
   ${}^{n}C_{r-1} + {}^{n}C_{r} = {}^{n+1}C_{r}$ (Pascal's Law)
   ${}^{n}C_{r} / {}^{n}C_{r-1} = (n - r + 1)/r$
   If ${}^{n}C_{x} = {}^{n}C_{y}$ then either $x = y$ or $n - x = y$.
5. Selection from identical objects: Some Basic Facts. The number of selections of r objects out of n identical objects is 1. The total number of selections of zero or more objects from n identical objects is n + 1.
6. Permutations of Objects when All Objects are Not Distinct. The number of ways in which n things can be arranged taking them all at a time, when $P_1$ of the things are exactly alike of a 1st type, $P_2$ of them are exactly alike of a 2nd type, ..., $P_r$ of them are exactly alike of an r-th type, and the rest are all distinct, is $\dfrac{n!}{P_1! \, P_2! \cdots P_r!}$.
   Example: how many ways can you arrange the letters in the word THESE? $5!/2! = 120/2 = 60$
   Example: how many ways can you arrange the letters in the word REFERENCE? $9!/(2! \cdot 4!) = 362880/(2 \times 24) = 7560$
7. Circular Permutations. Case 1: when clockwise and anticlockwise arrangements are different, the number of circular permutations (arrangements) of n different things is $(n-1)!$. …
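
The relations listed above are easy to spot-check with Python's standard library (`math.comb`, `math.perm`, `math.factorial`). The helper `arrangements` below is a hypothetical name introduced for this sketch; it implements the $n!/(P_1! \, P_2! \cdots P_r!)$ rule for words with repeated letters.

```python
from collections import Counter
from math import comb, factorial, perm


def arrangements(word):
    """Number of distinct orderings of the letters of `word`."""
    total = factorial(len(word))
    for multiplicity in Counter(word).values():
        total //= factorial(multiplicity)  # divide out each group of identical letters
    return total


def relations_hold(n, r):
    """Check the listed identities for one pair 1 <= r <= n."""
    return (
        comb(n, r) == perm(n, r) // factorial(r)
        and perm(n, r) == n * perm(n - 1, r - 1)
        and comb(n, r) == comb(n, n - r)
        and comb(n, r - 1) + comb(n, r) == comb(n + 1, r)   # Pascal's Law
        and comb(n, r) * r == comb(n, r - 1) * (n - r + 1)  # ratio rule, cross-multiplied
    )


print(comb(8, 6), comb(8, 2))
print(sum(comb(4, r) for r in range(5)))
print(arrangements("THESE"), arrangements("REFERENCE"))
```

The ratio rule is checked in cross-multiplied form so that everything stays in exact integer arithmetic.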

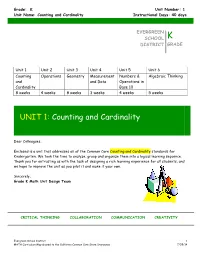

UNIT 1: Counting and Cardinality

Grade: K Unit Number: 1 Unit Name: Counting and Cardinality Instructional Days: 40 days

EVERGREEN SCHOOL DISTRICT GRADE K

Unit 1 Unit 2 Unit 3 Unit 4 Unit 5 Unit 6 Counting Operations Geometry Measurement Numbers & Algebraic Thinking and and Data Operations in Cardinality Base 10 8 weeks 4 weeks 8 weeks 3 weeks 4 weeks 5 weeks

UNIT 1: Counting and Cardinality

Dear Colleagues, Enclosed is a unit that addresses all of the Common Core Counting and Cardinality standards for Kindergarten. We took the time to analyze, group and organize them into a logical learning sequence. Thank you for entrusting us with the task of designing a rich learning experience for all students, and we hope to improve the unit as you pilot it and make it your own. Sincerely, Grade K Math Unit Design Team

CRITICAL THINKING COLLABORATION COMMUNICATION CREATIVITY

Evergreen School District MATH Curriculum Map aligned to the California Common Core State Standards 7/29/14

UNIT 1 TABLE OF CONTENTS
Overview of the grade K Mathematics Program
Essential Standards
Emphasized Mathematical Practices
Enduring Understandings & Essential Questions
Chapter Overviews
Chapter 1
Chapter 2
Chapter 3
Chapter 4
End-of-Unit Performance Task
Appendices

Overview of the Grade K Mathematics Program UNIT NAME APPROX. …

Combinatorics

Problem: How to count without counting.
• How do you figure out how many things there are with a certain property without actually enumerating all of them?
Sometimes this requires a lot of cleverness and deep mathematical insights. But there are some standard techniques.
• That's what we'll be studying.

Bijection Rule
The Bijection Rule: If $f : A \to B$ is a bijection, then $|A| = |B|$.
• We used this rule in defining cardinality for infinite sets.
• Now we'll focus on finite sets.
We sometimes use the bijection rule without even realizing it:
• I count how many people are in favor of something by counting the number of hands raised.
• I'm hoping that there's a bijection between the people in favor and the hands raised!

Answer: 26 choices for the first letter, 26 for the second, 10 choices for the first number, the second number, and the third number: $26^2 \times 10^3 = 676{,}000$.

Example 2: A traveling salesman wants to do a tour of all 50 state capitals. How many ways can he do this?
Answer: 50 choices for the first place to visit, 49 for the second, …
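
The two answers above are instances of the product rule and can be reproduced in a few lines of Python. This is a sketch under assumptions: the question for the first answer is missing from the excerpt, so the code simply takes it to be about strings of two letters followed by three digits, and it assumes the truncated second answer continues down to 1 choice, giving 50!.

```python
from itertools import permutations, product
from math import factorial
from string import ascii_uppercase, digits

# First answer: 26 * 26 * 10 * 10 * 10 (assumed: two letters, then three digits).
labels_formula = 26 ** 2 * 10 ** 3
labels_enumerated = sum(
    1 for _ in product(ascii_uppercase, ascii_uppercase, digits, digits, digits)
)

# Example 2: 50 * 49 * ... * 1 orderings of the 50 capitals (assumed continuation).
tours = factorial(50)

# Cross-check the same reasoning on a size we can enumerate.
small_tours = sum(1 for _ in permutations(range(6)))

print(labels_formula, labels_enumerated)
print(tours)
print(small_tours, factorial(6))
```

Enumerating all 50! tours is out of reach, which is exactly the "count without counting" point of the excerpt.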

Inclusion‒Exclusion Principle

In combinatorics, the inclusion–exclusion principle (also known as the sieve principle) is an equation relating the sizes of two sets and their union. It states that if A and B are two (finite) sets, then

$$|A \cup B| = |A| + |B| - |A \cap B|.$$

The meaning of the statement is that the number of elements in the union of the two sets is the sum of the numbers of elements in each set, minus the number of elements that are in both. Similarly, for three sets A, B and C,

$$|A \cup B \cup C| = |A| + |B| + |C| - |A \cap B| - |A \cap C| - |B \cap C| + |A \cap B \cap C|.$$

This can be seen by counting how many times each region of the three-set Venn diagram is included in the right hand side. More generally, for finite sets $A_1, \dots, A_n$, one has the identity

$$\left|\bigcup_{i=1}^{n} A_i\right| = \sum_{i} |A_i| - \sum_{i<j} |A_i \cap A_j| + \sum_{i<j<k} |A_i \cap A_j \cap A_k| - \dots + (-1)^{n-1} |A_1 \cap \dots \cap A_n|.$$

This can be compactly written as

$$\left|\bigcup_{i=1}^{n} A_i\right| = \sum_{k=1}^{n} (-1)^{k+1} \sum_{1 \le i_1 < \dots < i_k \le n} |A_{i_1} \cap \dots \cap A_{i_k}|.$$

The name comes from the idea that the principle is based on over-generous inclusion, followed by compensating exclusion. When n > 2 the exclusion of the pairwise intersections is (possibly) too severe, and the correct formula is as shown with alternating signs. This formula is attributed to Abraham de Moivre; it is sometimes also named for Daniel da Silva, Joseph Sylvester or Henri Poincaré.

Figure: Inclusion–exclusion illustrated for three sets. Each term of the inclusion-exclusion formula gradually corrects the count until finally each portion of the Venn diagram is counted exactly once.

Proof. Let A denote the union of the sets $A_1, \dots, A_n$. To prove the inclusion–exclusion principle in general, we first have to verify the identity …
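
The alternating-sign sum can be written out directly for any finite list of sets. The Python sketch below is an illustration added here, not part of the excerpt; `union_size` is a hypothetical helper name, and the three example sets are arbitrary.

```python
from itertools import combinations


def union_size(sets):
    """Size of the union of `sets`, computed by inclusion-exclusion."""
    total = 0
    for k in range(1, len(sets) + 1):
        sign = 1 if k % 2 == 1 else -1           # +, -, +, ... by intersection order
        for group in combinations(sets, k):
            total += sign * len(set.intersection(*group))
    return total


A = {1, 2, 3, 4}
B = {3, 4, 5}
C = {4, 5, 6, 7}

print(union_size([A, B]), len(A | B))
print(union_size([A, B, C]), len(A | B | C))
```

The loop visits all $2^n - 1$ non-empty subfamilies, so in practice the formula is useful when the intersection sizes are known in closed form rather than computed set by set.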

17 Axiom of Choice

Math 361 Axiom of Choice

Definition 17.1. Let $\mathcal{F}$ be a nonempty set of nonempty sets. Then a choice function for $\mathcal{F}$ is a function f such that $f(S) \in S$ for all $S \in \mathcal{F}$.

Example 17.2. Let $\mathcal{F} = \mathcal{P}(\mathbb{N}) \setminus \{\emptyset\}$. Then we can define a choice function f by $f(S) = $ the least element of S.

Example 17.3. Let $\mathcal{F} = \mathcal{P}(\mathbb{Z}) \setminus \{\emptyset\}$. Then we can define a choice function f by $f(S) = \varepsilon n$, where $n = \min\{|z| : z \in S\}$ and, if $n \ne 0$, $\varepsilon = \min\{z/|z| : |z| = n,\ z \in S\}$.

Example 17.4. Let $\mathcal{F} = \mathcal{P}(\mathbb{Q}) \setminus \{\emptyset\}$. Then we can define a choice function f as follows. Let $g : \mathbb{Q} \to \mathbb{N}$ be an injection. Then $f(S) = q$, where $g(q) = \min\{g(r) : r \in S\}$.

Example 17.5. Let $\mathcal{F} = \mathcal{P}(\mathbb{R}) \setminus \{\emptyset\}$. Then it is impossible to explicitly define a choice function for $\mathcal{F}$.

Axiom 17.6 (Axiom of Choice (AC)). For every set $\mathcal{F}$ of nonempty sets, there exists a function f such that $f(S) \in S$ for all $S \in \mathcal{F}$. We say that f is a choice function for $\mathcal{F}$.

Theorem 17.7 (AC). If A, B are non-empty sets, then the following are equivalent:
(a) $A \preceq B$.
(b) There exists a surjection $g : B \to A$.

Proof. (a) $\Rightarrow$ (b): Suppose that $A \preceq B$.
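
The rule in Example 17.3 can be made concrete for finite sets of integers. The following Python sketch is only an illustration: the examples in the notes apply to arbitrary nonempty subsets, whereas this code assumes a finite, nonempty input, and the function name `choose` is hypothetical.

```python
def choose(S):
    """Pick an element of a finite nonempty set of integers, following Example 17.3.

    n is the smallest absolute value occurring in S; if both n and -n are in S,
    the sign eps = min{z/|z|} selects the negative one.
    """
    if not S:
        raise ValueError("a choice function is only defined on nonempty sets")
    n = min(abs(z) for z in S)
    if n == 0:
        return 0                      # 0 is in S, and eps * 0 = 0 for any sign
    eps = min(z // abs(z) for z in S if abs(z) == n)
    return eps * n


for S in [{-3, 3, 5}, {4, -7}, {0, 2}, {2, -2, 1}]:
    print(sorted(S), "->", choose(S))
```

What makes this a choice function is that the rule is deterministic and always returns a member of S; no such explicit rule is available for arbitrary nonempty sets of reals, which is the point of Example 17.5.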

STRUCTURE ENUMERATION and SAMPLING Chemical Structure Enumeration and Sampling Have Been Studied by Mathematicians, Computer

STRUCTURE ENUMERATION AND SAMPLING
MARKUS MERINGER
To appear in Handbook of Chemoinformatics Algorithms

Chemical structure enumeration and sampling have been studied by mathematicians, computer scientists and chemists for quite a long time. Given a molecular formula plus, optionally, a list of structural constraints, the typical questions are: (1) How many isomers exist? (2) Which are they? And, especially if (2) cannot be answered completely: (3) How to get a sample?

In this chapter we describe algorithms for solving these problems. The techniques are based on the representation of chemical compounds as molecular graphs (see Chapter 2), i.e. they are mainly applied to constitutional isomers. The major problem is that in silico molecular graphs have to be represented as labeled structures, while in chemical compounds, the atoms are not labeled. The mathematical concept for approaching this problem is to consider orbits of labeled molecular graphs under the operation of the symmetric group. We have to solve the so-called isomorphism problem.

According to our introductory questions, we distinguish several disciplines: counting, enumerating and sampling isomers. While counting only delivers the number of isomers, the remaining disciplines refer to constructive methods. Enumeration typically encompasses exhaustive and non-redundant methods, while sampling typically lacks these characteristics. However, sampling methods are sometimes better suited to solve real-world problems.

There is a wide range of applications where counting, enumeration and sampling techniques are helpful or even essential. Some of these applications are closely linked to other chapters of this book. Counting techniques deliver pure chemical information, they can help to estimate or even determine sizes of chemical databases or compound libraries obtained from combinatorial chemistry.

Methods of Enumeration of Microorganisms

Microbiology BIOL 275
ENUMERATION OF MICROORGANISMS

I. OBJECTIVES
• To learn the different techniques used to count the number of microorganisms in a sample.
• To be able to differentiate between different enumeration techniques and learn when each should be used.
• To have more practice in serial dilutions and calculations.

II. INTRODUCTION
For unicellular microorganisms, such as bacteria, the reproduction of the cell reproduces the entire organism. Therefore, microbial growth is essentially synonymous with microbial reproduction. To determine rates of microbial growth and death, it is necessary to enumerate microorganisms, that is, to determine their numbers. It is also often essential to determine the number of microorganisms in a given sample. For example, the ability to determine the safety of many foods and drugs depends on knowing the levels of microorganisms in those products.

A variety of methods has been developed for the enumeration of microbes. These methods measure cell numbers, cell mass, or cell constituents that are proportional to cell number. The four general approaches used for estimating the sizes of microbial populations are direct and indirect counts of cells and direct and indirect measurements of microbial biomass. Each method will be described in more detail below.

1. Direct Count of Cells
Cells are counted directly under the microscope or by an electronic particle counter. Two of the most common procedures used in microbiology are discussed below.

Direct Count Using a Counting Chamber
Direct microscopic counts are performed by spreading a measured volume of sample over a known area of a slide, counting representative microscopic fields, and relating the averages back to the appropriate volume-area factors.

Unit 1: Counting and Cardinality Goal: Students Will Learn Number Names, the Counting Sequence, How to Count to Tell the Number of Objects, and How to Compare Numbers

Kindergarten – Trimester 1: Aug – Oct (11 weeks) Unit 1: Counting and Cardinality Goal: Students will learn number names, the counting sequence, how to count to tell the number of objects, and how to compare numbers. Students will represent and use whole numbers, initially with a set of objects. Students will learn to describe shapes and space. Structures to Support CA Content Standards/CGI/Problem Solving: Real World Math, Problem Analysis “Think Time”, Partner Collaboration, Productive Struggle, Whole Group Student Share Youcubed Week of Inspirational Math https://www.youcubed.org/week-inspirational-math/ Common Unit Tasks and Critical Areas of Instruction Core Curriculum Work Assessments/Proficiency Scale(s): Critical Concepts: Focus Areas (use these activities most of the time): Trimester 1 Tasks & Assessments: ● Cognitively Guided Counting and Cardinality: -Counting Collections Instruction ● MyMath Ch 1: Numbers 0 to 5 -Describe, Draw, Describe ● Counting Collections ● MyMath Ch 2: Numbers to 10 -Card games ● Word Problems (Separate – ● MyMath Ch 3: Numbers Beyond 10 -CGI Assessment (do in BOY, MOY, and EOY Result Unknown, Join – ● Counting Collections: What is it? to gauge student growth) Result Unknown, Partitive ● Questions for Counting Collections ● CGI Assessment Launch Script Division) ● Number Talks/Math Warm Ups (Ordinal numbers, compare ● CGI Kinder intro Assessment A ● Name, recognize, count, and numbers) ● K-1 CGI assess codebook write numbers 0 to 20 Operations/Algebraic Thinking: ● Compare numbers to 10 ● Word Problems -

Equivalents to the Axiom of Choice and Their Uses A

EQUIVALENTS TO THE AXIOM OF CHOICE AND THEIR USES

A Thesis Presented to The Faculty of the Department of Mathematics, California State University, Los Angeles, In Partial Fulfillment of the Requirements for the Degree Master of Science in Mathematics

By James Szufu Yang

© 2015 James Szufu Yang. ALL RIGHTS RESERVED

The thesis of James Szufu Yang is approved. Mike Krebs, Ph.D. Kristin Webster, Ph.D. Michael Hoffman, Ph.D., Committee Chair. Grant Fraser, Ph.D., Department Chair. California State University, Los Angeles, June 2015

ABSTRACT
Equivalents to the Axiom of Choice and Their Uses
By James Szufu Yang

In set theory, the Axiom of Choice (AC) was formulated in 1904 by Ernst Zermelo. It is an addition to the older Zermelo-Fraenkel (ZF) set theory. We call it Zermelo-Fraenkel set theory with the Axiom of Choice and abbreviate it as ZFC. This paper starts with an introduction to the foundations of ZFC set theory, which includes the Zermelo-Fraenkel axioms, partially ordered sets (posets), the Cartesian product, the Axiom of Choice, and their related proofs. It then introduces several equivalent forms of the Axiom of Choice and proves that they are all equivalent. In the end, equivalents to the Axiom of Choice are used to prove a few fundamental theorems in set theory, linear analysis, and abstract algebra. This paper is concluded by a brief review of the work in it, followed by a few points of interest for further study in mathematics and/or set theory.

ACKNOWLEDGMENTS
Between the two department requirements to complete a master's degree in mathematics (the comprehensive exams and a thesis), I really wanted to experience doing research and writing a serious academic paper.

Bijections and Infinities 1 Key Ideas

INTRODUCTION TO MATHEMATICAL REASONING
Worksheet 6: Bijections and Infinities

1 Key Ideas

This week we are going to focus on the notion of bijection. This is a way we have to compare two different sets, and say that they have “the same number of elements”. Of course, when sets are finite everything goes smoothly, but when they are not... things get spiced up! But let us start, as usual, with some definitions.

Definition 1. A bijection between a set X and a set Y consists of matching elements of X with elements of Y in such a way that every element of X has a unique match, and every element of Y has a unique match.

Example 1. Let $X = \{1, 2, 3\}$ and $Y = \{a, b, c\}$. An example of a bijection between X and Y consists of matching 1 with a, 2 with b and 3 with c. A NOT example would match 1 and 2 with a and 3 with c. In this case, two things fail: a has two partners, and b has no partner.

Note: if you are familiar with these concepts, you may have recognized a bijection as a function between X and Y which is both injective (1:1) and surjective (onto). We will revisit the notion of bijection in this language in a few weeks. For now, we content ourselves with a slightly lower-level approach. However, you may be a little unhappy with the use of the word “matching” in a mathematical definition. Here is a more formal definition for the same concept.
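
Definition 1 and Example 1 translate directly into a small check on finite sets. The Python sketch below is an illustration added here, not the worksheet's formal definition; it assumes a matching is given as a collection of (x, y) pairs, and the name `is_bijection` is hypothetical.

```python
from collections import Counter


def is_bijection(matching, X, Y):
    """True if every element of X and every element of Y has exactly one partner."""
    pairs = set(matching)
    if any(x not in X or y not in Y for x, y in pairs):
        return False                               # a pair uses an element outside X or Y
    partners_of_x = Counter(x for x, _ in pairs)   # how many matches each x has
    partners_of_y = Counter(y for _, y in pairs)   # how many matches each y has
    return all(partners_of_x[x] == 1 for x in X) and all(
        partners_of_y[y] == 1 for y in Y
    )


X = {1, 2, 3}
Y = {"a", "b", "c"}

good = [(1, "a"), (2, "b"), (3, "c")]
bad = [(1, "a"), (2, "a"), (3, "c")]   # a has two partners, b has none

print(is_bijection(good, X, Y))
print(is_bijection(bad, X, Y))
```

When the check succeeds, the two sets necessarily have the same number of elements, which is the sense of “the same number of elements” used above for finite sets.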