Compiling and Running a Parallel Program on a First Generation Cray Supercluster

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

New CSC Computing Resources

New CSC computing resources Atte Sillanpää, Nino Runeberg CSC – IT Center for Science Ltd. Outline CSC at a glance New Kajaani Data Centre Finland’s new supercomputers – Sisu (Cray XC30) – Taito (HP cluster) CSC resources available for researchers CSC presentation 2 CSC’s Services Funet Services Computing Services Universities Application Services Polytechnics Ministries Data Services for Science and Culture Public sector Information Research centers Management Services Companies FUNET FUNET and Data services – Connections to all higher education institutions in Finland and for 37 state research institutes and other organizations – Network Services and Light paths – Network Security – Funet CERT – eduroam – wireless network roaming – Haka-identity Management – Campus Support – The NORDUnet network Data services – Digital Preservation and Data for Research Data for Research (TTA), National Digital Library (KDK) International collaboration via EU projects (EUDAT, APARSEN, ODE, SIM4RDM) – Database and information services Paituli: GIS service Nic.funet.fi – freely distributable files with FTP since 1990 CSC Stream Database administration services – Memory organizations (Finnish university and polytechnics libraries, Finnish National Audiovisual Archive, Finnish National Archives, Finnish National Gallery) 4 Current HPC System Environment Name Louhi Vuori Type Cray XT4/5 HP Cluster DOB 2007 2010 Nodes 1864 304 CPU Cores 10864 3648 Performance ~110 TFlop/s 34 TF Total memory ~11 TB 5 TB Interconnect Cray QDR IB SeaStar Fat tree 3D Torus CSC -

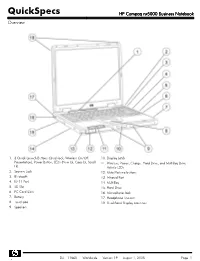

HP Compaq Nx5000 Business Notebook Overview

QuickSpecs HP Compaq nx5000 Business Notebook Overview 1. 3 Quick Launch Buttons (Quicklock, Wireless On/Off, 10. Display Latch Presentation), Power Button, LEDs (Num Lk, Caps Lk, Scroll 11. Wireless, Power, Charge, Hard Drive, and MultiBay Drive Lk) Activity LEDs 2. Security Lock 12. Mute/Volume buttons 3. Bluetooth 13. Infrared Port 4. RJ-11 Port 14. MultiBay 5. SD Slot 15. Hard Drive 6. PC Card Slots 16. Microphone Jack 7. Battery 17. Headphone line-out 8. Touchpad 18. Dual-Band Display Antennas 9. Speakers DA - 11860 Worldwide — Version 19 — August 1, 2005 Page 1 QuickSpecs HP Compaq nx5000 Business Notebook Overview 1. Power Connector 5. VGA 2. Serial 6. IEEE-1394 3. Parallel 7. RJ-45 4. S-Video 8. USB 2.0 (2) At A Glance Microsoft Windows XP Professional, Microsoft Windows XP Home Edition Intel® Pentium® M Processors up to 2.0 GHz Intel Celeron™ M up to 1.4-GHz Intel 855GM chipset with 400-MHz front side bus FreeDOS™ and SUSE® Linux HP Edition 9.1 preinstalled Support for 14.1-inch and 15.0-inch display 266-MHz DDR SDRAM 2 SODIMM slots available – upgradeable to 2048 MB Up to 60-GB 4200 or 5400 rpm, user-removable hard drives. 10/100 Ethernet NIC Touchpad with right/left button Support for MultiBay devices Support for Basic Port Replicator and Advanced Port Replicator Protected by a One- or Three-Year, Worldwide Limited Warranty – certain restrictions and exclusions apply. Consult the HP Customer Support Center for details. What's Special Microsoft Windows XP Professional, Microsoft Windows XP Home Edition Sleek industrial design with weight starting at 5.44 lb (travel weight) and 1.38-inch thin Integrated Intel Extreme Graphics 2 with up to 64 MB of dynamically allocated shared system memory for graphics Up to 9 hours battery life with 8-cell standard Li-Ion battery and Multibay battery. -

HP/NVIDIA Solutions for HPC Compute and Visualization

HP Update Bill Mannel VP/GM HPC & Big Data Business Unit Apollo Servers © Copyright 2015 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. The most exciting shifts of our time are underway Security Mobility Cloud Big Data Time to revenue is critical Decisions Making IT critical Business needs must be rapid to business success happen anywhere Change is constant …for billion By 2020 billion 30 devices 8 people trillion GB million 40 data 10 mobile apps 2 © Copyright 2015 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. HP’s compute portfolio for better IT service delivery Software-defined and cloud-ready API HP OneView HP Helion OpenStack RESTful APIs WorkloadWorkload-optimized Optimized Mission-critical Virtualized &cloud Core business Big Data, HPC, SP environments workloads applications & web scalability Workloads HP HP HP Apollo HP HP ProLiant HP Integrity HP Integrity HP BladeSystem HP HP HP ProLiant SL Moonshot Family Cloudline scale-up blades & Superdome NonStop MicroServer ProLiant ML ProLiant DL Density and efficiency Lowest Cost Convergence Intelligence Availability to scale rapidly built to Scale for continuous business to accelerate IT service delivery to increase productivity Converged Converged network Converged management Converged storage Common modular HP Networking HP OneView HP StoreVirtual VSA architecture Global support and services | Best-in-class partnerships | ConvergedSystem 3 © Copyright 2015 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. Hyperscale compute grows 10X faster than the total market HPC is a strategic growth market for HP Traditional Private Cloud HPC Service Provider $50 $40 36% $30 50+% $B $20 $10 $0 2013 2014 2015 2016 2017 4 © Copyright 2015 Hewlett-Packard Development Company, L.P. -

CRAY-2 Design Allows Many Types of Users to Solve Problems That Cannot Be Solved with Any Other Computers

Cray Research's mission is to lead in the development and marketing of high-performance systems that make a unique contribution to the markets they serve. For close to a decade, Cray Research has been the industry leader in large-scale computer systems. Today, the majority of supercomputers installed worldwide are Cray systems. These systems are used in advanced research laboratories around the world and have gained strong acceptance in diverse industrial environments. No other manufacturer has Cray Research's breadth of success and experience in supercomputer development. The company's initial product, the CRAY-1 Computer System, was first installed in 1978. The CRAY-1 quickly established itself as the standard of value for large-scale computers and was soon recognized as the first commercially successful vector processor. For some time previously, the potential advantages of vector processing had been understood, but effective practical implementation had eluded computer architects. The CRAY-1 broke that barrier, and today vectorization techniques are used commonly by scientists and engineers in a wide variety of disciplines. With its significant innovations in architecture and technology, the CRAY-2 Computer System sets the standard for the next generation of supercomputers. The CRAY-2 design allows many types of users to solve problems that cannot be solved with any other computers. The CRAY-2 provides an order of magnitude increase in performance over the CRAY-1 at an attractive price/performance ratio. Introducing the CRAY-2 Computer System The CRAY-2 Computer System sets the standard for the next generation of supercomputers. It is characterized by a large Common Memory (256 million 64-bit words), four Background Processors, a clock cycle of 4.1 nanoseconds (4.1 billionths of a second) and liquid immersion cooling. -

Revolution: Greatest Hits

REVOLUTION: GREATEST HITS SHORT ON TIME? VISIT THESE OBJECTS INSIDE THE REVOLUTION EXHIBITION FOR A QUICK OVERVIEW REVOLUTION: PUNCHED CARDS REVOLUTION: BIRTH OF THE COMPUTER REVOLUTION: BIRTH OF THE COMPUTER REVOLUTION: REAL-TIME COMPUTING Hollerith Electric ENIAC, 1946 ENIGMA, ca. 1935 Raytheon Apollo Guidance Tabulating System, 1890 Used in World War II to calculate Few technologies were as Computer, 1966 This device helped the US gun trajectories, only a few pieces remain of this groundbreaking top-secret German encryption new technology of Integrated census in record time and, in American computing system. machine known as ENIGMA. Circuits (ICs), guided Apollo 11 the process, launched the use of ENIAC used 18,000 vacuum Breaking its code was a full-time astronauts to the Moon and back in July of 1969, the first manned punched cards in business. IBM tubes and took several days task for Allied code breakers who moon landing in human history. used the Hollerith system as the to program. invented remarkable computing machines to help solve the The AGC was a lifeline for the basis of its business machines. astronauts throughout the eight ENIGMA riddle. day mission. REVOLUTION: MEMORY & STORAGE REVOLUTION: SUPERCOMPUTERS REVOLUTION: MINICOMPUTERS REVOLUTION: AI & ROBOTICS IBM RAMAC Disk Drive, 1956 Cray-1 Supercomputer, 1976 Kitchen Computer, 1969 SRI Shakey the Robot, 1969 Seeking a faster method of The stunning Cray-1 was the Made by Honeywell and sold by Shakey was the first robot that processing data than using fastest computer in the world. -

Utilizing the Modern Computing Capabilities of the HP Nonstop Server a Business White Paper from Creative System Software, Inc

Utilizing the Modern Computing Capabilities of the HP NonStop Server A business white paper from Creative System Software, Inc. Executive Summary ................................................ 2 The Road To NonStop ............................................. 2 Software Development – Time To Market .............. 4 A Tale Of Two Developers ...................................... 5 Modern Tools And Technologies ............................ 6 System Management ............................................. 7 Security .................................................................. 8 Make Your Data Accessible .................................... 9 The Proof Of The Pudding Is In The Eating ............ 10 Summary .............................................................. 11 EXECUTIVE SUMMARY During a downturn, it’s hard to see the upside, revenues are down and budgets have fallen along with them. Companies have frozen capital expenditures and the push is on to cut the costs of IT. This means intense pressure to do far more with fewer resources. For years, companies have known that they need to eliminate duplication of effort, lower service costs, increase efficiency, and improve business agility by reducing complexity. Fortunately there are some technologies that can help, the most important of which is the HP Integrity NonStop server platform. The Integrity NonStop is a modern, open and standards based computing platform that just happens to offer the highest reliability and scalability in the industry along with an outstanding total -

Compaq Ipaq H3650 Pocket PC

Compaq iPAQ H3650 QUICKSPECS Pocket PC Overview . AT A GLANCE . Easy expansion and customization . through Compaq Expansion Pack . System . • Thin, lightweight design with . brilliant color screen. • Audio record and playback – . Audio programs from the web, . MP3 music, or voice notations . • . Rechargeable battery that gives . up to 12 hours of battery life . • Protected by Compaq Services, . including a one-year warranty — . Certain restrictions and exclusions . apply. Consult the Compaq . Customer Support Center for . details. In Canada, consult the . Product Information Center at 1- . 800-567-1616 for details. 1. Instant on/off and Light Button 7. Calendar Button . 2. Display 8. Voice Recorder Button . 3. QStart Button 9. Microphone . 4. QMenu 10. Ambient Light Sensor . 5. Speaker and 5-way joystick 11. Alarm/Charge Indicator Light . 6. Contacts Button . 1 DA-10632-01-002 — 06.05.2000 Compaq iPAQ H3650 QUICKSPECS Pocket PC Standard Features . MODELS . Processor . Compaq iPAQ H3650 Pocket . 206 MHz Intel StrongARM SA-1110 32-bit RISC Processor . PC . Memory . 170293-001 – NA Commercial . 32-MB SDRAM, 16-MB Flash Memory . Interfaces . Front Panel Buttons 5 buttons plus five-way joystick; (1 on/off and backlight button and (2-5) . customizable application buttons) . Navigator Button 1 Five-way joystick . Side Panel Recorder Button 1 . Bottom Panel Reset Switch 1 . Stylus Eject Button 1 . Communications Port includes serial port . Infrared Port 1 (115 Kbps) . Speaker 1 . Light Sensor 1 . Microphone 1 . Communications Port 1 (with USB/Serial connectivity) . Stereo Audio Output Jack 1 (standard 3.5 mm) . Cradle Interfaces . Connector 1 . Cable 1 USB or Serial cable connects to PC . -

Sps3000 030602.Qxd (Page 1)

SPS 3000 MOBILE ACCESSORIES Scanning and Wireless Connectivity for the Compaq iPAQ™ Pocket PC The new Symbol SPS 3000 is the first expansion pack that delivers integrated data capture and real-time wireless communication to users of the Compaq iPAQ™ Pocket PC. With the SPS 3000, the Compaq iPAQ instantly becomes a more effective, business process automation tool with augmented capabilities that include bar code scanning and wireless connectivity. Compatible, Versatile and Flexible The SPS 3000 pack attaches easily to the Compaq iPAQ Pocket PC, and the lightweight, ergonomic design provides a secure, comfortable fit in the hand. The coupled device maintains 100% compatibility with Compaq iPAQ recharging and synchronization cradles for maximum convenience and cost-effectiveness. Features Benefits The SPS 3000 is available in three feature configurations: Symbol’s 1-D scan engine Fast, accurate data capture Scanning only; Wireless Local Area Network (WLAN) only; Integrated 802.11b WLAN Enables real-time information Scanning and WLAN. sharing between remote activities Scanning Only: Enables 1-dimensional bar code scanning, and host system which is activated using any of the five programmable Ergonomic, lightweight design Offers increased user comfort and application buttons on the Compaq iPAQ. An optional ‘virtual’ acceptance on-screen button may also be used. Peripheral compatibility Maximum convenience and WLAN Only: Provides 11Mbps Direct Sequence WLAN efficiency ® connectivity through an integrated Spectrum24 802.11b radio Very low power consumption Maintains expected battery life of and antenna. device Scanning and WLAN: Delivers a powerful data management solution with integrated bar code scanning and WLAN the corporate intranet, the Internet or mission critical applications, communication. -

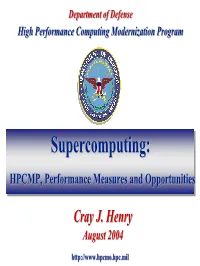

Supercomputing

Department of Defense High Performance Computing Modernization Program Supercomputing: Performance Measures and Opportunities Cray Henry, Director HPCMP, 4 May 2004 Cray J. Henry August 2004 http://www.hpcmo.hpc.mil 2004 HPEC Conference Presentation Outline What’s New in the HPCMP: New hardware; HPC Software Application Institutes; Capability Allocations; Open Research Systems; On-demand Computing. Performance Measures - HPCMP. Performance Measures – Challenges & Opportunities. HPCMP Centers 1993 2004 Legend MSRCs ADCs and DDCs [Chart: Total HPCMP End-of-Year Computational Capabilities, MSRCs and ADCs/DCs, Peak GFs and HABUs by Year and by Fiscal Year (TI-XX), FY 01 to FY 04; annotation "Over 400X Growth"] HPCMP Systems (MSRCs) 2004 -

Nonstop Product Offerings and Roadmap

NonStop Product Offerings and Roadmap Iain Liston-Brown (EMEA NED Presales Consulting) 8th May, 2013 © Copyright 2013 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. Forward-looking statements This is a rolling (up to three year) Roadmap and is subject to change without notice. This document contains forward looking statements regarding future operations, product development, product capabilities and availability dates. This information is subject to substantial uncertainties and is subject to change at any time without prior notification. Statements contained in this document concerning these matters only reflect Hewlett Packard's predictions and / or expectations as of the date of this document and actual results and future plans of Hewlett-Packard may differ significantly as a result of, among other things, changes in product strategy resulting from technological, internal corporate, market and other changes. This is not a commitment to deliver any material, code or functionality and should not be relied upon in making purchasing decisions. 2 © Copyright 2013 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. HP confidential information This is a rolling (up to three year) Roadmap and is subject to change without notice. This Roadmap contains HP Confidential Information. If you have a valid Confidential Disclosure Agreement with HP, disclosure of the Roadmap is subject to that CDA. If not, it is subject to the following terms: for a period of 3 years after the date of disclosure, you may use the Roadmap solely for the purpose of evaluating purchase decisions from HP and use a reasonable standard of care to prevent disclosures. -

NQE Release Overview RO–5237 3.3

NQE Release Overview RO–5237 3.3 Document Number 007–3795–001 Copyright © 1998 Silicon Graphics, Inc. and Cray Research, Inc. All Rights Reserved. This manual or parts thereof may not be reproduced in any form unless permitted by contract or by written permission of Silicon Graphics, Inc. or Cray Research, Inc. RESTRICTED RIGHTS LEGEND Use, duplication, or disclosure of the technical data contained in this document by the Government is subject to restrictions as set forth in subdivision (c) (1) (ii) of the Rights in Technical Data and Computer Software clause at DFARS 52.227-7013 and/or in similar or successor clauses in the FAR, or in the DOD or NASA FAR Supplement. Unpublished rights reserved under the Copyright Laws of the United States. Contractor/manufacturer is Silicon Graphics, Inc., 2011 N. Shoreline Blvd., Mountain View, CA 94043-1389. Autotasking, CF77, CRAY, Cray Ada, CraySoft, CRAY Y-MP, CRAY-1, CRInform, CRI/TurboKiva, HSX, LibSci, MPP Apprentice, SSD, SUPERCLUSTER, UNICOS, and X-MP EA are federally registered trademarks and Because no workstation is an island, CCI, CCMT, CF90, CFT, CFT2, CFT77, ConCurrent Maintenance Tools, COS, Cray Animation Theater, CRAY APP, CRAY C90, CRAY C90D, Cray C++ Compiling System, CrayDoc, CRAY EL, CRAY J90, CRAY J90se, CrayLink, Cray NQS, Cray/REELlibrarian, CRAY S-MP, CRAY SSD-T90, CRAY T90, CRAY T3D, CRAY T3E, CrayTutor, CRAY X-MP, CRAY XMS, CRAY-2, CSIM, CVT, Delivering the power . ., DGauss, Docview, EMDS, GigaRing, HEXAR, IOS, ND Series Network Disk Array, Network Queuing Environment, Network Queuing Tools, OLNET, RQS, SEGLDR, SMARTE, SUPERLINK, System Maintenance and Remote Testing Environment, Trusted UNICOS, UNICOS MAX, and UNICOS/mk are trademarks of Cray Research, Inc. -

Mr. Capellas Has Served As Our Non-Executive Chairman of the Chairman of the Board Board Since June 2017 and As a Member of Our Board of Directors Since March 2014

Toppan Merrill - Flex LTD Annual Report Combo Book - FYE 3.31.19 ED | 105212 | 09-Jul-19 16:19 | 19-11297-1.bb | Sequence: 20 CHKSUM Content: 43498 Layout: 11362 Graphics: 60748 CLEAN Part III—Proposals to be Considered at the 2019 Annual General Meeting of Shareholders AGM Proposal Nos. 1 and 2: Re-Election of Directors Michael D. Capellas, Mr. Capellas has served as our non-executive Chairman of the Chairman of the Board Board since June 2017 and as a member of our Board of Directors since March 2014. He has served as Principal at Capellas Strategic Principal, Capellas Partners since June 2013. He served as the Chairman of the Board Strategic Partners of VCE Company, LLC (VCE) from January 2011 until Director Since: 2014 November 2012 and as VCE’s Chief Executive Officer from May 2010 to September 2011. VCE is a joint venture between EMC Age: 64 Corporation and Cisco with investments from VMware, Inc. and Intel Board Committee: Corporation. Mr. Capellas was the Chairman and Chief Executive Nominating and Corporate Officer of First Data Corporation from September 2007 to Governance Committee (Chair) March 2010. From October 2006 to July 2007, Mr. Capellas served as a Senior Advisor at Silver Lake Partners. From November 2002 Other Public Company to January 2006, he served as Chief Executive Officer of MCI, Inc. Boards: (MCI), previously WorldCom, Inc. From March 2004 to Cisco Systems, Inc. January 2006, he also served as that company’s President. From November 2002 to March 2004, he was also Chairman of the Board Key Qualifications and of WorldCom, and he continued to serve as a member of the board Expertise: of directors of MCI until January 2006.