Scalable Creation of a Web-Scale Ontology

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Why the Inimitable Sarah Paulson Is the Future Of

She won an Emmy, SAG Award and Golden Globe for her bravura performance as Marcia Clark in last year’s FX miniseries, The People v. O.J. Simpson: American Crime Story, but it took Sarah Paulson almost another year to confirm what the TV industry really thinks about her acting chops. Earlier this year, her longtime collaborator and O.J. executive producer Ryan Murphy offered the actress the lead in Ratched, an origin story he is executive producing that focuses on Nurse Ratched, the iconic, sadistic nurse from the 1975 film One Flew Over the Cuckoo’s Nest. Murphy shopped the project around to networks, offering a package for the first time that included his frequent muse Paulson attached as star and producer. “That was very exciting and also very scary, because I thought, oh God, what if they take this out, and people are like, ‘No thanks, we’re good. We don’t need a Sarah Paulson show,’” says Paulson. “Thankfully, it all worked out very well.” In the wake of last year’s most acclaimed TV performance, everyone, TV networks and movie studios alike, wants to be in business with Paulson. Ratched sparked a high-stakes bidding war, with Netflix ultimately fending off suitors like Hulu and Apple (which is developing an original TV series strategy) for the project last month, giving the drama a hefty two-season commitment. And that is only one of three high-profile TV series that Paulson will film over the next year. In 2018, she’ll begin production on Katrina, the third installment in Murphy’s American Crime Story anthology series for FX, and continue on the other Murphy FX anthology hit that

Formal Concept Analysis in Knowledge Processing: A Survey on Applications

Expert Systems with Applications 40 (2013) 6538–6560. Contents lists available at SciVerse ScienceDirect. Expert Systems with Applications journal homepage: www.elsevier.com/locate/eswa. Review. Formal concept analysis in knowledge processing: A survey on applications. Jonas Poelmans a,c,⇑, Dmitry I. Ignatov c, Sergei O. Kuznetsov c, Guido Dedene a,b. a KU Leuven, Faculty of Business and Economics, Naamsestraat 69, 3000 Leuven, Belgium. b Universiteit van Amsterdam Business School, Roetersstraat 11, 1018 WB Amsterdam, The Netherlands. c National Research University Higher School of Economics, Pokrovsky boulevard, 11, 109028 Moscow, Russia. Keywords: Formal concept analysis (FCA); Knowledge discovery in databases; Text mining; Applications; Systematic literature overview. Abstract: This is the second part of a large survey paper in which we analyze recent literature on Formal Concept Analysis (FCA) and some closely related disciplines using FCA. We collected 1072 papers published between 2003 and 2011 mentioning terms related to Formal Concept Analysis in the title, abstract and keywords. We developed a knowledge browsing environment to support our literature analysis process. We use the visualization capabilities of FCA to explore the literature, to discover and conceptually represent the main research topics in the FCA community. In this second part, we zoom in on and give an extensive overview of the papers published between 2003 and 2011 which applied FCA-based methods for knowledge discovery and ontology engineering in various application domains. These domains include software mining, web analytics, medicine, biology and chemistry data. © 2013 Elsevier Ltd. All rights reserved. 1. Introduction mining, i.e. gaining insight into source code with FCA.

Facts Are Stubborn Things": Protecting Due Process from Virulent Publicity

Touro Law Review Volume 33 Number 2 Article 8 2017 "Facts Are Stubborn Things": Protecting Due Process from Virulent Publicity Benjamin Brafman Darren Stakey Follow this and additional works at: https://digitalcommons.tourolaw.edu/lawreview Part of the Civil Procedure Commons, Civil Rights and Discrimination Commons, and the First Amendment Commons Recommended Citation Brafman, Benjamin and Stakey, Darren (2017) ""Facts Are Stubborn Things": Protecting Due Process from Virulent Publicity," Touro Law Review: Vol. 33 : No. 2 , Article 8. Available at: https://digitalcommons.tourolaw.edu/lawreview/vol33/iss2/8 This Article is brought to you for free and open access by Digital Commons @ Touro Law Center. It has been accepted for inclusion in Touro Law Review by an authorized editor of Digital Commons @ Touro Law Center. For more information, please contact [email protected]. Brafman and Stakey: Facts Are Stubborn Things “FACTS ARE STUBBORN THINGS”: PROTECTING DUE PROCESS FROM VIRULENT PUBLICITY by Benjamin Brafman, Esq.* and Darren Stakey, Esq.** *Benjamin Brafman is the principal of a seven-lawyer firm Brafman & Associates, P.C. located in Manhattan. Mr. Brafman’s firm specializes in criminal law with an emphasis on White Collar criminal defense. Mr. Brafman received his law degree from Ohio Northern University, in 1974, graduating with Distinction and serving as Manuscript Editor of The Law Review. He went on to earn a Masters of Law Degree (LL.M.) in Criminal Justice from New York University Law School. In May of 2014, Mr. Brafman was awarded an Honorary Doctorate from Ohio Northern University Law School. Mr. Brafman, a former Assistant District Attorney in the Rackets Bureau of the New York County District Attorney’s Office, has been in private practice since 1980. -

Concept Maps Mining for Text Summarization

UNIVERSIDADE FEDERAL DO ESPÍRITO SANTO, CENTRO TECNOLÓGICO, DEPARTAMENTO DE INFORMÁTICA, PROGRAMA DE PÓS-GRADUAÇÃO EM INFORMÁTICA. Camila Zacché de Aguiar. Concept Maps Mining for Text Summarization. VITÓRIA-ES, BRAZIL, March 2017. Master's dissertation presented to the Programa de Pós-Graduação em Informática of the Universidade Federal do Espírito Santo, in partial fulfillment of the requirements for the degree of Master in Informatics. Advisor: Davidson Cury. Co-advisor: Amal Zouaq. International Cataloging-in-Publication Data (CIP) (Biblioteca Setorial Tecnológica, Universidade Federal do Espírito Santo, ES, Brasil). Aguiar, Camila Zacché de, 1987- A282c Concept maps mining for text summarization / Camila Zacché de Aguiar. – 2017. 149 leaves : ill. Advisor: Davidson Cury. Co-advisor: Amal Zouaq. Dissertation (Master's in Informatics) – Universidade Federal do Espírito Santo, Centro Tecnológico. 1. Computers in education. 2. Natural language processing (Computing). 3. Information retrieval. 4. Concept maps. 5. Text summarization. 6. Data mining (Computing). I. Cury, Davidson. II. Zouaq, Amal. III. Universidade Federal do Espírito Santo. Centro Tecnológico. IV. Title. CDU: 004. “Most of the fundamental ideas of science are essentially simple, and may, as a rule, be expressed in a language comprehensible to everyone.” Albert Einstein. Acknowledgments. I would like to thank the many people who have been with me over the years and who have contributed in one way or another to the completion of this master’s degree. Especially my advisor, Davidson Cury, who in a constructivist way disoriented me several times as a stimulus to the search for new answers.

Empowering Video Storytellers: Concept Discovery and Annotation for Large Audio-Video-Text Archives

Empowering Video Storytellers: Concept Discovery and Annotation for Large Audio-Video-Text Archives. A large amount of video content is available and stored by broadcasters, libraries and other enterprises. However, the lack of effective and efficient tools for searching and retrieving has prevented video from becoming a major value asset. Much work has been done on retrieving video information from constrained structured domains, such as news and sports, but general solutions for unstructured audio-video-language intensive content are missing. Our goal is to transform the multimedia archive into an organized and searchable asset so that human efforts can focus on a more important aspect: storytelling. This project involves a cross-disciplinary effort towards development of new techniques for organizing, summarizing, and question answering over audio-video-language intensive content, such as raw documentary footage. Such domains offer the full range of real-world situations and events, manifested with interaction of rich multimedia information. The team includes investigators from Schools of Engineering and Applied Science, Journalism, and Arts. It has extensive experience and deep expertise in analysis of multimedia information (video, audio, and language) as well as broad experience with professional documentary film production and interactive media designs. The project includes two major content partners, WITNESS and WNET, both providing unique video archives, well-defined application domains and user communities. In particular, WITNESS collects and manages a documentary video archive from activists all over the world to support the promotion of human rights. This archive has several important qualities: it is diverse (including interviews, documentation of events, and ‘hidden camera’ reporting), it is relatively ‘raw’ (shot by semi-professional operators), it has been shot for specific purposes (e.g.

Technical Phrase Extraction for Patent Mining: a Multi-Level Approach

2020 IEEE International Conference on Data Mining (ICDM). Technical Phrase Extraction for Patent Mining: A Multi-level Approach. Ye Liu, Han Wu, Zhenya Huang, Hao Wang, Jianhui Ma, Qi Liu, Enhong Chen*, Hanqing Tao, Ke Rui. Anhui Province Key Laboratory of Big Data Analysis and Application, School of Data Science & School of Computer Science and Technology, University of Science and Technology of China. {liuyer, wuhanhan, wanghao3, hqtao, kerui}@mail.ustc.edu.cn, {huangzhy, jianhui, qiliuql, cheneh}@ustc.edu.cn

Abstract: Recent years have witnessed a booming increase of patent applications, which provides an open chance for revealing the inner law of innovation, but in the meantime, puts forward higher requirements on patent mining techniques. Considering that patent mining highly relies on patent document analysis, this paper makes a focused study on constructing a technology portrait for each patent, i.e., to recognize technical phrases concerned in it, which can summarize and represent patents from a technology angle. To this end, we first give a clear and detailed description about technical phrases in patents based on various prior works and analyses. Then, combining characteristics of technical phrases and multi-level structures of patent documents, we develop an Unsupervised Multi-level Technical Phrase Extraction (UMTPE) model.

TABLE I: Technical Phrases vs. Non-technical Phrases

| Domain | Technical phrase | Non-technical phrase |
| --- | --- | --- |
| Electricity | wireless communication, netcentric computer service | wire and cable, TV signal, power plug |
| Mechanical Engineering | fluid leak detection, power transmission | building materials, steer column, seat back |

relevant works have been explored on phrase extraction. According to the extraction target, they can be divided into Key Phrase Extraction [8], Named Entity Recognition (NER) [9] and Concept Extraction [10]. Key Phrase Extraction aims to extract phrases that provide a concise summary of a document, which prefers those both frequently-occurring and closed to

OJ Episode 1, FINAL, 6-3-15.Fdx Script

EPISODE 1: “FROM THE ASHES OF TRAGEDY” Written by Scott Alexander and Larry Karaszewski Based on “THE RUN OF HIS LIFE” By Jeffrey Toobin Directed by Ryan Murphy Fox 21 FX Productions Color Force FINAL 6-3-15 Ryan Murphy Television ALL RIGHTS RESERVED. COPYRIGHT © 2015 TWENTIETH CENTURY FOX FILM CORPORATION. NO PORTION OF THIS SCRIPT MAY BE PERFORMED, PUBLISHED, REPRODUCED, SOLD, OR DISTRIBUTED BY ANY MEANS OR QUOTED OR PUBLISHED IN ANY MEDIUM, INCLUDING ANY WEB SITE, WITHOUT PRIOR WRITTEN CONSENT OF TWENTIETH CENTURY FOX FILM CORPORATION. DISPOSAL OF THIS SCRIPT COPY DOES NOT ALTER ANY OF THE RESTRICTIONS SET FORTH ABOVE. 1 ARCHIVE FOOTAGE - THE RODNEY KING BEATING. Grainy, late-night 1 video. An AFRICAN-AMERICAN MAN lies on the ground. A handful of white LAPD COPS stand around, watching, while two of them ruthlessly BEAT and ATTACK him. ARCHIVE FOOTAGE - THE L.A. RIOTS. The volatile eruption of a city. Furious AFRICAN-AMERICANS tear Los Angeles apart. Trash cans get hurled through windows. Buildings burn. Cars get overturned. People run through the streets. Faces are angry, frustrated, screaming. The NOISE and FURY and IMAGES build, until -- SILENCE. Then, a single CARD: "TWO YEARS LATER" CUT TO: 2 EXT. ROCKINGHAM HOUSE - LATE NIGHT 2 ANGLE on a BRONZE STATUE of OJ SIMPSON, heroic in football regalia. Larger than life, inspiring. It watches over OJ'S estate, an impressive Tudor mansion on a huge corner lot. It's June 12, 1994. Out front, a young LIMO DRIVER waits. He nervously checks his watch. Then, OJ SIMPSON comes rushing from the house. -

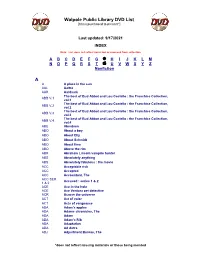

Walpole Public Library DVD List A

Walpole Public Library DVD List [Items purchased to present*] Last updated: 9/17/2021 INDEX Note: List does not reflect items lost or removed from collection A B C D E F G H I J K L M N O P Q R S T U V W X Y Z Nonfiction A A A place in the sun AAL Aaltra AAR Aardvark The best of Bud Abbot and Lou Costello : the Franchise Collection, ABB V.1 vol.1 The best of Bud Abbot and Lou Costello : the Franchise Collection, ABB V.2 vol.2 The best of Bud Abbot and Lou Costello : the Franchise Collection, ABB V.3 vol.3 The best of Bud Abbot and Lou Costello : the Franchise Collection, ABB V.4 vol.4 ABE Aberdeen ABO About a boy ABO About Elly ABO About Schmidt ABO About time ABO Above the rim ABR Abraham Lincoln vampire hunter ABS Absolutely anything ABS Absolutely fabulous : the movie ACC Acceptable risk ACC Accepted ACC Accountant, The ACC SER. Accused : series 1 & 2 1 & 2 ACE Ace in the hole ACE Ace Ventura pet detective ACR Across the universe ACT Act of valor ACT Acts of vengeance ADA Adam's apples ADA Adams chronicles, The ADA Adam ADA Adam’s Rib ADA Adaptation ADA Ad Astra ADJ Adjustment Bureau, The *does not reflect missing materials or those being mended Walpole Public Library DVD List [Items purchased to present*] ADM Admission ADO Adopt a highway ADR Adrift ADU Adult world ADV Adventure of Sherlock Holmes’ smarter brother, The ADV The adventures of Baron Munchausen ADV Adverse AEO Aeon Flux AFF SEAS.1 Affair, The : season 1 AFF SEAS.2 Affair, The : season 2 AFF SEAS.3 Affair, The : season 3 AFF SEAS.4 Affair, The : season 4 AFF SEAS.5 Affair, -

2019 Golden Globes Ballot

2019 Golden Globes Ballot

BEST MOTION PICTURE / MUSICAL OR COMEDY: Crazy Rich Asians; The Favourite; Green Book; Mary Poppins Returns; Vice

BEST MOTION PICTURE / DRAMA: Black Panther; BlacKkKlansman; Bohemian Rhapsody; If Beale Street Could Talk; A Star Is Born

BEST TELEVISION SERIES / MUSICAL OR COMEDY: Barry (HBO); The Good Place (NBC); Kidding (Showtime); The Kominsky Method

BEST PERFORMANCE BY AN ACTOR IN A TELEVISION SERIES / MUSICAL OR COMEDY: Sacha Baron Cohen, Who Is America?; Jim Carrey, Kidding; Michael Douglas, The Kominsky Method; Donald Glover, Atlanta; Bill Hader, Barry

BEST PERFORMANCE BY AN ACTRESS IN A TELEVISION SERIES / DRAMA: Caitriona Balfe, Outlander; Elisabeth Moss, The Handmaid’s Tale; Sandra Oh, Killing Eve; Julia Roberts, Homecoming; Keri Russell, The Americans

BEST PERFORMANCE BY AN ACTOR IN A TELEVISION SERIES / DRAMA: Jason Bateman, Ozark; Stephan James, Homecoming

BEST PERFORMANCE BY AN ACTRESS IN A SUPPORTING ROLE IN A SERIES, LIMITED SERIES OR MOTION PICTURE MADE FOR TELEVISION: Alex Borstein, The Marvelous Mrs. Maisel; Patricia Clarkson, Sharp Objects; Penelope Cruz, The Assassination of Gianni Versace: American Crime Story; Thandie Newton, Westworld; Yvonne Strahovski, The Handmaid’s Tale

BEST PERFORMANCE BY AN ACTOR IN A SUPPORTING ROLE IN A SERIES, LIMITED SERIES OR MOTION PICTURE MADE FOR TELEVISION: Alan Arkin, The Kominsky Method; Kieran Culkin, Succession; Edgar Ramirez, The Assassination of Gianni Versace: American Crime Story; Ben Whishaw, A Very English Scandal; Henry Winkler, Barry

Information Extraction in Text Mining, Matt Mullins, Western Washington University

Western Washington University, Western CEDAR. Computer Science Graduate and Undergraduate Student Scholarship, Computer Science, 2008. Information extraction in text mining. Matt Mullins, Western Washington University. Follow this and additional works at: https://cedar.wwu.edu/computerscience_stupubs Part of the Computer Sciences Commons. Recommended Citation: Mullins, Matt, "Information extraction in text mining" (2008). Computer Science Graduate and Undergraduate Student Scholarship. 4. https://cedar.wwu.edu/computerscience_stupubs/4 This Research Paper is brought to you for free and open access by the Computer Science at Western CEDAR. It has been accepted for inclusion in Computer Science Graduate and Undergraduate Student Scholarship by an authorized administrator of Western CEDAR. For more information, please contact [email protected]. Information Extraction in Text Mining. Matt Mullins, Computer Science Department, Western Washington University, Bellingham, WA. [email protected] ABSTRACT: Text mining’s goal, simply put, is to derive information from text. Using multitudes of technologies from overlapping fields like Data Mining and Natural Language Processing we can yield knowledge from our text and facilitate other processing. Information Extraction (IE) plays a large part in text mining when we need to extract this data. In this survey we concern ourselves with general methods borrowed from other fields, with lower-level NLP techniques, IE methods, text representation models, and categorization techniques, and with specific implementations of some of these methods. Finally, with our new understanding of the field we can discuss a proposal for a system that combines WordNet, Wikipedia, and extracted definitions and concepts from web pages into a user-friendly search engine designed for topic-specific knowledge.

Concept Mining: a Conceptual Understanding Based Approach

Concept Mining: A Conceptual Understanding based Approach by Shady Shehata. A thesis presented to the University of Waterloo in fulfillment of the thesis requirement for the degree of Doctor of Philosophy in Electrical and Computer Engineering. Waterloo, Ontario, Canada, 2009. © Shady Shehata 2009. I hereby declare that I am the sole author of this thesis. This is a true copy of the thesis, including any required final revisions, as accepted by my examiners. I understand that my thesis may be made electronically available to the public. Abstract: Due to the daily rapid growth of the information, there are considerable needs to extract and discover valuable knowledge from data sources such as the World Wide Web. Most of the common techniques in text mining are based on the statistical analysis of a term, either word or phrase. These techniques consider documents as bags of words and pay no attention to the meanings of the document content. In addition, statistical analysis of a term frequency captures the importance of the term within a document only. However, two terms can have the same frequency in their documents, but one term contributes more to the meaning of its sentences than the other term. Therefore, there is an intensive need for a model that captures the meaning of linguistic utterances in a formal structure. The underlying model should indicate terms that capture the semantics of text. In this case, the model can capture terms that present the concepts of the sentence, which leads to discovering the topic of the document. A new concept-based model that analyzes terms on the sentence, document and corpus levels rather than the traditional analysis of document only is introduced.
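The bag-of-words limitation mentioned in this abstract can be illustrated with a minimal sketch. It is not the thesis's concept-based model; the sample sentence, the tokenizer regex, and the helper name `term_frequencies` are assumptions made purely for illustration. The point is only that raw term frequency cannot distinguish a content-bearing term from a function word when their counts are equal.

```python
import re
from collections import Counter


def term_frequencies(text: str) -> Counter:
    """Bag-of-words view: lowercase the text and count each token.

    Word order and meaning are discarded; only counts remain.
    (Hypothetical helper, not taken from the thesis.)
    """
    return Counter(re.findall(r"[a-z']+", text.lower()))


# Illustrative document (an assumption, not from the source).
doc = "The committee approved the budget. The budget was large."
tf = term_frequencies(doc)

# "approved" carries the main action of its sentence, while "was" is a
# function word, yet a pure frequency model scores them identically.
print(tf["approved"], tf["was"])
```

A frequency-only model has no way to rank "approved" above "was" here, which is the gap the abstract says a meaning-aware, sentence-level analysis is meant to address.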

A User-Centered Concept Mining System for Query and Document Understanding at Tencent

A User-Centered Concept Mining System for Query and Document Understanding at Tencent. Bang Liu1∗, Weidong Guo2∗, Di Niu1, Chaoyue Wang2, Shunnan Xu2, Jinghong Lin2, Kunfeng Lai2, Yu Xu2. 1University of Alberta, Edmonton, AB, Canada. 2Platform and Content Group, Tencent, Shenzhen, China.

ABSTRACT: Concepts embody the knowledge of the world and facilitate the cognitive processes of human beings. Mining concepts from web documents and constructing the corresponding taxonomy are core research problems in text understanding and support many downstream tasks such as query analysis, knowledge base construction, recommendation, and search. However, we argue that most prior studies extract formal and overly general concepts from Wikipedia or static web pages, which are not representing the user perspective. In this paper, we describe our experience of implementing and deploying ConcepT in Tencent QQ Browser. It discovers user-centered concepts at the right granularity conforming to user interests, by mining a large amount of user queries and interactive search click

ACM Reference Format: Bang Liu, Weidong Guo, Di Niu, Chaoyue Wang, Shunnan Xu, Jinghong Lin, Kunfeng Lai, Yu Xu. 2019. A User-Centered Concept Mining System for Query and Document Understanding at Tencent. In The 25th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD ’19), August 4–8, 2019, Anchorage, AK, USA. ACM, New York, NY, USA, 11 pages. https://doi.org/10.1145/3292500.3330727

1 INTRODUCTION: The capability of conceptualization is a critical ability in natural language understanding and is an important distinguishing factor that separates a human being from the current dominating machine intelligence based on vectorization.