3D Rasterization II

Recommended publications

Texture Mapping Textures Provide Details Makes Graphics Pretty

Texture Mapping Textures Provide Details Makes Graphics Pretty • Details creates immersion • Immersion creates fun Basic Idea Paint pictures on all of your polygons • adds color data • adds (fake) geometric and texture detail one of the basic graphics techniques • tons of hardware support Texture Mapping • Map between region of plane and arbitrary surface • Ensure “right things” happen as textured polygon is rendered and transformed Parametric Texture Mapping • Texture size and orientation tied to polygon • Texture can modulate diffuse color, specular color, specular exponent, etc • Separation of “texture space” from “screen space” • UV coordinates of range [0…1] Retrieving Texel Color • Compute pixel (u,v) using barycentric interpolation • Look up texture pixel (texel) • Copy color to pixel • Apply shading How to Parameterize? Classic problem: How to parameterize the earth (sphere)? Very practical, important problem in Middle Ages… Latitude & Longitude Distorts areas and angles Planar Projection Covers only half of the earth Distorts areas and angles Stereographic Projection Distorts areas Albers Projection Preserves areas, distorts aspect ratio Fuller Parameterization No Free Lunch Every parameterization of the earth either: • distorts areas • distorts distances • distorts angles Good Parameterizations • Low area distortion • Low angle distortion • No obvious seams • One piece • How do we achieve this? Planar Parameterization Project surface onto plane • quite useful in practice • only partial coverage • bad distortion when normals perpendicular Planar Parameterization In practice: combine multiple views Cube Map/Skybox Cube Map Textures • 6 2D images arranged like faces of a cube • +X, -X, +Y, -Y, +Z, -Z • Index by unnormalized vector Cube Map vs Skybox skybox background Cube maps map reflections to emulate reflective surface (e.g. -
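The "Retrieving Texel Color" steps in the excerpt above (compute the pixel's (u,v) by barycentric interpolation, then look up the texel) can be sketched roughly as follows. This is an illustrative sketch, not code from the slides: the function names `barycentric`, `interpolate_uv`, and `lookup_texel`, the nearest-texel lookup, and the list-of-rows texture layout are all assumptions made for the example. It also interpolates in screen space only and ignores perspective correction.

```python
def barycentric(p, a, b, c):
    """Return barycentric weights (wa, wb, wc) of 2D point p in triangle abc."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        raise ValueError("degenerate triangle")
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return wa, wb, 1.0 - wa - wb


def interpolate_uv(weights, uv_a, uv_b, uv_c):
    """Blend the per-vertex (u, v) coordinates with the barycentric weights."""
    wa, wb, wc = weights
    u = wa * uv_a[0] + wb * uv_b[0] + wc * uv_c[0]
    v = wa * uv_a[1] + wb * uv_b[1] + wc * uv_c[1]
    return u, v


def lookup_texel(texture, u, v):
    """Nearest-texel lookup; assumes u, v in [0, 1] and texture[row][col]."""
    height, width = len(texture), len(texture[0])
    x = min(int(u * width), width - 1)   # clamp so u == 1.0 stays in range
    y = min(int(v * height), height - 1)
    return texture[y][x]


# Hypothetical triangle, per-vertex UVs, and a tiny 2x2 texture.
tri = ((0.0, 0.0), (4.0, 0.0), (0.0, 4.0))
uvs = ((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))
texture = [["red", "green"], ["blue", "white"]]

weights = barycentric((1.0, 1.0), *tri)
u, v = interpolate_uv(weights, *uvs)
color = lookup_texel(texture, u, v)
```

The returned texel color would then be copied to the pixel and combined with shading, as the slide's last two steps describe.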

Texture / Image-Based Rendering Texture Maps

Texture / Image-Based Rendering. Texture maps: surface color and transparency; environment and irradiance maps; reflectance maps; shadow maps; displacement and bump maps; level of detail hierarchy. CS348B Lecture 12, Pat Hanrahan, Spring 2005. Texture Maps. How is texture mapped to the surface? Dimensionality: 1D, 2D, 3D. Texture coordinates (s,t): surface parameters (u,v); direction vectors: reflection R, normal N, halfway H; projection: cylinder; developable surface: polyhedral net; reparameterize a surface: old-fashion model decal. What does texture control? Surface color and opacity; illumination functions: environment maps, shadow maps; reflection functions: reflectance maps; geometry: bump and displacement maps. Classic History. Catmull/Williams 1974 - basic idea; Blinn and Newell 1976 - basic idea, reflection maps; Blinn 1978 - bump mapping; Williams 1978, Reeves et al. 1987 - shadow maps; Smith 1980, Heckbert 1983 - texture mapped polygons; Williams 1983 - mipmaps; Miller and Hoffman 1984 - illumination and reflectance; Perlin 1985, Peachey 1985 - solid textures; Greene 1986 - environment maps/world projections; Akeley 1993 - Reality Engine; Light Field; BTF. Texture Mapping: 3D Mesh + 2D Texture = 2D Image. Surface Color and Transparency: Tom Porter’s Bowling Pin (Source: RenderMan Companion, Pls. 12 & 13). Reflection Maps: Blinn and Newell, 1976. Gazing Ball: Miller and Hoffman, 1984. Photograph of mirror ball; maps all directions to a circle; resolution function of orientation; reflection indexed by normal. Environment Maps: Interface, Chou and Williams (ca.

3D Visualization of Weather Radar Data (Thesis Project in Computer Graphics)

3D Visualization of Weather Radar Data. Thesis project in computer graphics at Linköping University by Aron Ernvik, LiTH-ISY-EX-3252-2002. Examiner: Ingemar Ragnemalm. Linköping, January 2002. Division, Department: Institutionen för Systemteknik, 581 83 Linköping. Date: 2002-01-15. Language: English. Report category: Examensarbete (thesis project). ISRN: LITH-ISY-EX-3252-2002. URL for electronic version: http://www.ep.liu.se/exjobb/isy/2002/3252/. Swedish title: Tredimensionell visualisering av väderradardata. Title: 3D visualization of weather radar data. Author: Aron Ernvik. Abstract: There are 12 weather radars operated jointly by SMHI and the Swedish Armed Forces in Sweden. Data from them are used for short term forecasting and analysis. The traditional way of viewing data from the radars is in 2D images, even though 3D polar volumes are delivered from the radars. The purpose of this work is to develop an application for 3D viewing of weather radar data. There are basically three approaches to visualization of volumetric data, such as radar data: slicing with cross-sectional planes, surface extraction, and volume rendering. The application developed during this project supports variations on all three approaches. Different objects, e.g. horizontal and vertical planes, isosurfaces, or volume rendering objects, can be added to a 3D scene and viewed simultaneously from any angle. Parameters of the objects can be set using a graphical user interface and a few different plots can be generated.

Deconstructing Hardware Usage for General Purpose Computation on GPUs

Deconstructing Hardware Usage for General Purpose Computation on GPUs. Budyanto Himawan (Dept. of Computer Science, University of Colorado, Boulder, CO 80309) and Manish Vachharajani (Dept. of Electrical and Computer Engineering, University of Colorado, Boulder, CO 80309). E-mail: {Budyanto.Himawan,manishv}@colorado.edu

Abstract: The high-programmability and numerous compute resources on Graphics Processing Units (GPUs) have allowed researchers to dramatically accelerate many non-graphics applications. This initial success has generated great interest in mapping applications to GPUs. Accordingly, several works have focused on helping application developers rewrite their application kernels for the explicitly parallel but restricted GPU programming model. However, there has been far less work that examines how these applications actually utilize the underlying hardware. This paper focuses on deconstructing how General Purpose applications on GPUs (GPGPU applications) utilize the underlying GPU pipeline.

... performance, in 2001, NVidia revolutionized the GPU by making it highly programmable [3]. Since then, the programmability of GPUs has steadily increased, although they are still not fully general purpose. Since this time, there has been much research and effort in porting both graphics and non-graphics applications to use the parallelism inherent in GPUs. Much of this work has focused on presenting application developers with information on how to perform the non-trivial mapping of general purpose concepts to GPU hardware so that there is a good fit between the algorithm and the GPU pipeline. Less attention has been given to deconstructing how these general purpose application use the graphics hardware itself. Nor has much attention been given to examining how GPUs (or GPU-like ...

Texture Mapping Modeling an Orange

Texture Mapping. Modeling an Orange • Problem: Model an orange (the fruit) • Start with an orange-colored sphere – Too smooth • Replace sphere with a more complex shape – Takes too many polygons to model all the dimples • Solution: retain the simple model, but add detail as a part of the rendering process. Modeling an orange • Surface texture: we want to model the surface features of the object, not just the overall shape • Solution: take an image of a real orange’s surface, scan it, and “paste” it onto a simple geometric model • The texture image is used to alter the color of each point on the surface • This creates the ILLUSION of a more complex shape • This is efficient since it does not involve increasing the geometric complexity of our model. Recall: Graphics Rendering Pipeline [Figure: Application (CPU) → Geometry → Rasterizer (GPU); 3D input scene to 2D output image]. Rendering Pipeline – Rasterizer • The rasterizer stage does per-pixel operations: – Turn geometry into visible pixels on screen – Add textures – Resolve visibility (Z-buffer). From Geometry to Display. Types of Texture Mapping • Texture Mapping – Uses images to fill inside of polygons • Bump Mapping – Alter surface normals • Environment Mapping – Use the environment as texture. Texture Mapping - Fundamentals • A texture is simply an image with coordinates (s,t) • Each part of the surface maps to some part of the texture • The texture value may be used to set or modify the pixel value [Figure: 256x256 texture with (s,t) axes running from (0,0) to (1,1); the interpolated coordinate (0.78, 0.67) corresponds to texel position (199.68, 171.52)]. 2D Texture Mapping • How to map surface coordinates to texture coordinates? 1.
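The figure values in the excerpt above (an interpolated coordinate of (0.78, 0.67) landing at (199.68, 171.52) in a 256x256 texture) follow from scaling the normalized (s,t) coordinate by the texture dimensions. A small sketch of that arithmetic is given below; the helper names `st_to_texel` and `nearest_texel_index` are hypothetical, and the truncate-and-clamp rule for picking an integer texel is one common choice assumed here, not something stated in the slides.

```python
def st_to_texel(s, t, width, height):
    """Scale normalized texture coordinates (s, t) to continuous texel space."""
    return s * width, t * height


def nearest_texel_index(s, t, width, height):
    """Pick an integer texel by truncation, clamped to the texture bounds."""
    x, y = st_to_texel(s, t, width, height)
    col = min(max(int(x), 0), width - 1)
    row = min(max(int(y), 0), height - 1)
    return col, row


# Values taken from the slide's figure: (0.78, 0.67) in a 256x256 texture.
x, y = st_to_texel(0.78, 0.67, 256, 256)          # about (199.68, 171.52)
col, row = nearest_texel_index(0.78, 0.67, 256, 256)  # (199, 171)
```

A filtered lookup (for example bilinear) would instead blend the texels surrounding the continuous position rather than truncating it.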

OpenGL 4.0 Shading Language Cookbook

OpenGL 4.0 Shading Language Cookbook Over 60 highly focused, practical recipes to maximize your use of the OpenGL Shading Language David Wolff BIRMINGHAM - MUMBAI OpenGL 4.0 Shading Language Cookbook Copyright © 2011 Packt Publishing All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing, and its dealers and distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. First published: July 2011 Production Reference: 1180711 Published by Packt Publishing Ltd. 32 Lincoln Road Olton Birmingham, B27 6PA, UK. ISBN 978-1-849514-76-7 www.packtpub.com Cover Image by Fillipo ([email protected]) Credits Author Project Coordinator David Wolff Srimoyee Ghoshal Reviewers Proofreader Martin Christen Bernadette Watkins Nicolas Delalondre Indexer Markus Pabst Hemangini Bari Brandon Whitley Graphics Acquisition Editor Nilesh Mohite Usha Iyer Valentina J. D’silva Development Editor Production Coordinators Chris Rodrigues Kruthika Bangera Technical Editors Adline Swetha Jesuthas Kavita Iyer Cover Work Azharuddin Sheikh Kruthika Bangera Copy Editor Neha Shetty About the Author David Wolff is an associate professor in the Computer Science and Computer Engineering Department at Pacific Lutheran University (PLU). -

A Snake Approach for High Quality Image-Based 3D Object Modeling

A Snake Approach for High Quality Image-based 3D Object Modeling. Carlos Hernández Esteban and Francis Schmitt, Ecole Nationale Supérieure des Télécommunications, France. E-mail: {carlos.hernandez, francis.schmitt}@enst.fr

Abstract: In this paper we present a new approach to high quality 3D object reconstruction by using well known computer vision techniques. Starting from a calibrated sequence of color images, we are able to recover both the 3D geometry and the texture of the real object. The core of the method is based on a classical deformable model, which defines the framework where texture and silhouette information are used as external energies to recover the 3D geometry. A new formulation of the silhouette constraint is derived, and a multi-resolution gradient vector flow diffusion approach is proposed for the stereo-based energy term.

... faces. They mainly work for 2.5D surfaces and are very dependent on the light conditions. A third class of methods use the color information of the scene. The color information can be used in different ways, depending on the type of scene we try to reconstruct. A first way is to measure color consistency to carve a voxel volume [28, 18]. But they only provide an output model composed of a set of voxels which makes difficult to obtain a good 3D mesh representation. Besides, color consistency algorithms compare absolute color values, which makes them sensitive to light condition variations. A different way of exploiting color is to compare local variations of the texture such as in cross-correlation methods ...

Phong Shading

Computer Graphics Shading Based on slides by Dianna Xu, Bryn Mawr College Image Synthesis and Shading Perception of 3D Objects • Displays almost always 2 dimensional. • Depth cues needed to restore the third dimension. • Need to portray planar, curved, textured, translucent, etc. surfaces. • Model light and shadow. Depth Cues Eliminate hidden parts (lines or surfaces) Front? “Wire-frame” Back? Convex? “Opaque Object” Concave? Why we need shading • Suppose we build a model of a sphere using many polygons and color it with glColor. We get something like • But we want Shading implies Curvature Shading Motivation • Originated in trying to give polygonal models the appearance of smooth curvature. • Numerous shading models – Quick and dirty – Physics-based – Specific techniques for particular effects – Non-photorealistic techniques (pen and ink, brushes, etching) Shading • Why does the image of a real sphere look like • Light-material interactions cause each point to have a different color or shade • Need to consider – Light sources – Material properties – Location of viewer – Surface orientation Wireframe: Color, no Substance Substance but no Surfaces Why the Surface Normal is Important Scattering • Light strikes A – Some scattered – Some absorbed • Some of scattered light strikes B – Some scattered – Some absorbed • Some of this scattered light strikes A and so on Rendering Equation • The infinite scattering and absorption of light can be described by the rendering equation – Cannot be solved in general – Ray tracing is a special case for -

UNWRELLA Step by Step Unwrapping and Texture Baking Tutorial

UNWRELLA: Step by step automatic unwrapping and texture baking tutorial. Unwrella UV mapping tutorial. Copyright 3d-io GmbH, 2009. All rights reserved. Please visit http://www.unwrella.com for more details. Contents: Introduction; Content; Defining Seams in Max; Applying Unwrella; Texture Baking; Final Result; Unwrella FAQ, user manual. Introduction: In this comprehensive tutorial we will guide you through the process of creating optimal UV texture maps. Despite the fact that Unwrella is a single-click solution, we have created this tutorial with a lot of material explaining basic Autodesk 3DSMax work and the philosophy behind the "Render to Texture" workflow. This method, known in game development as texture baking, together with deployment of the Unwrella plug-in achieves the following quality benchmarks efficiently: - Textures with reduced texture mapping seams - Minimizes the surface stretching - Creates automatically the largest possible UV chunks with maximal use of available space - Preserves user created UV seams - Reduces the production time from 30 minutes to 3 minutes! Please follow these steps and learn how to utilize this great tool in order to achieve the best results in minimum time during your everyday productions.

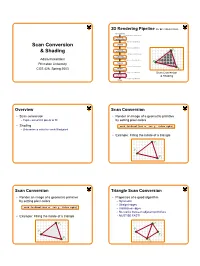

Scan Conversion & Shading

Scan Conversion & Shading. Adam Finkelstein, Princeton University, COS 426, Spring 2003. 3D Rendering Pipeline (for direct illumination): 3D Primitives → Modeling Transformation (3D modeling coordinates to 3D world coordinates) → Lighting (3D world coordinates) → Viewing Transformation (to 3D camera coordinates) → Projection Transformation (to 2D screen coordinates) → Clipping (2D screen coordinates) → Viewport Transformation (to 2D image coordinates) → Scan Conversion (2D image coordinates) → Image. Overview • Scan conversion: figure out which pixels to fill • Shading: determine a color for each filled pixel. Scan Conversion • Render an image of a geometric primitive by setting pixel colors: void SetPixel(int x, int y, Color rgba) • Example: filling the inside of a triangle (P1, P2, P3). Triangle Scan Conversion • Properties of a good algorithm: symmetric; straight edges; antialiased edges; no cracks between adjacent primitives; MUST BE FAST! Simple Algorithm • Color all pixels inside triangle: void ScanTriangle(Triangle T, Color rgba){ for each pixel P at (x,y){ if (Inside(T, P)) SetPixel(x, y, rgba); } }
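The "Simple Algorithm" pseudocode in the excerpt above (color every pixel for which `Inside(T, P)` holds) could be realized along the following lines. This is a sketch under stated assumptions rather than the lecture's implementation: the inside test uses signed edge functions, pixels are sampled at their centers, the loop is restricted to the triangle's bounding box instead of visiting every pixel, and the image is a plain list of rows. The names `edge`, `inside`, and `scan_triangle` are chosen for the example.

```python
import math


def edge(a, b, p):
    """Signed area term: positive when p lies to the left of the edge a->b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def inside(tri, p):
    """True if p is inside or on the boundary of tri, for either winding."""
    a, b, c = tri
    e0, e1, e2 = edge(a, b, p), edge(b, c, p), edge(c, a, p)
    return (e0 >= 0 and e1 >= 0 and e2 >= 0) or (e0 <= 0 and e1 <= 0 and e2 <= 0)


def scan_triangle(tri, rgba, image):
    """Set image[y][x] = rgba for every pixel whose center is inside tri."""
    height, width = len(image), len(image[0])
    xs = [v[0] for v in tri]
    ys = [v[1] for v in tri]
    # Assumption: only visit the bounding box, clipped to the image.
    x_min = max(int(math.floor(min(xs))), 0)
    x_max = min(int(math.ceil(max(xs))), width - 1)
    y_min = max(int(math.floor(min(ys))), 0)
    y_max = min(int(math.ceil(max(ys))), height - 1)
    for y in range(y_min, y_max + 1):
        for x in range(x_min, x_max + 1):
            if inside(tri, (x + 0.5, y + 0.5)):  # sample at the pixel center
                image[y][x] = rgba


# Hypothetical 4x4 image filled with a right triangle.
image = [[0] * 4 for _ in range(4)]
scan_triangle(((0.0, 0.0), (4.0, 0.0), (0.0, 4.0)), 1, image)
filled = sum(sum(row) for row in image)
```

Because this test accepts points lying exactly on an edge, two triangles sharing that edge would both color such a pixel. That avoids cracks but draws the pixel twice, so practical rasterizers usually add a tie-breaking fill rule; none is implemented here.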

Evaluation of Image Quality Using Neural Networks

EVALUATION OF IMAGE QUALITY USING NEURAL NETWORKS. R.J. CANT AND A.R. COOK, Simulation and Modelling Group, Department of Computing, The Nottingham Trent University, Burton Street, Nottingham NG1 4BU, UK. Tel: +44-115-848 2613. Fax: +44-115-9486518. E-mail: [email protected].

ABSTRACT: We discuss the use of neural networks as an evaluation tool for the graphical algorithms to be used in training simulator visual systems. The results of a number of trial evaluation studies are presented in summary form, including the ‘calibration’ exercises that have been performed using human subjects.

INTRODUCTION: Training simulators, such as those employed in vehicle and aircraft simulation, have been used for an ever-widening range of applications in recent years. Visual displays form a major part of virtually all of these systems, most of which are used to simulate normal optical views, ...

... correspondence between human and neural network seems to depend on the level of expertise of the human subject. The neural network used is of a type developed by the authors and is described in detail in [Cant and Cook, 1995]. It is a three-layer, feed-forward network customised to allow both unsupervised competitive learning and supervised back-propagation training. It has been found that in some cases the results of competitive learning alone show a better correspondence with human performance than those of back-propagation. The problem here is that the back-propagation results are too good. The following areas of investigation are presented here: resolution and sampling, shading algorithms, texture anti-aliasing techniques, and model complexity.

Synthesizing Realistic Facial Expressions from Photographs

Synthesizing Realistic Facial Expressions from Photographs. Frédéric Pighin, Jamie Hecker, Dani Lischinski, Richard Szeliski, David H. Salesin. University of Washington; The Hebrew University; Microsoft Research.

Abstract: We present new techniques for creating photorealistic textured 3D facial models from photographs of a human subject, and for creating smooth transitions between different facial expressions by morphing between these different models. Starting from several uncalibrated views of a human subject, we employ a user-assisted technique to recover the camera poses corresponding to the views as well as the 3D coordinates of a sparse set of chosen locations on the subject's face. A scattered data interpolation technique is then used to deform a generic face mesh to fit the particular geometry of the subject's face. Having recovered the camera poses and the facial geometry, we extract from the input images one or more texture maps ...

The applications of facial animation include such diverse fields as character animation for films and advertising, computer games [19], video teleconferencing [7], user-interface agents and avatars [44], and facial surgery planning [23, 45]. Yet no perfectly realistic facial animation has ever been generated by computer: no "facial animation Turing test" has ever been passed. There are several factors that make realistic facial animation so elusive. First, the human face is an extremely complex geometric form. For example, the human face models used in Pixar's Toy Story had several thousand control points each [10]. Moreover, the face exhibits countless tiny creases and wrinkles, as well as subtle variations in color and texture, all of which are crucial for our comprehension and appreciation of facial expressions.