Investigating Cursor-Based Interactions to Support Non-Visual Exploration in the Real World

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Chapter 2: Windows 7

Chapter 2: Windows 7

When you delete a file:
a. A copy of the file will be sent to the desktop.
b. You send the file to the Recycle Bin.
c. The file will not be affected.
d. A copy of the file will be stored in your active folder.

Which of the following statements is correct about arranging icons on the desktop?
a. Icons on the desktop can be arranged by name.
b. Icons on the desktop can be arranged by type.
c. Icons on the desktop can be arranged by size.
d. All of the above.

Which of the following statements is correct about opening the Control Panel?
a. You can open the Control Panel from Windows Explorer.
b. You can open the Control Panel from the Start menu.
c. You can open the Control Panel from My Computer.
d. All of the above.

The Documents item located in the Start menu stores:
a. The last 15 files that you have opened.
b. The last 15 files that you have deleted.
c. The last 15 files that you have copied.
d. None of the above.

The desktop is:
a. An example of a hardware device.
b. A folder.
c. A file.
d. A window.

The Shutdown icon on the Start menu means:
a. Close all windows.
b. Close the current window.
c. Close your computer.
d. None of the above.

To open a minimized window, you can click on the:
a. Window's button on the body of the taskbar.
b. Maximize button on the title bar.
c. Restore button on the title bar.
d. All of the above.

Clickless Graphical User Interface

US 20120174031A1 (19) United States (12) Patent Application Publication (10) Pub. No.: US 2012/0174031 A1, Dondurur et al. (43) Pub. Date: Jul. 5, 2012 (54) CLICKLESS GRAPHICAL USER INTERFACE (75) Inventors: Mehmet Dondurur, Dhahran (SA); Ahmet Z. Sahin, Dhahran (SA) (73) Assignee: King Fahd University of Petroleum and Minerals, Dhahran (SA) (21) Appl. No.: 12/985,165 (22) Filed: Jan. 5, 2011 Publication Classification (51) Int. Cl. G06F 3/048 (2006.01) (52) U.S. Cl. 715/808

(57) ABSTRACT The clickless graphical user interface provides a pop-up window when a cursor is moved over designated areas on the screen. The pop-up window includes menu item choices, e.g., "double click", "single click", "close", that execute when the cursor is moved over the item. This procedure eliminates the traditional "mouse click", thereby allowing users to move the cursor over the application or file and open it by choosing among the aforementioned choices in the file or application being focused on. The pop-up window shows the navigation choices in the form of text, e.g., yes/no, or color, e.g., red/blue, or character, such as a triangle for "yes" and a square for "no". Pop-up window indicator types are virtually unlimited and can be changed to any text, color or character. The method is compatible with touch pads and mouses.

Creating Document Hyperlinks Via Rich-Text Dialogs

Creating Document Hyperlinks via Rich-Text Dialogs

EXAMPLE: CREATING A HYPERLINK IN EXCEPTIONS/REQUIREMENTS: Enter the appropriate paragraph either by selecting from the Lookup Table or manually typing in the field, completing any prompts as necessary. Locate and access the item to be hyperlinked on the external website (i.e., through the County Recorder Office). Copy the address (typically clicking at the end of the address will highlight the entire address) by right click/copy or Control C.

Page 1 of 8, Creating Hyperlinks (Requirements/Exceptions and SoftPro Live)

In Select, open the Requirement or Exception and highlight the text to hyperlink. Click the Add a Hyperlink icon.

The Add Hyperlink dialog box will open. Paste the link into the Address field (right click/paste or Control V). NOTE: The Text to display (name) field will autopopulate with the text that was highlighted. Click OK. The text will now be underlined, indicating a hyperlink.

Welcome to Computer Basics

Computer Basics Instructor's Guide

COMPUTER BASICS

To the Instructor: Because of time constraints and an understanding that the trainees will probably come to the course with widely varying skill levels, the focus of this component is only on the basics. Hence, the course begins with instruction on computer components and peripheral devices, and restricts further instruction to the three most widely used software areas: the Windows operating system, word processing, and using the Internet. The course uses lectures, interactive activities, and exercises at the computer to assure accomplishment of stated goals and objectives. Because of the complexity of the computer and the initial fear experienced by so many, instructor dedication and patience are vital to the success of the trainee in this course. It is expected that many of the trainees will begin at "ground zero," but all should have developed a certain level of proficiency in using the computer by the end of the course.

Overview: Computers have become an essential part of today's workplace. Employees must know computer basics to accomplish their daily tasks. This mini course was developed with the beginner in mind and is designed to provide WTP trainees with basic knowledge of computer hardware, some software applications, and how a computer works, and to give them hands-on experience in its use. The course is designed to "answer such basic questions as what personal computers are and what they can do," and to assist WTP trainees in mastering the basics. The PC Novice dictionary defines a computer as a machine that accepts input, processes it according to specified rules, and produces output.

Fuzzy Mouse Cursor Control System for Computer Users with Spinal Cord Injuries

Georgia State University, ScholarWorks @ Georgia State University. Computer Science Theses, Department of Computer Science, 8-8-2006. Fuzzy Mouse Cursor Control System for Computer Users with Spinal Cord Injuries, Tihomir Surdilovic. Follow this and additional works at: https://scholarworks.gsu.edu/cs_theses. Part of the Computer Sciences Commons.

Recommended Citation: Surdilovic, Tihomir, "Fuzzy Mouse Cursor Control System for Computer Users with Spinal Cord Injuries." Thesis, Georgia State University, 2006. https://scholarworks.gsu.edu/cs_theses/49

This Thesis is brought to you for free and open access by the Department of Computer Science at ScholarWorks @ Georgia State University. It has been accepted for inclusion in Computer Science Theses by an authorized administrator of ScholarWorks @ Georgia State University.

Fuzzy Mouse Cursor Control System for Computer Users with Spinal Cord Injuries. A Thesis Presented in Partial Fulfillment of Requirements for the Degree of Master of Science in the College of Arts and Sciences, Georgia State University, 2005, by Tihomir Surdilovic. Committee: Dr. Yan-Qing Zhang, Chair; Dr. Rajshekhar Sunderraman, Member; Dr. Michael Weeks, Member; Dr. Yi Pan, Department Chair. Date: July 21st, 2005.

Abstract: People with severe motor impairments due to Spinal Cord Injury (SCI) or Spinal Cord Dysfunction (SCD) often experience difficulty with accurate and efficient control of pointing devices (Keates et al., 02). Usually this leads to their limited integration into society as well as limited unassisted control over the environment. The questions "How can someone with severe motor impairments perform mouse pointer control as accurately and efficiently as an able-bodied person?" and "How can these interactions be advanced through use of Computational Intelligence (CI)?" are the driving forces behind the research described in this paper.

B426 Ethernet Communication Module

Intrusion Alarm Systems | B426 Ethernet Communication Module. www.boschsecurity.com

- Full two-way IP event reporting with remote control panel programming support
- 10/100 Base-T Ethernet communication for IPv6 and IPv4 networks
- NIST-FIPS197 Certified for 128-bit to 256-bit AES Encrypted Line Security
- Plug and Play installation, including UPnP service to enable remote programming behind firewalls
- Advanced configuration by browser, RPS, or A-Link

The Conettix Ethernet Communication Modules are four-wire powered SDI, SDI2, and Option bus devices that provide two-way communication with compatible control panels over IPv4 or IPv6 Ethernet networks. Typical applications include:

- Reporting and path supervision to a Conettix Communications Receiver/Gateway.
- Remote administration and control with Remote Programming Software or A-Link.
- Connection to building automation and integration applications.

System overview: The modules (B426/B426-M) are built for a wide variety of secure commercial and industrial applications. Flexible end-to-end path supervision, AES encryption, and anti-substitution features make the modules desirable for high security and fire monitoring applications. Use the modules as stand-alone paths or with another communication technology.

Callouts (system overview figure): 1: Compatible Bosch control panel. 7: Conettix D6100i Communications Receiver/Gateway and/or Conettix D6600 Communications Receiver/Gateway (Conettix D6600 Communications

The Control Panel and Settings in Windows 10 Most Programs and Apps Have Settings Specific to That Program

GGCS Introduction to Windows 10, Part 3: The Control Panel and Settings in Windows 10

Most programs and apps have settings specific to that program. For example, in a word processor such as Microsoft Word there are settings for margins, fonts, tabs, etc. If you have another word processor, it can have different settings for margins, fonts, etc. These specific settings only affect one program. The settings in the Control Panel and in Settings are more general and affect the whole computer and peripherals such as the mouse, keyboard, monitor and printers. For example, if you switch the right and left buttons on the mouse in the Control Panel or in Settings, they are switched for everything you click on. If you change the resolution of the monitor, it is changed for the desktop, menus, Word, Internet Explorer and Edge, etc.

How to display the Control Panel:
1. Right-click the Windows Start button or press the Windows key on the keyboard + X.
2. Click "Control Panel" on the popup menu as shown in the first screen capture.

In Windows 10, many of the settings that once were in the Control Panel have moved to Settings. However, there are often links in Settings that take you back to the Control Panel and many other settings that still only exist in the Control Panel. Settings versus Control Panel is an evolving part of Windows design that started with Windows 8. It is not clear at this time whether the Control Panel will eventually go away or whether it will simply be used less frequently by most users.

B9512G Control Panels

Intrusion Alarm Systems | B9512G Control Panels. www.boschsecurity.com

- Fully integrated intrusion, fire, and access control allows users to interface with one system instead of three
- Provides up to 599 points using a combination of hardwired or wireless devices for installation flexibility, and up to 32 areas and 32 doors for up to 2,000 users
- On-board Ethernet port for Conettix IP alarm communication and remote programming, compatible with modern IP networks including IPv6/IPv4, Auto-IP, and Universal Plug and Play
- Installer-friendly features for simple installation and communications, including plug-in PSTN and cellular communication modules
- Remote Security Control app which allows users to control their security systems, and view system cameras, remotely from mobile devices such as phones and tablets

The B9512G Control Panel and the B8512G Control Panel are the new premier commercial control panels from Bosch. B9512G control panels integrate intrusion, fire, and access control, providing one simple user interface for all systems. With the ability to adapt to large and small applications, the B9512G provides up to 599 individually identified points that can be split into 32 areas.

- Monitor alarm points for intruder, gas, or fire alarms.
- Program all system functions local or remote using Remote Programming Software (RPS) or the Installer Services Portal programming tool (available in Europe, Middle East, Africa, and China) or by using basic programming through the keypad.
- Add up to 32 doors of access control using the optional B901 Access Control Module. Optionally use the D9210C Access Control Interface Module for up

How to Open Control Panel in Windows 10 Way 1: Open It in the Start Menu

Course Name: O Level (B4-Ist sem.) Subject: ITT&NB Topic: Control Panel Date: 27-03-20

Control Panel: The Control Panel is a component of Microsoft Windows that provides the ability to view and change system settings. It consists of a set of applets that include adding or removing hardware and software, controlling user accounts, changing accessibility options, and accessing networking settings.

How to open Control Panel in Windows 10

Way 1: Open it in the Start Menu. Click the bottom-left Start button to open the Start Menu, type control panel in the search box and select Control Panel in the results.

Way 2: Access Control Panel from the Quick Access Menu. Press Windows+X or right-tap the lower-left corner to open the Quick Access Menu, and then choose Control Panel in it.

Way 3: Go to Control Panel through the Settings Panel. Open the Settings Panel by Windows+I, and tap Control Panel on it.

Way 4: Open Control Panel in the File Explorer. Click the File Explorer icon on the taskbar, select Desktop and double-tap Control Panel.

Way 5: Open the program via Run. Press Windows+R to open the Run dialog, enter control panel in the empty box and click OK.

Changing System Date and Time

Step 1: Click the bottom-right clock icon on the taskbar, and select Date and time settings. Alternatively, right-click the clock icon and click Adjust date/time.

Step 2: When the Date and time window opens, turn off Set time automatically.

Step 3: In the Date and Time Settings window, change the date and time respectively, and then tap OK to confirm the changes.

Licensing Guide

Licensing Guide

Contents: Plesk licenses, editions and standard features; Plesk Onyx – Special Editions (2018); Plesk Onyx Licensing on Hyperscalers; Extra Features, Feature Packs and Extensions; Available Plesk Feature Packs; Plesk-developed extensions; Third-party premium extensions.

Plesk licenses, editions and standard features

Plesk uses a simple, flexible license model with loads of options:

1) Server-based licenses (example: Plesk licenses)
   a. Installation on dedicated servers (also known as physical servers)
   b. Installation on virtual servers (also known as virtual private servers or VPS)
2) You can buy all our licenses on a monthly/annual basis, or in discounted bundles. You can end this license at any time and it renews automatically through our licensing servers.
3) All three editions of our server-based licenses present a number of core features:
   a. Plesk Web Admin Edition: For Web & IT Admins who manage sites for an employer, business, or themselves. If you need simple

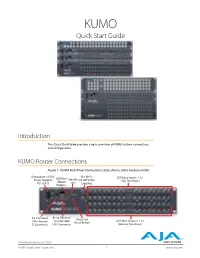

Quick Start Guide

KUMO Quick Start Guide

Introduction: This Quick Start Guide provides a basic overview of KUMO system connections and configuration.

KUMO Router Connections

Figure 1. KUMO Rear Panel Connections (3232 shown, other models similar). Labeled connections: Redundant 12 VDC Power Supplies PS1 & PS2; REF BNCs (Ext Ref Video Looping); USB Port (Newer KUMOs); Identify LED; SDI Video Inputs 1-32 (Top Two Rows); RS-422 Serial 9 Pin Female D Connector; RJ-45 Ethernet 10/100/1000 LAN Connector; Recessed Reset Button; SDI Video Outputs 1-32 (Bottom Two Rows).

Published January 30, 2020. KUMO Quick Start Guide v4.6. www.aja.com

PS 1 & PS 2 Power Connectors: Power to the KUMO unit is supplied by an external power supply module that accepts a 110-220VAC, 50/60Hz power input and supplies +12 VDC to KUMO via connector PS1 or PS2. One power supply is provided, and it may be connected to either of the two power connectors. An optional second power supply can provide redundancy to help protect against outages. IMPORTANT: The power connector has a latch, similar to an Ethernet connector. Depress the latch (facing the outside edge of the KUMO device) before disconnecting the power cable from the unit.

Power Loss Recovery: If KUMO experiences a loss of power, when power is restored the router returns to the previous state of all source to destination crosspoints, and all configured source and destination names are retained. If a KUMO control panel configured with a KUMO router loses power, when power is restored the control panel's configuration is retained, and button tallies will return to their previous states.

Microsoft Excel 2013: Mouse Pointers & Cursor Movements

Microsoft Excel 2013: Mouse Pointers & Cursor Movements

As you move the mouse over the Excel window, the pointer changes shape to indicate the availability of different functions. The five main shapes are shown in the diagram below.

- General pointer for selecting cells singly or in groups.
- Pointer used at the bottom right of a selection to extend and fill data. Selected cells are shown by means of a heavy border. The extension point is at the bottom right of the border and is a detached square.
- Insertion point. When the pointer is like this you may type text in this area. You must double-click the left mouse button to move the cursor (a flashing vertical line) into the cell area. Insertion and editing can then be done in the normal way.
- Pointer for menus or moving a selection. When copying a selection, a small cross appears.
- Pointer used where you can change the dimensions of a row or column. This pointer indicates that you can drag a boundary in the direction of the arrows.

What do the different mouse pointer shapes mean in Microsoft Excel? The mouse pointer changes shape in Microsoft Excel depending upon the context. The six shapes are as follows:

- Used for selecting cells.
- The I-beam, which indicates the cursor position when editing a cell entry.
- The fill handle. Used for copying a formula or extending a data series.
- To select cells on the worksheet.
- Selects a whole row or column when positioned on the number or letter heading label.
- At borders of column headings. Drag to widen a column.