Data Quality Maturity Model for Citizen Science Applications

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

ISO/IEC JTC 1 N13604 ISO/IEC JTC 1 Information Technology

ISO/IEC JTC 1 N13604 2017-09-17 Replaces: ISO/IEC JTC 1 Information Technology Document Type: other (defined) Document Title: Study Group Report on 3D Printing and Scanning Document Source: SG Convenor Project Number: Document Status: This document is circulated for review and consideration at the October 2017 JTC 1 meeting in Russia. Action ID: ACT Due Date: 2017-10-02 Pages: Secretariat, ISO/IEC JTC 1, American National Standards Institute, 25 West 43rd Street, New York, NY 10036; Telephone: 1 212 642 4932; Facsimile: 1 212 840 2298; Email: [email protected] Study Group Report on 3D Printing and Scanning September 11, 2017 ISO/IEC JTC 1 Plenary (October 2017, Vladivostok, Russia) Prepared by the ISO/IEC JTC 1 Study Group on 3D Printing and Scanning Executive Summary The purpose of this report is to assess the possible contributions of JTC 1 to the global market enabled by 3D Printing and Scanning. 3D printing, also known as additive manufacturing, is considered by many sources as a truly disruptive technology. 3D printers range presently from small table units to room size and can handle simple plastics, metals, biomaterials, concrete or a mix of materials. They can be used in making simple toys, airplane engine components, custom pills, large buildings components or human organs. Depending on process, materials and precision, 3D printer costs range from hundreds to millions of dollars. 3D printing makes possible the manufacturing of devices and components that cannot be constructed cost-effectively with other manufacturing techniques (injection molding, computerized milling, etc.). It also makes possible the fabrications of customized devices, or individual (instead of identical mass-manufactured) units. -

Deliverable D7.5: Standards and Methodologies Big Data Guidance

Project acronym: BYTE Project title: Big data roadmap and cross-disciplinarY community for addressing socieTal Externalities Grant number: 619551 Programme: Seventh Framework Programme for ICT Objective: ICT-2013.4.2 Scalable data analytics Contract type: Co-ordination and Support Action Start date of project: 01 March 2014 Duration: 36 months Website: www.byte-project.eu Deliverable D7.5: Standards and methodologies big data guidance Author(s): Jarl Magnusson, DNV GL AS Erik Stensrud, DNV GL AS Tore Hartvigsen, DNV GL AS Lorenzo Bigagli, National Research Council of Italy Dissemination level: Public Deliverable type: Final Version: 1.1 Submission date: 26 July 2017 Table of Contents Preface ......................................................................................................................................... 3 Task 7.5 Description ............................................................................................................... 3 Executive summary ..................................................................................................................... 4 1 Introduction ......................................................................................................................... 5 2 Big Data Standards Organizations ...................................................................................... 6 3 Big Data Standards ............................................................................................................. 8 4 Big Data Quality Standards ............................................................................................. -

ISO Mai.Xlsx

Standard reference Title 1 ISO/IEC 23006-1:2018 Information technology -- Multimedia service platform technologies -- Part 1: Architecture 2 ISO 21001:2018 Educational organizations -- Management systems for educational organizations -- Requirements with guidance for use 3 ISO 17832:2018 Non-parallel steel wire and cords for tyre reinforcement 4 ISO 7240-5:2018 Fire detection and fire alarm systems -- Part 5: Point type heat detectors 5 ISO 18541-6:2018 Road vehicles -- Standardized access to automotive repair and maintenance information (RMI) -- Part 6: L-Category vehicle specific RMI use cases and requirements 6 ISO 1083:2018 Spheroidal graphite cast irons -- Classification 7 ISO 5496:2006/Amd 1:2018 Sensory analysis -- Methodology -- Initiation and training of assessors in the detection and recognition of odours -- Amendment 1 8 ISO/TS 19392-1:2018 Paints and varnishes -- Coating systems for wind-turbine rotor blades -- Part 1: Minimum requirements and weathering 9 ISO/TS 19392-2:2018 Paints and varnishes -- Coating systems for wind-turbine rotor blades -- Part 2: Determination and evaluation of resistance to rain erosion using rotating arm 10 ISO/TS 19392-3:2018 Paints and varnishes -- Coating systems for wind-turbine rotor blades -- Part 3: Determination and evaluation of resistance to rain erosion using water jet 11 ISO 4730:2017/Amd 1:2018 Essential oil of Melaleuca, terpinen-4-ol type (Tea Tree oil) -- Amendment 1: Enantiomeric distribution 12 ISO 20558-1:2018 Plastics -- Poly(phenylene sulfide) (PPS) moulding and extrusion materials -

Technical Report

TECHNICAL REPORT SEMICOLON : STATE OF THE ART REPORT NO 2008-0996 DET NORSKE VERITAS DET NORSKE VERITAS TECHNICAL REPORT Date of first issue: Project No: DET NORSKE VERITAS AS 1. December 2008 Approved by: Organisational unit: 1322 Høvik Jon Ølnes Norway Principal Engineer Tel: Client: Client ref.: Fax: http://www.dnv.com NO 945 748 931 MVA Summary: Report No: Subject Group: 2008-0996 Indexing terms Report title: Keywords Service Area Semicolon: State Of The Art Market Sector Work carried out by: Terje Grimstad, Per Myrseth, Henrik Smith- Unrestricted distribution (internal and external) Meyer, Lasse Udjus, Hans Solli-Sæther, Dag Belsnes, Tore Christiansen, Riitta Hellman, Birger Unrestricted distribution within DNV Møller-Pedersen, Audun Stolpe, Arild Waaler, Jim Yang. Limited distribution within DNV after 3 years Work verified by: No distribution (confidential) Date of this revision: Revision No: Number of pages: <rev> 143 © 2002 Det Norske Veritas AS All rights reserved. This publication or parts thereof may not be reproduced or transmitted in any form or by any means, including photocopying or recording, without the prior written consent of Det Norske Veritas AS. Semicolon_SOTA_v1.0 DET NORSKE VERITAS Report No: 2008-0996 TECHNICAL REPORT Table of Contents Page 1 EXECUTIVE SUMMARY.............................................................................................................3 2 INTRODUCTION..........................................................................................................................3 2.1 Norwegian -

(PSI) Project Overview 2009

Private Sector Investment programme (PSI) Project overview 2009 Colophon Contact Private Sector Investment programme (PSI) T +31 88 602 80 32 F +31 88 602 90 26 [email protected] NL EVD International Juliana van Stolberglaan 148 | 2595 CL The Hague PO Box 20105 | 2500 EC The Hague The Netherlands Index Africa 11 Benin 13 PSI09/BJ/21 Bridging the digital gap in rural Benin 13 Burkina Faso 17 PSI09/BF/01 La Générale Poisson (LGP) 17 PSI09/BF/02 Small scale biodiesel production in Leo, Burkina Faso 20 PSI09/BF/03 MaxiGrana: a collaborative innovation for a value added sesame seeds production chain in Burkina Faso 24 PSI09/BF/21 Cashew nut kernels cracking plant in Burkina Faso 27 PSI09/BF/22 Burkina Primeur, sustainable production of onions and shallots for the West African market 31 Burundi (PSI Plus) 35 PSIP09/BI/31 Introducing modular construction for high quality, affordable housing in Burundi 35 Cape Verde 38 PSI09/CV/02 Shrimp farming on the Cape Verde Island São Vicente 38 PSI09/CV/22 An innovative and ISO 9001 certified manufacturing facility for high quality, aluminium fenestrations in Cape Verde 40 PSI09/CV/23 Safe and reliable transport services for Cape Verde 43 PSI09/CV/24 Tilapia juveniles for tuna live bait on Cape Verde 47 Egypt 50 PSI09/EG/04 Quality controlled logistics & distribution of high quality fresh vegetables & fruits for the domestic urban market in Egypt 50 PSI09/EG/21 MABA Retail: joint venture for the retail-packing and distribution of ware potatoes in Egypt 53 PSI09/EG/22 Growing Radish in the Egyptian -

ISO Aprilie.Xlsx

Nr. Standard reference Title Information technology -- Telecommunications and information ISO/IEC/IEEE 8802- exchange between systems -- Local and metropolitan area 1 A:2015/Amd 1:2018 networks -- Part A: Overview and architecture -- Amendment 1: Allocation of Uniform Resource Name (URN) values in IEEE 802® Information technology -- Telecommunications and information exchange between systems -- Local and metropolitan area 2 ISO/IEC/IEEE 8802-21:2018 networks -- Specific requirements -- Part 21: Media independent services framework Information technology -- Telecommunications and information exchange between systems -- Local and metropolitan area 3 ISO/IEC/IEEE 8802-1AC:2018 networks -- Part 1AC: Media access control (MAC) service definition Information technology -- Telecommunications and information 4 ISO/IEC/IEEE 8802-21-1:2018 exchange between systems -- Local and metropolitan area networks -- Part 21-1: Media independent services Systems and software engineering -- Lifecycle profiles for Very 5 ISO/IEC 29110-3-2:2018 Small Entities (VSEs) -- Part 3-2: Conformity certification scheme Software and systems engineering-- Lifecycle profiles for very small entities (VSEs) -- Part 5-1-4: Software engineering: 6 ISO/IEC TR 29110-5-1-4:2018 Management and engineering guidelines: Generic profile group: Advanced profile 7 ISO/IEC TS 19216:2018 Programming Languages -- C++ Extensions for Networking Information technology -- Small computer system interface (SCSI) 8 ISO/IEC 14776-454:2018 -- Part 454: SCSI Primary Commands - 4 (SPC-4) Information -

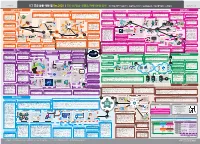

ICT 표준 활용·개발 맵 Ver.2020 | SW·AI/방송·콘텐츠/차세대보안 분야 - 지능정보, 클라우드컴퓨팅, 실감방송·미디어, 실감형콘텐츠, 정보·물리보안, 융합보안

TTA-19078-SD ICT 표준 활용·개발 맵 Ver.2020 | SW·AI/방송·콘텐츠/차세대보안 분야 - 지능정보, 클라우드컴퓨팅, 실감방송·미디어, 실감형콘텐츠, 정보·물리보안, 융합보안 http://www.tta.or.kr 실감방송·미디어 AR 서비스 프레임워크 기술 지상파기반 UHDTV 재난방송 송수신 기술 위성기반 UHDTV 송수신 정합 기술 미디어사물 간 거래 및 고속 데이터 교환을 위한 데이터포맷 표준 미디어사물 간 거래를 위한 API 표준 후각 스크리닝 및 발향 재현 API 표준 VR 콘텐츠용 오감정보 표현 표준 실감형콘텐츠 (병행 | 추격/협력공략 | 표준특허전략⑦ | ~’22) (병행 | 지속/확산공략 | 표준특허전략⑩ | ~’21) (병행 | 지속/확산공략 | 표준특허전략⑪ | ~’20) (병행 | 선도경쟁공략 | 표준특허전략④ | ~’23) (병행 | 선도경쟁공략 | 표준특허전략④ | ~’23) (병행 | 차세대공략 | 표준특허전략① | ~’22) (병행 | 선도경쟁공략 | 표준특허전략④ | ~’22) 하이브리드 라디오 서비스 기술 ETSI ISG ARF, JTC1 SC29 WG11, 3GPP ATSC TG3 ITU-R SG4/SG6, DVB TM-S2/CM-UHDTV JTC1 SC29 WG11 JTC1 SC29 WG11 JTC1 SC29 WG11 JTC1 SC29 WG11 기능성게임의 성과 측정 및 평가 절차 표준 아케이드 게임기기 운영 및 디지털 정산 표준 (병행 | 전략적수용 | 표준특허전략⑩ | ~’21) 80% 기술수준 | 표준수준 80% 95% 기술수준 | 표준수준 95% 90% 기술수준 | 표준수준 80% 100% 기술수준 | 표준수준 100% 95% 기술수준 | 표준수준 100% 95% 기술수준 | 표준수준 100% 100% 기술수준 | 표준수준 100% (병행 | 차세대공략 | 표준특허전략④ | ~’23) (병행 | 선도경쟁공략 | 표준특허전략④ | ~’22) ETSI Broadcast DAB, RadioDNS WT 고품질 파노라마 영상 기술보고서, 하이브리드기반 가상현실에서의 발향 미디어 서비스를 위한 가이드라인, 5th ed. Control information, 디지털 라디오 TTA | TTAR-07.0015, TTAK.KO-07.0121, TTA | 2018-1758, 지상파 UHDTV 재난경보방송 시나리오(기술보고서), 2019-0144, TTA | 2018-1764, HDRTV 표준화 및 기술개발 현황(기술보고서), 2018-1762, 8K 위성방송 서비스(기술보고서), TTAK.KO-07.0008/R9, JTC1 SC29 | ISO/IEC 23093-1 2nd ed., IoMT JTC1 SC29 | ISO/IEC 23093-1 2nd ed., IoMT TTA | TTAK.KO-10.1072, TTAK.KO-10.0511/R2, JTC1 SC29 | ISO/IEC 23005-2, ISO/IEC JTC1 SC35 WG1/SC29 WG11, W3C GSA 85% 기술수준 | 표준수준 85% 지상파 증강방송 송수신 정합 지상파 UHDTV 방송 송수신 정합, TTAK.KO-07.0127, 지상파 UHDTV 방송 송수신 디지털 위성방송 송수신 정합(개정), TTAK.KO-07.0122/R1, 초고선명(UHD) 디지털 위성방송 송수신 정합 Architecture, ISO/IEC 23093-2 2nd ed., IoMT Architecture, ISO/IEC 23093-2 2nd ed., IoMT 콘텐츠와 후각 인식 장치 간 상호 작용 참조 모델, TTAK.KO-10.0919-Part1/R1, 발향장치와 콘텐츠 상호연동 참조 모델 23005-3, 5th ed. -

International Standard Iso 8000-8:2015(E)

This preview is downloaded from www.sis.se. Buy the entire standard via https://www.sis.se/std-919734 INTERNATIONAL ISO STANDARD 8000-8 First edition 2015-11-15 Data quality — Part 8: Information and data quality: Concepts and measuring Qualité des données — Partie 8: Informations et qualité des données: Concepts et mesurage Reference number ISO 8000-8:2015(E) © ISO 2015 This preview is downloaded from www.sis.se. Buy the entire standard via https://www.sis.se/std-919734 ISO 8000-8:2015(E) COPYRIGHT PROTECTED DOCUMENT © ISO 2015, Published in Switzerland All rights reserved. Unless otherwise specified, no part of this publication may be reproduced or utilized otherwise in any form orthe by requester. any means, electronic or mechanical, including photocopying, or posting on the internet or an intranet, without prior written permission. Permission can be requested from either ISO at the address below or ISO’s member body in the country of Ch. de Blandonnet 8 • CP 401 ISOCH-1214 copyright Vernier, office Geneva, Switzerland Tel. +41 22 749 01 11 Fax +41 22 749 09 47 www.iso.org [email protected] ii © ISO 2015 – All rights reserved This preview is downloaded from www.sis.se. Buy the entire standard via https://www.sis.se/std-919734 ISO 8000-8:2015(E) Contents Page Foreword ........................................................................................................................................................................................................................................iv Introduction ..................................................................................................................................................................................................................................v -

Standards Analysis Ict Sector Luxembourg

STANDARDS ANALYSIS ICT SECTOR LUXEMBOURG Version 8.0 · November 2017 · ISSN 2354-483X 2 WHITE PAPER · DIGITAL TRUST · Version 3.0 · October 2016 FOREWORD Technical standardization and standards play ILNAS carries out different legal missions in the an important role in the support of economy field of ICT. In addition, through the development. They can provide, for example, a "Luxembourg’s policy on ICT technical guide of the best practices for services and standardization 2015-2020", ILNAS product development, governance, quality and commissioned the Economic Interest Grouping assessment guarantee, safety, etc. Nowadays, “Agence pour la Normalisation et l’Économie de almost every professional sector relies on la Connaissance” (ANEC GIE) to strengthen standards to perform its daily activities and the national ICT sector involvement in provide services in an efficient manner. standardization work. Standards remain under a voluntary application scheme, but often they are a real added value ILNAS, with the support of ANEC GIE, has in order to comply with legislation. They can be launched several activities dedicated to considered as a source of benefits in each strengthen and develop the ICT-related sector of the economy, what is particularly true standardization landscape at the national level in the Information and Communication in terms of education and involvement of Technology (ICT) sector, which supports all the stakeholders, such as the creation of a other economic developments. University certificate Smart ICT for Business Innovation in collaboration with the University of The ICT sector has gained more and more Luxembourg. This first development was importance in the society as a whole in the last reinforced in May 2017 by the launch of a decades. -

ISO 8000: the International Data Quality Standard

ISO 8000: the international data quality standard ISO TC184/SC4/WG 13 Industrial data quality | ISO TC 184/WG 6 Asset intensive industry interoperability Machine-readable data: “data in a format that can be easily processed by a computer without human intervention while ensuring no semantic meaning is lost.” * Definition from the US OPEN Government Data Act. January 2019 Peter Eales – MRO Insyte Limited [email protected] Copyright © 2020 MRO Insyte. All Rights Reserved Standardized references & assets data library lifecycle DATA QUALITY CHALLENGES Progressive loss of data quality over time Slide reproduced courtesy of TOTAL’s QUANTUM PROGRAM As presented by: Jean-Charles Leclerc – TOTAL to the ISO TC184/SC4 Plenary May 2019 - Toulouse Get quality right first time – Toyota principal 5 ‒ Data quality is addressed during the data creation phase to ensure the accuracy of future decisions; ‒ Data aggregation from multiple sources turns data silos into coherent and relevant information; ‒ Analysis of that information by experts creates corporate knowledge; ‒ That expert knowledge enables informed decision making. Copyright © 2020 MRO Insyte. All Rights Reserved Specify machine-readable data, not digital data ‒ Machine-readable data, is data in a format that can be automatically read and processed by a computer, such as JSON or XML; ‒ Machine-readable data must be structured data; ‒ Digital material may not be machine-readable. A PDF document containing tables of data is digital, but not machine-readable because a computer would struggle to access the tabular information – even though the tables are human readable. Source: http://opendatahandbook.org/glossary/en/terms/machine-readable/ Copyright © 2020 MRO Insyte. -

Iso 8000-2:2017

This preview is downloaded from www.sis.se. Buy the entire standard via https://www.sis.se/std-922374 INTERNATIONAL ISO STANDARD 8000-2 Second edition 2017-08 Data quality — Part 2: Vocabulary Qualité des données — Partie 2: Vocabulaire Reference number ISO 8000-2:2017(E) © ISO 2017 This preview is downloaded from www.sis.se. Buy the entire standard via https://www.sis.se/std-922374 ISO 8000-2:2017(E) COPYRIGHT PROTECTED DOCUMENT © ISO 2017, Published in Switzerland All rights reserved. Unless otherwise specified, no part of this publication may be reproduced or utilized otherwise in any form orthe by requester. any means, electronic or mechanical, including photocopying, or posting on the internet or an intranet, without prior written permission. Permission can be requested from either ISO at the address below or ISO’s member body in the country of Ch. de Blandonnet 8 • CP 401 ISOCH-1214 copyright Vernier, office Geneva, Switzerland Tel. +41 22 749 01 11 Fax +41 22 749 09 47 www.iso.org [email protected] ii © ISO 2017 – All rights reserved This preview is downloaded from www.sis.se. Buy the entire standard via https://www.sis.se/std-922374 ISO 8000-2:2017(E) Contents Page Foreword ........................................................................................................................................................................................................................................iv Introduction ..................................................................................................................................................................................................................................v -

Standardiseringsprosjekter Og Nye Standarder

Annonseringsdato: 2018-11-14 Listenummer: 20/2018 Standardiseringsprosjekter og nye standarder Listenummer: 20/2018 Side: 1 av 128 01 Generelt. Terminologi. Standardisering. Dokumentasjon 01 Generelt. Terminologi. Standardisering. Dokumentasjon Standardforslag til høring - nasjonale prNS 3467 Steg og leveranser i byggeprosessen Stages and deliverables in the building process Språk: no Kommentarfrist: 19.04.2020 Standardforslag til høring - europeiske (CEN) EN 16214-1:2012/prA1 Sustainability criteria for the production of biofuels and bioliquids for energy applications - Principles, criteria, indicators and verifiers - Part 1: Terminology Språk: en Kommentarfrist: 24.01.2019 prEN 15947-1:2018 Pyrotechnic articles - Fireworks, Categories F1, F2 and F3 - Part 1: Terminology Språk: en Kommentarfrist: 06.12.2018 prEN ISO 23243 Non-destructive testing - Terminology - Terms used in ultrasonic testing with phased arrays (ISO/DIS 23243:2018) Språk: en Kommentarfrist: 10.01.2019 Standardforslag til høring - internasjonale (ISO) ISO 7010:2011/DAmd 240 Safety sign P068: Do not expose to direct sunlight or hot surface Språk: en Kommentarfrist: 03.02.2019 ISO 7010:2011/DAmd 241 Safety sign P069: Not to be serviced by users Språk: en Kommentarfrist: 03.02.2019 ISO 7010:2011/DAmd 242 Safety sign P070: Do not put finger into the nozzle of a hydromassage Språk: en Kommentarfrist: 03.02.2019 ISO/DIS 10795 Space systems -- Programme management and quality -- Vocabulary Språk: en Kommentarfrist: 03.12.2018 ISO/DIS 20674-1 Information and documentation -- Transliteration of scripts in use in Thailand -- Part 1: Transliteration of Akson-Thai-Noi Språk: en Kommentarfrist: 04.02.2019 Listenummer: 20/2018 Side: 2 av 128 01 Generelt. Terminologi. Standardisering.