Monte Carlo Methods in Geophysical Inverse Problems

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications

-

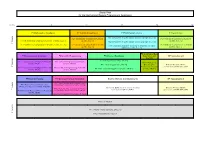

Study Plan for the International Masters Programme in Geophysics

Study Plan for the International Masters Programme in Geophysics Credits 6 12 18 24 30 P1 Mathematical Geophysics P2 Statistical Geophysics P 3 Earth System Science P 4 Geocontinua r e t s e P3.1 Introduction to Earth System Science 1 [2 SWS, 3 ECTS] P2.1 Statistics for Geosciences (Lecture) P4.1 Methods of Geocontinua (Lecture) m e S P1.1 Mathematical Geophysics (Lecture) [4 SWS, 6 ECTS] [2 SWS, 3 ECTS] [2 SWS, 3 ECTS] t P3.2 Introduction to Earth System Science 2 [2 SWS, 3 ECTS] s 1 P1.2 Mathematical Geophysics (Exercise) [2 SWS, 3 ECTS] P2.2 Statistics for Geosciences (Exercise) P4.2 Methods of Geocontinua (Exercise) P3.3 Geophysics Research: Overview on Methods and Open [2 SWS, 3 ECTS] [2 SWS, 3 ECTS] Questions [2 SWS, 3 ECTS] P8 Geophyiscal Data P 5 Computational Geophysics P6 Scientific Programming P7 Advanced Geophysics Acquisition and WP: Specialisation I r Analysis e t s e P7.1 Geodynamics [2 SWS, 3 ECTS] P5.1 Computational Geophysics (Lecture) P6.1 Scientific Programming (Lecture) m e P8.1 Geophysical S [2SWS, 3ECTS] [2SWS, 3 ECTS] d P7.2 Seismology [2 SWS, 3 ECTS] Data Analysis: Elective Module, 6 ECTS n 2 Practical Introduction Choose one of WP1, WP2, WP3 P5.2 Computational Geophysics (Exercise) P6.2 Scientific Programming (Exercise) P7.3 Geo- and Paleomagnetism [2 SWS, 3 ECTS] [2 SWS, 3 ECTS] [2SWS, 3ECTS] [2SWS, 3 ECTS] P9 Research Training P10 Advanced Topics in Geophysics Elective Modules: Interdisciplinarity WP: Specialisation II r e t s P10.1 Tools, Techniques and current Trends e P9.1 Presentation, Communication, in -

Contributors to This Issue

Contributors to this issue Faruq E. Akbar received his BS (1988) in civil engineering from Xiaofei Chen received his BSc (1982) in geophysics from the Univ- Bangladesh University of Engineering and ersity of Sciences and Technology of China, Technology and his MS (1992) in geophysics MSc (1985) from the Institute of Geophysics of from the University of New Orleans, Louisiana. SSB of China, and PhD (1991) from the He is currently a PhD student in the Department University of Southern California. He was with of Geological Sciences, University of Texas at IG/SSBC from 1985 to 1986. He is currently a Austin. His professional interests are seismic research associate at USC. His main research data processing, modeling, migration, and interests are seismic waves in complex hetero- inversion. geneous media, inversion techniques, and earthquake seismology. Mike D. Dentith is senior lecturer in geophysics at the Department T. Alkhalifah, see biography and photograph in September-October 1995 GEOPHYSICS, p. 1599. of Geology and Geophysics, University of Western Australia. His current research inter- ests include regional geophysical studies to Estelle Blais received her MScA (1994) in mineral engineering from determine the 3-D structure of greenstone belts, Ecole Polytechnique. She is currently working as a junior geophysi- the geometry of the Darling Fault and the min- cist for SIAL Geosciences in Montreal. eralization in the Canning Basin. He has edited ASEG's geophysics publication "Geophysical Fabio Boschetti graduated in geology from the University of Genoa, Signatures of Western Australia Mineral Italy. He then worked for four years with the Deposits." same university's physics department, special- izing in alternative energy assessment, atmos- pheric pollutant diffusion, and climatology. -

Mathematical Geophysics a Survey of Recent Developments in Seismology and Geodynamics

Mathematical Geophysics A Survey of Recent Developments in Seismology and Geodynamics edited by N. J. VU\AR G. NOLET M. J. R. WORTEL and S. A. P. L CLOETINGH University of Utrecht, The Netherlands D. Reidel Publishing Company AMEMBER OF THE KLUWER ACADEMIC PUBLISHERS GROUP Dordrecht / Boston / Lancaster / Tokyo CONTENTS Preface I. SEISMOLOGY AND THREE DIMENSIONAL STRUCTURE OF THE EARTH Waves in a 3-D Earth Chapter 1 G. Masters and M. Rilzwoller Low frequency seismology and three-dimensional structure - observational aspects. 1 Chapter 2 J.Park Free-oscillation coupling theory. 31 Chapter 3 K. Yomogida Surface waves in weakly heterogeneous media. 53 Chapter 4 R. Snieder On the connection between ray theory and scattering theory for surface waves. 11 Chapter 5 A. van den Berg A hybrid solution for wave propagation problems in inhomogeneous media. 85 Large-scale inversion Chapter 6 P. Mora Elastic wavefield inversion and the adjoint operator for the elastic wave equation. 117 Chapter 7 B.L.N. Kennett and P.R. Williamson Subspace methods for large-scale nonlinear inversion. 139 Chapter 8 W. Spakman and G. Nolet Imaging algorithms, accuracy and resolution in delay time tomography. 155 VI CONTENTS II. CONVECTION AND LITHOSPHERIC PROCESSES Geomagnetism Chapter 9 J. Bloxham The determination of fluid flow at the core surface from geomagnetic observations . Mantle convection Chapter 10 G.T. Jarvis and W.R. Peltier Long wavelength features of mantle convection. Chapter 11 F. Quareni and D.A. Yuen Mean-field methods in mantle convection. Chapter 12 P. Machetel and D.A. Yuen Infinite Prandtl number spherical-shell convection . -

Review 2012 I

Geomagnetism Review 2012 i Geomagnetism Review 2012 Alan W P Thomson (editor) [email protected] Contributors: Orsi Baillie, Ciarán Beggan, Ellen Clarke, Ewan Dawson, Simon Flower, Brian Hamilton, Ted Harris, Gemma Kelly, Susan Macmillan, Sarah Reay, Tom Shanahan, Alan Thomson, Christopher Turbitt The National Grid and other Ordnance Survey data are used with the permission of the Controller of Her Majesty’s Stationery Office. Ordnance Survey licence number 100017897/2012 Key words Geomagnetism. Front cover A snapshot of the electric field strength across the UK during the geomagnetic storm of 17 March 2013. Bibliographical reference THOMSON, A.W.P. ET AL 2013. Geomagnetism Review 2012. British Geological Survey Open Report OR/13/030 44pp. © NERC 2013 Edinburgh British Geological Survey 2013 To Contents Page ii To Contents Page 1 Contents Contents ...................................................................................................... 1 Introduction ................................................................................................ 2 Technical, Observatory and Field Operations .......................................... 8 UK and Overseas Observatories ................................................................................... 8 Geoelectric Field Monitoring ........................................................................................ 10 High Frequency Magnetometers ................................................................................. 12 The BGS contribution to INTERMAGNET .................................................................. -

Integration of Knowledge and Data in Active Seismology1

Integration of Knowledge and Data in Active Seismology1 Ludmila P. Braginskaya, Andrey P. Grigoruk, Valery V. Kovalevsky Institute of Computational Mathematics and Mathematical Geophysics SB RAS (ICM&MG SB RAS), Novosibirsk, Russia, [email protected] Abstract. The paper presents the principles of organization of an information environment providing a holistic view of the subject area and various aspects of scientific activity in active seismology. The subject ontology “Active Seismology” is used as a conceptual basis and information model. Keywords: active seismology, ontology, knowledge portal 1 Introduction Since the 1970s a new direction in geophysics has been developing, which has recently been called active seismology, based on powerful controlled vibration sources of seismic waves of the low-frequency range [1]. The use of such sources has opened up new possibilities for investigations of the deep structure of Earth's crust and upper mantle and the geodynamic processes in earthquake-prone and volcanic zones. The active seismology methods have found application in new areas of geophysics and geophysical engineering. Currently, the following new vibrational geotechnologies are being actively developed: active vibroseismic monitoring of earthquake-prone and volcanic zones; vibroseismic action on oil formations to increase oil recovery; vibrational microseismic zoning; study of the stability of deep structures in areas of construction and operation of environmentally hazardous constructions, etc. During the formation and development of the active seismology methods, numerous theoretical and experimental works have been carried out to substantiate the vibroseismic method, to study the processes of radiation of seismic waves by vibrational sources, the characteristics of their wave fields and physical effects under the vibrational action on the geological medium [2]. -

Coulomb Stress Accumulation Along the San Andreas Fault System

JOURNAL OF GEOPHYSICAL RESEARCH, VOL. 108, NO. B6, 2296, doi:10.1029/2002JB002136, 2003 Coulomb stress accumulation along the San Andreas Fault system Bridget Smith and David Sandwell Institute for Geophysics and Planetary Physics, Scripps Institution of Oceanography, La Jolla, California, USA Received 2 August 2002; revised 16 January 2003; accepted 18 February 2003; published 11 June 2003. [1] Stress accumulation rates along the primary segments of the San Andreas Fault system are computed using a three-dimensional (3-D) elastic half-space model with realistic fault geometry. The model is developed in the Fourier domain by solving for the response of an elastic half-space due to a point vector body force and analytically integrating the force from a locking depth to infinite depth. This approach is then applied to the San Andreas Fault system using published slip rates along 18 major fault strands of the fault zone. GPS-derived horizontal velocity measurements spanning the entire 1700 Â 200 km region are then used to solve for apparent locking depth along each primary fault segment. This simple model fits remarkably well (2.43 mm/yr RMS misfit), although some discrepancies occur in the Eastern California Shear Zone. The model also predicts vertical uplift and subsidence rates that are in agreement with independent geologic and geodetic estimates. In addition, shear and normal stresses along the major fault strands are used to compute Coulomb stress accumulation rate. As a result, we find earthquake recurrence intervals along the San Andreas Fault system to be inversely proportional to Coulomb stress accumulation rate, in agreement with typical coseismic stress drops of 1–10 MPa. -

International Union of Geodesy and Geophysics (Iugg)

Annual Report for 2005 of the INTERNATIONAL UNION OF GEODESY AND GEOPHYSICS (IUGG) INTRODUCTION Established in 1919, the International Union of Geodesy and Geophysics (IUGG) is the international organization dedicated to advancing, promoting, and communicating knowledge of the Earth system, its space environment, and the dynamical processes causing change. Through its constituent Associations, Commissions, and services, IUGG convenes international assemblies and workshops, undertakes research, assembles observations, gains insights, coordinates activities, liaises with other scientific bodies, plays an advocacy role, contributes to education, and works to expand capabilities and participation worldwide. Data, information, and knowledge gained are made openly available for the benefit of society – to provide the information necessary for the discovery and responsible use of natural resources, sustainable management of the environment, reducing the impact of natural hazards, and to satisfy our need to understand the Earth’s natural environment and the consequences of human activities. IUGG Associations and Union Commissions encourage scientific investigation of Earth science and especially interdisciplinary aspects. Each Association establishes working groups and commissions that can be accessed by using the links on our website. The web site, available in English and French, can be found at http://www.IUGG.org. MEMBERSHIP By their very nature, geodetic and geophysical studies require a high degree of international co-operation. IUGG is critically dependent on the scientific and financial support of its member Adhering Bodies. The list of present and past IUGG Adhering Bodies is published annually in the IUGG Yearbook, which is posted on the web site and is available from the Secretariat. Each Adhering Body establishes a National Committee for IUGG, and names Correspondents to each Association (as appropriate). -

Course Catalogue Master Geophysics

Course Catalogue Master Geophysics Master of Science, M.Sc. (120 ECTS points) According to the Examination Regulations as of 30 October 2007 88/066/—/M0/H/2007 The master’s programme is jointly offered by Ludwig-Maximilians-Universität München and Technische Universität München. Munich, 5 February 2014 Contents 1 Abbreviations and Remarks 3 2 Module: P 1 Fundamentals from Mathematics and Physics 4 3 Module: P 2 Basic Geophysics 7 4 Module: P 3 Tools 10 5 Module: P 4 Advanced Geophysics 13 6 Module: WP 1 Advanced Geodynamics 15 7 Module: WP 2 Advanced Seismology 18 8 Module: WP 3 Advanced Paleo- and Geomagnetism 21 9 Module: WP 4 Geochemistry and Geomaterials 24 10 Module: WP 5 Applied and Industrial Geophysics 26 11 Module: WP 6 ESPACE 29 12 Module: P 5 Independent Scientific Research 32 2 1 Abbreviations and Remarks CP Credit Point(s), ECTS Point(s) ECTS European Credit Transfer and Accumulation System h hours SS summer semester WS winter semester SWS credit hours 1. Please note: The course catalogue serves as an orientation only for your course of study. For binding regulations please consult the official examination regulations. These can be found at: www.lmu.de/studienangebot for the respective programme of study. 2. In the description of the associated module components ECTS points in brackets are for internal use only. ECTS points without brackets are awarded for passing the corre- sponding examination. 3. Modules whose identifier starts with P are mandatory modules. Modules whose identi- fier starts with WP are elective modules. -

An Extended Version of the C3FM Geomagnetic Field Model: Application of a Continuous Frozen-Flux Constraint

Originally published as: Wardinski, I., Lesur, V. (2012): An extended version of the C3FM geomagnetic field model: application of a continuous frozen-flux constraint. - Geophysical Journal International, 189, 3, pp. 1409—1429. DOI: http://doi.org/10.1111/j.1365-246X.2012.05384.x Geophysical Journal International Geophys. J. Int. (2012) 189, 1409–1429 doi: 10.1111/j.1365-246X.2012.05384.x An extended version of the C3FM geomagnetic field model: application of a continuous frozen-flux constraint Ingo Wardinski and Vincent Lesur Helmholtz Centre Potsdam, GFZ German Research Centre for Geosciences, Section 2.3: Earth’s Magnetic Field, Germany. E-mail: [email protected] Accepted 2012 January 11. Received 2012 January 11; in original form 2011 May 3 SUMMARY A recently developed method of constructing core field models that satisfies a frozen-flux constraint is used to built a field model covering 1957–2008. Like the previous model C3FM, we invert observatory secular variation data and adopt satellite-based field models to constrain the field morphology in 1980 and 2004. To derive a frozen-flux field model, we start from a field model that has been derived using ‘classical’ techniques, which is spatially and temporally smooth. This is achieved by using order six B-splines as basis functions for the temporal evolution of the Gauss coefficients and requiring that the model minimizes the integral of the third-time derivative of the field taken over the core surface. That guarantees a robust estimate of the secular acceleration. Comparisons between the ‘classical’ and the frozen-flux field models are given, and we describe to what extend the frozen-flux constraint is adhered. -

Geophysical Flows and the Effects of a Strong Surface Tension Francesco Fanelli

Geophysical flows and the effects of a strong surface tension Francesco Fanelli To cite this version: Francesco Fanelli. Geophysical flows and the effects of a strong surface tension. 2016. hal-01323692 HAL Id: hal-01323692 https://hal.archives-ouvertes.fr/hal-01323692 Preprint submitted on 31 May 2016 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Geophysical flows and the effects of a strong surface tension Francesco Fanelli Institut Camille Jordan - UMR CNRS 5208 Université de Lyon, Université Claude Bernard Lyon 1 Bâtiment Braconnier 43, Boulevard du 11 novembre 1918 F-69622 Villeurbanne cedex – FRANCE [email protected] May 31, 2016 Abstract In the present note we review some recent results for a class of singular perturbation problems for a Navier-Stokes-Korteweg system with Coriolis force. More precisely, we study the asymptotic behaviour of solutions when taking incompressible and fast rotation limits simultaneously, in a constant capillarity regime. Our main purpose here is to explain in detail the description of the phenomena we want to capture, and the mathematical derivation of the system of equations. Hence, a huge part of this work is devoted to physical considerations and mathematical modeling. -

Observational and Theoretical Studies of the Dynamics of Mantle Plume–Mid-Ocean Ridge Interaction

OBSERVATIONAL AND THEORETICAL STUDIES OF THE DYNAMICS OF MANTLE PLUME–MID-OCEAN RIDGE INTERACTION G. Ito J. Lin D. Graham Department of Geology and Department of Geology and College of Oceanic and Geophysics, School of Ocean Geophysics, Atmospheric Sciences, and Earth Science and Technology, Woods Hole Oceanographic Institution, Oregon State University, University of Hawaii, Woods Hole, Massachusetts, USA Corvallis, Oregon, USA Honolulu, Hawaii, USA Received 24 July 2002; revised 16 May 2003; accepted 5 August 2003; published 20 November 2003. [1] Hot spot–mid-ocean ridge interactions cause many interaction remain enigmatic. Outstanding problems of the largest structural and chemical anomalies in pertain to whether plumes flow toward and along mid- Earths ocean basins. Correlated geophysical and geo- ocean ridges in narrow pipe-like channels or as broad chemical anomalies are widely explained by mantle expanding gravity currents, the origin of geochemical plumes that deliver hot and compositionally distinct mixing trends observed along ridges, and how mantle material toward and along mid-ocean ridges. Composi- plumes alter the geometry of the mid-ocean ridge plate tional anomalies are seen in trace element and isotope boundary, as well as the origin of other ridge axis anom- ratios, while elevated mantle temperatures are suggested alies not obviously related to mantle plumes. by anomalously thick crust, low-density mantle, low INDEX TERMS: 8121 Tectonophysics: Dynamics, convection currents mantle seismic velocities, and elevated degrees -

Kenneth A. Hoffman

KENNETH A. HOFFMAN https://www.researchgate.net/profile/Kenneth_Hoffman3/?ev=hdr_xprf DEGREES: University of California, Berkeley, CA (thesis advisor: John Verhoogen) • 1966 B.A. Physics • 1969 M.A. Geophysics • 1973 Ph.D. Geophysics POSTDOCTORAL: • 1972-73 Dept. Geology & Geophysics, University of Minnesota PROFESSORSHIP: Physics Department, Cal Poly State University, San Luis Obispo, CA • 1974 Lecturer • 1974-78 Assist. Prof. • 1978-83 Assoc. Prof. • 1983-2006 Prof. • Now Emeritus VISITING PROFESSORSHIPS & RESEARCH POSITIONS: • 2007-14 Senior Research Scientist, Geoscience Department, University of Wisconsin–Madison • 2004 Université de Montpellier II, Montpellier, France • 1998 Institut für Geophysik, ETH-Zürich, Switzerland • 1997-98 Université de Genève, Geneva, Switzerland • 1988 Institute de Physique du Globe, Université de Paris, France • 1980-81 Research School of Earth Sciences, Australian National University, Canberra, Australia • 1981 Victoria University, Wellington, NZ • 1977-78 University of Minnesota __________________________________ PRIMARY DISTINCTIONS: 2001 • Allan Cox Memorial Lecture [Fall meeting of the American Geophysical Union (AGU), San Francisco] Featured Webcast: http://www.agu.org/webcast/hoffman.html 2001 • Series of invited talks in Beijing, China 1999 • Invited talk at the Royal Society of London 1998 • Invited public lecture at Geneva’s Museum of Natural History 1997-00 • President: Geomagnetism-Paleomagnetism (GP) Section of the American Geophysical Union 1995-99 • Chairman: Division I 'Internal Fields'