Rational Krylov Methods for Operator Functions

Recommended publications

Numerical Computation with Rational Functions

NUMERICAL COMPUTATION WITH RATIONAL FUNCTIONS (scalars only). Nick Trefethen, University of Oxford and ENS Lyon, with thanks to Silviu Filip, Abi Gopal, Stefan Güttel, Yuji Nakatsukasa, and Olivier Sète.

Outline: 1. Polynomial vs. rational approximations. 2. Four representations of rational functions: 2a. Quotient of polynomials; 2b. Partial fractions; 2c. Quotient of partial fractions (= barycentric); 2d. Transfer function/matrix pencil. 3. The AAA algorithm (with Nakatsukasa and Sète, to appear in SISC). 4. Application: conformal maps (with Gopal, submitted to Numer. Math.). 5. Application: minimax approximation (with Filip, Nakatsukasa, and Beckermann, to appear in SISC). 6. Accuracy and noise.

1. Polynomial vs. rational approximation. Newman, 1964: approximation of $|x|$ on $[-1,1]$: $E_{n0} \sim 0.2801\ldots/n$, $E_{nn} \sim 8e^{-\pi\sqrt{n}}$. Poles and zeros of $r$: exponentially clustered near $x=0$, exponentially diminishing residues. Rational approximation is nonlinear, so algorithms are nontrivial. There may be nonuniqueness and local minima. [Figure: poles, type (20,20)]

2. Four representations of rational functions.
Quotient of polynomials, $p(z)/q(z)$. Methods: SK, IRF, AGH, ratdisk. Names: Alpert, Carpenter, Coelho, Gonnet, Greengard, Hagstrom, Koerner, Levy, Pachón, Phillips, Ruttan, Sanathanen, Silantyev, Silveira, Varga, White,…
Partial fractions, $\sum_k a_k/(z - z_k)$. Methods: VF, exponential sums. Names: Beylkin, Deschrijver, Dhaene, Drmač, Greengard, Gustavsen, Hochman, Mohlenkamp, Monzón, Semlyen,…
Quotient of partial fractions (= barycentric), $n(z)/d(z)$. Methods: Floater-Hormann, AAA. Names: Berrut, Filip, Floater, Gopal, Hochman, Hormann, Ionita, Klein, Mittelmann, Nakatsukasa, Salzer, Schneider, Sète, Trefethen, Werner,…
Transfer function/matrix pencil, $c^T (zB - A)^{-1} b$. Methods: IRKA, Loewner, RKFIT. Names: Antoulas, Beattie, Beckermann, Berljafa, Druskin, Elsworth, Gugercin, Güttel, Knizhnerman, Meerbergen, Ruhe,…
Sometimes the boundaries are blurry!

2a. -
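To get a feel for the gap between the two asymptotic rates quoted on the Newman slide, the following minimal sketch simply tabulates them. It assumes the usual reading of the slide's notation, namely that the first rate (about 0.2801/n) is the best degree-n polynomial error for |x| on [−1, 1] and the second (about 8·exp(−π√n)) is the best type (n, n) rational error. The function names `poly_error` and `rational_error` are illustrative, and the constant 0.2801 is the truncated value shown on the slide.

```python
import math


def poly_error(n):
    """Asymptotic rate quoted for polynomials: about 0.2801 / n."""
    return 0.2801 / n


def rational_error(n):
    """Asymptotic rate quoted for type (n, n) rationals: about 8 * exp(-pi * sqrt(n))."""
    return 8.0 * math.exp(-math.pi * math.sqrt(n))


# Tabulate both rates for a few degrees to compare algebraic and
# root-exponential decay side by side.
for n in (5, 10, 20, 40, 80):
    print(f"n = {n:3d}   polynomial ~ {poly_error(n):.3e}   rational ~ {rational_error(n):.3e}")
```

These are asymptotic formulas only, so the small-n rows should be read as indicative rather than as actual best-approximation errors.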

An Extension of Matlab to Continuous Functions and Operators∗

SIAM J. SCI. COMPUT. © 2004 Society for Industrial and Applied Mathematics, Vol. 25, No. 5, pp. 1743–1770. AN EXTENSION OF MATLAB TO CONTINUOUS FUNCTIONS AND OPERATORS. ZACHARY BATTLES AND LLOYD N. TREFETHEN.

Abstract. An object-oriented MATLAB system is described for performing numerical linear algebra on continuous functions and operators rather than the usual discrete vectors and matrices. About eighty MATLAB functions from plot and sum to svd and cond have been overloaded so that one can work with our “chebfun” objects using almost exactly the usual MATLAB syntax. All functions live on [−1, 1] and are represented by values at sufficiently many Chebyshev points for the polynomial interpolant to be accurate to close to machine precision. Each of our overloaded operations raises questions about the proper generalization of familiar notions to the continuous context and about appropriate methods of interpolation, differentiation, integration, zerofinding, or transforms. Applications in approximation theory and numerical analysis are explored, and possible extensions for more substantial problems of scientific computing are mentioned.

Key words. MATLAB, Chebyshev points, interpolation, barycentric formula, spectral methods, FFT. AMS subject classifications. 41-04, 65D05. DOI. 10.1137/S1064827503430126

1. Introduction. Numerical linear algebra and functional analysis are two faces of the same subject, the study of linear mappings from one vector space to another. But it could not be said that mathematicians have settled on a language and notation that blend the discrete and continuous worlds gracefully. Numerical analysts favor a concrete, basis-dependent matrix-vector notation that may be quite foreign to the functional analysts. Sometimes the difference may seem very minor between, say, expressing an inner product as $(u, v)$ or as $u^T v$. -

Householder Triangularization of a Quasimatrix

IMA Journal of Numerical Analysis (2010) 30, 887–897, doi:10.1093/imanum/drp018. Advance Access publication on August 21, 2009. Householder triangularization of a quasimatrix. LLOYD N. TREFETHEN, Oxford University Computing Laboratory, Wolfson Building, Parks Road, Oxford OX1 3QD, UK. [Received on 4 July 2008]

A standard algorithm for computing the QR factorization of a matrix A is Householder triangularization. Here this idea is generalized to the situation in which A is a quasimatrix, that is, a ‘matrix’ whose ‘columns’ are functions defined on an interval [a, b]. Applications are mentioned to quasimatrix least squares fitting, singular value decomposition and determination of ranks, norms and condition numbers, and numerical illustrations are presented using the chebfun system.

Keywords: Householder triangularization; QR factorization; chebfun; quasimatrix; singular value decomposition.

1. QR factorization and Householder triangularization. Let A be an $m \times n$ matrix, where $m \ge n$. A (reduced) QR factorization of A is a factorization

$$A = QR, \qquad \begin{bmatrix} x & x & x & x \\ x & x & x & x \\ x & x & x & x \\ x & x & x & x \\ x & x & x & x \\ x & x & x & x \end{bmatrix} = \begin{bmatrix} q & q & q & q \\ q & q & q & q \\ q & q & q & q \\ q & q & q & q \\ q & q & q & q \\ q & q & q & q \end{bmatrix} \begin{bmatrix} r & r & r & r \\ & r & r & r \\ & & r & r \\ & & & r \end{bmatrix}, \tag{1.1}$$

where Q is $m \times n$ with orthonormal columns and R is upper triangular. Here and in subsequent equations we illustrate our matrix operations by schematic pictures in which x denotes an arbitrary entry, r denotes an entry of an upper triangular matrix, q denotes an entry of an orthonormal column and a blank denotes a zero. -
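The reduced QR factorization described in this excerpt (A = QR, with Q having orthonormal columns and R upper triangular) can be sketched for an ordinary matrix as follows. This is a textbook Householder triangularization in plain Python, written for illustration under the assumption that A is a dense list-of-lists with at least as many rows as columns; it is not the quasimatrix algorithm of the paper, and the name `householder_qr` is hypothetical.

```python
import math


def householder_qr(A):
    """Reduced QR of an m x n list-of-lists matrix (m >= n) via Householder reflectors."""
    m, n = len(A), len(A[0])
    R = [[float(t) for t in row] for row in A]  # working copy, reduced in place
    vs = []                                     # one unit reflector vector per column
    for k in range(n):
        x = [R[i][k] for i in range(k, m)]
        norm_x = math.sqrt(sum(t * t for t in x))
        v = x[:]
        v[0] += math.copysign(norm_x, x[0])     # sign chosen to avoid cancellation
        norm_v = math.sqrt(sum(t * t for t in v))
        if norm_v > 0.0:
            v = [t / norm_v for t in v]
            # Apply H = I - 2 v v^T to the trailing block R[k:, k:].
            for j in range(k, n):
                dot = sum(v[i] * R[k + i][j] for i in range(m - k))
                for i in range(m - k):
                    R[k + i][j] -= 2.0 * dot * v[i]
        vs.append(v)
    # Q = H_1 H_2 ... H_n applied to the first n columns of the identity,
    # so the reflectors are applied in reverse order.
    Q = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(m)]
    for k in reversed(range(n)):
        v = vs[k]
        for j in range(n):
            dot = sum(v[i] * Q[k + i][j] for i in range(m - k))
            for i in range(m - k):
                Q[k + i][j] -= 2.0 * dot * v[i]
    # Keep the n x n upper triangle; entries below the diagonal are rounding noise.
    R = [[R[i][j] if j >= i else 0.0 for j in range(n)] for i in range(n)]
    return Q, R


# A 6 x 4 example matching the shape of the schematic picture.
A = [[1, 2, 3, 4], [2, 1, 0, 1], [0, 3, 1, 2],
     [4, 0, 2, 1], [1, 1, 1, 1], [3, 2, 0, 5]]
Q, R = householder_qr(A)
residual = max(abs(sum(Q[i][k] * R[k][j] for k in range(4)) - A[i][j])
               for i in range(6) for j in range(4))
print("max |QR - A| =", residual)
```

With this sign convention the diagonal entries of R may be negative; normalizing them to be nonnegative would need an extra column scaling that is left out here.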

Publications by Lloyd N. Trefethen

Publications by Lloyd N. Trefethen I. Books I.1. Numerical Conformal Mapping, editor, 269 pages. Elsevier, 1986. I.2. Finite Difference and Spectral Methods for Ordinary and Partial Differential Equations, x+315 pages. Graduate textbook, privately published at http://comlab.ox.ac.uk/nick.trefethen/, 1996. I.3. Numerical Linear Algebra, with David Bau III, xii+361 pages. SIAM, 1997. I.4. Spectral Methods in MATLAB, xviii+165 pages. SIAM, 2000. I.5. Schwarz-Christoffel Mapping, with Tobin A. Driscoll, xvi+132 pages. Cambridge U. Press, 2002. I.6. Spectra and Pseudospectra: The Behavior of Nonnormal Matrices and Operators, with Mark Embree, xviii+606 pages. Princeton U. Press, 2005. I.7. Trefethen’s Index Cards: Forty years of Notes about People, Words, and Mathematics, xv + 368 pages. World Scientific Publishing, 2011. I.8. Approximation Theory and Approximation Practice. SIAM, to appear in 2013. In addition to these a book has been published by other authors about the international problem solving challenge I organised in 2002 (item VIII.8 below): F. Bornemann, D. Laurie, S. Wagon and D. Waldvogel, The SIAM 100-Digit Challenge, SIAM, 2004, to appear in German translation as Abenteuer Numerik: Eine Führung entlang der Herausforderung von Trefethen, Springer, 2006. II. Finite difference and spectral methods for partial differential equations II.1. Group velocity in finite difference schemes. SIAM Review 24 (1982), 113-136. II.2. Group velocity interpretation of the stability theory of Gustafsson, Kreiss, and Sundström. J. Comp. Phys. 49 (1983), 199-217. II.3. On Lp-instability and dispersion at discontinuities in finite difference schemes. -

Efficient Shadowing of High Dimensional Chaotic Systems With

Efficient Shadowing of High Dimensional Chaotic Systems with the Large Astrophysical N-body Problem as an Example, by Wayne Hayes. A thesis submitted in conformity with the requirements for the degree of Master of Science, Graduate Department of Computer Science, University of Toronto. © Copyright by Wayne Hayes 1995. January 1995.

Abstract. N-body systems are chaotic. This means that numerical errors in their solution are magnified exponentially with time, perhaps making the solutions useless. Shadowing tries to show that numerical solutions of chaotic systems still have some validity. A previously published shadowing refinement algorithm is optimized to give speedups of order 60 for small problems and asymptotic speedups of O(N) on large problems. This optimized algorithm is used to shadow N-body systems with up to 25 moving particles. Preliminary results suggest that average shadow length scales roughly as 1/N, i.e., shadow lengths decrease rapidly as the number of phase-space dimensions of the system is increased. Some measures of simulation error for N-body systems are discussed that are less stringent than shadowing. Many areas of further research are discussed both for high-dimensional shadowing, and for N-body measures of error.

Acknowledgements. I thank my supervisor, Prof. Ken Jackson, for many insightful conversations during the development of the ideas in this thesis, and for helpful comments on the text. My second reader, Dr. -

Chebfun Guide 1st Edition for Chebfun Version 5

Chebfun Guide, 1st Edition, for Chebfun version 5. Edited by: Tobin A. Driscoll, Nicholas Hale, and Lloyd N. Trefethen. Copyright 2014 by Tobin A. Driscoll, Nicholas Hale, and Lloyd N. Trefethen. All rights reserved. For information, write to [email protected]. MATLAB is a registered trademark of The MathWorks, Inc. For MATLAB product information, please write to [email protected]. Dedicated to the Chebfun developers of the past, present, and future.

Table of Contents
Preface
Part I: Functions of one variable
1. Getting started with Chebfun (Lloyd N. Trefethen)
2. Integration and differentiation (Lloyd N. Trefethen)
3. Rootfinding and minima and maxima (Lloyd N. Trefethen)
4. Chebfun and approximation theory (Lloyd N. Trefethen)
5. Complex Chebfuns (Lloyd N. Trefethen)
6. Quasimatrices and least squares (Lloyd N. Trefethen)
7. Linear differential operators and equations (Tobin A. Driscoll)
8. Chebfun preferences (Lloyd N. Trefethen)
9. Infinite intervals, infinite function values, and singularities (Lloyd N. Trefethen)
10. Nonlinear ODEs and chebgui (Lloyd N. Trefethen)
Part II: Functions of two variables (Chebfun2)
11. Chebfun2: Getting started (Alex Townsend)
12. Integration and differentiation (Alex Townsend)
13. Rootfinding and optimisation (Alex Townsend)
14. Vector calculus (Alex Townsend)
15. 2D surfaces in 3D space (Alex Townsend)

Preface. This guide is an introduction to the use of Chebfun, an open source software package that aims to provide “numerical computing with functions.” Chebfun extends MATLAB’s inherent facilities with vectors and matrices to functions and operators. For those already familiar with MATLAB, much of what Chebfun does will hopefully feel natural and intuitive. Conversely, for those new to MATLAB, much of what you learn about Chebfun can be applied within native MATLAB too. -

BIRS Proceedings 2015

Banff International Research Station Proceedings 2015

Contents: Five-day Workshop Reports
1 Modern Applications of Complex Variables: Modeling, Theory and Computation (15w5052)
2 Random Dynamical Systems and Multiplicative Ergodic Theorems Workshop (15w5059)
3 Mathematics of Communications: Sequences, Codes and Designs (15w5139)
4 Discrete Geometry and Symmetry (15w5019)
5 Advances in Numerical Optimal Transportation (15w5067)
6 Hypercontractivity and Log Sobolev inequalities in Quantum Information Theory (15w5098)
7 Computability in Geometry and Analysis (15w5005)
8 Laplacians and Heat Kernels: Theory and Applications (15w5110)
9 Perspectives on Parabolic Points in Holomorphic Dynamics (15w5082)
10 Geometric Flows: Recent Developments and Applications (15w5148)
11 New Perspectives for Relational Learning (15w5080)
12 Stochasticity and Organization of Tropical Convection (15w5023)
13 Groups and Geometries (15w5017)
14 Dispersive Hydrodynamics: the Mathematics of Dispersive Shock Waves and Applications (15w5045)
15 Applied Probability Frontiers: Computational and Modeling Challenges (15w5160)
16 Advances and Challenges in Protein-RNA: Recognition, Regulation and Prediction (15w5063)
17 Hybrid Methods of Imaging (15w5012)
18 Advances in Combinatorial and Geometric Rigidity (15w5114)
19 Lifting Problems and Galois Theory (15w5035)
20 New Trends in Nonlinear Elliptic Equations (15w5004)
21 The Use of Linear Algebraic Groups in Geometry and Number Theory (15w5016)
22 -

January 2014

LONDON MATHEMATICAL SOCIETY NEWSLETTER No. 432 January 2014

Society Meetings and Events 2014: Friday 28 February, Mary Cartwright Lecture, York (page 27); Monday 31 March, Northern Regional Meeting, Durham; Tuesday 8 April, Society Meeting at BMC, London (page 22); 14–17 April, Invited Lectures, University of East Anglia (page 28); Friday 25 April -

RETIRING MEMBERS OF COUNCIL AND COMMITTEE CHAIRS

GRAEME SEGAL (President). After serving for two years, Dr Graeme Segal, FRS, handed over the LMS Presidency at the AGM in November 2013. Prior to being President, he had served for two years as a Member-at-Large of Council. Dr Segal is held in high esteem across the whole mathematics community and his standing, as well as his significant efforts, have been enormously valuable to the Society in meeting its objectives. During his tenure as LMS President he has continued the work of previous presidents to increase member engagement with the Society and to modernise the way the Society operates.

[…] introduced electronic voting for elections, which has seen turnout more than double. The past two years have been a challenging period for mathematical sciences research policy in the UK. Dr Segal has led the Society in dealing with a range of important issues: the introduction of open access publishing to which the Society made significant policy submissions, the issues surrounding impact and the 2014 REF, the consequences of changes in science funding and university student tuition fees, and the EPSRC Shaping Capability agenda. Dr Segal also worked closely with the Education -

New Directions in Numerical Computation

COMMUNICATION. New Directions in Numerical Computation. Tobin A. Driscoll, Endre Süli, and Alex Townsend, Editors.

In August 2015 a distinguished collection of numerical analysts gathered at Oxford to celebrate the sixtieth birthday of Nick Trefethen FRS and consider the future of numerical analysis. Some of the plenary speakers provided short essays for Notices. The full collection is online.¹ In a 1992 essay, “The Definition of Numerical Analysis”,² Trefethen writes of the field, “[O]ur central mission is to compute quantities that are typically uncomputable, from an analytical point of view, and to do it at lightning speed.” These essays explore a few of the particulars of that mission.

[Photos: Nick Trefethen (photo courtesy of Burchard Kaup); Jean-Paul Berrut]

Jean-Paul Berrut: A Puzzling Question about Numerical Analysis. Why are so many academic mathematicians content with piecewise smooth approximations to solutions to functional equations when those solutions are known a priori to be smooth? Chebfun impressively and beautifully demonstrates the […] use of smooth functions in two and higher dimensions was, until recently, limited to relatively simple domains. Fundamental research on infinitely smooth two-dimensional interpolants may lead to interesting new approaches, yet the number of scientists working on them appears to be surprisingly small.

Bengt Fornberg: The Method—Not the Machine. It used to be said that improvements in scientific computing capabilities originate about equally from advances in algorithms and in hardware. During the last decade or two, the focus has shifted to building heroic-scale supercomputing facilities. Maybe this is partly because processing characteristics are quantifiable and easy to show in lists, with national and world records falling incessantly. -

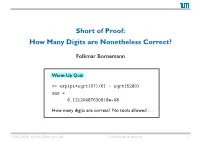

Short of Proof: How Many Digits Are Nonetheless Correct?

Short of Proof: How Many Digits are Nonetheless Correct? Folkmar Bornemann. ICMS 2018, NOTRE DAME, JULY 26, FOLKMAR BORNEMANN.

Warm-Up Quiz. >> exp(pi*sqrt(67)/6) - sqrt(5280) ans = 6.12120487630818e-08. How many digits are correct? No tools allowed...

CAVEAT. At its highest level, numerical analysis is a mixture of science, art and bar-room brawl. —T.W. Körner: The Pleasures of Counting

THE TITLE-QUESTION IS A WEE BIT OLD-FASHIONED, ISN’T IT? Ultimately, numerical analysis is about efficient algorithms/software... so, answering the title-question is left to the discretion of the educated user? Prevalent attitudes towards the accuracy of numerical output:
level 0: scared! (errors all over the place: roundoff, approximation, ...)
level 1: far more accurate than ever needed in practice (lots of plots)
level 2: as good as it gets in the realm of noisy data (a.k.a. backward stability)
level 3: adaptivity steered by accuracy requirement (“user tolerance”)
In short: 0: pessimistic; 1: optimistic; 2: blame the data; 3: gimme steering wheel.

Prologue: Setting the Stage. The mathematical proposition has been given the stamp of incontestability: “dispute other things; this is immovable.” —L. Wittgenstein: On Certainty

POINT OF ENTRY I: THE SIAM 100-DIGIT CHALLENGE. Prehistory: Problem Solving Squad @ Stanford: George Pólya → George Forsythe → Bob Floyd → Don Knuth → Nick Trefethen. The challenge (Nick Trefethen, SIAM News & Science, January ’02): 10 problems, the answer to each is a real number sought to 10 sig. -
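The warm-up quiz on these slides can be checked after the fact with higher-precision arithmetic. The sketch below is not from the slides: it assumes Python's standard `decimal` module as the reference, computes π with Machin's formula in the hypothetical helper `decimal_pi` (since `decimal` ships no π constant), and compares the reference value with the same expression evaluated in double precision.

```python
import math
from decimal import Decimal, getcontext

getcontext().prec = 60  # 60 significant digits for the reference computation


def decimal_pi():
    """pi to the current Decimal precision via Machin: pi = 16 atan(1/5) - 4 atan(1/239)."""
    eps = Decimal(10) ** -(getcontext().prec + 5)

    def arctan_inv(n):
        # atan(1/n) = sum over k >= 0 of (-1)^k / ((2k + 1) * n^(2k + 1))
        power = Decimal(1) / n
        total = power
        k, sign = 0, 1
        while power > eps:
            k += 1
            sign = -sign
            power /= n * n
            total += sign * power / (2 * k + 1)
        return total

    return 4 * (4 * arctan_inv(5) - arctan_inv(239))


# Reference value of exp(pi*sqrt(67)/6) - sqrt(5280) in 60-digit arithmetic.
exact = (decimal_pi() * Decimal(67).sqrt() / 6).exp() - Decimal(5280).sqrt()

# The same expression in IEEE double precision, as in the quiz.
double_result = math.exp(math.pi * math.sqrt(67) / 6) - math.sqrt(5280)

# Number of correct significant digits, measured by the relative error.
rel_err = abs(Decimal(double_result) - exact) / abs(exact)
digits = -rel_err.log10()

print("reference :", exact)
print("double    :", double_result)
print("correct significant digits (approx.):", round(float(digits), 1))
```

The two terms are each about 72.66 while their difference is of order 1e-8, so the subtraction cancels many leading digits; that cancellation is why the double-precision answer cannot be trusted to all sixteen digits it prints.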

Master of Technology (M.Tech.) in Modeling and Simulation

Centre for Modeling and Simulation, University of Pune • Pune 411 007 • India. cms.unipune.ac.in • +91.(20).2560.1448 • +91.(20).2569.0842 • offi[email protected]

Master of Technology (M.Tech.) in Modeling and Simulation. Approved by the University of Pune Board of Studies: Scientific Computing. July 12, 2007 • Version 3.0. Minor Corrections: July 19, 2011.

About This Document. This document outlines an academic programme, the Master of Technology (M.Tech.) in Modeling and Simulation, designed by the people at the Centre for Modeling and Simulation, University of Pune. This academic programme has been approved by the University of Pune.

Feedback Requested. The utility of modeling and simulation as a methodology is extensive, and the community that uses it, both from academics and from industry, is very diverse. We would thus highly appreciate your feedback and suggestions on any aspect of this programme. We believe that your feedback will help us make this programme better.

Citing This Document. This is a public document available at http://cms.unipune.ac.in/reports/. Complete citation for this document is as follows: Mihir Arjunwadkar, Abhay Parvate, Sukratu Barve, P. M. Gade, D. G. Kanhere, Sheelan Chowdhary, Deepak Bankar, and Anjali Kshirsagar, Master of Technology (M.Tech.) in Modeling and Simulation. Public Document CMS-PD-20120305 of the Centre for Modeling and Simulation, University of Pune, Pune 411 007, INDIA (2012); available at http://cms.unipune.ac.in/reports/.

Acknowledgments. Many people have contributed to the creation of this programme and this document. Their time and effort, contributions and insights, and comments and suggestions have all gone a long way towards bringing this programme to its present form. -

M.Sc. in Mathematical Modelling and Scientific Computing

M.Sc. in Mathematical Modelling and Scientific Computing. Dissertation Projects, December 2019.

Contents
1 Numerical Analysis Projects
1.1 Operator Preconditioning and Accuracy of Linear System Solutions
1.2 Computing Common Zeros of Trivariate Functions
1.3 Univariate Optimisation for Smooth Functions
1.4 Algorithms for Least-Squares and Underdetermined Linear Systems Based on CholeskyQR
1.5 Tensor and Matrix Eigenvalue Perturbation Theory
1.6 Chebfun Dissertation Topics
1.7 Rational Functions Dissertation Topics
1.8 Low-Rank Plus Sparse Matrix Model and its Application
1.9 Efficient Exemplar Selection for Representation Learning
1.10 Numerical Solution of a Problem in Electrochemistry
1.11 Nonconvex Optimisation
2 Biological and Medical Application Projects
2.1 Localisation of Turing Patterning in Discrete Heterogeneous Media
2.2 Distributed Delay in Reaction-Diffusion Systems
2.3 Aspects of Flagellate Swimmer Dynamics and Mechanics
2.4 Learning Time Dependence with Minimal Time Information
2.5 Surrogate Modelling for Particle Infiltration into Tumour Spheroids
2.6 Mathematical Modelling of Polyelectrolyte Hydrogels for Application in Regenerative Medicine
3 Physical Application Projects
3.1 Evaporation-Driven Instabilities in Complex Fluids
3.2 Phase Separation in Swollen Hydrogels
3.3 Pattern Formation in Polymers
4 Data Science
4.1 Computational Topology for Analysing Neuronal Branching Models and Data
5 Research Interests of Academic Staff

1 Numerical Analysis Projects

1.1 Operator Preconditioning and Accuracy of Linear System Solutions. Supervisors: Prof Yuji Nakatsukasa and Dr Carolina Urzua Torres. Contact: [email protected] and [email protected]. Preconditioning is widely recognised as a crucial technique for improving the convergence of iterative (usually Krylov) methods for linear systems Ax = b.