BfN-Skripten Are Not Available in the Book Trade but Can Be Downloaded in a PDF Version from the Internet At

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Adobe Application Manager Enterprise Edition Deployment Guide

ADOBE® APPLICATION MANAGER ENTERPRISE EDITION ENTERPRISE DEPLOYMENT GUIDE Adobe® Application Manager Enterprise Edition version 3.1 Document version 3.1 Document date: September 2012 © 2012 Adobe Systems Incorporated and its licensors. All rights reserved. Adobe® Application Manager Enterprise Edition Enterprise Deployment Guide This guide is licensed for use under the terms of the Creative Commons Attribution Non-Commercial 3.0 License. This License allows users to copy, distribute, and transmit the guide for noncommercial purposes only so long as (1) proper attribution to Adobe is given as the owner of the guide; and (2) any reuse or distribution of the guide contains a notice that use of the guide is governed by these terms. The best way to provide notice is to include the following link. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/3.0/ Adobe, the Adobe logo, Acrobat, Adobe Audition, Adobe Bridge, Adobe Device Central, Adobe OnLocation, Adobe Premiere, Adobe Premiere Pro, Adobe Technical Communication Suite, After Effects, Contribute, Captivate, Creative Suite, CS Live, Dreamweaver, Encore, Fireworks, Flash, Flash Builder, Flash Catalyst, FrameMaker, Illustrator, InDesign, Photoshop, RoboHelp, SiteCatalyst, and Soundbooth are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States and/or other countries. Apple, Mac, and Mac OS are trademarks of Apple Inc., registered in the United States and other countries. Microsoft, Windows, and Windows Vista are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries. UNIX is a registered trademark of The Open Group in the US and other countries. -

Maximum Internet Security: a Hackers Guide - Networking - Intrusion Detection

Maximum Internet Security: A Hackers Guide. Author: Publishing Sams. Web Price: $49.99 US. Publisher: Sams. ISBN: 1575212684. Publication Date: 6/25/97. Pages: 928. Now more than ever, it is imperative that users be able to protect their system from hackers trashing their Web sites or stealing information. Written by a reformed hacker, this comprehensive resource identifies security holes in common computer and network systems, allowing system administrators to discover faults inherent within their network and work toward a solution to those problems. Featured Author: Benoît Marchal runs Pineapplesoft, a consulting company that specializes in Internet applications, particularly e-commerce, XML, and Java. In 1997, Ben co-founded the XML/EDI Group, a think tank that promotes the use of XML in e-commerce applications. Featured Book: Sams Teach Yourself Shell Programming in 24 Hours. Take control of your systems by harnessing the power of the shell. Table of Contents: I Setting the Stage: 1 Why Did I Write This Book?; 2 How This Book Will Help You; 3 Hackers and Crackers; 4 Just Who Can Be Hacked, Anyway? II Understanding the Terrain: 5 Is Security a Futile Endeavor?; 6 A Brief Primer on TCP/IP; 7 Birth of a Network: The Internet; 8 Internet Warfare. III Tools: 9 Scanners; 10 Password Crackers; 11 Trojans; 12 Sniffers; 13 Techniques to Hide One's Identity; 14 Destructive Devices. IV Platforms -

Numerical Computation with Rational Functions

NUMERICAL COMPUTATION WITH RATIONAL FUNCTIONS (scalars only). Nick Trefethen, University of Oxford and ENS Lyon, with thanks to Silviu Filip, Abi Gopal, Stefan Güttel, Yuji Nakatsukasa, and Olivier Sète. Outline: 1. Polynomial vs. rational approximations. 2. Four representations of rational functions: 2a. Quotient of polynomials; 2b. Partial fractions; 2c. Quotient of partial fractions (= barycentric); 2d. Transfer function/matrix pencil. 3. The AAA algorithm (with Nakatsukasa and Sète, to appear in SISC). 4. Application: conformal maps (with Gopal, submitted to Numer. Math.). 5. Application: minimax approximation (with Filip, Nakatsukasa, and Beckermann, to appear in SISC). 6. Accuracy and noise. 1. Polynomial vs. rational approximation. Newman, 1964: approximation of |x| on [−1,1]: $E_{n0} \sim 0.2801\ldots/n$, $E_{nn} \sim 8e^{-\pi\sqrt{n}}$. Poles and zeros of r: exponentially clustered near x=0, exponentially diminishing residues. Rational approximation is nonlinear, so algorithms are nontrivial. There may be nonuniqueness and local minima. [Figure: poles, type (20,20)] 2. Four representations of rational functions. Quotient of polynomials, $p(z)/q(z)$: SK, IRF, AGH, ratdisk (Alpert, Carpenter, Coelho, Gonnet, Greengard, Hagstrom, Koerner, Levy, Pachón, Phillips, Ruttan, Sanathanen, Silantyev, Silveira, Varga, White,…). Partial fractions, $a_k/(z - z_k)$: VF, exponential sums (Beylkin, Deschrijver, Dhaene, Drmač, Greengard, Gustavsen, Hochman, Mohlenkamp, Monzón, Semlyen,…). Quotient of partial fractions (= barycentric), $n(z)/d(z)$: Floater-Hormann, AAA (Berrut, Filip, Floater, Gopal, Hochman, Hormann, Ionita, Klein, Mittelmann, Nakatsukasa, Salzer, Schneider, Sète, Trefethen, Werner,…). Transfer function/matrix pencil, $c^{T}(zB - A)^{-1}b$: IRKA, Loewner, RKFIT (Antoulas, Beattie, Beckermann, Berljafa, Druskin, Elsworth, Gugercin, Güttel, Knizhnerman, Meerbergen, Ruhe,…). Sometimes the boundaries are blurry! 2a. -

Adobe Contribute CS5 What's

Adobe® Contribute® CS5 What’s New. Contents: Who uses Adobe Contribute?; Top new features of Adobe Contribute CS5; Predefined content types; XML editing; Cross-browser preview; Spry widget editing; Multipage search and replace; Subversion support; Image hotspot support; W3-compliant code when embedding SWF and FLV files; Server Side Include editing; Text and image enhancements; Still using Contribute CS3?; About Adobe Systems Incorporated. Simplify website management: Integrate authoring, reviewing, and publishing into a collaborative workflow with Adobe Contribute CS5 software, an easy-to-use HTML editor and website management tool. Increase web publishing productivity while simplifying oversight and approval tasks. Quickly create and edit web pages collaboratively in a WYSIWYG HTML editor, without writing code. Adobe Contribute CS5 enables in-browser editing of text, images, and CSS for content authors, as well as drag-and-drop input of FLV, SWF, and PDF files. Managers can encourage creative web development while easily controlling website look and feel, as well as workflow, review, and version processes to produce static or dynamic websites with one-click publishing. Adobe Contribute CS5 is also available as a component of Adobe Creative Suite® 5 Web Premium and Adobe Creative Suite® 5 Master Collection software. Many businesses and web developers are saddled with unwieldy content management systems (CMS) that cost huge sums of money to configure and customize, and equally large amounts of time for staff training, yet the results are often delayed site updates, bottlenecked HTML experts, and frustrated content authors. Similarly poor results are the norm when complex sites are managed with basic HTML -

An Extension of Matlab to Continuous Functions and Operators∗

SIAM J. SCI. COMPUT. © 2004 Society for Industrial and Applied Mathematics Vol. 25, No. 5, pp. 1743–1770 AN EXTENSION OF MATLAB TO CONTINUOUS FUNCTIONS AND OPERATORS. ZACHARY BATTLES AND LLOYD N. TREFETHEN. Abstract. An object-oriented MATLAB system is described for performing numerical linear algebra on continuous functions and operators rather than the usual discrete vectors and matrices. About eighty MATLAB functions from plot and sum to svd and cond have been overloaded so that one can work with our “chebfun” objects using almost exactly the usual MATLAB syntax. All functions live on [−1, 1] and are represented by values at sufficiently many Chebyshev points for the polynomial interpolant to be accurate to close to machine precision. Each of our overloaded operations raises questions about the proper generalization of familiar notions to the continuous context and about appropriate methods of interpolation, differentiation, integration, zerofinding, or transforms. Applications in approximation theory and numerical analysis are explored, and possible extensions for more substantial problems of scientific computing are mentioned. Key words. MATLAB, Chebyshev points, interpolation, barycentric formula, spectral methods, FFT. AMS subject classifications. 41-04, 65D05. DOI. 10.1137/S1064827503430126. 1. Introduction. Numerical linear algebra and functional analysis are two faces of the same subject, the study of linear mappings from one vector space to another. But it could not be said that mathematicians have settled on a language and notation that blend the discrete and continuous worlds gracefully. Numerical analysts favor a concrete, basis-dependent matrix-vector notation that may be quite foreign to the functional analysts. Sometimes the difference may seem very minor between, say, expressing an inner product as (u, v) or as $u^{T}v$. -
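The abstract above describes representing a function on [−1, 1] by its values at Chebyshev points and lists the barycentric formula among its key words. The following is a minimal Python sketch of that idea, not the chebfun implementation: the function names `cheb_points` and `bary_eval` are hypothetical, the number of points is fixed by hand rather than chosen adaptively, and the weights are the standard ones for Chebyshev points of the second kind.

```python
import math

def cheb_points(n):
    """Return the n+1 Chebyshev points x_j = cos(j*pi/n) on [-1, 1]."""
    return [math.cos(j * math.pi / n) for j in range(n + 1)]

def bary_eval(x, pts, vals):
    """Evaluate the polynomial interpolant through (pts, vals) at x
    using the barycentric formula with weights (-1)^j, halved at the
    two endpoints (valid for the points produced by cheb_points)."""
    n = len(pts) - 1
    num = 0.0
    den = 0.0
    for j, (xj, fj) in enumerate(zip(pts, vals)):
        if x == xj:
            # x coincides with a node: return the stored value directly
            return fj
        w = (-1.0) ** j
        if j == 0 or j == n:
            w *= 0.5
        t = w / (x - xj)
        num += t * fj
        den += t
    return num / den

# Illustrative use: sample exp at 21 Chebyshev points, evaluate elsewhere.
pts = cheb_points(20)
vals = [math.exp(x) for x in pts]
print(bary_eval(0.3, pts, vals), math.exp(0.3))
```

For a smooth function such as `exp`, a few dozen points are typically enough for the interpolant to agree with the function to roughly machine precision, which is the behavior the abstract refers to.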

Adobe Systems FY2006 10-K/A

UNITED STATES SECURITIES AND EXCHANGE COMMISSION Washington, D.C. 20549 ________________ FORM 10-K/A (Amendment No. 1) ________________ (Mark One) [X] ANNUAL REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the fiscal year ended December 1, 2006 OR [ ] TRANSITION REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the transition period from to Commission file number: 0-15175 ADOBE SYSTEMS INCORPORATED (Exact name of registrant as specified in its charter) Delaware 77-0019522 (State or other jurisdiction of (I.R.S. Employer incorporation or organization) Identification No.) 345 Park Avenue, San Jose, California 95110-2704 (Address of principal executive offices and zip code) (408) 536-6000 (Registrant’s telephone number, including area code) Securities registered pursuant to Section 12(b) of the Act: Title of Each Class Name of Each Exchange on Which Registered Common Stock, $0.0001 par value per share The NASDAQ Stock Market LLC (NASDAQ Global Select Market) Securities registered pursuant to Section 12(g) of the Act: None Indicate by checkmark if the registrant is a well-known seasoned issuer, as defined in Rule 405 of the Securities Act. Yes [X] No [ ] Indicate by checkmark if the registrant is not required to file reports pursuant to Section 13 or Section 15(d) of the Act. Yes [ ] No [X] Indicate by checkmark whether the registrant (1) has filed all reports required to be filed by Section 13 or 15 (d) of the Securities Exchange Act of 1934 during the preceding 12 months (or for such shorter period that the registrant was required to file such reports), and (2) has been subject to such filing requirements for the past 90 days. -

The Healthstream Authoring Center

The HealthStream Authoring Center Administrator User Guide. Updated February 19, 2014. HealthStream, Inc. 209 10th Avenue South | Suite 450 | Nashville, TN | 37203. Copyright 2014, HealthStream, Inc. The information contained herein is the sole property of HealthStream and may not be reproduced without HealthStream’s express written permission. Unauthorized use of these materials may result in violation of copyright, trademark, and other laws. Some of the features described in this guide are optional or role-specific, and might not be available to you. Table of Contents: HealthStream Authoring Center; Authoring Center Policies and Guidelines; Authoring Center FAQs; Content Manager; Content Manager Overview; Adding a Folder; Adding a Page -

Householder Triangularization of a Quasimatrix

IMA Journal of Numerical Analysis (2010) 30, 887–897 doi:10.1093/imanum/drp018 Advance Access publication on August 21, 2009. Householder triangularization of a quasimatrix. LLOYD N. TREFETHEN, Oxford University Computing Laboratory, Wolfson Building, Parks Road, Oxford OX1 3QD, UK [Received on 4 July 2008] A standard algorithm for computing the QR factorization of a matrix A is Householder triangularization. Here this idea is generalized to the situation in which A is a quasimatrix, that is, a ‘matrix’ whose ‘columns’ are functions defined on an interval [a, b]. Applications are mentioned to quasimatrix least squares fitting, singular value decomposition and determination of ranks, norms and condition numbers, and numerical illustrations are presented using the chebfun system. Keywords: Householder triangularization; QR factorization; chebfun; quasimatrix; singular value decomposition. 1. QR factorization and Householder triangularization. Let A be an $m \times n$ matrix, where $m \ge n$. A (reduced) QR factorization of A is a factorization $A = QR$, $\begin{pmatrix} x&x&x&x\\ x&x&x&x\\ x&x&x&x\\ x&x&x&x\\ x&x&x&x\\ x&x&x&x \end{pmatrix} = \begin{pmatrix} q&q&q&q\\ q&q&q&q\\ q&q&q&q\\ q&q&q&q\\ q&q&q&q\\ q&q&q&q \end{pmatrix} \begin{pmatrix} r&r&r&r\\ &r&r&r\\ &&r&r\\ &&&r \end{pmatrix}$ (1.1), where Q is $m \times n$ with orthonormal columns and R is upper triangular. Here and in subsequent equations we illustrate our matrix operations by schematic pictures in which x denotes an arbitrary entry, r denotes an entry of an upper triangular matrix, q denotes an entry of an orthonormal column and a blank denotes a zero. -
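The excerpt above defines the reduced QR factorization and names Householder triangularization as the standard way to compute it. As a rough illustration for an ordinary matrix only (the paper's subject is the quasimatrix generalization, which this does not attempt), here is a minimal pure-Python sketch. The function name `householder_qr` and the list-of-rows representation are assumptions made for the example.

```python
import math

def householder_qr(A):
    """Reduced QR factorization of an m x n matrix (m >= n), given as a
    list of rows. Returns (Q, R) with Q m x n having orthonormal columns
    and R n x n upper triangular (up to rounding)."""
    m, n = len(A), len(A[0])
    R = [[float(a) for a in row] for row in A]   # working copy
    reflectors = []                              # unit vectors v_k
    for k in range(n):
        # Column k from the diagonal down
        x = [R[i][k] for i in range(k, m)]
        norm_x = math.sqrt(sum(t * t for t in x))
        v = x[:]
        # Add sign(x_0)*||x|| to the first entry to avoid cancellation
        v[0] += math.copysign(norm_x, x[0])
        norm_v = math.sqrt(sum(t * t for t in v))
        if norm_v > 0.0:
            v = [t / norm_v for t in v]
            # Apply the reflector I - 2 v v^T to rows k.., columns k..
            for j in range(k, n):
                dot = sum(v[i] * R[k + i][j] for i in range(m - k))
                for i in range(m - k):
                    R[k + i][j] -= 2.0 * dot * v[i]
        reflectors.append(v)
    # Build Q by applying the reflectors, last to first, to the first
    # n columns of the m x m identity
    Q = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(m)]
    for k in reversed(range(n)):
        v = reflectors[k]
        for j in range(n):
            dot = sum(v[i] * Q[k + i][j] for i in range(m - k))
            for i in range(m - k):
                Q[k + i][j] -= 2.0 * dot * v[i]
    return Q, [row[:] for row in R[:n]]

# Illustrative use on a 3 x 2 matrix
Q, R = householder_qr([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
for row in R:
    print(row)
```

With this sign convention the diagonal entries of R may be negative; entries below the diagonal are zero only up to rounding error.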

MAARIJ HUSSAIN UX/UI DESIGNER [email protected] Kent, London Linkedin

maarijmedia.com +44 (0) 7947 4444 98 MAARIJ HUSSAIN UX/UI DESIGNER [email protected] Kent, London LinkedIn Career Objective After my master’s degree in Multimedia, I have started my design career back in 2008 starting my journey as a junior designer with Norwich Union. In those 12 years I have moved my way up the career level to Senior UX/UI design as well as the Java Scalable Vector Animator within the Banking, Local Government, Telecom, Automotive, Technology & Automation and Retail markets. I have broad in-depth knowledge of working within the user-centered end user interactive designs, moving media, visual effects and branding with the personal, business, corporate and enterprise clients. I have solved & built complex and successful user journeys, prototypes and their hi-fidelity interactive designs for all of my clients and pride myself in providing the best development and guidance to the built and the test processes. I always dedicate 100% to my projects and bring the hands on experience with-in the agile environment and strive to lead by example. As an artist I always visualise my project to its completion, end-user’s satisfaction and competitive robustness. Employment Summary E-Tech Solution [London] Senior UX/UI Designer (Digital Transformation) Aug 2020 – Present I am working with E-Tech Solution for one of their major fintech (Banking) client to digitally transform two of their products. Managing and liaising with the design, development and the testing team for the new & modernised user journey, effectively acting as a design scrum master for the digital transformation product road map. -

Publications by Lloyd N. Trefethen

Publications by Lloyd N. Trefethen I. Books I.1. Numerical Conformal Mapping, editor, 269 pages. Elsevier, 1986. I.2. Finite Difference and Spectral Methods for Ordinary and Partial Differential Equations, x+315 pages. Graduate textbook, privately published at http://comlab.ox.ac.uk/nick.trefethen/, 1996. I.3. Numerical Linear Algebra, with David Bau III, xii+361 pages. SIAM, 1997. I.4. Spectral Methods in MATLAB, xviii+165 pages. SIAM, 2000. I.5. Schwarz-Christoffel Mapping, with Tobin A. Driscoll, xvi+132 pages. Cambridge U. Press, 2002. I.6. Spectra and Pseudospectra: The Behavior of Nonnormal Matrices and Operators, with Mark Embree, xviii+606 pages. Princeton U. Press, 2005. I.7. Trefethen’s Index Cards: Forty years of Notes about People, Words, and Mathematics, xv + 368 pages. World Scientific Publishing, 2011. I.8. Approximation Theory and Approximation Practice. SIAM, to appear in 2013. In addition to these a book has been published by other authors about the international problem solving challenge I organised in 2002 (item VIII.8 below): F. Bornemann, D. Laurie, S. Wagon and D. Waldvogel, The SIAM 100-Digit Challenge, SIAM, 2004, to appear in German translation as Abenteuer Numerik: Eine Führung entlang der Herausforderung von Trefethen, Springer, 2006. II. Finite difference and spectral methods for partial differential equations II.1. Group velocity in finite difference schemes. SIAM Review 24 (1982), 113-136. II.2. Group velocity interpretation of the stability theory of Gustafsson, Kreiss, and Sundström. J. Comp. Phys. 49 (1983), 199-217. II.3. On Lp-instability and dispersion at discontinuities in finite difference schemes. -

CS3 Notepad.Indd

CONTACTS CHANNEL ADOBE CREATIVE SUITE 3 CONTACT DETAILS CHANNEL CONTACTS SUJATA ARUMUGAM Channel Inside Sales Support [email protected] FIONA CAMINO Channel Account Manager fi [email protected] GUY MCWILLIAM Channel Account Manager [email protected] IAN BROWN Channel Account Manager [email protected] LIZ WILKINS Channel Account Manager [email protected] MATT SHILLUM Channel Account Manager [email protected] TONI REDMAN Distribution Manager [email protected] ALYNA COPE Channel Marketing Manager [email protected] JULIE MURTAGH Channel Manager [email protected] NAJEEB KHAN Anti Piracy Manager [email protected] MAIN CONTACT NUMBERS ADOBE UK OFFICE 0208 606 1100 CUSTOMER SERVICES 0207 365 0733 TECHNICAL SUPPORT 0845 052 2222 USEFUL WEB LINKS CHANNEL WEBSITE http://partners.adobe.com LICENSING WEBSITE https://licensing.adobe.com ADOBE UK WEBSITE http://www.adobe.com/uk ANTI-PIRACY WEBSITE http://www.adobe.com/uk/aboutadobe/antipiracy © 2007 Adobe Systems Incorporated. All rights reserved. Adobe and the Adobe logo, Acrobat Standard, Acrobat Professional, Acrobat 3D, Adobe After Effects, Adobe Audition, Adobe Captivate, Macromedia Cold Fusion, Adobe Contribute, Adobe Design Premium, Adobe Design Standard, Adobe Master Collection, Adobe Production Premium, Adobe Web Premium, Adobe Web Standard, Adobe Dreamweaver, Adobe Fireworks, Adobe Flash Professional, Adobe Flash Media Server, Adobe Flexbuilder, Adobe Font Folio, Adobe Framemaker, Adobe GoLive, Adobe Illustrator, Adobe InCopy, Adobe InDesign, Adobe Photoshop, Adobe Photoshop Extended, Adobe Photoshop Elements, Adobe Photoshop Lightroom, Adobe Premiere Pro, Adobe Premiere Elements, Adobe RoboHelp, Adobe Soundbooth and Adobe Open Options are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States and/or other countries. -

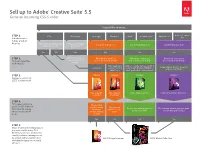

CS5.5 Battle Card

Sell up to Adobe® Creative Suite® 5.5 Grow an incoming CS5.5 order I would like to order.. STEP 1: CS5.5 Photoshop® InDesign® Illustrator® Flash® Dreamweaver® After Effects® Adobe Premiere® Ask about other Pro Adobe products they use. Already know which suite Using other Adobe edition is for you? products? Using Photoshop too? Using Photoshop too? Using Photoshop too? Yes No Yes Yes Yes Yes STEP 2: What kind of work What kind of work are What kind of work are What kind of work are Find out about the are you or your team you or your team doing? you or your team doing? you or your team doing? doing? work they do. Print, web, and Web or mobile devices, content Video editing, motion graphics, Just print content created created with Flash or HTML5, or and special effects with Flash web application development STEP 3: Suggest Suggest Suggest Suggest Suggest a version of CS5.5 for their work. CS5.5 Design CS5.5 Design CS5.5 Web Premium CS5.5 Production Premium Standard Premium STEP 4: Find opportunities to Do you also upsell to CS5.5 Master want to create Do you also Collection by asking interactive want to create Do you also want to produce Do you also want to produce print what else they want content without video? print and video? and do web publishing? to do. coding? Yes Yes Yes Yes STEP 5: Move them from shrinkwrapped purchases to licensing: Tell them they can save money and simplify software management by consolidating creative users CS5.5 Design Premium CS5.5 Master Collection and standardizing on a version of CS5.5.