Computing Text Semantic Relatedness Using the Contents and Links of a Hypertext Encyclopedia

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

-

![[Pennsylvania County Histories]](https://docslib.b-cdn.net/cover/6291/pennsylvania-county-histories-546291.webp)

[Pennsylvania County Histories]

f Digitized by the Internet Archive in 2018 with funding from This project is made possible by a grant from the Institute of Museum and Library Services as administered by the Pennsylvania Department of Education through the Office of Commonwealth Libraries https://arohive.org/details/pennsylvaniaooun18unse '/■ r. 1 . ■; * W:. ■. V / \ mm o A B B P^g^ B C • C D D E Page Page Page uv w w XYZ bird's-eive: vibw G VA ?es«rvoir NINEVEH From Nineveh to the Lake. .jl:^/SpUTH FORK .VIADUCT V„ fS.9 InaMSTowM. J*. Frorp per30i)al Sfcel'cb^s ai)d. Surveys of bl)p Ppipipsylvapia R. R., by perrpi^^iop. -A_XjEX. Y. XjEE, Architect and Civil Engineer, PITTSBURGH, PA. BRIDGE No.s'^'VgW “'■■^{Goncl - Viaduct Butlermill J.Unget^ SyAij'f By. Trump’* Caf-^ ^Carnp Cooemaugh rcTioN /O o ^ ^ ' ... ^!yup.^/««M4 •SANGTOLLOV^, COOPERSttAtc . Sonc ERl/ftANfJl, lAUGH \ Ru Ins of loundhouse/ Imohreulville CAMBRIA Cn 'sum iVl\ERHI LL Overhead Sridj^e Western Res^rvoi millviue Cambria -Iron Works 'J# H OW N A DAMS M:uKBoeK I No .15 SEVEim-1 WAITHSHED SOUTH FORK DAM PfTTSBURGH, p/ Copyright 1889 8v years back, caused u greater loss of life, ?Ttf ioHnsitowiv but the destructiou of property was slight in comparison with that of Johns¬ town and its vacinity. For eighteen hun¬ dred years Pompeii and Hevculancum have been favorite references as instances of unparalleled disasters in the annals (d the world; hut it was shown by an iirti- cle in the New Y’ork World, some days FRIDAY', JULY" 5, 1889. -

Husbandry Guidelines for African Lion Panthera Leo Class

Husbandry Guidelines For (Johns 2006) African Lion Panthera leo Class: Mammalia Felidae Compiler: Annemarie Hillermann Date of Preparation: December 2009 Western Sydney Institute of TAFE, Richmond Course Name: Certificate III Captive Animals Course Number: RUV 30204 Lecturer: Graeme Phipps, Jacki Salkeld, Brad Walker DISCLAIMER The information within this document has been compiled by Annemarie Hillermann from general knowledge and referenced sources. This document is strictly for informational purposes only. The information within this document may be amended or changed at any time by the author. The information has been reviewed by professionals within the industry, however, the author will not be held accountable for any misconstrued information within the document. 2 OCCUPATIONAL HEALTH AND SAFETY RISKS Wildlife facilities must adhere to and abide by the policies and procedures of Occupational Health and Safety legislation. A safe and healthy environment must be provided for the animals, visitors and employees at all times within the workplace. All employees must ensure to maintain and be committed to these regulations of OHS within their workplace. All lions are a DANGEROUS/ HIGH RISK and have the potential of fatally injuring a person. Precautions must be followed when working with lions. Consider reducing any potential risks or hazards, including; Exhibit design considerations – e.g. Ergonomics, Chemical, Physical and Mechanical, Behavioural, Psychological, Communications, Radiation, and Biological requirements. EAPA Standards must be followed for exhibit design. Barrier considerations – e.g. Mesh used for roofing area, moats, brick or masonry, Solid/strong metal caging, gates with locking systems, air-locks, double barriers, electric fencing, feeding dispensers/drop slots and ensuring a den area is incorporated. -

Macmillan Dictionary Buzzword: Zonkey

TEACHER’S NOTES zonkey www.macmillandictionary.com Overview: Suggestions for using the Macmillan Dictionary BuzzWord article on zonkey and the associated worksheets Total time for worksheet activities: 45 minutes Suggested level: Upper intermediate and above 1. If you intend to use the worksheets in animal they are describing, e.g. ‘I have paws class, go to the BuzzWord article at the and whiskers, what am I?’ (= cat). web address given at the beginning of the 6. All the words for baby animals in Exercise worksheet and print off a copy of the article. 4 have entries in the Macmillan Dictionary. Make a copy of the worksheet and the Ask students to complete the exercise BuzzWord article for each student. You might individually, starting with the words they know find it helpful not to print a copy of the Key for and then looking up any unfamiliar ones as each student but to check the answers as necessary. Check answers as a class. a class. 7. Exercise 5 explores some common 2. If the members of your class all have internet conversational idioms based on animals. access, ask them to open the worksheet Explain to students that using idiomatic before they go to the Buzzword article link. phrases like these can make conversational Make sure they do not scroll down to the Key English sound more natural, but getting until they have completed each exercise. them wrong is a very obvious mistake! Ask 3. Encourage students to read through the students to complete the exercise in pairs. questions in Exercise 1 before they look Explain that if they need to use a dictionary at the BuzzWord article. -

Kenneth Gandar Dower by DJ Smith

Driven by Devils (K. C. Gandar Dower) Pacifist, explorer, aviator, sportsman, cryptozoologist, and war correspondent Kenneth Cecil Gandar Dower (1908-1944) was an astonishingly versatile man, who faced triumph and adversity alike with contagious enthusiasm, indomitable spirit, cynicism, and easy, self-effacing humour. Known to his friends simply as Gander , few biographical details remain of this intriguing character, yet perhaps few are needed since he voiced his feelings clearly in the handful of books he wrote during his short life. In what was his most controversial work, The Spotted Lion (1937), he admitted: We have all had our day-dreams of adventure...I seem to be one of those unfortunates who is driven by devils to put them into practice . K. C. Gandar Dower was born at his parent s home in Regent s Park, London, on 31st August 1908. He was the fourth and youngest son of the independently wealthy Joseph Wilson Gandar Dower and his wife Amelia Frances Germaine. Two of his elder brothers, Eric and Alan, served as Conservative Party members in the House of Commons, with Eric responsible for the creation of Aberdeen Airport. As a youth Kenneth read avidly the works of Rider Haggard and showed an interest in writing from the age of eight, although his teachers at Harrow School deemed him awkward and slow-witted. Despite this he wrote for The Harrovian with his friend Terence Rattigan, and proved himself a keen sportsman in cricket, association football, Eton Fives, and rackets. After gaining an upper second he received a scholarship to Trinity College, Cambridge in 1927 to read history. -

We'd Love to Hear from You!

CSAMA News 1 of 10 Having trouble reading this email? View it in your browser. The Creation Science Association for Mid-America Volume 31: (7) July 2014 "It is better to trust in the Lord than to put confidence in man." Psalm 118:8 “A Close Encounter on Friday the 13th” “What is Baraminology? Part I” We’d love to hear from you! If you have questions or comments, or if you have suggestions for making our newsletter better, please feel free to contact us. We’ll do our best to respond to every query. THANK YOU! (Use the editor link on the contact page at www.csama.org.) th by Douglas Roger Dexheimer Last August, I was contacted by Dr. Don DeYoung, president of The Creation Research Society (CRS) to make advance plans for the CRS annual board meeting to be held in Kansas City in June of 2014. We exchanged several communications in the ensuing months. Then, on Friday, the 13th of June, Kevin Anderson and I enjoyed an unusually “close encounter” with the board members of CRS here in Kansas City. CSAMA was invited to join the leadership of CRS for dinner and, later, coffee. We did a "show & tell" with the CRS leaders, first at Smokehouse BBQ, and, following that, at the Chase Suites Hotel a few miles north. http://www.csamanewsletter.org/archives/HTML/201407/index.html CSAMA News 2 of 10 The group of CRS board members we met. We described to them some of the activities that CSAMA conducts, such as our monthly meetings, creation safaris, and other specially requested events. -

From the Director's Desk: Successful Birth Was in 1974

Ozoo1o0LOONCAL OARDIN, ALINORM. nsTD 1878 NEWS NEWSLETTER, Z0OLOGICAL GARDEN, ALIPORE, HALF YEARLY, OCTOBER' 2018 TO MARCH 2019 In This Edition: News from Animal Section: New Born. Zoo Educational & Training activities: Zoo Festival. Range Officers' Training Programme from TNFA. Training of Forest Guards from Kharagpur. DVM Intern Training. World Wildlife Day. Training Programme on Capturing of Deer andd other Animals. The Litigon Cubanacan Training of Students from Adamas Law School. Animal Hybridization is an interesting and Others: fascinating concept of breeding in captivity. Visit from Vishakhapatnam Zoo. Although the animal hybrid has little or no Veterinary Activities. scientific value, it has tremendous exhibit Footfll. value. The experimental production of the Adoption. Litigon in this Zoo is the first of its kind in the School and College visits. world. The tigon was produced experimentally Retirements. by pairing a Bengal Tiger Munna' and a female African Lioness Munni'. The experiment began here in 1964, but the first From The Director's Desk: successful birth was in 1974. At birth, the We are happily presenting the fifth edition of Zoo Tigon resembles a tiger. On attaining maturity the stripes become faint and the body colour Newsletter, covering zoo news from October, 2018 to becomes tawny like the lions. It roars like a lion but for a shorter duration. March, 2019 of Zoological Garden, Alipore. In this The offspring of a lion and a female tigon is period we have achieved great success on captive called a litigon. The first litigon was produced breeding, educational activities and adoption. WNe by crossing Tigon 'Rudrani' with Debabrata', an Indian Lion, on March 7, 1979. -

Our Forests Are Facing Many Challenges Chestnut Blight

Our forests are facing many challenges Chestnut Blight How a single gene may help save the American Chestnut Current research team: Andy Newhouse (PhD grad student) Bill Powell (Director) Tyler Desmarais (MS grad student) Chuck Maynard (Co-Director Emeritus) Dakota Matthews (MS grad student) Linda McGuigan (TC lab Manager) Vern Coffey (MS grad student) Allison Oakes (Post doctoral fellow) Yoks Bathula (MS grad student) Kaitlin Breda (Admin assistant) Xueqing Xiong (MS grad student) Andrew Teller (Research Analyst) Erik Carlson (MS grad student) Hannah Pilkey (MS grad student) Many undergrads, high school students, collaborators, and many volunteers… The work of well over 100 people over the years 2 Wood products Agricultural Social/historical Chestnuts roasting on an open fire, The Christmas Song (by Torme and Wells in 1946) Keystone forest species (environmental benefits) Restoration of the American chestnut may benefit many endangered species Carolina northern flying squirrel More mast, more rodents, supports (Glaucomys sabrinus) American burying beetle Small Whorled Pogonia, Isotria medeoloides, Habitat promoted by American chestnut American chestnut was predominant before these species were endangered American chestnut tree had diverse forms photo in MI, 1980s by Alan D. Hart Forest History Society Chestnut blight in the U.S. Chestnut blight on related species: ~50 years spread through natural range Allegheny Chinkapin, C. pumila var. pumila killing ~4 billion American chestnut trees Ozark Chinquapin, C. pumila var. ozarkensis In 1904, discovery of chestnut blight in the Bronx Zoo (Merkel) Chestnut blight also survives on oaks Spring 1912 1912 photo of blight in NY After over a century of unsuccessful attempts at combating the blight, what are the choices for restoration? Chestnut f1 hybrids are OK for ornamentals or crops, Not for restoration Japanese chestnut C. -

A Mashup World

A Mashup World A Mashup World: Hybrids, Crossovers and Post-Reality By Irina Perianova A Mashup World: Hybrids, Cross-Overs and Post-Reality By Irina Perianova This book first published 2019 Cambridge Scholars Publishing Lady Stephenson Library, Newcastle upon Tyne, NE6 2PA, UK British Library Cataloguing in Publication Data A catalogue record for this book is available from the British Library Copyright © 2019 by Irina Perianova All rights for this book reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior permission of the copyright owner. ISBN (10): 1-5275-2134-6 ISBN (13): 978-1-5275-2134-6 TABLE OF CONTENTS List of Images ............................................................................................. ix Acknowledgements .................................................................................... xi Prologue .................................................................................................... xiii Introduction ................................................................................................. 1 Chapter One ............................................................................................... 13 Lifestyle, Science: Hybrids – Interchange 1. Introduction ...................................................................................... 13 2. Definitions ...................................................................................... -

The Spirit of the Polar Bear

QUARTERLY PUBLICATION OF THE EUROPEAN ASSOCIATION OF ZOOS AND AQUARIA SPRINGZ 2015 OO QUARIAISSUE 89 BEARING UP WELL A SUSTAINABLE POLAR BEAR POPULATION IN EAZA AND BEYOND One last chance BAER’S POCHARD ON THE BRINK OF EXTINCTION IN THE WILD 1 1 Outside the box WELFARE SCIENCE POINTS TO BETTER ANIMAL ENRICHMENT Contents Zooquaria Spring 2015 8 28 22 6 12 4 From the Director’s chair 16 Endangered animals Myfanwy Griffith on cross-cultural collaboration Last chance for Baer’s pochard, plus a look at the symbolism of the polar bear and the 5 Announcements white-backed woodpecker A round-up of news from EAZA 22 Animal husbandry 6 Births and hatchings The first workshop for the spotted hyena A selection of important new arrivals 24 Nutrition 8 Campaigns A review of the eighth European conference How to support the Pole to Pole petition 26 Exhibit design 9 Corporate members The thinking behind an exciting new Dutch Behind the scenes with animal transporter Ekipa aviary 10 EAZA Academy 28 Conservation Celebrating a year of growth and change Twenty years of sea turtle protection 12 Communications 29 Welfare The details of a Parisian advertising campaign Are we doing enough to enrich our animals? 14 Interview Meet Kira Husher of the IUCN Zooquaria EDITORIAL BOARD: EAZA Executive Office, PO Box 20164, 1000 HD Amsterdam, The Netherlands. Executive Director Myfanwy Griffith ([email protected]) Email: [email protected] ISSN 2210-3392 Managing Editor David Williams-Mitchell ([email protected]) Cover image: Polar Bear (Ursus maritimus), Daniel J. Cox/NaturalExposures.com Editor Malcolm Tait ([email protected]) For information on print subscriptions to Zooquaria visit: http://tinyurl.com/zooquaria. -

Automatic Meaning Discovery Using Google: the Data of Experiments with Wordnet Categories

Automatic Meaning Discovery Using Google: The Data of Experiments with WordNet Categories 100 total trials. 87% average accuracy 1 Table 1: WordNet Comparison Test Results Experiment code Accuracy Experiment code Accuracy actor-hyponynm 80% administrativedistr-hyponynm 100% africa-partmeronym 90% animalorder-hyponynm 95% arachnida-membermeronym 90% area-hyponynm 60% arthropodfamily-hyponynm 100% artifact-hyponynm 80% asteriddicotgenus-hyponynm 100% author-hyponynm 90% aware-attribute 100% beetle-hyponynm 95% birdgenus-hyponynm 100% bulblike-similarto 80% bulbousplant-hyponynm 90% cactaceae-membermeronym 100% cardinal-similarto 90% change-hyponym 85% change-hyponynm 80% changeintegrity-hyponym 90% changeofstate-hyponynm 95% city-hyponynm 85% clothes-hyponynm 100% collection-hyponynm 85% compositae-membermeronym 95% compound-hyponynm 90% concaveshape-hyponynm 55% condition-hyponynm 75% criticize-hyponym 70% crucifer-hyponynm 100% decorate-hyponym 90% dicotgenus-hyponynm 90% direction-hyponynm 85% disease-hyponynm 95% dog-hyponynm 100% employee-hyponynm 90% ending-hyponynm 50% england-partmeronym 100% enter-hyponym 65% equipment-hyponynm 75% european-hyponynm 90% fern-hyponynm 100% shgenus-hyponynm 95% france-partmeronym 95% friend-hyponynm 80% front-hyponynm 95% fungusgenus-hyponynm 100% furniture-hyponynm 90% grass-hyponynm 100% herb-hyponynm 95% hindudeity-hyponynm 90% inammation-hyponynm 95% injured-attribute 90% intellectual-hyponynm 85% inventor-hyponynm 100% kill-hyponym 75% laborer-hyponynm 70% layer-hyponynm 60% lepidoptera-membermeronym -

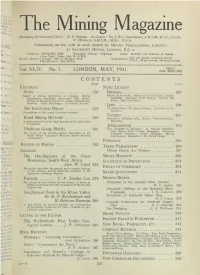

The Mining Magazine Managing Director and Editor : W .F

The Mining Magazine Managing Director and Editor : W .F . W h i t e . Assistants : S t . J. R .C . S h e p h e r d , A.R.S.M., D.I.C., F.G.S.; F. H i g h a m , A.R.S.M., M.Sc., F.G.S. Published on the 15th of each month by M ining Publications, L i m i t e d , at Salisbury House, London, E .C . 2 . Telephone : Metropolitan 8938. Telegraphic Address: Oligodase. Codes :M cNeill, both Editions, <5* Bentley. fNew York : Amer. Inst. M. & M.E. Branch Offices ? Chicago : 360, N. Michigan Blvd. Subscription {}.2Q-APe r« nnUm’ includit’g, P °staSe- , i.San Francisco : 681, Market. { U.S.A., $3 per annum, including postage. P R IGF Vol. XL1V. No. 5. LONDON, MAY, 1931 ONE SHILLING CONTENTS PAGE E d it o r ia l N e w s L e t t e r s Notes ........................................................ 258 B r i s b a n e .............................................................. 289 Safety in Mining Exhibition in Cologne ; British Mount Isa Activities ; Reduction of Coal Rates ; Palmer Engineering Standards Association Test Sieves ; Goldfield; Australian Gold Mining; Broken Hill Society of Chemical Industry’s Jubilee Celebrations; Mines ; New Gold Finds. Erratum ; Early Metallurgy ; Institution Awards. I p o h ...................................................................... 291 The Institution Dinner ........................ 258 Tin Restriction ; Tin Quota Scheme ; Evolution of the Industry. Proceedings at this annual function reviewed. T o ro n to .............................................................. 293 Rand Mining M ethods.......................... 259 Porcupine ; Kirkland Lake ; Rouyn ; Patricia District; A paper presented at the April meeting of the Institution Manitoba. -

To Consignors Hip Color & No

Index to Consignors Hip Color & No. Sex Name,Year Foaled Sire Dam Barn 37 Consigned by Allied Bloodstock, Agent I Broodmare 880 b. m. Provobay, 2000 Dynaformer Del Sovereign Barn 37 Consigned by Allied Bloodstock, Agent II Broodmare prospect 1053 b. m. Cuff Me, 2007 Officer She'sgotgoldfever Barn 37 Consigned by Allied Bloodstock, Agent III Yearling 1164 ch. c. unnamed, 2011 First Samurai Jill's Gem Barn 37 Consigned by Allied Bloodstock, Agent IV Racing or broodmare prospect 1022 dk. b./br. f. Blue Catch, 2008 Flatter Nabbed Barn 37 Consigned by Allied Bloodstock, Agent V Yearling 978 dk. b./br. c. unnamed, 2011 Old Fashioned With Hollandaise Barn 37 Consigned by Allied Bloodstock, Agent VI Yearling 992 dk. b./br. c. First Defender, 2011 First Defence Altos de Chavon Barn 37 Consigned by Allied Bloodstock, Agent VII Broodmare 890 gr/ro. m. Refrain, 1999 Unbridled's Song Inlaw Barn 37 Consigned by Allied Bloodstock, Agent for Don Ameche III and Hayden Noriega Yearling 1014 ch. c. unnamed, 2011 Any Given Saturday Babeinthewoods Barn 37 Consigned by Allied Bloodstock, Agent for Don Ameche III, Don Ameche Jr. and Prancing Horse Enterprise Yearling 1124 dk. b./br. c. unnamed, 2011 Harlan's Holiday Grandstone Barn 29 Consigned by Baccari Bloodstock LLC, Agent Racing or broodmare prospects 897 b. m. Rock Hard Baby, 2007 Rock Hard Ten Bully's Del Mar 916 b. f. Shesa Calendargirl, 2009 Pomeroy Memory Rock Barn 38 Consigned by Blackburn Farm (Michael T. Barnett), Agent Broodmares 873 b. m. Prado's Treasure, 2006 Yonaguska Madrid Lady 961 ch.