To Auto Scale Or Not to Auto Scale

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Pittsburgh I Remember Is a City That Will Use This Act of Hate to Build a Place of Love and Hope

THE PITTSBURGH I REMEMBER IS A CITY THAT WILL USE THIS ACT OF HATE TO BUILD A PLACE OF LOVE AND HOPE By Ron Sirak • @ronsirak October 28, 2018 I remember the old Pittsburgh, the grimy city of steel mills and pollution, a lunch pail-carrying kind of place where the sweat of the blast furnaces was washed away with a shot and a beer. I remember a fiercely proud area that viewed Eastern Pennsylvania as another state, perhaps even another country. Pittsburgh, Western Pennsylvania, even has its own language. Phrases such as: “What yinz doin’ after you red up the house?” clang on the outsider’s ear like the wail of a car alarm at 3 in the morning. My mother, who worked as a welder from 7 a.m. until 3:30 p.m. and then as a cleaning woman in an office from 6 p.m. to 9 p.m., said of Eastern Pa.: “They’re from the anthracite region; we’re from the bituminous region,” as if that was a stamp you’d get on your passport. My father drove a ladle crane in about every mill in Western Pa. and Eastern Ohio as the steel industry was dying. United Engineering. Mesta Machine. Youngstown Sheet & Tube, Sharon Steel, Jones & Laughlin and finally Pennsylvania Engineering in our hometown of New Castle, 50 miles from Pittsburgh. That’s where he was working when he died at the age of 45, the life sucked from him by relentlessly hard work. When I was a kid the Interstate hadn’t been built yet and the drive to Pittsburgh to see a Pirates game at Forbes Field was an arduous affair, following a windy road that that traced the river. -

“Battle of the Pronouns” 72 Texas Linguistics Forum 55:72-79 Pr

72 What’s up with y’all?: Sociopragmatic versatility in the “battle of the pronouns” Shawn Warner-Garcia University of California, Santa Barbara 1. Introduction There has been much debate – mostly of a historical or grammatical nature - over the development and use of the second-person plural (2PPL) pronominal form y’all in American English. Accurate historical documentation of the form is scant, resulting in a wide range of theories regarding its origin, with some authors proposing that y’all is a calque of a creole or African pronoun system (Lipski, 1993) and others arguing that it is an importation from the Scots-Irish dialect (Montgomery 1992). Grammatically, it is generally accepted that y’all is an analogous suppletion of the Early Modern English pronoun system (Maynor, 2000; Tillery, Wilke, and Bailey, 2000), though it is certainly not the only form that fills the 2PPL pronoun slot in modern-day American English. While you is the accepted singular second-person form, it also and often functions as an implicit plural. Other pronouns in American English comparable to y’all include yinz (Johnstone, 2001), you-uns (Montgomery, 2002; Donahue 2007), and you guys (Maynor, 2000). These forms, along with y’all, have been shown to index certain aspects of identity such as social class, region, or ethnicity. However, the discourse-pragmatic functions of these forms have not been investigated. In this paper, I show how y’all in particular serves distinct sociopragmatic purposes by indexing affective stances among young Texas speakers. I analyze a corpus of interactional video data in order to determine the state of y’all in what has been called the “battle of the pronouns” (Maynor, 2000). -

Potentiation of Neuromuscular Transmission As a Therapeutic Strategy to Improve Motor Function in Spinal Muscular Atrophy By

Title page Potentiation of neuromuscular transmission as a therapeutic strategy to improve motor function in spinal muscular atrophy by Kristine Susan Ojala B.A. Psychology, California State University, Long Beach, 2011 Submitted to the Graduate Faculty of the Dietrich School of Arts & Sciences in partial fulfillment of the requirements for the degree of Doctor of Philosophy University of Pittsburgh 2020 Committee page UNIVERSITY OF PITTSBURGH DIETRICH SCHOOL OF ARTS & SCIENCES This dissertation was presented by Kristine Susan Ojala It was defended on July 28, 2020 and approved by Anne-Marie Oswald, Associate Professor, University of Pittsburgh Jon W. Johnson, Professor, Department of Neuroscience, University of Pittsburgh Zachary P. Wills, Assistant Professor, Department of Neurobiology, University of Pittsburgh Christopher Donnelly, Assistant Professor, Department of Neuroscience, University of Pittsburgh Christine J. DiDonato, Associate Professor, Department of Pediatrics, Northwestern University Dissertation Director: Stephen D. Meriney, Professor, Department of Neuroscience, University of Pittsburgh ii Copyright © by Kristine Susan Ojala 2020 iii Abstract Potentiation of neuromuscular transmission as a therapeutic strategy to improve motor function in spinal muscular atrophy Kristine Ojala, PhD University of Pittsburgh, 2020 Spinal Muscular Atrophy (SMA) is a genetic disease caused by a null mutation of the SMN1 gene. Loss of SMN1 results in low levels of a protein called Survival of Motor Neuron (SMN), which is a protein that is critical for neuromuscular development. The first FDA-approved treatment for SMA utilizes intrathecal injections of an antisense oligonucleotide (ASO) to increase expression of SMN. Despite the immense excitement for this treatment, however, preliminary clinical observations and studies in SMA mouse models indicate persistent neuromuscular weakness, which reveals the need for an additional symptomatic treatment that targets neuromuscular function. -

'Hoods: Place Branding and the Reconstruction of Identity in Rick Sebak's Pittsburgh Documentaries

HOUSES, HOT DOGS, AND 'HOODS: PLACE BRANDING AND THE RECONSTRUCTION OF IDENTITY IN RICK SEBAK'S PITTSBURGH DOCUMENTARIES Bryan James McGeary A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY December 2012 Committee: Ellen Berry, Advisor Ellen W. Gorsevski Graduate Faculty Representative Cynthia Baron Scott Magelssen © 2012 Bryan James McGeary All Rights Reserved iii ABSTRACT Ellen Berry, Advisor This project investigates the implementation of place branding theory via documentary filmmaking focused closely on the local characteristics of a place/region. Employing a close reading of WQED filmmaker Rick Sebak’s Pittsburgh History Series focused upon recurrent themes about aspects of Pittsburgh’s unique identity framed in relation to rhetorical approach and documentary techniques, while also noting aspects left out of Sebak’s films, this dissertation demonstrates the progressive potential of publicly funded documentary filmmaking to enable the residents of a given place to rebrand their identity and foster revitalization, independent of the expectations of city planners or corporate sponsors, and without sacrificing the diversity of experiences that give that place its unique character. As a whole, Sebak’s body of work constructs a particular narrative of Western Pennsylvania’s identity that revamps some of the preexisting notions about that identity. As a project of self-definition and self-understanding, the Pittsburgh History Series provides the local populace with some agency in recreating its image, rather than being branded from the outside. The success of this place branding approach to documentary filmmaking for Sebak and Western Pennsylvania suggests that other cities and regions could use it as a model to take greater control of their identities and cultivate renewal. -

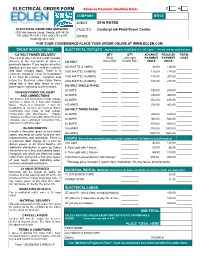

ELECTRICAL ORDER FORM Advance Payment Deadline Date: E M COMPANY: BTH

ELECTRICAL ORDER FORM Advance Payment Deadline Date: E M COMPANY: BTH # EVENT: 2016 RATES ELECTRICAL EXHIBITION SERVICES FACILITY: CenturyLink Field Event Center 5931 4th Avenue South, Seattle, WA 98108 Ph: (206) 781-2411 Fax: (206) 781-2270 DATES: [email protected] FOR YOUR CONVENIENCE PLACE YOUR ORDER ONLINE AT WWW.EDLEN.COM ORDER INSTRUCTIONS ELECTRICAL OUTLETS Approximately 120V/208V A.C. 60 Cycle - Prices are for entire event 120 VOLT POWER DELIVERY QTY QTY ADVANCE REGULAR TOTAL The cost of one 120-Volt outlet includes Show 24hrs/day PAYMENT PAYMENT COST delivery to the rear-center of inline or 120 VOLT Hours Only Double Rate PRICE PRICE peninsula booths. If you require an outlet distributed to any other location, material 500 WATTS (5 AMPS) 84.00 126.00 and labor charges apply. There is a 1000 WATTS (10 AMPS) 116.00 174.00 minimum charge of 1 hour for installation & 1/2 hour for removal. Complete and 1500 WATTS (15 AMPS) 138.00 207.00 return the Electrical Labor Order Form 2000 WATTS (20 AMPS) 158.00 237.00 along with a floor plan layout of your booth space indicating outlet locations. 208 VOLT SINGLE PHASE 20 AMPS 190.00 285.00 208/480V POWER DELIVERY 30 AMPS 240.00 360.00 AND CONNECTIONS The delivery and connection of high voltage 60 AMPS 402.00 630.00 services is done on a time and material basis. There is a minimum 1 hour for 100 AMPS 534.00 802.00 installation & 1/2 hour for removal. Edlen 208 VOLT THREE PHASE electricians must make all high voltage connections and disconnects. -

Youtube Yinzers: Stancetaking and the Performance of 'Pittsburghese' Scott F

To appear in Identity and Dialect Performance, edited by Reem Bassiouney. Routledge. Youtube Yinzers: Stancetaking and the performance of 'Pittsburghese' Scott F. Kiesling Performance, stance, class, gender In 1966 William Labov produced a work on the pronunciation of /r/ in New York City department stores that was, to some extent, ethnographic: Labov was a participant observer in asking for items on a particular floor of a department store, and he was familiar with the conventions necessary to find a clerk and get their attention. After a productive afternoon of finding shoes, and with the support of recordings of interviews from the Lower East Side, Labov was able to show that working-class folks tend to vocalize their /r/s more than middle-class folks in New York City. One reason Labov’s paper is foundational to the sociolinguistic canon is that it seems clear that Labov was able to ‘control’ for what was happening: Everyone he talked to was a clerk talking to a customer and was giving directions to shoes, or shirts, or some other item sold by the store. While this equivalency seems on the face of it to be an unassailable fact, the question remains whether every clerk was approaching the task in the same way: How did these clerks relate to the relatively young man in a fairly conservative but firmly middle-class suit and tie? Was he treated with the deference due to the king, as the customer should be? Or was he treated merely as some dude asking a question (perhaps a question that could have been answered by checking a Kiesling Youtube Yinzers 2 directory)? Or, was he an incidental interaction that required little attention? Or did he ask a question the clerk was unsure of? These are all possible variations of stance that a salesclerk could take when answering such a question. -

Name Pittsburgh Resident Action Comment Patrick Beckhorn

Name Pittsburgh Action Comment Resident Patrick Beckhorn Yes Remove Columbus was a delusional racist slave owner who caused genocide, so why exalt him by erecting and protecting a large public monument to him? One would only do that if they were ignorant of history. Well, they might do it if they were ignorant of history, but they would also do it if they were a racist piece of shit... Rohit Kartan Yes Remove Emilia Farmerie-Rishel No Remove Ty Hinkle Yes Remove Karsen Shoger Yes Remove The Columbus statue glorifies the history of colonialism, which caused extreme amounts of harm to native peoples in America as well as the environment in America. It is time to let go of these relics that glorify a destructive history, filled with violence and death, as many other cities across America are doing. Keeping the Columbus statue broadcasts a clear message exalting the history of lack of care for humanity of colonists and imperialists. If we want to stand by the ideals of equity and social justice, removing this symbol of inequity and harm from our city. Daniel Galvin Yes Remove The Christopher Columbus statue in Shenley Park should be immediately removed with all expediency - which is why the process of replacement or alternation, and accompanying delays, is unacceptable as well. The fact that it has remained there this long is an embarrassment to Pittsburgh, and an insult to the native people whose land this was for thousands of years. For native people - including friends of mine whose viewpoints I greatly value - this is tantamount to a statue of Hitler in a Jewish neighborhood, or a monument commemorating the cross-Atlantic slave trade being erected in a black neighborhood. -

GIS Applications in Studying Dialect of Western Pennsylvania Ayad Y

Mapping Dialect: GIS Applications in Studying Dialect of Western Pennsylvania Ayad Y. and Luthin H Mapping Dialect: GIS Applications in Studying Dialect of Western Pennsylvania Ayad, Y. ([email protected]) Luthin, H. ([email protected]) Clarion University of Pennsylvania Abstract Historically, most surveys of American dialect have focused on large‐scale regions of the country: the Linguistic Atlas of New England, the Linguistic Atlas of the Middle and South Atlantic States, and the Linguistic Atlas of the Gulf States, for example. However, even in a region as small and relatively homogeneous as western Pennsylvania, there is measurable diversity of language. This paper describes a dialect survey, in progress for the last 10 years that focuses precisely on dialect variation within western Pennsylvania. In recent years, the researchers have tapped the potential of GIS techniques to help make these distributions visible and assess the underlying speech patterns of the region. This paper is an attempt in this direction. It demonstrates the methods and techniques used to manipulate the collected data and the process of creating visual cartographic representations of the results. Introduction The essence of dialectology is the study of variation in language. Variation can be found at every level of language, from the nuances of pronunciation and the details of verb conjugations, to the structure of sentences, the content of the vocabulary, and patterns of interaction between individuals. The field of dialectology today is pursued down all of these avenues and more, and from a multitude of perspectives (social, anthropological, diachronic, synchronic, longitudinal, qualitative, quantitative, statistical), but it had its origins in the study of regional variation—the geography of language—and cartography was at the heart of its enterprise. -

Yinzcam: Experiences with In-Venue Mobile Video and Replays Nathan D

YinzCam: Experiences with In-Venue Mobile Video and Replays Nathan D. Mickulicz, Priya Narasimhan, and Rajeev Gandhi, YinzCam, Inc. and Carnegie Mellon University https://www.usenix.org/conference/lisa13/technical-sessions/papers/mickulicz This paper is included in the Proceedings of the 27th Large Installation System Administration Conference (LISA ’13). November 3–8, 2013 • Washington, D.C., USA ISBN 978-1-931971-05-8 Open access to the Proceedings of the 27th Large Installation System Administration Conference (LISA ’13) is sponsored by USENIX. YinzCam: Experiences with In-Venue Mobile Video and Replays Nathan D. Mickulicz, Priya Narasimhan, Rajeev Gandhi YinzCam, Inc. / Carnegie Mellon University Pittsburgh, PA [email protected], [email protected], [email protected] [email protected], [email protected], [email protected] Abstract YinzCam allows sport fans inside NFL/NHL/NBA venues to enjoy replays and live-camera angles from different per- spectives, on their smartphones. We describe the evolu- tion of the system infrastructure, starting from the initial in- stallation in 2010 at one venue, to its use across a dozen venues today. We address the challenges of scaling the system through a combination of techniques, including dis- tributed monitoring, remote administration, and automated replay-generation. In particular, we take an in-depth look at our unique automated replay-generation, including the dash- board, the remote management, the remote administration, and the resulting efficiency, using data from a 2013 NBA Playoffs game. Figure 1: Fan experience at the original Mellon Arena deployment for YinzCam, May 2010, showing three dif- ferent smartphones displaying three different live cam- 1 Introduction era angles for a Pittsburgh Penguins home game. -

Forms and Functions of Second Person Plural Forms in World Englishes: a Corpus-Based Study

Forms and Functions of Second Person Plural Forms in World Englishes: a corpus-based Study Liviana Galiano This dissertation is submitted for the degree of Doctor of Philosophy January 2020 Department of Linguistics and English Language 1 Declaration This thesis has not been submitted in support of an application for another degree at this or any other university. It is the result of my own work and includes nothing that is the outcome of work done in collaboration except where specifically indicated. Many of the ideas in this thesis were the product of discussion with my supervisor Willem Hollmann. Liviana Galiano Lancaster University, UK 2 Abstract The present work is a corpus-based research on the plural forms of the second person pronoun you (2PL forms henceforth) which focuses on the similarities and differences among twenty varieties of English. The corpus (GloWbe) contains 1.9 billion words collected on the web in 2012. The 2PL forms I have analysed are the result of both morphological and analytic strategies of number marking: yous(e), yi(s/z), yus, you guys, you all and y(')all, you two, you three, you four, you ones and y(ou)'uns/yin(s/z), you lot and other you + NP-PL expressions. The aim of my research is provide an empirically informed description of the forms and functions of 2PL forms in contemporary English. This is done by combining the analysis of corpus data with the literature on 2PL forms as well as the relevant theories on language change. The results show that there are two main geographically-related trends in the use of 2PL forms: analytic strategies are preferred in the US, whereas morphological strategies are preferred in the European and Australian varieties of English. -

Варіативність Мови Та Мовлення Мешканців М. Піттсбург На Прикладі Вживання Лексем «Yinz» ТА «YINZER»

ISSN 2222-551Х. ВІСНИК ДНІПРОПЕТРОВСЬКОГО УНІВЕРСИТЕТУ ІМЕНІ АЛЬФРЕДА НОБЕЛЯ. Серія «ФІЛОЛОГІЧНІ НАУКИ». 2017. № 1 (13) DOI 10.32342/2523-4463-2017-0-13-184-189 УДК 81’1 К.В. ВУКОЛОВА, аспірант кафедри англійської філології та перекладу Університету імені Альфреда Нобеля (м. Дніпро) ВАРІАТИВНІСТЬ МОВИ ТА МОВЛЕННЯ МЕШКАНЦІВ м. ПІТТСБУРГ НА ПРИКЛАДІ ВЖИВАННЯ ЛЕКСЕМ «yInz» ТА «yInzER» У статті йдеться про найпопулярнішу лексему піттсбурзького діалекту (Філадельфія, США) yinz та її варіативність у рамках діалекту. Відображено історію становлення цієї лексичної одиниці від ви- никнення до сьогодення, а також продемонстровано її функціонування на сучасних прикладах мов- лення мешканців м. Піттсбург. Ключові слова: yinz, yinzer, лексична одиниця, займенник, реклама та ЗМІ, піттсбурзький діалект. ова та мовлення мешканців м. Піттсбург, як і мовлення будь-яких мовців, характеризується певною варіативністю. Залежно від ситуації та особливостей Мстановища мовця слова можуть вживатися в різних значеннях, з різною часто- тою. Носії піттсбурзького діалекту створили низку унікальних слів-діалектизмів, поширених переважно в Піттсбурзі та околицях міста, що умовно називаються «піттсбургізмами» і які теж набувають різних значень залежно від контексту. Найпопулярнішими піттсбурзькими діалектизмами є лексеми yinz (Ви − 2 особа множини) і yinzer (мешканець м. Піттсбург). Саме ці слова стали символами міста та його мешканців, тому доцільно проаналізувати варіативність усного та писемного мовлення мешканців Піттсбурга на їх прикладах. Займенник другої особи множини yinz перебував у семіотичному ресурсі в Піттсбурзі з часів колоніальної епохи, коли його привезли в регіон шотландські та ірландські емігранти. Основна функція його використання відтоді майже не змінилася і полягає у зверненні до двох або більшої кількості людей. Водночас протягом XX ст. і на початку XXI ст. займен- ник yinz набув нових значень і таким чином став одним з найчастіше цитованих прикладів пістубрігізмів та найпопулярніших позначень місцевості Піттсбурга. -

Noun Phrases

Noun Phrases February 13, 2017 Next assignments • Hundred noun phrases • Hundred sentences • Morphological analyzer The Universe Why does syntax exist? Around seven seconds of puffed air Communication between sentient beings puts a tiny piece of the universe in the common ground with the intent of getting the pictures in your heads to be the same. Search google images for “common ground” https://www.google.com/url?sa=i&rct=j&q=&esrc=s&source=images&cd=&cad=rja&uact=8&ved=0ahUKEwiI78KNsaTRAhUBWSYKHeprCxAQjRwIBw&url =http%3A%2F%2Fwww.stefanomastrogiacomo.info%2Fcommon-ground%2F&psig=AFQjCNGIgEKtGzB4SqzYI6iqQi7QyGEb8Q&ust=1483478189655154 Metalinguistic Awareness • Being aware of what is happening in languages so that you can do it differently from English or other language you know Meanings that are expressed in noun phrases • Number (singular/plural) • Gender/noun class • Person • Definiteness • Cardinality • Ordinality • Classifiers • Possession • Quantification Additional things that are in noun phrases • Relative clauses – The book that I read – The book that annoyed you • Prepositional phrases – The book on the table – The book about linguistics • Adjectives – The blue book – The book (that is) bluer than the sky – The bird light enough to fly Note • We are not talking about case (nominative, accusative, ergative) yet because that is about the role of the noun phrase in the sentence (subject, object). • But we will talk about two cases that are important in noun phrases: genitive and possessive. Kinds of nouns • Common nouns • Proper nouns • Pronouns – Anaphoric – Demonstrative Grammatical Number • https://en.wikipedia.org/wiki/Grammatical_number • This article covers number in noun phrases and in agreement between nouns and verbs.