An Optimal Cloud Based Road Traffic Management System with Novel VM Machine Migration Technique

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


All India Council for Technical Education (A Statutory Body Under Ministry of HRD, Govt

All India Council for Technical Education (A Statutory body under Ministry of HRD, Govt. of India) Nelson Mandela Marg,Vasant Kunj, New Delhi-110070 Website: www.aicte-india.org APPROVAL PROCESS 2018-19 Extension of Approval (EoA) F.No. South-Central/1-3513847155/2018/EOA Date: 16-Apr-2018 To, The Principal Secretary (Higher Education) Govt. of Telangana, D Block, 117 Telangana Secretariat, Hyderabad Sub: Extension of Approval for the Academic Year 2018-19 Ref: Application of the Institution for Extension of approval for the Academic Year 2018-19 Sir/Madam, In terms of the provisions under the All India Council for Technical Education (Grant of Approvals for Technical Institutions) Regulations 2016 notified by the Council vide notification number F.No.AB/AICTE/REG/2016 dated 30/11/2016 and amended on December 5, 2017 and norms standards, procedures and conditions prescribed by the Council from time to time, I am directed to convey the approval to Permanent Id 1-5041803 Application Id 1-3513847155 Name of the Institute HOLY MARY INSTITUTE OF Name of the Society/Trust HOLY TRINTITY EDUCATIONAL TECHNOLOGY & SCIENCE SOCIETY Institute Address BOGARAM VILLAGE Society/Trust Address 8-2-248/A,G-2,MAHARSHI KEESARA MANADAL HOUSE,ROAD NO-3,BANZARA BOGARAM HILLS,HYDERABAD- KEESARA MANDA 34,HYDERABAD,HYDERABAD,And R R DISTRICT hra Pradesh,500034 ANDHRA PRADESH 501301, BOGARAM, RANGAREDDI, Telangana, 501301 Institute Type Unaided - Private Region South-Central Opted for Change from No Change from Women to Co-Ed NA Women to Co-Ed and vice and vice versa -

A Case Study from Ramannapeta Mandal, Nalgonda, Telangana, India: Fluoride Contamination of Ground Water

A Case Study From Ramannapeta Mandal, Nalgonda, Telangana, India: Fluoride Contamination of Ground Water Dr.G.Machender Ganaboina ( [email protected] ) Mahatma Gandhi University Narsimha Kota Osmania University Research Article Keywords: Fluoride Contamination, Groundwater, Remote sensing, Ramannapeta Mandal Posted Date: July 30th, 2021 DOI: https://doi.org/10.21203/rs.3.rs-681568/v1 License: This work is licensed under a Creative Commons Attribution 4.0 International License. Abstract The groundwater quality evaluation for fluoride element was studied in Ramannapeta Mandal, Nalgonda District, and Telangana State, India. The water samples were collected in pre and post monsoon seasons in the year of 2015-2016 from hand pumps bore wells or dug wells in the villages of Ramannapeta Mandal. The collected water samples were analyzed within a week. The Spatial distributions of fluoride maps were prepared with the help of the Remote Sensing Imaginary (RSI) and Geographical Information System (GIS) techniques. The range of fluoride in the study area varied from 0.6 to 5.6 ppm whereas the maximum permissible limit in drinking water is 1.5 ppm (As per Bureau of Indian Standard (BIS) guideline-IS: 10500: 1991). The high contamination 4.0- 5.5 ppm of fluoride in drinking water was observed in Siripuram, Dubbaka villages. During the study, it was found that the most of villages in Ramannapeta Mandal are affected with high fluoride content in drinking water in the range of 1.5-3.0 ppm. Nalgonda district including Ramannapeta Mandal is underlain by different rocks such as granites (80%), gneisses, dolerite, dykes (10%), older metamorphic and intrusive (10%). -

1. Agriculture

1. AGRICULTURE • Agriculture sector plays a pivotal role in meeting the dietary requirements of one of the most densely populated districts - Medchal – Malkajgiri. • Total Agriculture land in the District (as per RB record) : 70000 Ac Net cultivated area : 26807 Ac • Predominant crops: Paddy (47% of cultivated area), Maize (11%), Fodder (6%), Redgram (3%), Cotton (2%), Other food crops (30%) of cultivated area. • Soils : Red soil is predominant (70%), Black soils (30%) • Source of irrigation: Bore wells : 14000 Ac Minor irrigation tanks: 2600 Ac Musi : 3000 Ac • Rainfall Normal :711 mm Actual: 813.7mm % Deviation: 14 Normal No .of Rainy Days : 59 • Market facility : Through procurement centres, AMC Medchal, Pvt traders, Local markets • Agri Input supply : Subsidy seed is distributed to farmers through online portal (OSSDS) at 7 notified sale points (3PACS, 3FACS, 1 MGC), Major sales are through private dealers(22) • Fertilizer supply through PACS, FSCS, Pvt dealers (42). • Rythu Samanvaya Samithies: 101 GRSS, 6MRSS 1ZRSS: Total 108 Flagship programmes / Schemes implemented during the Yr. 2019-20 Sl. Achievem % Scheme Target Remarks No ent Ach I FLAG SHIP PROG. Bank details of 27451farmers (except give it up, not 33334 23951 Far 71% interested cases) updated and Rythu Bandhu 1 Farmers 25.35 sent to C&DA. However Kharif 2019 34.85 Crores Crores 73% Amount credited to 23951 accounts by C&DA to the extent of budget released. 21307 Far 18327 86 % Rabi 2019- 20 Under progress at C&DA level 23.53 Crore 13.69Cr 56% 2 Rythu Bima 39640 39640 a -

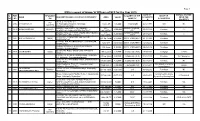

IPR in Respect of Group 'A' Officers of NFC for the Year 2019

Page 1 IPR in respect of Group 'A' Officers of NFC for the Year 2019 DATE OF ANNUAL INCOME SL EMP DESIGNATI ACQUIRED IN THE MODE OF NAME DESCRIPTION AND LOCATION OF PROPERTY AREA VALUE ACQUISITIO FROM THE NO NO ON NAME OF ACQUISITION N PROPERTY H.No. 2-171, St.No 3, AO- 1 2985 T PADMAVATHI Sri Padmapriya Nilayam, Vaninagar, 1354.5 Sft 1,05,000 T.Padmavathi 05-07-1991 Gift Nil II(ACCTS) Malkajgiri,Hyderabad HOUSE, H.NO. C2-317, NFC NAGAR, 283 BADER HUSSAIN, 2 3219 BADER HUSSAIN FMAN(C) 10,00,000 05-06-1992 Purchase 0 GHATKESAR, RANGA REDDY DISTRICT SQ.YARDS SELF HOUSE, H.NO. 21-4-898/5, GULAB SINGH BOWLI, 215 BADER HUSSAIN, 45,00,000 28-08-2015 Purchase 0 HYDERABAD, TENLANGANA SQ.YARDS SELF 24-143/28/2, VISHNUPURI, MALKAJGIRI, 3 3607 M N V VISWANATH SO(G) 293 Sq. Yards 5,00,000 M.N.V. VISWANATH 26-10-1988 Purchase HYDERABAD-47 FLAT NO. 404, RAJ RESIDENCY, VIDYANAGAR, 1280 SFT 25,30,000 M.N.V. VISWANATH 12-03-2012 Purchase HYDERABAD SURVEY 475/R ETC, BASWAPUR PULKAL 4.35 Acres 5,85,000 M.N.V. VISWANATH 05-06-2018 Purchase MANDAL, RANGA REDDY B2-563,NFC Nagar, Ghatakeswar, RR District 4 3787 J GURUNADH SO(G) 250 sq yards 1,50,000 J Gurunadh (Self) 17-05-1991 mortigage 12000 (House) Plat No.113, SR-94, Seetharam Nagar, RK puram 220 sq. yards 3,50,000 J.Gurunadh (Self) 01-02-1995 Purchase Self Occupied PO, Malkajgiri, Secunderabad(house) Sevey No.157, Ankushapur village, Ghatakeswar,RR 686 Sq yards 4,92,000 J.Gurunadh (Self) 05-03-2010 Purchase Nil District (Plot) Naidu Residency, F.No 201, Dayanand Nagar, Mortigaged to reliance 1860 Sq feet 27,00,000 J Gurunadh (self) 21-03-2011 48000 Malkajgiri, Hyderabad (Flat) home finance YALAVARTHI Plot No.15, bit-1, Vivekananda Nagar, Kukatpally, 5 3857 FMAN(C) 267 sq. -

All India Council for Technical Education (A Statutory Body Under Ministry of HRD, Govt

All India Council for Technical Education (A Statutory body under Ministry of HRD, Govt. of India) Nelson Mandela Marg,Vasant Kunj, New Delhi-110070 Website: www.aicte-india.org APPROVAL PROCESS 2020-21 Extension of Approval (EoA) - Corrigendum - F.No. South-Central/1-7002529383/2020/EOA/ Corrigendum-3 Date:13-Oct-2020 To, The Principal Secretary (Higher Education) Govt. of Telangana, D Block, 117 Telangana Secretariat, Hyderabad Sub: Extension of Approval for the Academic Year 2020-21 Ref: Application of the Institution for Extension of approval for the Academic Year 2020-21 EOA Issued on F.No. South-Central/1- 15-Jun-2020 7002529383/2020/EOA Corrigendum 1 F.No. South-Central/1- 16-Jul-2020 7002529383/2020/EOA/Corrigendum-1 Corrigendum 2 F.No. South-Central/1- 09-Oct-2020 7002529383/2020/EOA/Corrigendum-2 Corrigendum 3 F.No. South-Central/1- 13-Oct-2020 7002529383/2020/EOA/Corrigendum-3 Ref: Application of the Institution for Extension of Approval for the Academic Year 2020-21 Sir/Madam, In terms of the provisions under the All India Council for Technical Education (Grant of Approvals for Technical Institutions) Regulations 2020 notified by the Council vide notification number F.No. AB/AICTE/REG/2020 dated 4th February 2020 and norms standards, procedures and conditions prescribed by the Council from time to time, I am directed to convey the approval to Permanent Id 1-5041803 Application Id 1-7002529383 HOLY MARY INSTITUTE OF HOLY TRINTITY EDUCATIONAL Name of the Institution Name of the Society/Trust TECHNOLOGY & SCIENCE SOCIETY BOGARAM -

Hyderabad's Pharmaceutical Pollution Crisis

Hyderabad’s pharmaceutical pollution crisis Hyderabad’s pharmaceutical pollution crisis: Heavy metal and solvent contamination at factories in a major Indian drug manufacturing hub Hyderabad’s pharmaceutical pollution crisis 2 3 Hyderabad’s pharmaceutical pollution crisis Executive Summary 5 1. Introduction 11 A. Background to the report 11 Sustainable Finance at Nordea Nordea is the largest wealth manager in the Nordics with approximately 300 billion euro in Asset under B. The Indian pharma industry 13 Management and 11 million customers across Nordea Group. Nordea’s sustainable finance approach is 2. The situation in Hyderabad 15 about integrating sustainability into all our business activities and products. A. A city blighted by pollution with inadequate monitoring and enforcement 15 Driving change through engagements Critical water pollution 15 Nordea engages with companies and other stakeholders, including policy makers, on different themes, individually or through collaborative initiatives. We believe that company engagement is key in order Soil and air pollution 19 for us to be a responsible owner and drive change within an industry or market. Illegal waste dumping 20 Water risk is one of our focus areas within stewardship and engagement. In 2016 the World Economic Forum identified water crises as one of the top five global risks of highest concern over the next 10 Pollution Index watered down 21 years. The water issue is not only a present challenge for countries, but also a risk and opportunity for Pharmaceutical pollution as a driver of AMR 23 the entities in which we invest, and ultimately the assets we manage. B. 
The industry response 25 Our decision to engage the pharmaceutical industry sector on water pollution in India is an example of how we as a financial actor practice responsible ownership to move the industry towards a more Industry Roadmap for Progress on Combating Antimicrobial Resistance 25 sustainable management and to protect our customers’ long-term assets. -

1 1 Curriculum Vitae 1. Name Dr. Vaddiraju Anil Kumar 2

1 Curriculum Vitae 1. Name Dr. Vaddiraju Anil Kumar 2. Designation and Affiliation: Associate Professor and Head of the Centre, Centre for Political Institutions, Governance and Development (CPIGD), Institute for Social and Economic Change (ISEC), Bengaluru-560072, Karnataka, India. Web address: www.isec.ac.in 3. Date of Birth: 26-11-1964 4. Father's Name: Late Vaddiraju Radha Kishan Rao 6. Address for Correspondence: Vaddiraju Anil Kumar Associate Professor and Head, Centre for Political Institutions Governance and Development Institute for Social and Economic Change, Nagarabhavi, Bengaluru-560072, Karnataka. E-mail: [email protected] [email protected] Phone: (Office) 080 – 23215519 7. Marital Status: Married and with one child 8. Educational Qualifications: Qualification Institution Year Class %of Marks Ph. D. University of Delhi 2004 Doctoral Fellowship ICSSR (Indian Council Open Doctoral Fellow of Social Science between 1993-97 Research) M. Phil. University of Delhi 1991 I 66.6% M.A. (Political Science) JNU/CPS, New Delhi 1988 II B+ (FGPA '6'=60%) ------------------------------------------------------------------------------------------------------------ ------------------- 1 2 9. Research degrees: PhD on: Capitalist Development and Agrarian Politics in Andhra Pradesh, 1960-1990. Research involved intensive empirical case study of a District within Andhra Pradesh namely, Nalgonda, which had regional diversity within it. Capitalist development and grassroots politics were studied in three Mandals of the district. The three Mandals respectively were from irrigated, semi-irrigated and totally unirrigated parts of the district. The phenomena of freedom of wage labour, tenancy and intra-rural circulation of labour were examined in detail. 
It was found that between 1960-1990 backward castes emerged in semi-irrigated region; in irrigated region semi-feudal landlords transformed themselves into capitalist landlords; while in the totally unirrigated region ecologically induced proletarianization and out migration of peasantry continues. -

Collegecode Collegename Address District 8P a a R

COLLEGECODE COLLEGENAME ADDRESS DISTRICT vyasapuri, bandlaguda, post keshavgiri,, hyderabad, 8P A A R Mahaveer Engineering College bandlaguda, HYDERABAD, Telangana, 500005 HYDERABAD Survey No : 175 & 181, Ankushapur (V), Ghatkesar (M) India, Ankushapur (V), Ghatkesar, MEDCHAL , Telangana, AG ACE Engineering College 501301 MEDCHAL BATASINGARAM VILLAGE ,MOUNT OPERA ARJUN COLLEGE OF TECHNOLOGY COMPOUND, NAER RAMOJI FILIM CITY , HYDERABAD, W8 & SCEINCES HAYATH NAGAR, RANGA REDDY, Telangana, 501512 RANGA REDDY ASHOKA Institute of Engineering & Malkapur Village, Choutuppal Mandal, , Malkapur, 5T Technology Choutuppal, NALGONDA, Telangana, 508252 NALGONDA GUNTHAPALLY(V) , HAYATHNAGAR(M), RANGA AVANTHI INSTITUTE OF REDDY DIST. , HYDERABAD, ABDULLAPURMET, Q6 ENGINEERING & TECHNOLOGY RANGA REDDY, Telangana, 501512 RANGA REDDY AVANTHI'S SCIENTIFIC GUNTHAPALLY(v) , HAYATHNAGAR(M),R.R DIST , TECHNOLOGICAL & RESEARCH HYDERABAD, HAYATHNAGAR, RANGA REDDY, PT ACADEMY Telangana, 501512 RANGA REDDY Koheda Road, Ibrahimpatnam(M), R.R.dist, Ramdaspally AVN INSTITUTE OF ENGINEERING (Post), Ibrahimpatnam, RANGA REDDY, Telangana, 5U AND TECHNOLOGY 501510 RANGA REDDY Abdul Kalam Institute of Technological Vepalagada, Kothagudem, SUJATHANAGAR, BHADRADRI EK Sciences BHADRADRI KOTHAGUDEM, Telangana, 507120 KOTHAGUDEM Piglipur,Batasingaram(Panchayath),HayatNagar(Md), Annamacharya Institute of Technology Hyderabad, HAYATHNAGAR, RANGA REDDY, T8 & Sciences Telangana, 501512 RANGA REDDY KSP ROAD. PALONCHA-507115, KHAMMAM (Dt) AP, PALONCHA, PALONCHA, BHADRADRI KOTHAGUDEM, -

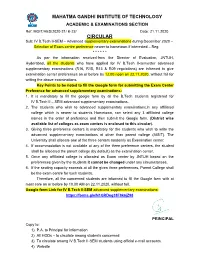

MAHATMA GANDHI INSTITUTE of TECHNOLOGY ACADEMIC & EXAMINATIONS SECTION Ref

MAHATMA GANDHI INSTITUTE OF TECHNOLOGY ACADEMIC & EXAMINATIONS SECTION Ref. MGIT/AES/2020-21/ B-22/ Date: 21.11.2020 CIRCULAR Sub: IV B.Tech II-SEM – Advanced supplementary examinations during December 2020 – Selection of Exam centre preference nearer to hometown if interested – Reg * * * * * * As per the information received from the Director of Evaluation, JNTUH, Hyderabad, all the students who have applied for IV B.Tech II-semester advanced supplementary examinations (R16, R15, R13 & R09 regulations) are informed to give examination center preferences by 12.00 noon on 22.11.2020, without fail, for writing the above examinations. Key Points to be noted to fill the Google form for submitting the Exam Center Preference for advanced supplementary examinations: 1. It is mandatory for all the B.Tech students registered for IV B.Tech II – SEM advanced supplementary examinations to fill the Google form. 2. The students who wish to write the advanced supplementary examinations in any affiliated college which is nearer to the student's hometown can select any 3 affiliated college names in the order of preference and then submit the Google form. (District wise available list of colleges as exam centers is enclosed to this circular). 3. Giving three preference centers is mandatory for the students who wish to write the advanced supplementary examinations at a college other than the parent college (MGIT). The University shall allocate one of the three centers randomly as Examination center. 4. If accommodation is not available at any of the three preference centers, the student shall be allocated the parent college (by default) as the examination center. -

SANDSTON Sree Ramakrishna Towers and Success Story Starts with SANDSTONE Beside Image Hospital Infra India Pvt

Pocharam County Layout @Rampally to Bhogaram Road. Location map (place labels omitted). Pocharam County @Rampally to Bhogaram Road. A project by SANDSTON infra India Pvt. Ltd. Everyone's Journey starts with the First Step... And Success Story Starts with SANDSTONE. Witness the growth. Office Address: Flat No. 112 & 113, Sree Ramakrishna Towers, Beside Image Hospital, Ameerpet, Hyderabad - 16. Contact For Details: www.sandstoneinfraindia.com Note: This Brochure is only a conceptual presentation of the project and not a legal offering. The promoters reserve the right to make changes in the elevation, plans and specifications as deemed fit. -

Details of Area of Operation of Rehabilitative Homes Under Ujjawala Scheme As on 25.8.2015

DETAILS OF AREA OF OPERATION OF REHABILITATIVE HOMES UNDER UJJAWALA SCHEME AS ON 25.8.2015 S. Name & Address Of The Area Of Location Of P & R Home Date Of No. Organization Operation Commence ment Of The Project Andhra Pradesh 1. People Environmental & Action For Rangareddy Shri P. Venkateswara 01-11-2008 Community Education H.No 2-29, Rao, R/O Chilaka Nagar Kachavani Singaram, Ghatkesar, Uppal Ranga Reddy Rangareddy, Andhra Pradesh District Hyderabad Tel.No.09490422676 E- mail ID. [email protected] Andhra Pradesh 2. Gram Vikas Khammam Shri M. Guravaia, R/O 12-11-2008 H.No.16-31/1 Vidyanagar Road H.No. 7-2-110, Dwarka Sathupalli Khammam District,A.P – Nagar Khammam - 507303 Tel.No.08761-283593 E- mail ID. 507002 Andhra Pradesh [email protected] 3. Hyderabad City Women’s Welfare Hyderabad Shri P.Veeraswamy, R/O 21-11-2008 Councils.S.Nagar, Gahsiguda, Plot No. 07, Hyderabad,A.P. Tel.No.040-27171818 E- mail Chennareddynagar ID. [email protected] Amberpet Hyderabad - 500013 Andhra Pradesh 4. Sthree (Society To Help Rural Anantpur Ms. Hema Bedi R/O 27-11-2008 Empowerment And Education), Yaradodi Village And Yaradodi Village And Post, Kadiri Post, Kadiri Mandal Mandal 515591, Anantpur A.P. 515591, Anantpur Andhra Tel.No.09849866494 Email ID. sthree Pradesh [email protected] 5. Anukurum Women And Child Rangareddy Smt. Anjana Devi, R/O 15-09-2009 Development Society, 12-13-309, St Sankalpam Nilayam 4-98 No.19, Tanaka Secunderabad- Bogaram Dayara Road 500017, Andhra Pradesh E-mail: Keesara Mandal Ranga [email protected] Phone Reddy District Andhra Pradesh 0 No.040-27017446 6 Community Association For Rural Nellore Shri Mr. -

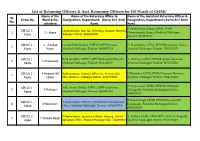

List of Returning Officers & Asst. Returning Officers for 150 Wards Of

List of Returning Officers & Asst. Returning Officers for 150 Wards of GHMC Name of the Name of the Returning Officer & Name of the Assistant Returning Officer & Sl. Circle No. Ward & No. Designation, Department. (Sarva Sri/ Smt/ Designation, Department (Sarva Sri/ Smt/ No. attached Kum) Kum) P.Venkat Ram Reddy, GHM, ZPHS CIRCLE 1 - Chinta Niranjan Rao, AD, CPO Wing, Keesara, Medchal- 1 1 - Kapra Dammaiguda, Kapra, Medchal Malkajgiri Kapra Malkajgiri District. 9866609090 District. 9391010142 CIRCLE 1 - 2 - A.S.Rao Jonnala Padmavathy, MPDO, MPP Keesara, D. Narshimha, GHM, ZPHS Balajinagar, Kapra, 2 Kapra Nagar Medchal-Malkajgiri District. 9849903334 Medchal Malkajgiri District. 7893291679 CIRCLE 1 - Kota Suvidha, MPDO, MPP Muduchinthalapally, A. Srinivas, GHM, ZPHS Bogaram, Keesara, 3 3-Cherlapally Kapra Medchal-Malkajgiri District. 9121245697 Medchal Malkajgiri District. 9177262558 CIRCLE 1 - 4-Meerpet HB Radhapadmaja, Sectorial Officer-III, Keesara, O/o G.Ravinder, GHM, ZPHS Cheeryal, Keesara, 4 Kapra Colony DEO, Medchal – Malkajgiri district. 9247773330 Medchal Malkajgiri District. 9704033864 A.Vidyasagar, GHM, ZPHS Hydernagar, CIRCLE 1 - Yella Aruna Reddy, MPDO, MPP Ghatkesar, 5 5-Mallapur Kukatpally, Medchal Malkajgiri District. Kapra Medchal-Malkajgiri District. 9849903335 9849579162 R.Rajpal Singh, GHM, ZPHS Moosapet (B), CIRCLE 1 - T. Jhansi Rani, DBCDO, District BC Development 6 6-Nacharam Kukatpally, Medchal Malkajgiri District. Kapra Office, Medchal-Malkajgiri District. 8978597373 8919023114 CIRCLE 2 - V.Madhavilatha, Agriculture Officer, Keesara, District V. Kishan, GHM, ZPHS RP Colony, Kukatpally, 7 7-Chiluka Nagar Uppal Agriculture Office, Medchal-Malkajgiri Dist. 7288894641 Medchal Malkajgiri District. 9441118823 Name of the Name of the Returning Officer & Name of the Assistant Returning Officer & Sl. Circle No.