PCIe, NVLink, NV-SLI, NVSwitch and GPUDirect

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


CUDA by Example

CUDA by Example: An Introduction to General-Purpose GPU Programming. Jason Sanders, Edward Kandrot. Upper Saddle River, NJ • Boston • Indianapolis • San Francisco • New York • Toronto • Montreal • London • Munich • Paris • Madrid • Capetown • Sydney • Tokyo • Singapore • Mexico City. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. The authors and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. NVIDIA makes no warranty or representation that the techniques described herein are free from any Intellectual Property claims. The reader assumes all risk of any such claims based on his or her use of these techniques. The publisher offers excellent discounts on this book when ordered in quantity for bulk purchases or special sales, which may include electronic versions and/or custom covers and content particular to your business, training goals, marketing focus, and branding interests. For more information, please contact: U.S. Corporate and Government Sales (800) 382-3419 [email protected] For sales outside the United States, please contact: International Sales [email protected] Visit us on the Web: informit.com/aw Library of Congress Cataloging-in-Publication Data: Sanders, Jason.

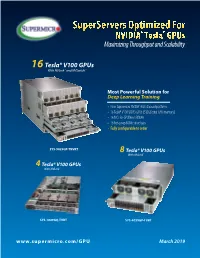

Supermicro GPU Solutions Optimized for NVIDIA NVLink

SuperServers Optimized for NVIDIA® Tesla® GPUs: Maximizing Throughput and Scalability (www.supermicro.com/GPU, March 2019). 16 Tesla® V100 GPUs with NVLink™ and NVSwitch™ (SYS-9029GP-TNVRT), Most Powerful Solution for Deep Learning Training: • New Supermicro NVIDIA® HGX-2 based platform • 16 Tesla® V100 SXM3 GPUs (512GB total GPU memory) • 16 NICs for GPUDirect RDMA • 16 hot-swap NVMe drive bays • Fully configurable to order. 4 Tesla® V100 GPUs with NVLink™ (SYS-1029GQ-TVRT) and 8 Tesla® V100 GPUs with NVLink™ (SYS-4029GP-TVRT), Maximum Acceleration for AI/DL Training Workloads: PERFORMANCE: Highest parallel peak performance with NVIDIA Tesla V100 GPUs. THROUGHPUT: Best-in-class GPU-to-GPU bandwidth with a maximum speed of 300GB/s. SCALABILITY: Designed for direct interconnections between multiple GPU nodes. FLEXIBILITY: PCI-E 3.0 x16 for low-latency I/O expansion capacity & GPU Direct RDMA support. DESIGN: Optimized GPU cooling for highest sustained parallel computing performance. EFFICIENCY: Redundant Titanium Level power supplies & intelligent cooling control. Model comparison (SYS-1029GQ-TVRT / SYS-4029GP-TVRT): CPU Support (both models): • Dual Intel® Xeon® Scalable processors with 3 UPI up to 10.4GT/s • Supports up to 205W TDP CPU. GPU Support: • 4 / 8 NVIDIA® Tesla® V100 GPUs • NVIDIA® NVLink™ GPU Interconnect up to 300GB/s (both models) • Optimized for GPUDirect RDMA (both models) • Independent CPU and GPU thermal zones

20201130 Gcdv V1.0.Pdf

From research to industry. Architecture evolutions for HPC, 30 November 2020, Guillaume Colin de Verdière, Commissariat à l’énergie atomique et aux énergies alternatives (www.cea.fr), EUROfusion. EVOLUTION DRIVER: TECHNOLOGICAL CONSTRAINTS • Power wall • Scaling wall • Memory wall • Towards accelerated architectures • SC20 main points. POWER WALL: $P = cV^{2}F$ (P: power, V: voltage, F: frequency) • Reduce V: reuse of embedded technologies • Limit frequencies • More cores • More compute, less logic: larger SIMD, GPU-like structure. © Karl Rupp. https://software.intel.com/en-us/blogs/2009/08/25/why-p-scales-as-cv2f-is-so-obvious-pt-2-2 SCALING WALL • Moore’s law comes to an end: probable limit around 3 - 5 nm; need to design new structures for transistors (FinFET, carbon nanotube) • Limit of circuit size: yield decreases as surface increases, so chiplets will dominate (© AMD); data movement will be the most expensive operation: 1 DFMA = 20 pJ, SRAM access = 50 pJ, DRAM access = 1 nJ (source NVIDIA); 1 nJ = 1000 pJ, Φ Si = 110 pm. MEMORY WALL • Better bandwidth with HBM: DDR5 @ 5200 MT/s, 8 channels = 0.33 TB/s; HBM2 @ 4 stacks = 1.64 TB/s. [Figure: Skylake SMT2, threads 1 to 4]
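The memory-wall figures quoted in the excerpt above (DDR5 at 5200 MT/s over 8 channels, HBM2 with 4 stacks) can be sanity-checked with a short calculation. This sketch is not taken from the slides: the function names are hypothetical, and the 8-byte (64-bit) channel width, the 1024-bit per-stack bus and the 3.2 Gbit/s per-pin rate are assumptions, chosen because they reproduce the quoted totals.

```python
def ddr_bandwidth_tbs(mega_transfers_per_s, channels, bytes_per_transfer=8):
    """Peak bandwidth in TB/s of a multi-channel DDR interface.

    bytes_per_transfer=8 assumes a 64-bit data path per channel (assumption).
    """
    return mega_transfers_per_s * 1e6 * bytes_per_transfer * channels / 1e12


def hbm_bandwidth_tbs(gbit_per_s_per_pin, stacks, bus_width_bits=1024):
    """Peak bandwidth in TB/s of several HBM stacks.

    bus_width_bits=1024 per stack and the pin rate passed by the caller
    are assumptions, not values stated in the excerpt.
    """
    bytes_per_s_per_stack = gbit_per_s_per_pin * 1e9 * bus_width_bits / 8
    return bytes_per_s_per_stack * stacks / 1e12


if __name__ == "__main__":
    # DDR5 @ 5200 MT/s, 8 channels (figures from the excerpt)
    print(f"DDR5: {ddr_bandwidth_tbs(5200, 8):.2f} TB/s")
    # HBM2, 4 stacks (from the excerpt); 3.2 Gbit/s per pin is assumed
    print(f"HBM2: {hbm_bandwidth_tbs(3.2, 4):.2f} TB/s")
```

Under these assumptions the two results round to 0.33 TB/s and 1.64 TB/s, roughly a factor of five between the two memory technologies.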

The Intro to GPGPU CPU Vs

The Intro to GPGPU. Dr. Chokchai (Box) Leangsuksun, PhD, Louisiana Tech University, Ruston, LA. 12/12/11. CPU vs. GPU • CPU – Fast caches – Branching adaptability – High performance • GPU – Multiple ALUs – Fast onboard memory – High throughput on parallel tasks • Executes program on each fragment/vertex • CPUs are great for task parallelism • GPUs are great for data parallelism (Supercomputing 2008 Education Program). CPU vs. GPU – Hardware • More transistors devoted to data processing (CUDA programming guide 3.1). CPU vs. GPU – Computation Power (CUDA programming guide 3.1). CPU vs. GPU – Memory Bandwidth (CUDA programming guide 3.1). What is GPGPU? • General-purpose computation using a GPU in applications other than 3D graphics – GPU accelerates critical path of application • Data-parallel algorithms leverage GPU attributes – Large data arrays, streaming throughput – Fine-grain SIMD parallelism – Low-latency floating point (FP) computation (© David Kirk/NVIDIA and Wen-mei W. Hwu, 2007, ECE 498AL, University of Illinois, Urbana-Champaign). Why GPGPU? • Large number of cores – 100-1000 cores in a single card • Low cost – less than $100-$1500 • Green computing – Low power consumption – 135 watts/card – 135 W vs 30,000 W (300 watts * 100) • 1 card can perform > 100 desktops – $750 vs $50,000 ($500 * 100). Two major players. Parallel Computing on a GPU • NVIDIA GPU Computing Architecture – Via a HW device interface – In laptops, desktops, workstations, servers • Tesla T10 1070 from 1-4 TFLOPS • AMD/ATI 5970 x2 3200 cores • NVIDIA Tegra is an all-in-one (system-on-a-chip) processor architecture derived from the ARM family • GPU parallelism is better than Moore’s law, more than doubling every year • A GPGPU is a GPU that allows the user to process both graphics and non-graphics applications.

In re Graphics Processing Units Antitrust Litigation

Case M:07-cv-01826-WHA, Document 249, Filed 11/08/2007, Page 1 of 34. BOIES, SCHILLER & FLEXNER LLP: WILLIAM A. ISAACSON (pro hac vice), 5301 Wisconsin Ave. NW, Suite 800, Washington, D.C. 20015; Telephone: (202) 237-2727; Facsimile: (202) 237-6131; Email: [email protected]. BOIES, SCHILLER & FLEXNER LLP: JOHN F. COVE, JR. (CA Bar No. 212213), DAVID W. SHAPIRO (CA Bar No. 219265), KEVIN J. BARRY (CA Bar No. 229748), 1999 Harrison St., Suite 900, Oakland, CA 94612; Telephone: (510) 874-1000; Facsimile: (510) 874-1460; Email: [email protected], [email protected], [email protected]. BOIES, SCHILLER & FLEXNER LLP: PHILIP J. IOVIENO (pro hac vice), ANNE M. NARDACCI (pro hac vice), 10 North Pearl Street, 4th Floor, Albany, NY 12207; Telephone: (518) 434-0600; Facsimile: (518) 434-0665; Email: [email protected], [email protected]. Attorneys for Plaintiff Jordan Walker; Interim Class Counsel for Direct Purchaser Plaintiffs. UNITED STATES DISTRICT COURT, NORTHERN DISTRICT OF CALIFORNIA. IN RE GRAPHICS PROCESSING UNITS ANTITRUST LITIGATION. Case No.: M:07-CV-01826-WHA; MDL No. 1826. This Document Relates to: ALL DIRECT PURCHASER ACTIONS. THIRD CONSOLIDATED AND AMENDED CLASS ACTION COMPLAINT FOR VIOLATION OF SECTION 1 OF THE SHERMAN ACT, 15 U.S.C. § 1. JURY TRIAL DEMANDED. Plaintiffs Jordan Walker, Michael Bensignor, d/b/a Mike’s Computer Services, Fred Williams, and Karol Juskiewicz, on behalf of themselves and all others similarly situated in the United States, bring this action for damages and injunctive relief under the federal antitrust laws against Defendants named herein, demanding trial by jury, and complaining and alleging as follows: NATURE OF THE CASE 1.

An Emerging Architecture in Smart Phones

International Journal of Electronic Engineering and Computer Science, Vol. 3, No. 2, 2018, pp. 29-38, http://www.aiscience.org/journal/ijeecs. ARM Processor Architecture: An Emerging Architecture in Smart Phones. Naseer Ahmad, Muhammad Waqas Boota, Department of Computer Science, Virtual University of Pakistan, Lahore, Pakistan. Abstract: ARM is a 32-bit RISC processor architecture. It is developed and licensed by the British company ARM Holdings. ARM Holdings does not manufacture or sell CPU devices; it only licenses the processor architecture to interested parties. There are two main types of licenses: implementation licenses and architecture licenses. ARM processors have a unique combination of features; for example, the ARM core is very simple compared to general-purpose processors. An ARM chip has several peripheral controllers, a digital signal processor and an ARM core. ARM processors consume less power but provide high performance. Nowadays, the ARM Cortex series is very popular in smartphone devices. We also look at the important characteristics of the Cortex series. We discuss the ARM processor and systems on a chip (SoC), including the Qualcomm Snapdragon, nVidia Tegra, and Apple systems on a chip. In this paper, we discuss the features of the ARM processor and the Intel Atom processor and see which processor is best. Finally, we discuss the future of ARM processors in smartphone devices. Keywords: RISC, ISA, ARM Core, System on a Chip (SoC). Received: May 6, 2018 / Accepted: June 15, 2018 / Published online: July 26, 2018. © 2018 The Authors. Published by American Institute of Science. This Open Access article is under the CC BY license.

It's Meant to Be Played

Issue 10, $3.99 (where sold). THE WAY It’s meant to be played. Ultimate PC Gaming with GeForce: All the best holiday games with the power of NVIDIA. Far Cry’s creators outclass its already jaw-dropping technology. Battlefield 2142 with an epic new sci-fi battle. World of Warcraft: The Burning Crusade. Company of Heroes. Warhammer: Mark of Chaos. Welcome... Welcome to the 10th issue of The Way It’s Meant To Be Played, the magazine dedicated to the very best in PC gaming. In this issue, we showcase 30 games, all participants in NVIDIA’s The Way It’s Meant To Be Played program. In this program, NVIDIA’s developer technology engineers work with development teams to get the very best graphics and effects into their new titles. The games are then rigorously tested by three different labs at NVIDIA for compatibility, stability, and performance to ensure that any game THE NEWS: Notebooks are set to transform PC gaming. The latest must-have gaming system is… a notebook PC. Until recently considered mainly a means for working on the move or for portable presentations, laptops complete with dedicated graphic processing units (GPUs) such as the NVIDIA® GeForce® Go 7 series are making a real impact in the gaming world. The advantages are obvious – gamers need no longer be tied to their desktop set-up. The new NVIDIA® GeForce® Go 7900 notebook GPUs are designed for extreme HD gaming, and gaming hardware specialists such as Alienware and Asus have seen the potential of the portable platform. [Caption: Latest thing: Laptops complete with dedicated graphic processing units are making an impact in the gaming world.]

High Performance Computing and AI Solutions Portfolio

Brochure: High Performance Computing and AI Solutions Portfolio. Technology and expertise to help you accelerate discovery and innovation. Go ahead. Dream big. Discovery and innovation have always started with great minds dreaming big. As artificial intelligence (AI), high performance computing (HPC) and data analytics continue to converge and evolve, they are fueling the next industrial revolution and the next quantum leap in human progress. And with the help of increasingly powerful technology, you can dream even bigger. Dell Technologies will be there every step of the way with the technology you need to power tomorrow’s discoveries and the expertise to bring it all together, today. The convergence of HPC and AI is driven by data. The data‑driven age is dramatically reshaping industries and reinventing the future. As vast amounts of data pour in from increasingly diverse sources, leveraging that data is both critical and transformational. Whether you’re working to save lives, understand the universe, build better machines, neutralize financial risks or anticipate customer sentiment, data informs and drives decisions that impact the success of your organization, and shapes the future of our world. AI, HPC and data analytics are technologies designed to unlock the value of your data. While they have long been treated as separate, the three technologies are converging as it becomes clear that analytics and AI are both big‑data problems that require the power, scalable compute, networking and storage provided by HPC. • 463 exabytes of data will be created each day by 2025¹ • 44X ROI: average return on investment (ROI) for HPC² • 83% of CIOs say they are investing in AI

Data Sheet FUJITSU Tablet STYLISTIC M702

Data Sheet FUJITSU Tablet STYLISTIC M702. Designed for the toughest businesses. The Fujitsu STYLISTIC M702 is the ideal choice if you are looking for a robust yet lightweight high-performance tablet PC to support you in the field and to increase business continuity. Being water- and dustproof, it protects components most frequently damaged, making it perfect for semi-ruggedized applications. The 25.7 cm (10.1-inch) STYLISTIC M702 provides protection against environmental conditions, ensuring uncompromising productivity whether exposed to rain, humidity or dust. Moreover, it can easily be integrated into your company’s Virtual Desktop Infrastructure (VDI), thus ensuring protected anytime access to your company data while its business software lets you securely manage your contacts and emails. Embedded 4G/LTE delivers latest high-speed connectivity for end-to-end performance in the most challenging situations and the Android operating system’s rich multimedia features also make it perfect for private use. Semi-Ruggedized: Ensure absolute business continuity and protect your tablet from environmental conditions. Work from anywhere while exposing the tablet to rain, humidity or dust without causing any component damage. Waterproof (tested for IPX5/7/8 specifications), dustproof (tested for IP5X specification), durable with toughened glass. Business Match: Ultimate productivity from anywhere. Securely access your business applications, data and company intranet with the business software selection and protect

Science-Driven Development of Supercomputer Fugaku

Special Contribution: Science-driven Development of Supercomputer Fugaku. Hirofumi Tomita, Flagship 2020 Project, Team Leader, RIKEN Center for Computational Science. In this article, I would like to take a historical look at activities on the application side during supercomputer Fugaku’s climb to the top of supercomputer rankings as seen from a vantage point in early July 2020. I would like to describe, in particular, the change in mindset that took place on the application side along the path toward Fugaku’s top ranking in four benchmarks in 2020 that all began with the Application Working Group and Computer Architecture/Compiler/System Software Working Group in 2011. Somewhere along this path, the application side joined forces with the architecture/system side based on the keyword “co-design.” During this journey, there were times when our opinions clashed and when efforts to solve problems came to a halt, but there were also times when we took bold steps in a unified manner to surmount difficult obstacles. I will leave the description of specific technical debates to other articles, but here, I would like to describe the flow of Fugaku development from the application side based heavily on a “science-driven” approach along with some of my personal opinions. Actually, we are only halfway along this path. We look forward to the many scientific achievements and solutions to social problems that Fugaku is expected to bring about. 1. Introduction: On June 22, 2020, the supercomputer Fugaku became the first supercomputer in history to simultaneously rank No. … In this article, I would like to take a look back at the flow along the path toward Fugaku’s top ranking as seen from the application side from a vantage point …

BRKIOT-2394.Pdf

Unlocking the Mystery of Machine Learning and Big Data Analytics (BRKIOT-2394). Robert Barton, Distinguished Architect, @MrRobbarto, CCIE #6660, CCDE #2013::6. Jerome Henry, Principal Engineer, Office of the CTAO, @wirelessccie, CCIE #24750, CWNE #45. Cisco Webex Teams: Questions? Use Cisco Webex Teams to chat with the speaker after the session. How: 1. Find this session in the Cisco Events Mobile App. 2. Click “Join the Discussion”. 3. Install Webex Teams or go directly to the team space. 4. Enter messages/questions in the team space. © 2020 Cisco and/or its affiliates. All rights reserved. Cisco Public. [Agenda graphic: Cisco Live IoT track sessions (#CLEMEA), Monday, Jan. 27th through Thursday, including Embedded Cisco services and IoT Technologies sessions; www.ciscolive.com/emea/learn/technology-tracks.html]

NVIDIA Quadro Technical Specifications

NVIDIA Quadro Technical Specifications. The NVIDIA Quadro® family of professional solutions for workstations delivers the fastest application performance and the highest quality graphics. Raw performance and quality are only the beginning. The NVIDIA … In addition to a full line up of 2D and 3D workstation graphics solutions, the NVIDIA Quadro professional products include a set of specialty solutions that have been architected to meet the needs of a wide range of industry professionals. These specialty … NVIDIA Quadro Workstation GPU: • Full 128-bit floating point precision pipeline • 12-bit subpixel precision • Hardware-accelerated antialiased points and lines • Hardware OpenGL overlay planes • Hardware-accelerated two-sided lighting • Hardware-accelerated clipping planes • Third-generation occlusion culling • 16 textures per pixel • OpenGL quad-buffered stereo (3-pin sync connector). High-resolution Antialiasing: • Up to 16x full-scene antialiasing (FSAA), at resolutions up to 1920 x 1200 • 12-bit subpixel sampling precision enhances AA quality • Rotated-grid FSAA significantly increases color accuracy and visual quality for edges, while maintaining performance³. Memory: • High-speed memory (up to 512MB GDDR3) • Advanced lossless compression. Applications: ° Dassault CATIA ° ESRI ArcGIS ° ICEM Surf ° MSC.Nastran, MSC.Patran ° PTC Pro/ENGINEER Wildfire, 3Dpaint, CDRS ° SolidWorks ° UGS NX Series, I-deas, SolidEdge, Unigraphics, SDRC and many more… • Digital Content Creation (DCC): ° Alias Maya, MOTIONBUILDER ° NewTek Lightwave 3D ° Autodesk Media and Entertainment