2020 Infra Surface Weather Observations

Recommended publications

Relative Forecast Impact from Aircraft, Profiler, Rawinsonde, VAD, GPS-PW, METAR and Mesonet Observations for Hourly Assimilation in the RUC

16.2 Relative forecast impact from aircraft, profiler, rawinsonde, VAD, GPS-PW, METAR and mesonet observations for hourly assimilation in the RUC Stan Benjamin, Brian D. Jamison, William R. Moninger, Barry Schwartz, and Thomas W. Schlatter NOAA Earth System Research Laboratory, Boulder, CO 1. Introduction A series of experiments was conducted using the Rapid Update Cycle (RUC) model/assimilation system in which various data sources were denied to assess the relative importance of the different data types for short-range (3h-12h duration) wind, temperature, and relative humidity forecasts at different vertical levels. This assessment of the value of 7 different observation data types (aircraft (AMDAR and TAMDAR), profiler, rawinsonde, VAD (velocity azimuth display) winds, GPS precipitable water, METAR, and mesonet) on short-range numerical forecasts was carried out for a 10-day period from November-December 2006. 2. Background Observation system experiments (OSEs) have been found very useful to determine the impact of particular observation types on operational NWP systems (e.g., Graham et al. 2000, Bouttier 2001, Zapotocny et al. 2002). This new study is unique in considering the effects of most of the currently assimilated high-frequency observing systems in a 1-h assimilation cycle. The previous observation impact experiments reported in Benjamin et al. (2004a) were primarily for wind profiler and only for effects on wind forecasts. This new impact study is much broader than the previous study, now covering more observation types and three forecast fields: wind, temperature, and moisture. Here, a set of observational sensitivity experiments (Table 1) was carried out for a recent winter period using 2007 versions of the Rapid Update Cycle assimilation system and forecast model.

Weatherscope Weatherscope Application Information: Weatherscope Is a Stand-Alone Application That Makes Viewing Weather Data Easier

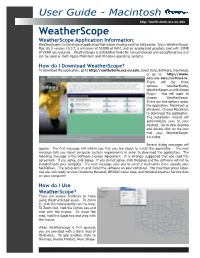

User Guide - Macintosh http://earthstorm.ocs.ou.edu WeatherScope WeatherScope Application Information: WeatherScope is a stand-alone application that makes viewing weather data easier. To run WeatherScope, Mac OS X version 10.3.7, a minimum of 512MB of RAM, and an accelerated graphics card with 32MB of VRAM are required. WeatherScope is distributed freely for noncommercial and educational use and can be used on both Apple Macintosh and Windows operating systems. How do I Download WeatherScope? To download the application, go to http://earthstorm.ocs.ou.edu, select Data, Software, Download, or go to http://www.ocs.ou.edu/software. There will be three options: WeatherBuddy, WeatherScope, and WxScope Plugin. You will want to choose WeatherScope. There are two options under the application: Macintosh or Windows. Choose Macintosh to download the application. The installation wizard will automatically save to your desktop. Go to your desktop and double click on the icon that says WeatherScope-x.x.x.pkg. Several dialog messages will appear. The first message will inform you that you are about to install the application. The next message tells you about computer system requirements in order to download the application. The following message is the Software License Agreement. It is strongly suggested that you read this agreement. If you agree, click Agree. If you do not agree, click Disagree and the software will not be installed onto your computer. The next message asks you to select a destination drive (usually your hard drive). The setup will run and install the software on your computer. You may then press Close.

Reading Mesonet Rain Maps

Oklahoma’s Weather Network Lesson 2 - Rain Maps Reading Mesonet Rain Maps Estimated Lesson Time: 30 minutes Introduction Every morning when you get ready for school, you decide what you are going to wear for the day. Often you might ask your parents what the weather is like or check the weather yourself before getting dressed. Then you can decide if you will wear a t-shirt or sweater, flip-flops or rain boots. The Oklahoma Mesonet, www.mesonet.org, is a weather and climate network covering the state. The Mesonet collects measurements such as air temperature, rainfall, wind speed, and wind direction, every five minutes. These measurements are provided free to the public online. The Mesonet has 120 remote weather stations across the state collecting data. There is at least one in every county which means there is one located near you. Our data is used by people across the state. Farmers use our data to grow their crops, and firefighters use it to help put out a fire. Emergency managers in your town use it to warn you of tornadoes, and sound the town’s sirens. Mesonet rainfall data gives a statewide view, updated every five minutes. When reading the Mesonet rainfall accumulation maps, notice each Mesonet site displays accumulated rainfall. The map also displays the National Weather Service (NWS) River Forecast Center’s rainfall estimates (in color) across Oklahoma based on radar (an instrument that can locate precipitation and its motion). For example, areas in blue have lower rainfall than areas in red or purple.

Alternative Earth Science Datasets for Identifying Patterns and Events

https://ntrs.nasa.gov/search.jsp?R=20190002267 2020-02-17T17:17:45+00:00Z Alternative Earth Science Datasets For Identifying Patterns and Events Kaylin Bugbee1, Robert Griffin1, Brian Freitag1, Jeffrey Miller1, Rahul Ramachandran2, and Jia Zhang3 (1) University of Alabama in Huntsville (2) NASA MSFC (3) Carnegie Mellon University Earth Observation Big Data • Earth observation data volumes are growing exponentially • NOAA collects about 7 terabytes of data per day [1] • Adds to existing 25 PB archive • Upcoming missions will generate another 5 TB per day • NASA’s Earth observation data is expected to grow to 131 TB of data per day by 2022 [2] • NISAR and other large data volume missions [3] • Other agencies like ESA expect data volumes to continue to grow [4] • How do we effectively explore and search through these large amounts of data? [Figure caption: Over the next five years, the daily ingest of data into the EOSDIS archive is expected to grow significantly, to more than 131 terabytes (TB) of forward processing. NASA EOSDIS image.] Alternative Data • Data which are extracted or generated from non-traditional sources • Social media data • Point of sale transactions • Product reviews • Logistics • Idea originates in investment world • Include alternative data sources in investment decision making process • Earth observation data is a growing alternative data source for investing • DMSP and VIIRS nightlight data (Image Credit: NASA) Alternative Data for Earth Science • Are there alternative data sources in the Earth sciences that can be used in a similar manner?

TC Modelling and Data Assimilation

Tropical Cyclone Modeling and Data Assimilation Jason Sippel NOAA AOML/HRD 2021 WMO Workshop at NHC Outline • History of TC forecast improvements in relation to model development • Ongoing developments • Future direction: A new model History: Error trends [Figure: Official TC Track Forecast Errors, 1990-2020] • Hurricane track forecasts have improved markedly • The average Day-3 forecast location error is now about what Day-1 error was in 1990 • These improvements are largely tied to improvements in large-scale forecasts History: Error trends [Figure: Official TC Intensity Forecast Errors, 1990-2020, with the HFIP era marked] • Hurricane intensity forecasts have only recently improved • Improvement in intensity forecast largely corresponds with commencement of Hurricane Forecast Improvement Project History: Error trends [Figure: HWRF Intensity Skill at Day 1, Day 3, and Day 5, with "HWRF better" and "Climo better" axis labels] • Significant focus of HFIP has been the development of the HWRF model • As a result, HWRF intensity has improved significantly over the past decade HWRF skill has improved up to 60%! Michael Talk focus: How better use of data, particularly from recon, has helped improve forecasts History: Using TC Observations

Evaluating NEXRAD Multisensor Precipitation Estimates for Operational Hydrologic Forecasting

JUNE 2000 YOUNG ET AL. 241 Evaluating NEXRAD Multisensor Precipitation Estimates for Operational Hydrologic Forecasting C. BRYAN YOUNG, A. ALLEN BRADLEY, WITOLD F. KRAJEWSKI, AND ANTON KRUGER Iowa Institute of Hydraulic Research and Department of Civil and Environmental Engineering, The University of Iowa, Iowa City, Iowa MARK L. MORRISSEY Environmental Verification and Analysis Center, University of Oklahoma, Norman, Oklahoma (Manuscript received 9 August 1999, in final form 10 January 2000) ABSTRACT Next-Generation Weather Radar (NEXRAD) multisensor precipitation estimates will be used for a host of applications that include operational streamflow forecasting at the National Weather Service River Forecast Centers (RFCs) and nonoperational purposes such as studies of weather, climate, and hydrology. Given these expanding applications, it is important to understand the quality and error characteristics of NEXRAD multisensor products. In this paper, the issues involved in evaluating these products are examined through an assessment of a 5.5-yr record of multisensor estimates from the Arkansas-Red Basin RFC. The objectives were to examine how known radar biases manifest themselves in the multisensor product and to quantify precipitation estimation errors. Analyses included comparisons of multisensor estimates based on different processing algorithms, comparisons with gauge observations from the Oklahoma Mesonet and the Agricultural Research Service Micronet, and the application of a validation framework to quantify error characteristics. This study reveals several complications to such an analysis, including a paucity of independent gauge data. These obstacles are discussed and recommendations are made to help to facilitate routine verification of NEXRAD products. 1. Introduction WSR-88D radars and a network of gauges that report observations to the RFC in near-real time (Fulton et al.

Mesorefmatl.PM6.5 For

What is the Oklahoma Mesonet? Overview The Oklahoma Mesonet is a world-class network of environmental monitoring stations. The network was designed and implemented by scientists at the University of Oklahoma (OU) and at Oklahoma State University (OSU). The Oklahoma Mesonet consists of 114 automated stations covering Oklahoma. There is at least one Mesonet station in each of Oklahoma’s 77 counties. Definition of “Mesonet” “Mesonet” is a combination of the words “mesoscale” and “network.” • In meteorology, “mesoscale” refers to weather events that range in size from a few kilometers to a few hundred kilometers. Mesoscale events last from several minutes to several hours. Thunderstorms and squall lines are two examples of mesoscale events. • A “network” is an interconnected system. Thus, the Oklahoma Mesonet is a system designed to measure the environment at the size and duration of mesoscale weather events. At each site, the environment is measured by a set of instruments located on or near a 10-meter-tall tower. The measurements are packaged into “observations” every 5 minutes, then the observations are transmitted to a central facility every 15 minutes – 24 hours per day year-round. The Oklahoma Climatological Survey (OCS) at OU receives the observations, verifies the quality of the data and provides the data to Mesonet customers. It only takes 10 to 20 minutes from the time the measurements are acquired until they become available to customers, including schools. OCS Weather Series Chapter 1.1, Page 1 Copyright 1997 Oklahoma Climatological Survey. All rights reserved. Fun Fact: As of 1997, no other state or nation is known to have a network that boasts the capabilities of the Oklahoma Mesonet.

Observations of Extreme Variations in Radiosonde Ascent Rates

Observations of Significant Variations in Radiosonde Ascent Rates Above 20 km. A Preliminary Report W.H. Blackmore Upper air Observations Program Field Systems Operations Center NOAA National Weather Service Headquarters Ryan Kardell Weather Forecast Office, Springfield, Missouri NOAA National Weather Service Latest Edition, Rev D, June 14, 2012 1. Introduction: Commonly known measurements obtained from radiosonde observations are pressure, temperature, relative humidity (PTU), dewpoint, heights and winds. Yet, another measurement of significant value, obtained from high resolution data, is the radiosonde ascent rate. As the balloon carrying the radiosonde ascends, its rise rate can vary significantly owing to vertical motions in the atmosphere. Studies on deriving vertical air motions and other information from radiosonde ascent rate data date from the 1950s (Corby, 1957) to more recent work done by Wang, et al. (2009). The causes for the vertical motions are often from atmospheric gravity waves that are induced by such phenomena as deep convection in thunderstorms (Lane, et al. 2003), jet streams, and wind flow over mountain ranges. Since April, 1995, the National Weather Service (NWS) has archived radiosonde data from the MIcroART upper air system at six second intervals for nearly all stations in the NWS 92 station upper air network. With the network deployment of the Radiosonde Replacement System (RRS) beginning in August, 2005, the resolution of the data archived increased to 1 second intervals and also includes the GPS radiosonde height data. From these data, balloon ascent rate can be derived by noting the rate of change in height for a period of time. The purpose of this study is to present observations of significant variations of radiosonde balloon ascent rates above 20 km in the stratosphere taken close to (less than 150 km away) and near the time of severe and non-severe thunderstorms.
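The derivation mentioned in the excerpt above, ascent rate as the rate of change of height over a period of time, can be sketched as a simple finite difference. This is a minimal illustration only, not the report's own processing: the function name `ascent_rates` and the sample heights are hypothetical, and the 1-second spacing merely mirrors the RRS archive interval the excerpt describes.

```python
def ascent_rates(times_s, heights_m):
    """Return the ascent rate (m/s) between each pair of consecutive samples.

    Hypothetical helper: a plain finite difference of height over time.
    """
    if len(times_s) != len(heights_m):
        raise ValueError("times and heights must have the same length")
    rates = []
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        if dt <= 0:
            raise ValueError("times must be strictly increasing")
        rates.append((heights_m[i] - heights_m[i - 1]) / dt)
    return rates


# Made-up samples at 1-second spacing, for illustration only.
times = [0, 1, 2, 3]
heights = [20000.0, 20005.0, 20011.0, 20014.0]
rates = ascent_rates(times, heights)
print(rates)
```

The same function works for 6-second data, since it divides by the actual time step rather than assuming a fixed interval.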

(BOW ECHO and MCV EXPERIMENT) NCAR/ATD -OCTOBER 2002 OFAP MEETING Submitted on 15 June 2002

REQUEST FOR NRL P-3, ELDORA, MGLASS, ISFF, DROPSONDE AND SONDE SUPPORT BAMEX (BOW ECHO AND MCV EXPERIMENT) NCAR/ATD - OCTOBER 2002 OFAP MEETING Submitted on 15 June 2002 PART I: GENERAL INFORMATION Corresponding Principal Investigator Name Chris Davis Institution NCAR/MMM Address P.O. Box 3000, Boulder, Colorado 80307 Phone 303-497-8990 FAX 303-497-8181 Email [email protected] Project Description Project Title Bow Echo and MCV Experiment (BAMEX) Co-Investigator(s) and Affiliation(s) Facility PIs: Michael Biggerstaff, University of Oklahoma Roger Wakimoto, UCLA Christopher Davis, NCAR David Jorgensen, NSSL Kevin Knupp, University of Alabama, Huntsville Morris Weisman (NCAR) David Dowell (NCAR) Other Co-investigators (major contributors to science planning): George Bryan, Penn State University Robert Johns, Storm Prediction Center Brian Klimowski, NWSFO, Rapid City, S.D. Ron Przybylinski, NWSFO, St. Louis, Missouri Gary Schmocker, NWSFO, St. Louis, Missouri Jeffrey Trapp, NSSL Stanley Trier, NCAR Conrad Ziegler, NSSL A complete list of expected participants appears in Appendix A of the Science Overview Document (SOD). Location of Project St. Louis, Missouri Start and End Dates of Project 20 May - 6 July 2003 Version 01-10 Davis et al. – BAMEX – NRL P-3, ELDORA, Dropsondes, MGLASS, ISFF, sondes ABSTRACT OF PROPOSED PROJECT BAMEX is a study using highly mobile platforms to examine the life cycles of mesoscale convective systems. It represents a combination of two related programs to investigate (a) bow echoes, principally those which produce damaging surface winds and last at least 4 hours and (b) larger convective systems which produce long lived mesoscale convective vortices (MCVs).

The Impact of Unique Meteorological Phenomena Detected by the Oklahoma Mesonet and ARS Micronet on Automated Quality Control Christopher A

The Impact of Unique Meteorological Phenomena Detected by the Oklahoma Mesonet and ARS Micronet on Automated Quality Control Christopher A. Fiebrich and Kenneth C. Crawford Oklahoma Climatological Survey, Norman, Oklahoma ABSTRACT To ensure quality data from a meteorological observing network, a well-designed quality control system is vital. Automated quality assurance (QA) software developed by the Oklahoma Mesonetwork (Mesonet) provides an efficient means to sift through over 500 000 observations ingested daily from the Mesonet and from a Micronet sponsored by the Agricultural Research Service of the United States Department of Agriculture (USDA). However, some of nature's most interesting meteorological phenomena produce data that fail many automated QA tests. This means perfectly good observations are flagged as erroneous. Cold air pooling, "inversion poking," mesohighs, mesolows, heat bursts, variations in snowfall and snow cover, and microclimatic effects produced by variations in vegetation are meteorological phenomena that pose a problem for the Mesonet's automated QA tests. Despite the fact that the QA software has been engineered for most observations of real meteorological phenomena to pass the various tests (but is stringent enough to catch malfunctioning sensors), erroneous flags are often placed on data during extreme events. This manuscript describes how the Mesonet's automated QA tests responded to data captured from microscale meteorological events that, in turn, were flagged as erroneous by the tests. The Mesonet's operational plan is to catalog these extreme events in a database so QA flags can be changed manually by expert eyes. 1. Introduction and personnel at the central processing site for the Oklahoma Mesonet in Norman, Oklahoma.

Jp2.5 Madis Support for Urbanet

To be presented at the 14th Symposium on Meteorological Observations and Instrumentation January 14 - 18, 2007, San Antonio, Texas JP2.5 MADIS SUPPORT FOR URBANET Patricia A. Miller*, Michael F. Barth, and Leon A. Benjamin1 NOAA Research – Earth System Research Laboratory (ESRL) Boulder, Colorado Richard S. Artz and William R. Pendergrass NOAA Research – Air Resources Laboratory (ARL) Silver Spring, Maryland 1. INTRODUCTION NOAA’s Earth System Research Laboratory’s Global Systems Division (ESRL/GSD) has established the MADIS (Meteorological Assimilation Data Ingest System) project to make integrated, quality-controlled datasets available to the greater meteorological community. The goals of MADIS are to promote comprehensive data collection and distribution of operational and experimental observation systems, to decrease the cost and time required to access new observing systems, to blend and coordinate other-agency observations with NOAA observations, and to make the integrated observations easily accessible and usable to the greater meteorological community. ... (API) that provides users with easy access to the data and QC information. The API allows each user to specify station and observation types, as well as QC choices, and domain and time boundaries. Many of the implementation details that arise in data ingest programs are automatically performed, greatly simplifying user access to the disparate datasets, and effectively integrating the database by allowing, for example, users to access NOAA surface observations, and non-NOAA surface mesonets through a single interface. MADIS datasets were first made publicly available in July 2001, and have proven to be popular within the meteorological community. GSD now supports hundreds of MADIS users, including NWS forecast offices, the

Creating Weather History

www.mesonet.org Volume 1, Issue 3, Dec 2010/Jan 2011 Connection CREATING WEATHER HISTORY As every minute passes, the Mesonet collects weather observations that are rich in quality and quantity. THE MESONET IS considered the gold standard for statewide weather networks because of the volume and quality of its weather observations. And, with more than 4 billion observations in its archive, all of which have been checked and rechecked for accuracy, it’s easy to see why. “The Mesonet has the best quality assured meteorological data in the world,” said Gary McManus, Associate State Climatologist for Oklahoma. Even though the Mesonet is an automated system, there is a team of people behind the scenes making sure the weather observations are accurate and valid. There is an entire system of checks and balances in place including a calibration lab that checks each sensor, routine maintenance where sensors are periodically cleaned and tested, automated software that checks data in real-time and manual quality control which is done by quality assurance meteorologists, said Chris Fiebrich, Associate Director of the Mesonet. If a problem is found, the data are still saved, but removed from Mesonet websites so users don’t see bad observations. The computer flags data if they fail quality assurance testing, but a meteorologist goes back and inspects the data to determine whether they are OK, said Morgan. Each observation is checked for accuracy and with 575,000 observations taken each day, this is a large task. “Whenever we receive