Timbre Is a Many-Splendored Thing

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Playing (With) Sound of the Animation of Digitized Sounds and Their Reenactment by Playful Scenarios in the Design of Interactive Audio Applications

Playing (with) Sound: Of the Animation of Digitized Sounds and their Reenactment by Playful Scenarios in the Design of Interactive Audio Applications. Dissertation by Norbert Schnell, submitted for the degree of Doktor der Philosophie. Supervised by Prof. Gerhard Eckel and Prof. Rolf Inge Godøy. Institute of Electronic Music and Acoustics, University of Music and Performing Arts Graz, Austria, October 2013.

Abstract: Investigating sound and interaction, this dissertation has its foundations in over a decade of practice in the design of interactive audio applications and the development of software tools supporting this design practice. The concerned applications are sound installations, digital instruments, games, and simulations. However, the principal contribution of this dissertation lies in the conceptualization of fundamental aspects in sound and interaction design with recorded sound and music. The first part of the dissertation introduces two key concepts, animation and reenactment, that inform the design of interactive audio applications. While the concept of animation allows for laying out a comprehensive cultural background that draws on influences from philosophy, science, and technology, reenactment is investigated as a concept in interaction design based on recorded sound materials. Even if rarely applied in design or engineering, or in the creative work with sound, the notion of animation connects sound and interaction design to a larger context of artistic practices, audio and music technologies, engineering, and philosophy. Starting from Aristotle’s idea of the soul, the investigation of animation follows the parallel development of philosophical concepts (i.e. soul, mind, spirit, agency) and technical concepts (i.e. mechanics, automation, cybernetics) over many centuries.

Recommended Solos and Ensembles Tenor Trombone Solos Sång Till

Recommended Solos and Ensembles: Tenor Trombone Solos

Sång till Lotta, Jan Sandström. Edition Tarrodi: Stockholm, Sweden, 1991. Trombone and piano. Requires modest range (F – g flat1), well-developed lyricism, and musicianship. There are two versions of this piece, this and another that is scored a minor third higher. Written dynamics are minimal. Although phrases and slurs are not indicated, it is a SONG… encourage legato tonguing! Stephan Schulz, bass trombonist of the Berlin Philharmonic, gives a great performance of this work on YouTube: http://www.youtube.com/watch?v=Mn8569oTBg8.

A Winter’s Night, Kevin McKee, 2011. Available from the composer, www.kevinmckeemusic.com. Trombone and piano. Explores the relative minor of three keys, easy rhythms, keys, range (A – g1, ossia to b flat1). There is a fine recording of this work on his web site.

Trombone Sonata, Gordon Jacob. Emerson Edition: Yorkshire, England, 1979. Trombone and piano. There are no really difficult rhythms or technical considerations in this work, which lasts about 7 minutes. Tenor clef is used throughout the second movement, and the last movement switches between bass and tenor clef. Range is F – b flat1. Recorded by Dr. Ron Babcock on his CD Trombone Treasures, and available at Hickey’s Music, www.hickeys.com.

Divertimento, Edward Gregson. Chappell Music: London, 1968. Trombone and piano. Three movements, range is modest (G – g#1, ossia a1), bass clef throughout. Some mixed meter. Requires a mute, glissandi, and ad lib. flutter tonguing. Recorded by Brett Baker on his CD The World of Trombone, volume 1, which can be purchased at http://www.brettbaker.co.uk/downloads/product=download-world-of-the-trombone-volume-1-brett-baker.

February 1, 2015, 3 p.m., Salle de concert Bourgie, prixOpus.qc.ca

18th GALA, February 1, 2015, 3 p.m., Salle de concert Bourgie, prixOpus.qc.ca. THE CONSEIL QUÉBÉCOIS DE LA MUSIQUE WELCOMES YOU TO THE EIGHTEENTH EDITION OF THE PRIX OPUS GALA. 2 p.m.: doors open. 3 p.m.: awards presentation. 5:30 p.m.: reception at the Galerie des bronzes, Michal and Renata Hornstein Pavilion of the Montreal Museum of Fine Arts. Listen to the special broadcast devoted to the eighteenth Prix Opus gala, hosted by Katerine Verebely, on Monday, February 2, 2015, at 8 p.m. on ICI Musique (100.7 FM in Montreal) as part of Soirées classiques. Producer: Michèle Patry.

The Fondation Arte Musica congratulates all the finalists and winners of the 18th Prix Opus. The Foundation is proud to contribute to the excellence of Quebec's musical community through the production and presentation of concerts and educational activities, at the crossroads of the arts. On behalf of the entire team of the Montreal Museum of Fine Arts and the Fondation Arte Musica, I wish you an excellent gala! Isolde Lagacé, General and Artistic Director. sallebourgie.ca, 514-285-2000 # 4.

Welcome to this 18th edition of the Prix Opus gala. Your celebration of music. An event that has become a must over the years! The Prix Opus gala is the annual occasion to celebrate the excellence of the work of performers, composers, creators, authors, and presenters. Throughout the 2013-2014 season, these artists put their talent, creativity, and tenacity at the service of concert music.

Performance Commentary

PERFORMANCE COMMENTARY

Notes on the musical text

The variants marked as ossia were given this label by Chopin or were added in his hand to pupils' copies; variants without this designation are the result of discrepancies in the texts of authentic versions or an inability to establish an unambiguous reading of the text. Minor authentic alternatives (single notes, ornaments, slurs, accents, pedal indications, etc.) that can be regarded as variants are enclosed in round brackets ( ), whilst editorial additions are written in square brackets [ ]. Pianists who are not interested in editorial questions, and want to base their performance on a single text, unhampered by variants, are recommended to use the music printed in the principal staves, including all the markings in brackets. Chopin's original fingering is indicated in large bold-type numerals, 1 2 3 4 5, in contrast to the editors' fingering which is written in small italic numerals, 1 2 3 4 5. Wherever authentic fingering is enclosed in parentheses this means that it was not present in the primary sources, but added by Chopin to his pupils' copies.

It seems, however, far more likely that Chopin intended a different grouping for this figure, e.g.: [music example not reproduced]. See the Source Commentary.

Bar 84 A gentle change of pedal is indicated on the final crotchet in order to avoid the clash of g -f.

2a & 2b. Nocturne in E flat major, Op. 9 No. 2 (versions with variants)

The sources indicate that while both performing the Nocturne and working on it with pupils, Chopin was introducing more or

Developing Sound Spatialization Tools for Musical Applications with Emphasis on Sweet Spot and Off-Center Perception

Sweet [re]production: Developing sound spatialization tools for musical applications with emphasis on sweet spot and off-center perception. Nils Peters, Music Technology Area, Department of Music Research, Schulich School of Music, McGill University, Montreal, QC, Canada, October 2010. A thesis submitted to McGill University in partial fulfillment of the requirements for the degree of Doctor of Philosophy. © 2010 Nils Peters.

Abstract: This dissertation investigates spatial sound production and reproduction technology as a mediator between music creator and listener. Listening experiments investigate the perception of spatialized music as a function of the listening position in surround-sound loudspeaker setups. Over the last 50 years, many spatial sound rendering applications have been developed and proposed to artists. Unfortunately, the literature suggests that artists hardly exploit the possibilities offered by novel spatial sound technologies. Another typical drawback of many sound rendering techniques in the context of larger audiences is that most listeners perceive a degraded sound image: spatial sound reproduction is best at a particular listening position, also known as the sweet spot. Structured in three parts, this dissertation systematically investigates both problems with the objective of making spatial audio technology more applicable for artistic purposes and proposing technical solutions for spatial sound reproductions for larger audiences. The first part investigates the relationship between composers and spatial audio technology through a survey on the compositional use of spatialization, seeking to understand how composers use spatialization, what spatial aspects are essential and what functionalities spatial audio systems should strive to include. The second part describes the development process of spatialization tools for musical applications and presents a technical concept.

The Shaping of Time in Kaija Saariaho's Emilie

THE SHAPING OF TIME IN KAIJA SAARIAHO’S ÉMILIE: A PERFORMER’S PERSPECTIVE. Maria Mercedes Diaz Garcia. A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF MUSICAL ARTS, May 2020. Committee: Emily Freeman Brown, Advisor; Brent E. Archer, Graduate Faculty Representative; Elaine J. Colprit; Nora Engebretsen-Broman. © 2020 Maria Mercedes Diaz Garcia. All Rights Reserved.

ABSTRACT. Emily Freeman Brown, Advisor. This document examines the ways in which Kaija Saariaho uses texture and timbre to shape time in her 2008 opera, Émilie. Building on ideas about musical time as described by Jonathan Kramer in his book The Time of Music: New Meanings, New Temporalities, New Listening Strategies (1988), such as moment time, linear time, and multiply-directed time, I identify and explain how Saariaho creates linearity and non-linearity in Émilie and address issues about timbral tension/release that are used both structurally and ornamentally. I present a conceptual framework reflecting on my performance choices that can be applied in a general approach to non-tonal music performance. This paper intends to be an aid for performers, in particular conductors, when approaching contemporary compositions where composers use the polarity between tension and release to create the perception of goal-oriented flow in the music.

To Adeli Sarasola and Denise Zephier, with gratitude.

ACKNOWLEDGMENTS. I would like to thank the many individuals who supported me during my years at BGSU. First, thanks to Dr. Emily Freeman Brown for offering me so many invaluable opportunities to grow musically and for her detailed corrections of this dissertation.

The Perception of Melodic Consonance: an Acoustical And

The perception of melodic consonance: an acoustical and neurophysiological explanation based on the overtone series. Jared E. Anderson, University of Pittsburgh, Department of Mathematics, Pittsburgh, PA, USA. arXiv:q-bio/0403031v1 [q-bio.NC], 22 Mar 2004.

Abstract: The melodic consonance of a sequence of tones is explained using the overtone series: the overtones form “flow lines” that link the tones melodically; the strength of these flow lines determines the melodic consonance. This hypothesis admits of psychoacoustical and neurophysiological interpretations that fit well with the place theory of pitch perception. The hypothesis is used to create a model for how the auditory system judges melodic consonance, which is used to algorithmically construct melodic sequences of tones.

Keywords: auditory cortex, auditory system, algorithmic composition, automated composition, consonance, dissonance, harmonics, Helmholtz, melodic consonance, melody, musical acoustics, neuroacoustics, neurophysiology, overtones, pitch perception, psychoacoustics, tonotopy.

1. Introduction

Consonance and dissonance are a basic aspect of the perception of tones, commonly described by words such as ‘pleasant/unpleasant’, ‘smooth/rough’, ‘euphonious/cacophonous’, or ‘stable/unstable’. This is just as for other aspects of the perception of tones: pitch is described by ‘high/low’; timbre by ‘brassy/reedy/percussive/etc.’; loudness by ‘loud/soft’. But consonance is a trickier concept than pitch, timbre, or loudness for three reasons: First, the single term consonance has been used to refer to different perceptions. The usual convention for distinguishing between these is to add an adjective specifying what sort is being discussed. But there is not widespread agreement as to which adjectives should be used or exactly which perceptions they are supposed to refer to, because it is difficult to put complex perceptions into unambiguous language.

Girl Power: Feminine Motifs in Japanese Popular Culture David Endresak [email protected]

Eastern Michigan University, DigitalCommons@EMU, Senior Honors Theses, Honors College, 2006. Girl Power: Feminine Motifs in Japanese Popular Culture. David Endresak, [email protected]. Follow this and additional works at: http://commons.emich.edu/honors

Recommended Citation: Endresak, David, "Girl Power: Feminine Motifs in Japanese Popular Culture" (2006). Senior Honors Theses. 322. http://commons.emich.edu/honors/322

This Open Access Senior Honors Thesis is brought to you for free and open access by the Honors College at DigitalCommons@EMU. It has been accepted for inclusion in Senior Honors Theses by an authorized administrator of DigitalCommons@EMU. For more information, please contact lib- [email protected].

Degree Type: Open Access Senior Honors Thesis. Department: Women's and Gender Studies. First Advisor: Dr. Gary Evans. Second Advisor: Dr. Kate Mehuron. Third Advisor: Dr. Linda Schott.

GIRL POWER: FEMININE MOTIFS IN JAPANESE POPULAR CULTURE. By David Endresak. A Senior Thesis Submitted to the Eastern Michigan University Honors Program in Partial Fulfillment of the Requirements for Graduation with Honors in Women's and Gender Studies. Approved at Ypsilanti, Michigan, on this date ______. Dr. Gary Evans, Supervising Instructor (Print Name and have signed). Dr. Kate Mehuron, Honors Advisor (Print Name and have signed). Dr. Linda Schott, Department Head (Print Name and have signed). Dennis Beagan, Department Head (Print Name and have signed). Dr. Heather L. S. Holmes, Honors Director (Print Name and have signed).

Table of Contents. Chapter 1: Printed Media

1907 Virginian State Female Normal School

Longwood University, Digital Commons @ Longwood University, Yearbooks, Library, Special Collections, and Archives, 1-1-1907. 1907 Virginian. State Female Normal School. Follow this and additional works at: http://digitalcommons.longwood.edu/yearbooks

Recommended Citation: State Female Normal School, "1907 Virginian" (1907). Yearbooks. 53. http://digitalcommons.longwood.edu/yearbooks/53

This Article is brought to you for free and open access by the Library, Special Collections, and Archives at Digital Commons @ Longwood University. It has been accepted for inclusion in Yearbooks by an authorized administrator of Digital Commons @ Longwood University. For more information, please contact [email protected].

Digitized by the Internet Archive in 2010 with funding from Lyrasis Members and Sloan Foundation. http://www.archive.org/details/virginian1907stat

THE VIRGINIAN. STATE FEMALE NORMAL SCHOOL, 1907. Farmville, Virginia.

Greetings

If this, the sixth volume of The Virginian, succeeds in after life in bringing to the minds of its readers happy recollections of student life at the State Normal School, it has fully accomplished its purpose. We take this opportunity of thanking those who have so kindly assisted us in making this Annual a success. To Dr. Messenger, who has given us valuable assistance in the literary department, we extend our hearty thanks. We wish to acknowledge gratefully our appreciation of the contributions to the art department, being especially grateful to Mr. Thomas Mitchell Pierce, who presented us with our frontispiece, and to Mr. Mattoon, Mrs. J. L. Bugg, and Miss Coulling. We realize the fact that no one could have given more time and thought towards making this volume a success than Miss Lula O.

June 2020 Volume 87 / Number 6

JUNE 2020, VOLUME 87 / NUMBER 6

President: Kevin Maher. Publisher: Frank Alkyer. Editor: Bobby Reed. Reviews Editor: Dave Cantor. Contributing Editor: Ed Enright. Creative Director: Žaneta Čuntová. Design Assistant: Will Dutton. Assistant to the Publisher: Sue Mahal. Bookkeeper: Evelyn Oakes.

ADVERTISING SALES. Record Companies & Schools: Jennifer Ruban-Gentile, Vice President of Sales, 630-359-9345, [email protected]. Musical Instruments & East Coast Schools: Ritche Deraney, Vice President of Sales, 201-445-6260, [email protected]. Advertising Sales Associate: Grace Blackford, 630-359-9358, [email protected].

OFFICES. 102 N. Haven Road, Elmhurst, IL 60126–2970. 630-941-2030 / Fax: 630-941-3210. http://downbeat.com, [email protected].

CUSTOMER SERVICE. 877-904-5299 / [email protected].

CONTRIBUTORS. Senior Contributors: Michael Bourne, Aaron Cohen, Howard Mandel, John McDonough. Atlanta: Jon Ross; Boston: Fred Bouchard, Frank-John Hadley; Chicago: Alain Drouot, Michael Jackson, Jeff Johnson, Peter Margasak, Bill Meyer, Paul Natkin, Howard Reich; Indiana: Mark Sheldon; Los Angeles: Earl Gibson, Andy Hermann, Sean J. O’Connell, Chris Walker, Josef Woodard, Scott Yanow; Michigan: John Ephland; Minneapolis: Andrea Canter; Nashville: Bob Doerschuk; New Orleans: Erika Goldring, Jennifer Odell; New York: Herb Boyd, Bill Douthart, Philip Freeman, Stephanie Jones, Matthew Kassel, Jimmy Katz, Suzanne Lorge, Phillip Lutz, Jim Macnie, Ken Micallef, Bill Milkowski, Allen Morrison, Dan Ouellette, Ted Panken, Tom Staudter, Jack Vartoogian; Philadelphia: Shaun Brady; Portland: Robert Ham; San Francisco: Yoshi Kato, Denise Sullivan; Seattle: Paul de Barros; Washington, D.C.: Willard Jenkins, John Murph, Michael Wilderman; Canada: J.D. Considine, James Hale; France: Jean Szlamowicz; Germany: Hyou Vielz; Great Britain: Andrew Jones; Portugal: José Duarte; Romania: Virgil Mihaiu; Russia: Cyril Moshkow.

No., Title, Name, Index, Composer, Arranger, Lyricist

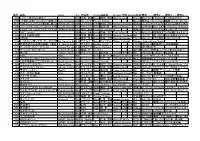

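Columns: No.; Title; Name; Index; Composer; Arranger; Lyricist (Words); Publisher; Notes; Notes 2; Notes 3; Notes 4

595; 1 2 3 ~恋がはじまる~ (1 2 3 ~Koi ga Hajimaru~); Index: 123Koigahajimaru; Composer: 水野 良樹 (Yoshiki Mizuno); Arranger: 鄕間 幹男 (Mikio Gouma); Publisher: Winds Score, WSJ-13-020; Notes: "Calpis Water" commercial song; Ikimonogakari.

1030; 17世紀の古いハンガリー舞曲 (clarinet quartet); Name: Early Hungarian Dances from the 17th century; Composer: Ferenc Farkas; Publisher: Musica Budapest; Distributor: Hal Leonard; Instrumentation: E♭Cl./B♭Cl.×2/B.Cl; HL50510565.

1181; 24のプレリュードより第4番、第17番; Name: 24 Preludes Op.28-4,17; Composer: Frédéric François Chopin; Arranger: 福田 洋介 (Yousuke Fukuda); Publisher: Ongaku no Tomo Sha; Notes: Band Journal, February 2019; the scores for No. 4 and No. 17 are separate.

840; スリー・ラテン・ダンス (saxophone quartet); Name: 3 Latin Dances; Composer: Patric Hiketick; Arranger: 尾形 誠 (Makoto Ogata); Publisher: Brain, ECW-0010; Notes: Sax SATB; 1.Charanga di Xiomara Reyes 2.Merengue Sempre di Aychem sunal 3.Dansa Lationo di Maria del Real.

997; 3☆3ダンス ソロトライアングルと吹奏楽のための (3☆3 Dance, for solo triangle and wind band); Index: 3☆3Dance; Composer: 福島 弘和 (Hirokazu Fukushima); Publisher: Ongaku no Tomo Sha; Notes: Band Journal, June 2017 issue; San-San Dance (Japanese), Three-Three Dance (English), Tre-Tre Dance (Italian), Trois-Trois Dance (French).

973; 360° (miwa); Index: 360do; Composer: miwa, NAOKI-T; Arranger: 西條 太貴 (Taiki Saijyo); Publisher: Winds Score, WSJ-15-012; Notes: theme song of the animated feature film "Doraemon: Nobita's Space Heroes".

856; 365日の紙飛行機; Index: 365NichinoKamihikouki; Composer: 角野 寿和 / 青葉 紘季 (Toshikazu Kadono / Hiroki Aoba); Arranger: 本澤なおゆき (Naoyuki Honzawa); Publisher: M8, QH1557; Notes: sung by AKB48; theme song of the NHK serial TV drama "Asa ga Kita".

685; 3月9日; Index: 3Gatu9ka; Composer: 藤巻 亮太 (Ryouta Fujimaki); Arranger: 原田 大雪 (Hiroyuki Harada); Publisher: Winds Score, WSL-07-005; Notes: insert song of the Fuji TV drama "1 Litre of Tears", broadcast in autumn 2005; performed by Remioromen.

1164; 6つのカノン風ソナタ (oboe duet); Name: Six Canonic Sonatas; Index: 6 Canonic Sonatas; Composer: Georg Philipp TELEMANN; Arranger: William Schmidt; Publisher: Western International Music.

470; 吹奏楽のための第2組曲 1楽章 行進曲; Name: Ⅰ.March from 2nd Suite in F for Military; Index: Ⅰ.March from 2nd Suite; Composer: Gustav Holst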

Music: Broken Symmetry, Geometry, and Complexity Gary W

Music: Broken Symmetry, Geometry, and Complexity. Gary W. Don, Karyn K. Muir, Gordon B. Volk, James S. Walker.

The relation between mathematics and music has a long and rich history, including: Pythagorean harmonic theory, fundamentals and overtones, frequency and pitch, and mathematical group theory in musical scores [7, 47, 56, 15]. This article is part of a special issue on the theme of mathematics, creativity, and the arts. We shall explore some of the ways that mathematics can aid in creativity and understanding artistic expression in the realm of the musical arts. In particular, we hope to provide some intriguing new insights on such questions as:

• Does Louis Armstrong’s voice sound like his trumpet?
• What do Ludwig van Beethoven, Benny Goodman, and Jimi Hendrix have in common?
• How does the brain fool us sometimes when listening to music? And how have composers used such illusions?
• How can mathematics help us create new music?
• Melody contains both pitch and rhythm. Is it possible to objectively describe their connection?
• Is it possible to objectively describe the complexity of musical rhythm?

In discussing these and other questions, we shall outline the mathematical methods we use and provide some illustrative examples from a wide variety of music.

The paper is organized as follows. We first summarize the mathematical method of Gabor transforms (also known as short-time Fourier transforms, or spectrograms). This summary emphasizes the use of a discrete Gabor frame to perform the analysis. The section that follows illustrates the value of spectrograms in providing objective descriptions of musical performance and the geometric time-frequency structure of recorded musical sound. Our examples cover a wide range of musical genres and interpretation styles, including: Pavarotti singing an aria by Puccini [17], the 1982 Atlanta Symphony Orchestra recording of Copland’s Appalachian Spring symphony [5], the 1950 Louis Armstrong recording of “La Vie en Rose” [64], the 1970 rock music introduction to